How do you even pronounce Kubernetes?

Hint: coo-ber-net-ees

In this post I'll be recounting some of the invaluable lessons I've learned over the past few months. At the start of the final semester of my undergrad, I enrolled in an introductory course on DevOps. I'd heard the term here and there, and I also had some idea about just how popular the role of a DevOps engineer had become in recent years. But other than that, I really had no clue about what the role entailed.

Fast forward 4 months to today and I can proudly say that I'm far less clueless than when I started out. Heck, I even managed to deploy my own Kubernetes cluster in a working project.

In a nutshell, DevOps is all about streamlining the processes involved in software development & deployment to make life easier for everyone (the developer, the software users, and the maintainers).

Here's some of the stuff I learned about (and will be discussing in this post as well):

- Virtualization

- Containers

- Pods

- Dockerfiles

- Building container images from Dockerfiles

- Container Clusters

- Achieving High-Availability

- Micro-Services

- Service meshes

- CI/CD

- GitOps

Not to mention a whole plethora of tools. Really, there are so many great DevOps tools out there that trying to learn about them all is equally rewarding and daunting. I mean, just take a look at this periodic table of DevOps tools.

But more important than the tools is understanding the need for DevOps in the first place.

What's a container, and why does my cluster have so many of them?

Before we answer that question, we need to know a bit about virtualization. Traditionally, virtual machines (VMs) were used to run several distinct applications on a single piece of hardware. In most cases, this involved installing and configuring the VM software, installing a guest OS inside each VM, and then finally deploying the application. As you can see, there are many layers to this process. What if all I wanted to do was deploy a small service that only provides some kind of button-highlighting functionality in a web app? Do I really need to spin up an entire VM? That doesn't sound too efficient.

This is where containers come in. Compared to regular VMs, containers use a much lighter form of virtualization: they share the host's OS kernel instead of booting a full guest OS. They can be thought of as logically isolated processes that contain everything needed to run a particular piece of software. All the dependencies, source files and environment configurations are bundled into a neat little container. Moreover, containers are highly portable and behave the same on any machine with a compatible container runtime. There's no need to configure the environment on each machine you choose to deploy a container on. Just get the container up and running and you'll execute the exact same code everywhere.

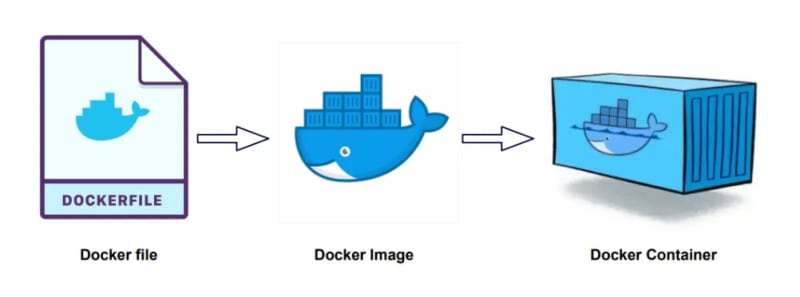

In order to run a container, there's a three-step process involved. You first write something called a Dockerfile, which is basically a recipe defining what goes into your container. Then you build a container image from this recipe with the help of an image builder. Finally, you run the image as a container in a container runtime.
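To make the three steps concrete, here's a minimal Python sketch. It assumes Docker is both the image builder and the runtime, and the image name `hello-devops` and the file `app.py` are hypothetical, made up just for this example. The script only assembles the Dockerfile text and the two commands; it doesn't actually execute anything.

```python
# Step 1: the Dockerfile, a recipe for the image.
# "app.py" is a hypothetical application file used only for illustration.
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY app.py .
CMD ["python", "app.py"]
"""

IMAGE_NAME = "hello-devops"  # hypothetical image name


def build_command(image: str, context_dir: str = ".") -> list[str]:
    """Step 2: build an image from the Dockerfile found in context_dir."""
    return ["docker", "build", "-t", image, context_dir]


def run_command(image: str) -> list[str]:
    """Step 3: start a container from the image and remove it on exit."""
    return ["docker", "run", "--rm", image]


if __name__ == "__main__":
    print(DOCKERFILE)
    print(" ".join(build_command(IMAGE_NAME)))
    print(" ".join(run_command(IMAGE_NAME)))
```

If you save the Dockerfile text next to a real `app.py`, the two printed commands are what you'd type in a terminal to build and run it.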

If you take one or more containers and bundle them into a wrapper, you get something called a pod. Each pod exists to serve a particular function, something that you can call a service. A machine that runs pods is called a node, and a group of nodes managed by container orchestration software like Kubernetes is called a cluster. Kubernetes handles high availability and resilience, auto-scales up or down based on demand, provides authorization, and does many, many more things.
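The container, pod, node and cluster hierarchy can be sketched as a few nested Python classes. This is only a toy model to show how the pieces nest: the class names and the "fewest pods wins" placement rule are my own simplifications, and the real Kubernetes scheduler weighs far more than a pod count.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    image: str


@dataclass
class Pod:
    name: str
    containers: list[Container] = field(default_factory=list)


@dataclass
class Node:
    name: str
    pods: list[Pod] = field(default_factory=list)


@dataclass
class Cluster:
    nodes: list[Node] = field(default_factory=list)

    def schedule(self, pod: Pod) -> Node:
        """Toy rule: place the pod on the node running the fewest pods."""
        if not self.nodes:
            raise ValueError("cluster has no nodes")
        target = min(self.nodes, key=lambda node: len(node.pods))
        target.pods.append(pod)
        return target


if __name__ == "__main__":
    cluster = Cluster(nodes=[Node("node-a"), Node("node-b")])
    web = Pod("web", [Container("nginx"), Container("log-shipper")])
    api = Pod("api", [Container("my-api")])
    for pod in (web, api):
        print(pod.name, "->", cluster.schedule(pod).name)
```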

That's pretty cool! But there's one glaring problem to address. How do we make each of these little services running in their own pods (micro-services basically) communicate with each other in a safe and efficient manner? For that we use something like Istio. It's a piece of software that injects a separate container called a sidecar proxy inside a pod (pods are wrappers for multiple containers, remember?), and all communication going to and from the pod passes through the sidecar proxy first. This sidecar provides features such as traffic routing, retries, encryption and metrics, which vastly improve and standardize the communication between services. A whole network of these proxies is called a service mesh.
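Here's a rough way to picture what "passes through the sidecar proxy first" means, written as a small Python wrapper. Treat it purely as an analogy: a real sidecar is a separate container that intercepts network traffic, not a function in your program, and `with_sidecar` is a name I made up. The only feature sketched here is automatic retries.

```python
def with_sidecar(service, retries=2):
    """Wrap a service so every call goes through proxy logic first."""

    def proxy(request):
        # Try the call up to retries + 1 times before giving up.
        for attempt in range(retries + 1):
            try:
                return service(request)
            except ConnectionError:
                if attempt == retries:
                    raise  # out of retries, surface the error to the caller

    return proxy


if __name__ == "__main__":
    failures_left = [2]  # the fake service fails twice, then recovers

    def flaky_service(request):
        if failures_left[0] > 0:
            failures_left[0] -= 1
            raise ConnectionError("service unavailable")
        return f"handled {request}"

    print(with_sidecar(flaky_service)("GET /cart"))
```

The nice part is that the service itself knows nothing about retries. The same idea is why a mesh can add behavior to every service without touching their code.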

There's a whole lot more to the world of containers and container orchestration, but this is pretty much the gist of it.

CI/CD, Version Control, Source Control, Single Source of Truth...

A big part of DevOps is creating CI/CD pipelines that make the process of software development efficient and reliable. CI/CD is short for Continuous Integration/Continuous Delivery (the CD is sometimes expanded as Continuous Deployment), and it basically means creating a set of automated steps that quickly turn a developer's code changes into a running deployment.

Here's what a basic CI/CD workflow would look like:

- A developer writes some code and makes a commit to their code repository.

- A tool like GitHub Actions or Jenkins builds a container image from the code and runs some user-defined tests against it.

- If the tests clear, the image is pushed to a Container Registry (think of it like GitHub but for containers).

- The image is pulled from the registry and deployed inside a Kubernetes cluster.
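The key idea in the workflow above is that each stage only runs if the previous one succeeded. A minimal Python sketch of that gating logic might look like this. The stage functions are stand-ins that just return `True` or `False`; in a real pipeline a tool like GitHub Actions or Jenkins would run actual build, test, push and deploy commands.

```python
from typing import Callable

# A stage is a name plus a function that returns True on success.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order and stop at the first one that fails.

    Returns the names of the stages that completed successfully.
    """
    completed = []
    for name, step in stages:
        if not step():
            break
        completed.append(name)
    return completed


if __name__ == "__main__":
    stages = [
        ("build image", lambda: True),
        ("run tests", lambda: False),  # pretend a test failed
        ("push to registry", lambda: True),
        ("deploy to cluster", lambda: True),
    ]
    print(run_pipeline(stages))
```

Because the tests fail in this example, nothing is pushed or deployed, which is exactly the safety net a pipeline is supposed to give you.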

If you use a tool like Flux or Argo CD, you can add one more dimension to this whole process by introducing GitOps to your workflow. Flux, for example, will constantly monitor the current state of your cluster and compare it against a configuration repo defined by the developers (this is usually just a git repo too). If it finds any discrepancy between the desired state and the current state, Flux will bring the cluster state in line with the configuration defined in the git repo. This is why it's called GitOps: Git acts as the Single Source of Truth.
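The compare-and-correct loop at the heart of GitOps can be sketched in a few lines of Python. This is a simplified illustration and not how Flux is actually implemented: here the "state" is just a dictionary mapping a hypothetical service name to an image tag, and this toy version also deletes anything that isn't in the repo, which real tools typically make configurable.

```python
def reconcile(desired: dict[str, str], actual: dict[str, str]) -> list[str]:
    """Change `actual` in place to match `desired`; return the actions taken."""
    actions = []
    # Create or update everything the repo says should exist.
    for name, image in desired.items():
        if name not in actual:
            actions.append(f"create {name} ({image})")
        elif actual[name] != image:
            actions.append(f"update {name}: {actual[name]} -> {image}")
        actual[name] = image
    # Remove anything running in the cluster that the repo doesn't mention.
    for name in list(actual):
        if name not in desired:
            actions.append(f"delete {name}")
            del actual[name]
    return actions


if __name__ == "__main__":
    git_repo = {"web": "web:2", "api": "api:1"}   # desired state
    cluster = {"web": "web:1", "old-job": "job:1"}  # current state
    for action in reconcile(git_repo, cluster):
        print(action)
    print(reconcile(git_repo, cluster))  # a second pass finds nothing to do
```

Running the loop again right away produces no actions, which is the property you want: once the cluster matches Git, the tool leaves it alone.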

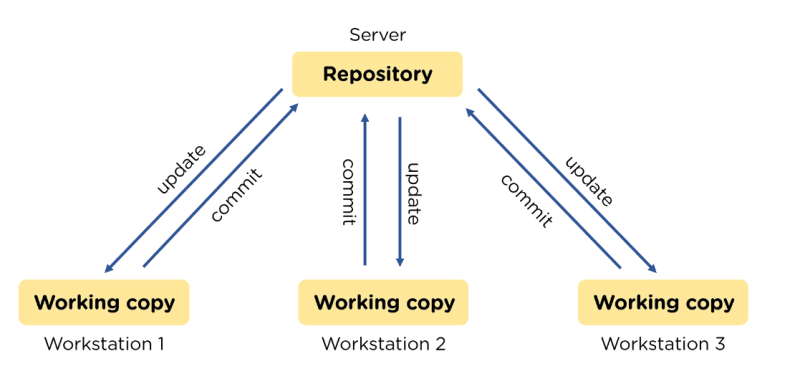

Another crucial concept is Version/Source control. This basically refers to keeping track of changes made to code. Most of us are familiar with version control in one way or the other (such as git), but there's a different dimension to it that I discovered recently. Rolling out new updates to an already running software platform can be tricky. But tools like Kubernetes provide features like Deployments, which roll out a new version gradually by replacing old pods with new ones and can roll back if something goes wrong. Istio goes a step further by splitting traffic between versions of a service in the service mesh, so a canary build can receive a small share of requests before everyone is moved over.
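Canary routing boils down to weighted random choice: most requests go to the stable version and a small share goes to the new one. Here's a minimal Python sketch of that idea. The version names and the 90/10 split are assumptions for the example, and a real mesh does this in the proxy layer based on routing rules you configure, not in application code.

```python
import random


def pick_version(weights: dict[str, int], rng: random.Random) -> str:
    """Pick a version at random, in proportion to its weight."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so the run is repeatable
    split = {"v1-stable": 90, "v2-canary": 10}
    total = 10_000
    hits = sum(pick_version(split, rng) == "v2-canary" for _ in range(total))
    print(f"canary share: {hits / total:.1%}")
```

If the canary behaves well, you raise its weight step by step until it takes all the traffic. If it doesn't, you set its weight back to zero.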

The Open Source Community

An amazing thing about the world of DevOps is that so much of the magic that makes it possible is open source. The communities behind each tool are constantly pushing out new updates and improving features by the day.

During my course, this inspired me to take a deeper look at some of these tools in the hopes of contributing to them. A crucial thing to remember is that you don't have to start out big. In fact, that's nearly impossible. Start small, but start early. Remember, the quicker you fail at something, the quicker you get to learn and improve.

In order to get started with open-source contributions, you can begin by scoping out the GitHub repo for the project you'd like to work on. Take a look at the issues list posted under each repo, and see if you can find something that makes sense to you. Usually, you'll find issues labeled with tags such as 'bug' or 'good first issue', and this will help you identify the nature of the problem you want to solve.

Do be mindful of the guidelines that each project follows. If you can't find them in the documentation or on the project website, you can look through previously merged pull requests and mimic their code structure.

It can take a while to get a grasp of everything and to know where and how to start, but don't give up. Just keep at it and soon enough you'll find yourself making just a tiny bit of sense of things. After that, write up your solution, open a pull request, and then wait for the code to be reviewed. Don't lose heart if it doesn't get merged. Use any feedback you get to improve your next contribution. Even something as simple as updating the documentation can be really useful to the wider community, so don't shy away from the vast world of open source!

A wonderful organization that incubates many amazing tools is the Cloud Native Computing Foundation (CNCF). It brings together a great many useful projects under one hub, promotes their development, and is a rich source of information for anyone interested in following open-source development. I highly recommend that you check it out.

Conclusion

These past few months have been a highly educational experience. Even though I have only scratched the surface of the vast world of DevOps, I have already begun to appreciate the complexity of all the processes involved in giving life to so much of modern software. Development isn't just about coding. It's also about getting that code to run everywhere, all the time, for everyone. My course has come to an end, but my journey with DevOps has just begun.
