If you’ve ever run virtual guests on platforms like KVM, Xen, Hyper-V, VMware, or VirtualBox, you probably think of a disk attached to the guest as an image file in a special format (qcow2, VHDX, VMDK, VDI) that lies somewhere on the host's file system. Less well known is that most of these platforms support other storage options, namely network file systems (NFS, SMB), logical volumes (partitions), whole disks, and, most notably here, ZFS volumes.

A file-based arrangement certainly has value for some users. In my experience it is the most convenient arrangement for guests you want to create and destroy rapidly, or when you don’t care about anything other than plain storage.

But it has drawbacks too. Here is one of them: you rely on virtualization-specific designs that do not translate well from one platform to another. As someone who has tried to convert an image from qcow2 to VMDK or VHDX, and to create an instant JeOS-like VM image for VMware with open source tools, I have seen that in the former case one may end up with a fixed-size image and, in the latter case, with an image that boots only from the slow virtual IDE controller. Some of those designs may have fatal flaws.

Even if you think you will stick to one platform and you are not interested in platform interoperability, you may want to use your operating system-native tools with your guests. In OpenIndiana, an illumos distribution, our system components like IPS (package manager), Zones (OS-level virtualization/"container"), and KVM (HW virtualization) are well integrated with ZFS, the advanced file system and volume manager, and can leverage its features like snapshots and encryption.

Thanks to this level of integration on OpenIndiana we can run KVM guests in a Zone and thus make it well isolated from other tenants on the host, control its resources, and more.

Interestingly, VirtualBox can use a raw device as storage for a guest. For our purposes a ZFS volume is an ideal device. First, I will show you how to create a VirtualBox guest running off a ZFS volume; then we will use ZFS snapshots to save the state of the guest; later we will send the guest to another ZFS pool; and finally we will run the guest from an encrypted ZFS volume.

If you want to try this on your systems, here are the prerequisites:

- A Host with the ZFS file system. I use OpenIndiana 2019.04 updated to the latest packages to get ZFS encryption; other Unices may work as well, but I haven't tried them. I have two ZFS pools present: rpool is the default one, and tank is one I use for some of the examples.

- VirtualBox with the Oracle VM VirtualBox Extension Pack installed and working (I used version 6.0.8).

- Another system with a graphical interface where you can display the guest's graphical console, unless you display it on your Host.

Legend: $ introduces a user command, # introduces a root command. Guest/Host/Workstation denotes the environment where the command is executed.

I advise caution: VirtualBox guests write to raw devices, and using the wrong volume may result in data loss.

Create guest with ZFS volume

First, as root, create the 10 GB volume rpool/vboxzones/myvbox:

Host # zfs create rpool/vboxzones

Host # zfs create -V 10G rpool/vboxzones/myvbox

Then, again as root, create a VirtualBox guest with a SATA controller and reserve port 1 for the disk:

Host # VBoxManage createvm --name myvbox --register

Host # VBoxManage modifyvm myvbox --boot1 disk --boot2 dvd --cpus 1 \

--memory 2048 --vrde on --vram 4 --usb on --nic1 nat --nictype1 82540EM \

--cableconnected1 on --ioapic on --apic on --x2apic off --acpi on \

--biosapic=apic

Host # VBoxManage storagectl myvbox --name SATA --add sata \

--controller intelahci --bootable on

Host # VBoxManage storageattach myvbox --storagectl SATA --port 0 \

--device 0 --type dvddrive --medium OI-hipster-text-20190511.iso

Host # VBoxManage internalcommands createrawvmdk \

-filename /rpool/vboxzones/myvbox/myvbox.vmdk \

-rawdisk /dev/zvol/rdsk/rpool/vboxzones/myvbox

The last command creates a pass-through VMDK image that we attach to the guest. In reality, nothing gets written to this file; the /dev/zvol/rdsk/rpool/vboxzones/myvbox volume is used directly instead. Attach the image to the guest:

Host # VBoxManage storageattach myvbox --storagectl SATA --port 1 \

--device 0 --type hdd --medium /rpool/vboxzones/myvbox/myvbox.vmdk \

--mtype writethrough
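
Because a mistyped -rawdisk argument can point VirtualBox at the wrong device, it may help to derive the raw device path from the volume name instead of typing it by hand. The following is only a minimal sketch and not part of the original setup: zvol_rdsk_path is a hypothetical helper name, and it assumes the illumos /dev/zvol/rdsk/ layout used in the commands above.

```shell
#!/bin/sh
# Hypothetical helper: map a ZFS volume name to its raw device path.
# Assumes the illumos layout /dev/zvol/rdsk/<pool>/<volume> used in this article.
zvol_rdsk_path() {
    case "$1" in
        ''|/*|*@*|*' '*)
            # Reject empty names, absolute paths, snapshot names and names with spaces.
            echo "not a plain volume name: '$1'" >&2
            return 1
            ;;
    esac
    printf '/dev/zvol/rdsk/%s\n' "$1"
}

# Possible use (not executed here):
#   VBoxManage internalcommands createrawvmdk \
#       -filename /rpool/vboxzones/myvbox/myvbox.vmdk \
#       -rawdisk "$(zvol_rdsk_path rpool/vboxzones/myvbox)"
```

The helper only checks the shape of the name; it does not verify that the dataset exists or that it really is a volume.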

Start the guest and connect to it with an RDP client (rdesktop or FreeRDP on Unix):

Host # VBoxManage startvm myvbox --type headless

Workstation $ xfreerdp /v:${Host_IP}

If you see the guest’s bootloader, you can proceed to install the guest from the ISO image. In the "Disks" screen of the installer you will see your disk.

Create a snapshot of the disk

Record the approximate time of the snapshot by creating a file with a timestamp in it:

Guest $ date > date

Now with this arrangement, we can create a snapshot of the volume:

Host # zfs snapshot rpool/vboxzones/myvbox@first

Wait some time and rewrite the timestamp:

Guest $ date > date

Power off the guest and roll back to the first snapshot. The -r option lets ZFS roll back to any snapshot, even an older one, by destroying the snapshots taken after it:

Guest # poweroff

Host # zfs rollback -r rpool/vboxzones/myvbox@first

Start the guest and check that the timestamp is set to the one from the first snapshot:

Guest $ cat date

Hooray! We have demonstrated that a VirtualBox guest works with ZFS snapshots.
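
Rolling back a volume while the guest is still running would change the disk underneath it, so a script may want to confirm the power state before calling zfs rollback. Below is a small sketch under stated assumptions: vm_state and vm_is_off are hypothetical helper names, and the input is expected to be the output of VBoxManage showvminfo with --machinereadable, which contains a VMState="..." line.

```shell
#!/bin/sh
# Hypothetical helper: extract the VM state from the output of
# `VBoxManage showvminfo <vm> --machinereadable`, read on stdin.
vm_state() {
    # Match the VMState line only, not VMStateChangeTime.
    sed -n 's/^VMState="\(.*\)"$/\1/p'
}

# Hypothetical wrapper: succeed only if the state read on stdin is "poweroff".
vm_is_off() {
    [ "$(vm_state)" = "poweroff" ]
}

# Possible use (not executed here):
#   VBoxManage showvminfo myvbox --machinereadable | vm_is_off \
#       && zfs rollback -r rpool/vboxzones/myvbox@first
```

This sketch treats only the "poweroff" state as safe; other stopped states would need to be added explicitly.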

Send guest disk to another ZFS pool

Now let's see if we can send the guest disk to another ZFS pool and run it from there.

Guest # poweroff

Host # zpool list

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

rpool 118G 40.2G 77.8G - - 18% 34% 1.00x ONLINE -

tank 232G 22.2G 210G - - 1% 9% 1.00x ONLINE -

Host # zfs send rpool/vboxzones/myvbox | zfs receive -v tank/vboxzones/myvbox

receiving full stream of rpool/vboxzones/myvbox@--head-- into tank/vboxzones/myvbox@--head--

received 9.34GB stream in 153 seconds (62.5MB/sec)

Now, “rewire” the pass-through disk so it points to the new location:

Host # VBoxManage storageattach myvbox --storagectl SATA --port 1 \

--device 0 --type hdd --medium emptydrive

Host # VBoxManage closemedium disk /rpool/vboxzones/myvbox/myvbox.vmdk \

--delete

0%...10%...20%...30%...40%...50%...60%...70%...80%...90%...100%

Host # VBoxManage internalcommands createrawvmdk \

-filename /rpool/vboxzones/myvbox/myvbox.vmdk \

-rawdisk /dev/zvol/rdsk/tank/vboxzones/myvbox

RAW host disk access VMDK file /rpool/vboxzones/myvbox/myvbox.vmdk created successfully.

Host # VBoxManage storageattach myvbox --storagectl SATA --port 1 \

--device 0 --type hdd --medium /rpool/vboxzones/myvbox/myvbox.vmdk \

--mtype writethrough

Host # VBoxManage startvm myvbox --type headless

Waiting for VM "myvbox" to power on…

VM "myvbox" has been successfully started.

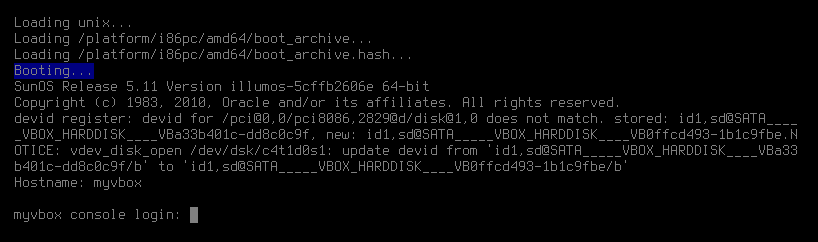

On boot the guest notices that the disk’s device ID does not match the recorded one, corrects it to what we actually have, and does not warn on the next boot.

So, we proved that the guest's disk can be sent to another ZFS pool and successfully started from there.
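
The re-pointing sequence used above (detach, close the old VMDK, create a new pass-through VMDK, attach) is easy to get wrong when typed by hand. As a hedged sketch, the hypothetical helper print_repoint_cmds below only prints the same four commands for a given guest, VMDK path, and target volume, so they can be reviewed before being run; it assumes the SATA controller name and port 1 used in this article.

```shell
#!/bin/sh
# Hypothetical dry-run helper: print (do not run) the commands that re-point
# the guest's pass-through VMDK at a different ZFS volume.
# Arguments: VM name, VMDK path, target ZFS volume name.
print_repoint_cmds() {
    vm=$1
    vmdk=$2
    vol=$3
    cat <<EOF
VBoxManage storageattach $vm --storagectl SATA --port 1 --device 0 --type hdd --medium emptydrive
VBoxManage closemedium disk $vmdk --delete
VBoxManage internalcommands createrawvmdk -filename $vmdk -rawdisk /dev/zvol/rdsk/$vol
VBoxManage storageattach $vm --storagectl SATA --port 1 --device 0 --type hdd --medium $vmdk --mtype writethrough
EOF
}

# Possible use (prints four lines for review):
#   print_repoint_cmds myvbox /rpool/vboxzones/myvbox/myvbox.vmdk tank/vboxzones/myvbox
```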

Start guest from an encrypted volume

Now, let's see if the guest starts from an encrypted ZFS volume.

First, create an encrypted file system tank/vboxzones_encrypted with a passphrase entered at the prompt:

Host # zfs create -o encryption=on -o keyformat=passphrase \

-o keylocation=prompt tank/vboxzones_encrypted

Enter passphrase:

Re-enter passphrase:

Then send the stream to the new encrypted dataset:

Host # zfs send rpool/vboxzones/myvbox | zfs receive -v tank/vboxzones_encrypted/myvbox

receiving full stream of rpool/vboxzones/myvbox@--head-- into tank/vboxzones_encrypted/myvbox@--head--

received 9.34GB stream in 166 seconds (57.6MB/sec)

And recreate the guest pass-through disk to point to the encrypted dataset:

Host # VBoxManage storageattach myvbox --storagectl SATA --port 1 \

--device 0 --type hdd --medium emptydrive

Host # VBoxManage closemedium disk /rpool/vboxzones/myvbox/myvbox.vmdk \

--delete

0%...10%...20%...30%...40%...50%...60%...70%...80%...90%...100%

Host # rm /rpool/vboxzones/myvbox/myvbox.vmdk

Host # VBoxManage internalcommands createrawvmdk \

-filename /rpool/vboxzones/myvbox/myvbox.vmdk \

-rawdisk /dev/zvol/rdsk/tank/vboxzones_encrypted/myvbox

RAW host disk access VMDK file /rpool/vboxzones/myvbox/myvbox.vmdk created successfully.

Host # VBoxManage storageattach myvbox --storagectl SATA --port 1 \

--device 0 --type hdd --medium /rpool/vboxzones/myvbox/myvbox.vmdk \

--mtype writethrough

Host # VBoxManage startvm myvbox --type headless

Waiting for VM "myvbox" to power on…

VM "myvbox" has been successfully started.

To prove that we really run from an encrypted volume, let's unload the encryption key from memory manually (restarting the Host would have the same effect) and start the guest again:

Host # zfs unmount /tank/vboxzones_encrypted

Host # zfs unload-key tank/vboxzones_encrypted

Host # VBoxManage startvm myvbox --type headless

Waiting for VM "myvbox" to power on…

VBoxManage: error: Could not open the medium '/rpool/vboxzones/myvbox/myvbox.vmdk'.

VBoxManage: error: VD: error VERR_ACCESS_DENIED opening image file '/rpool/vboxzones/myvbox/myvbox.vmdk' (VERR_ACCESS_DENIED)

VBoxManage: error: Details: code NS_ERROR_FAILURE (0x80004005), component MediumWrap, interface IMedium

As expected, it failed because the encrypted volume is unreadable to VirtualBox. Let's load the key to decrypt it and start the guest again:

Host # zfs load-key tank/vboxzones_encrypted

Enter passphrase for 'tank/vboxzones_encrypted':

Host # VBoxManage startvm myvbox --type headless

Waiting for VM "myvbox" to power on…

VM "myvbox" has been successfully started.

After decryption the guest starts correctly.
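
Instead of waiting for VERR_ACCESS_DENIED, a start-up script could check whether the key is loaded first. The sketch below relies on the ZFS keystatus property, which reports "available" when the key is loaded and "unavailable" when it is not; key_is_loaded is a hypothetical helper name introduced only for illustration.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the encryption key of the given
# dataset is currently loaded, based on the ZFS "keystatus" property.
key_is_loaded() {
    [ "$(zfs get -H -o value keystatus "$1")" = "available" ]
}

# Possible use (not executed here):
#   key_is_loaded tank/vboxzones_encrypted \
#       || zfs load-key tank/vboxzones_encrypted
#   VBoxManage startvm myvbox --type headless
```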

In these three examples we

- created a snapshot and then rolled back to it,

- sent the guest disk as a ZFS stream to another ZFS pool, and

- started the guest off an encrypted dataset.

Thus we demonstrated that we can leverage OS-level file system features in VirtualBox guests, thanks to the little-known ability of VirtualBox to use plain storage devices.

Concerns and Future directions

Unfortunately, not everything works ideally. Whatever I try, disk IO on the raw ZFS volume is significantly slower than on a VDI disk (or in the Global Zone, which I treat here mostly as a baseline for comparison).

I ran the “seqwr” and “seqrd” test modes of the fileio test from the sysbench 0.4.1 benchmark on an old Samsung 840 PRO SSD (MLC), which seems to show some wear:

| Operation / Storage type | Global Zone (host) | VDI disk (guest) | ZFS dataset (guest) |
| --- | --- | --- | --- |
| Sequential Write (2Gb file) | 36.291 Mb/sec | 54.302 Mb/sec | 11.847 Mb/sec |
| Sequential Read (2Gb file) | 195.63 Mb/sec | 565.63 Mb/sec | 155.21 Mb/sec |

There are two things of concern:

- the ZFS volume is about three times slower on sequential write, and ~20% slower on sequential read, than the Global Zone;

- the VDI disk performance can actually be higher (sic) than in Global Zone.

The first concern may mean that an unneeded fsync() occurs at some layer, and the second may mean that something fishy is happening with synchronizing files to the underlying file system on the hypervisor side. Perhaps fsync() is being ignored to some degree? (In any case these numbers are to be taken with a grain of salt and are good only to show the contrast between various storage types and their potential limitations.)
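
The ratios quoted in the list above can be recomputed from the table with a few lines of shell. This is only an arithmetic check on the published figures, not a new measurement; ratio is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print a / b with two decimals.
# The inputs are the throughput figures from the table, in the units
# reported by sysbench; only the ratios matter here.
ratio() {
    LC_ALL=C awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f\n", a / b }'
}

ratio 36.291 11.847   # host vs. ZFS volume, sequential write  -> 3.06
ratio 155.21 195.63   # ZFS volume vs. host, sequential read   -> 0.79
ratio 565.63 195.63   # VDI disk vs. host, sequential read     -> 2.89
```

So the ZFS volume writes at roughly a third of the host's speed and reads at about 79% of it, while the VDI disk appears to read almost three times faster than the host itself.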

To summarize, it turns out that the level of integration we see with KVM on various illumos distributions can be matched with VirtualBox, as we seem to have the necessary building blocks. But it remains to be investigated what the culprit is on the IO performance side, and how Zones can be added to the mix to achieve additional tenant isolation (especially because VirtualBox runs as root due to the use of ZFS volumes).

Acknowledgement: Johannes Schlüter’s ZFS and VirtualBox blog post inspired me to write this article.

Top comments (5)

Things to explore as to why it could be slower on zfs:

- [...] recordsize for filesystem, volblocksize for volume) you could be seeing write inflation. In the host, look for a lot of reads during a write workload and look to see if the amount of data written by the host is significantly more than that written to the guest.

- [...] zfs_immediate_write_sz) but vbox is chopping them up into smaller writes, the data may be written to the zil (zil exists even if log devices don't) and again to its permanent home.

Thanks Mike! Very much appreciate your comment. I will go thru those things and see what difference they make, just started with ashift, which was indeed set to 9 (512B) but should be set to 13 (8K).

Minor typo:

"control it's resources, and more." ->

"control its resources, and more."

Thanks, Jon. Typo fixed.

a brilliant use of virtualisation ! using it to experiment with new file systems WITHOUT messing up your host or native env