I wrote a blog post about configuring a complete Electron application production pipeline; you can visit the Medium post here if you'd like to read more.

Alternatively, a copy of the article is below. I've tried to reproduce the styling as best I can within this Markdown editor, so sorry for any inconsistencies.

This article outlines a complete Electron project pipeline, from development through to rollout. I’ll be covering everything from creating your initial Electron boilerplate with React, all the way through to building and publishing to an Amazon S3 bucket. We will then look at creating a static Gatsby site to list our distributions, delving into automatic S3 deployment and using CloudFront and Route 53. The complete project for this can be found on GitHub.

Let’s get our project started by laying out our boilerplate. We’re going to make this easier by using create-reactron-app. Short disclaimer: I wrote it, so if you’re having problems, sorry! Okay, back to work. Install create-reactron-app globally and then run it with your PROJECT_NAME.

```bash
npm install -g create-reactron-app
create-reactron-app PROJECT_NAME
cd PROJECT_NAME
```

It’s that simple! Now you have a fully fledged Electron app with a React boilerplate. Run npm run start to open a development environment, or npm run build && npm run dist to compile distribution binaries. What your app does and looks like from here is up to you. In the next section we will look at publishing your distributions so they’re available to anyone!

Publishing Distributions

Let’s look at deploying our binaries for public access using Amazon S3. You’ll need to set up an Amazon AWS account before we start, and ideally have some experience with AWS services.

Getting your credentials

Log in to your AWS account and open the IAM service, navigating into the Users area. Select Add user and specify a name for the user who will access this project’s deployment bucket; I named mine after my project, agora-deploy. Tick Programmatic access and proceed to Set permissions. Here, search for ‘S3’ and select a policy granting full S3 access, such as the AWS-managed AmazonS3FullAccess policy; this covers the read-write access we need. Finally, add any tags, then Review and Create user once you’ve configured any extras. Be sure to copy the Access key ID and Secret access key displayed, as we will need these later to configure the AWS CLI. Your new user’s permissions should now include at least that S3 policy.

AWS Command Line Interface

Please follow the official AWS CLI installation instructions to install and set up the AWS CLI for your operating system.

Once you’ve got the command line tools set up, open the credentials file with nano ~/.aws/credentials and insert the keys we copied earlier when creating the IAM user. I’ve named my profile agora-deploy; named profiles are useful if you have multiple projects with separate AWS access on your system.

```ini
[agora-deploy]
aws_access_key_id=JFRSHDKGYCNFPZMWUTBV
aws_secret_access_key=FK84mfPSdRtZgpDqWvb04fDen0fr3DZ87hTYEE1n
```
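Each bracketed header in this file starts a separate named profile. When no AWS_ACCESS_KEY_ID/AWS_SECRET_ACCESS_KEY environment variables are set, AWS tools and SDKs read the profile named by the AWS_PROFILE environment variable, falling back to [default]. The minimal sketch below illustrates that lookup; parseAwsCredentials and selectProfile are hypothetical helpers, not the SDK’s own code, the [default] values are made up, and the parser only handles the simple key=value layout shown above.

```javascript
// Hypothetical helper: parse an INI-style AWS credentials file into
// { profileName: { key: value } }. Handles the simple key=value layout
// above; the real AWS parsers cover more edge cases.
function parseAwsCredentials(text) {
  const profiles = {};
  let current = null;
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    // Skip blank lines and comments.
    if (line === '' || line.startsWith('#') || line.startsWith(';')) continue;
    const header = line.match(/^\[(.+)\]$/);
    if (header) {
      // A [name] header starts a new profile.
      current = header[1].trim();
      profiles[current] = profiles[current] || {};
    } else if (current !== null && line.includes('=')) {
      const eq = line.indexOf('=');
      profiles[current][line.slice(0, eq).trim()] = line.slice(eq + 1).trim();
    }
  }
  return profiles;
}

// Illustrates the default choice: the profile named by AWS_PROFILE,
// or [default] when the variable is unset.
function selectProfile(profiles, env = process.env) {
  const name = env.AWS_PROFILE || 'default';
  return { name, credentials: profiles[name] };
}

const file = `
[default]
aws_access_key_id=EXAMPLEDEFAULTKEY
aws_secret_access_key=exampleDefaultSecret

[agora-deploy]
aws_access_key_id=JFRSHDKGYCNFPZMWUTBV
aws_secret_access_key=FK84mfPSdRtZgpDqWvb04fDen0fr3DZ87hTYEE1n
`;

const profiles = parseAwsCredentials(file);
console.log(selectProfile(profiles, {}).name); // default
console.log(selectProfile(profiles, { AWS_PROFILE: 'agora-deploy' }).name); // agora-deploy
```

In practice this means a tool will only use the agora-deploy keys when AWS_PROFILE=agora-deploy is set in its environment.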

S3 Buckets

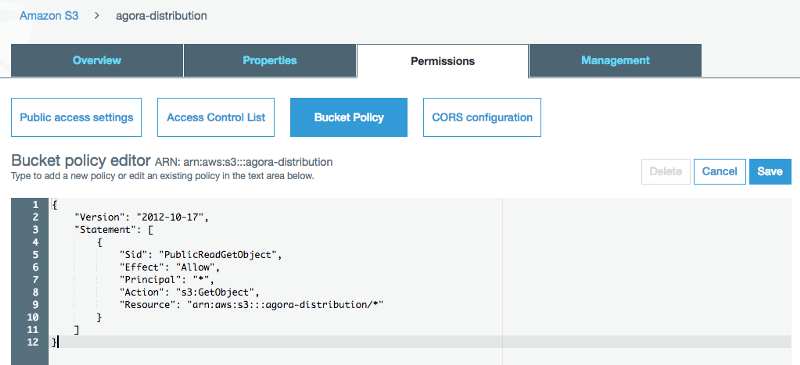

Now that you’ve set up your command line, navigate back to the AWS console and create a new bucket to hold your distribution binaries. The default settings are fine, with one exception: untick Block all public access, otherwise S3 will reject the public bucket policy below. Then open the bucket’s Permissions tab and go to Bucket Policy.

Insert the following policy, which grants public read access (s3:GetObject) to the bucket’s objects. Replace YOUR_BUCKET_ARN with your bucket’s ARN (arn:aws:s3:::YOUR_BUCKET_NAME), followed by the path you’re opening up; I used /* here for all contents.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "PublicReadGetObject",
      "Effect": "Allow",
      "Principal": "*",
      "Action": "s3:GetObject",
      "Resource": "YOUR_BUCKET_ARN/*"
    }
  ]
}
```
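To see how the placeholder expands, here’s a small sketch that builds the same policy for a given bucket name. An S3 bucket ARN has the form arn:aws:s3:::BUCKET_NAME (with no region or account ID), so the Resource covering every object is that ARN plus /*. The publicReadPolicy helper and its optional prefix parameter are hypothetical; agora-distribution is the bucket name from the example download links later in this article.

```javascript
// Hypothetical helper: build the public-read bucket policy above.
// S3 bucket ARNs look like arn:aws:s3:::BUCKET_NAME, and the trailing
// "/*" (or "/some-prefix/*") selects the objects the statement covers.
function publicReadPolicy(bucketName, prefix = '*') {
  return {
    Version: '2012-10-17',
    Statement: [
      {
        Sid: 'PublicReadGetObject',
        Effect: 'Allow',
        Principal: '*',
        Action: 's3:GetObject',
        Resource: `arn:aws:s3:::${bucketName}/${prefix}`,
      },
    ],
  };
}

// Print the policy so it can be pasted into the Bucket Policy editor.
console.log(JSON.stringify(publicReadPolicy('agora-distribution'), null, 2));
```

Passing a prefix such as 'releases/*' would limit public access to that folder instead of the whole bucket.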

And you’re set: the bucket is now ready to serve your distributions. You could upload the build files manually, or continue to the next section, where we will look at automating distribution publishing.

Configuring Electron-Builder

We need to add some additional configuration to our Electron build properties for our new S3 bucket. Add the following publish settings to your package.json.

```json
"build": {
  ...
  "publish": [
    {
      "provider": "s3",
      "bucket": "YOUR_BUCKET_NAME"
    }
  ]
}
```

I also updated my scripts with the following two commands.

```json
"scripts": {
  ...
  "dist": "electron-builder --publish never --linux --mac",
  "release": "electron-builder --publish always --linux --mac"
}
```

The dist script compiles binaries without publishing, and the release script runs the same build with --publish always. If you’ve configured your AWS command line credentials and spelled everything correctly, you should be able to run npm run release to have electron-builder build and publish your distributions. Because the credentials above live under the agora-deploy profile rather than [default], point the AWS SDK used by electron-builder at that profile, for example with AWS_PROFILE=agora-deploy npm run release on macOS or Linux.

Content Delivery

Now that our application project is set up, building and publishing, we can move on to creating a web interface that helps users easily find the installer they need. The source code for this section can be found in the following GitHub repository.

Project Setup

I’m going to build a static site to list the distributions, using Gatsby with automatic publishing to S3 and delivery through CloudFront. Let’s start by installing Gatsby’s command line tools, then run gatsby new followed by our application name to create the project boilerplate.

```bash
npm install -g gatsby-cli
gatsby new APP_NAME
```

I’ll quickly add links to the binaries using the S3 Object URL within the index.js component. You can build your site out however you’d like here. Gatsby’s Link component is meant for pages within the site, so these external links use plain anchor tags:

```jsx
<a href="https://s3-ap-southeast-2.amazonaws.com/agora-distribution/Agora-0.2.3.dmg">Mac</a>
<a href="https://s3-ap-southeast-2.amazonaws.com/agora-distribution/Agora+0.2.3.AppImage">Linux</a>
```
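Rather than hard-coding each full URL, the links could be generated from the bucket, region and version. This is a minimal sketch: objectUrl is a hypothetical helper, the bucket, region and file names are assumed from the example links above (including the assumption that the + in the Linux URL stands for a space in the object key), and it uses S3’s virtual-hosted-style URL format, https://BUCKET.s3.REGION.amazonaws.com/KEY, as an equivalent to the path-style links.

```javascript
// Hypothetical helper: build a virtual-hosted-style S3 object URL,
// encoding each path segment so spaces become %20 while "/" is kept.
function objectUrl(bucket, region, key) {
  const encodedKey = key.split('/').map(encodeURIComponent).join('/');
  return `https://${bucket}.s3.${region}.amazonaws.com/${encodedKey}`;
}

// Assumed values, taken from the example links above.
const BUCKET = 'agora-distribution';
const REGION = 'ap-southeast-2';
const VERSION = '0.2.3';

const distributions = [
  { label: 'Mac', key: `Agora-${VERSION}.dmg` },
  // Assumes the "+" in the original Linux URL is a space in the key.
  { label: 'Linux', key: `Agora ${VERSION}.AppImage` },
];

for (const { label, key } of distributions) {
  console.log(`${label}: ${objectUrl(BUCKET, REGION, key)}`);
}
```

In index.js the same list could be mapped to anchor tags, so bumping VERSION updates every link at once.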

Finally, we can run npm run build to compile our static site, then move on to configuring S3 and CloudFront to serve it. The build output goes into the public folder; the contents of this folder are what we will move to our S3 bucket.

Please be aware that some AWS services can take up to 24 hours to propagate changes across all edge locations and become discoverable.

Before we can begin, you’ll need to have a domain name ready. We could get by with the resource URLs AWS generates for our instances, but there’s really no reason to.

Configuring HTTPS

As a bonus to our users, we’re going to set up SSL so they can trust we really are who we say we are. Within the AWS console, switch to the US East (N. Virginia) region, since CloudFront only accepts Certificate Manager certificates issued there. Then navigate to Certificate Manager and request a certificate. Follow the prompts, entering your domain (or multiple domains), and select DNS validation as the validation method.

Once your certificate has been requested, follow the listed instructions to complete issuance; with DNS validation this means adding the CNAME record Certificate Manager provides to your domain’s DNS. It may take up to an hour for Route 53 and Certificate Manager to synchronize and issue the certificate.

S3 Hosting

We’re going to need an S3 bucket to hold all our static build files. Open S3 and create a new bucket, using your domain name as the bucket’s name. Next, open the bucket, navigate to Properties and enable Static website hosting, setting the index document to index.html and the error document to 404.html, the default files output by Gatsby’s build process. This tells the bucket to act as a web server, returning the index document when no resource is specified and the error document when a resource isn’t found. We don’t need to configure the bucket policy yet; we will have CloudFront do that later.
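To make the index and error document behaviour concrete, here’s a conceptual sketch (not AWS code) of roughly how the bucket’s website endpoint resolves a request. The resolveRequest helper and the sample keys are hypothetical, and this behaviour applies to requests served through the website endpoint rather than the bucket’s regular REST endpoint.

```javascript
// Conceptual sketch of S3 static website routing, using the index and
// error documents configured above. Simplified: real S3 has more rules.
const websiteConfig = { indexDocument: 'index.html', errorDocument: '404.html' };

function resolveRequest(path, objectKeys, config = websiteConfig) {
  let key = path.replace(/^\/+/, ''); // object keys have no leading slash
  if (key === '' || key.endsWith('/')) {
    key += config.indexDocument; // "folder" requests get the index document
  }
  if (objectKeys.has(key)) {
    return { status: 200, key };
  }
  // No exact match, but a "folder" with an index document exists:
  // S3 redirects /about to /about/ in this case.
  if (objectKeys.has(`${key}/${config.indexDocument}`)) {
    return { status: 302, location: `/${key}/` };
  }
  return { status: 404, key: config.errorDocument };
}

// Hypothetical bucket contents after a Gatsby build.
const keys = new Set(['index.html', '404.html', 'about/index.html']);
console.log(resolveRequest('/', keys));
console.log(resolveRequest('/about', keys));
console.log(resolveRequest('/missing', keys));
```

This is also why Gatsby’s folder-per-page output (about/index.html) maps cleanly onto a bucket configured this way.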

Setting up CloudFront

We could configure our S3 bucket to serve our content directly, but then we lose the advantages of SSL encryption and high-speed delivery. Let’s set up a CloudFront distribution instead, which builds an edge cache from our bucket contents and delivers it over SSL. Open the CloudFront service within AWS, select Create Distribution, and Get Started with a Web distribution. We have a little bit to configure here, so be patient.

- Origin Settings
  - Origin Domain Name: your bucket’s domain name, e.g. YOUR_BUCKET_NAME.s3.amazonaws.com (select it from the dropdown)
- Default Cache Behavior Settings
  - Viewer Protocol Policy: Redirect HTTP to HTTPS
- Distribution Settings
  - Alternate Domain Names (CNAMEs): your domain
  - SSL Certificate: Custom SSL Certificate (select the certificate you requested earlier)
  - Default Root Object: index.html
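If you later want to script this step, here’s a minimal sketch mapping the settings above onto CloudFront API field names from DistributionConfig. The cloudFrontSettings helper, the S3- origin ID and the certificate ARN are hypothetical, and the object is deliberately partial: a real CreateDistribution request needs more required fields. It also encodes one requirement worth knowing: CloudFront only accepts certificates issued by Certificate Manager in us-east-1.

```javascript
// Sketch only: a partial DistributionConfig fragment mirroring the
// console settings above. Not a complete CreateDistribution request.
function cloudFrontSettings({ bucketName, domain, certificateArn }) {
  // CloudFront only accepts ACM certificates issued in us-east-1.
  if (!certificateArn.startsWith('arn:aws:acm:us-east-1:')) {
    throw new Error('CloudFront needs an ACM certificate from us-east-1');
  }
  const originId = `S3-${bucketName}`; // any ID unique within the distribution
  return {
    Aliases: { Quantity: 1, Items: [domain] }, // Alternate Domain Names (CNAMEs)
    DefaultRootObject: 'index.html',
    Origins: {
      Quantity: 1,
      Items: [{ Id: originId, DomainName: `${bucketName}.s3.amazonaws.com` }],
    },
    DefaultCacheBehavior: {
      TargetOriginId: originId,
      ViewerProtocolPolicy: 'redirect-to-https', // Redirect HTTP to HTTPS
    },
    ViewerCertificate: {
      ACMCertificateArn: certificateArn,
      SSLSupportMethod: 'sni-only',
    },
  };
}

// Hypothetical certificate ARN; use the one Certificate Manager issued you.
const settings = cloudFrontSettings({
  bucketName: 'agora.pierias.com',
  domain: 'agora.pierias.com',
  certificateArn: 'arn:aws:acm:us-east-1:123456789012:certificate/example-id',
});
console.log(JSON.stringify(settings, null, 2));
```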

You can set up any additional configuration you like here, such as custom headers. Next, select Create Distribution and Amazon will start up the new distribution. This will take some time, so go have a coffee while it starts and propagates across all edge locations. We will need the distribution to be discoverable for the next section, configuring Route 53.

Domain Routing

So we’ve set up CloudFront to cache and serve our static site from S3; now we need to configure Route 53 to give our CloudFront distribution a user-friendly domain. Navigate over to Route 53 and select the hosted zone associated with your domain, or create one if you haven’t already. Then select Create Record Set with type A (IPv4 address), select Alias: Yes and set the Alias Target to your CloudFront distribution. Again, the CloudFront distribution could take up to 24 hours to appear across other services. Once this is set up, you should be able to visit your domain after a few minutes and see your static site appear.
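The same alias record can be expressed as a Route 53 ChangeResourceRecordSets change batch, which is handy if you ever script this step. In this sketch the aliasToCloudFront helper and the example distribution domain d111111abcdef8.cloudfront.net are hypothetical; Z2FDTNDATAQYW2 is the fixed hosted zone ID Route 53 expects for any CloudFront alias target. The printed JSON could be saved to a file and passed to aws route53 change-resource-record-sets with --hosted-zone-id YOUR_ZONE_ID and --change-batch file://change.json.

```javascript
// Fixed hosted zone ID Route 53 uses for every CloudFront alias target.
const CLOUDFRONT_HOSTED_ZONE_ID = 'Z2FDTNDATAQYW2';

// Hypothetical helper: build an UPSERT change batch pointing an A (alias)
// record for `domain` at a CloudFront distribution's domain name.
function aliasToCloudFront(domain, distributionDomain) {
  return {
    Changes: [
      {
        Action: 'UPSERT', // create the record, or update it if it exists
        ResourceRecordSet: {
          Name: domain,
          Type: 'A',
          AliasTarget: {
            HostedZoneId: CLOUDFRONT_HOSTED_ZONE_ID,
            DNSName: distributionDomain,
            EvaluateTargetHealth: false,
          },
        },
      },
    ],
  };
}

console.log(JSON.stringify(aliasToCloudFront('agora.pierias.com', 'd111111abcdef8.cloudfront.net'), null, 2));
```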

Automating S3 Deployment

We previously copied the entire contents of the build folder into our S3 bucket manually. There’s nothing technically wrong with this, but we’re all about automation and making things simpler! So let’s set up gatsby-plugin-s3 to deploy our build to S3 with just one command.

```bash
npm install --save gatsby-plugin-s3
```

Then we want to configure the plugin within our gatsby-config.js file. Add the following configuration object into the plugins array:

```javascript
plugins: [
  ...
  {
    resolve: 'gatsby-plugin-s3',
    options: {
      bucketName: 'agora.pierias.com'
    }
  },
  ...
]
```

Finally, we’re going to add a deploy command to the package.json like so:

```json
"scripts": {
  ...
  "deploy": "gatsby-plugin-s3 deploy",
  ...
}
```

Before you can run this, you’ll need to run npm run build to update the plugins in .cache; then you can run npm run deploy and follow the prompts to deploy to S3. Remember to set up your ~/.aws/credentials with the aws_access_key_id and aws_secret_access_key from the AWS IAM service. More details on the S3 plugin can be found here.

Thank you!

You should now have a full production pipeline set up. As a next step, we could add a GraphQL query to our Gatsby site to fetch the latest build resource URL from our deployment S3 bucket. If you’d like to visit the live website deployed in this example, it can be found at Agora.
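As a rough sketch of that idea, though skipping GraphQL entirely, the site could read the update metadata files electron-builder uploads next to the binaries when publishing (latest-mac.yml, latest-linux.yml) and pick out the current version and artifact names. parseLatestYml and latestDmg are hypothetical helpers, the parsing is line-based rather than a full YAML parser, the sample values are made up, and the exact file entries depend on your build targets and electron-builder version. latestDmg assumes Node 18+ or a browser (for the global fetch) and the public-read bucket policy from earlier.

```javascript
// Hypothetical helper: extract the version and the "- url:" entries from
// an electron-builder latest-*.yml file. Minimal line-based parsing only.
function parseLatestYml(text) {
  const unquote = (s) => s.trim().replace(/^['"]|['"]$/g, '');
  const versionMatch = text.match(/^version:[ \t]*(.+)$/m);
  const urls = [...text.matchAll(/^[ \t]*-[ \t]*url:[ \t]*(.+)$/gm)].map((m) => unquote(m[1]));
  return { version: versionMatch ? unquote(versionMatch[1]) : null, urls };
}

// Hypothetical helper: fetch latest-mac.yml from the bucket and return
// the .dmg file name listed in it, or null if none is listed.
async function latestDmg(bucketUrl) {
  const res = await fetch(`${bucketUrl}/latest-mac.yml`);
  if (!res.ok) throw new Error(`Request failed: ${res.status}`);
  const { urls } = parseLatestYml(await res.text());
  return urls.find((u) => u.endsWith('.dmg')) || null;
}

// Made-up sample in the shape of a latest-mac.yml file.
const sampleYml = `version: 0.2.3
files:
  - url: Agora-0.2.3-mac.zip
    sha512: abc
    size: 1
  - url: Agora-0.2.3.dmg
    sha512: def
    size: 2
path: Agora-0.2.3-mac.zip
`;
console.log(parseLatestYml(sampleYml));
```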
