Tutorial - How to build your own LinkedIn Profile Scraper in 2022

Table of Contents

Intro

I am Malcolm, a Full-Stack developer and Technical writer that specialises in using languages like Python to break down todays topic. How can we use a Profile scraper in today’s climate to extract details that you need from a secure website like LinkedIn?

First of all we need to discuss what is a profile scraper? If you are familiar with Python and have been using it for awhile you have probably come across the term profile scraper or Data extraction. A Profile scraper is essentially the extraction of configured data of your choice for profiles or data in general on a website of the programmer's choice.

Here you will be pulling information and details such as name, age, email address, phone number and relative information that the user has permitted on sites such as linkedin, Facebook, instagram etc and has allowed for public and available use according to the sites terms and conditions.

Now lets get started.

IDE

Open Your IDE and Terminal.For this I will be following along with VS Code but you can use any platform of your choice. I will also be using google chrome to pull the data from Linked In to my IDE.

We will first need to make sure we have some packages installed in order to pull the data so if you first access this website: https://www.pypi.org. You want to first make sure you have check what version you have of chrome. My version for this example is Version 103.0.5060.114 (Official Build) (64-bit).

You can access your Version by selecting the 3 dot settings on your browser, clcikng settings, and on the left hand side select “ About Chrome”

InstallPackages

So the packages you want to make sure are installed and set are:

Import request

From time import sleep

From selenium import webdriver

Import chromedriver_binary

Starting with Selenium you can install this from pypi or simply use pip install selenium.

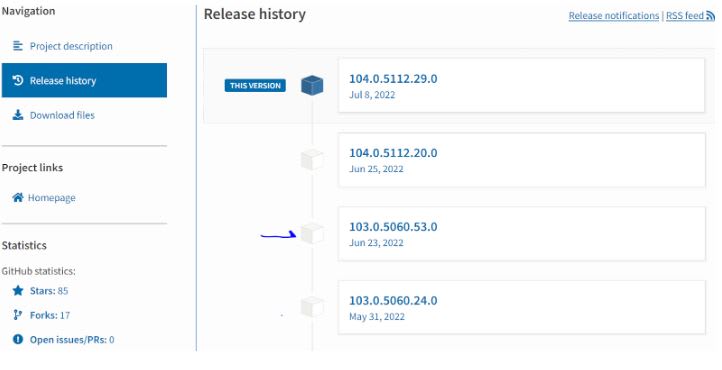

In terms of the chromedriver_binary we do not necessarily need to install the latest version but the version that is closest to your Chrome version. To find the correct version click into version release history on the left hand side and select the closest version for you.

So in my case its best for me to choose 103.0.5060.53.0 as its closets to my Chrome version and the latest version which is 104.0.5112.20.0 will not work for me.

AnalyzingCode

Now lets go through the code:

With the driver = webdriver.chrome() with this instruction will open the chrome application and wait for 5 seconds. Then it will maximize the window and again it will wait for 5 seconds.

This is not required but 5 seconds is inserted here so that when the code runs through you can see whats happening. After that we will open the base request in linkedin main task to get the cookies, so that when you send a profile request with the backend we have to set cookies thats why im sending a linkedin.com based request to get cookies from the chrome browser.

Now with cookies_dict = {} is a dictionary variable to save the cookie we got from the chrome browser. In the cookies_dict = {} it will save a cookie and a pair of names and value.

The next instruction will close the browser. Cookie is already saved with the cookies_dict = {} here a send request with requests.get then I have to set a linkedin profile url here we set the cookies we save the cookies_dict = {} then we set all required headers like user agent accept etc. now whatever response I get from the text we save an html variable, html equal response dot text response is this variable .

The next task is to save whatever response we get in your local folder. For this have to give the full path so drive then whatever folder and filename here is the d drive and linkedin underscore pages this is the folder that I have to save the html page in name of one html now I take one function and syntax is open and have to apply the full html path next comma then ‘w’ it is for write function we have to write an html page, here I mentioned ‘w’ then I have to apply encoding to right page we have to apply utf-8 encoding so we can write encoding is equal utf-8 next is the page function right I have to write the text I got in response so I mentioned html right I have to write the fun.write(html). Now we are going to close the function with page_fun.close().

So this function that writes a page in the d drive folder linked_pages.

Once we run this code it will open your browser automatically and then maximise it shortly after launching linkedin.com it will send a backend request of the profile and it will save the html page and the folder and is concurrently saved as 1.html.

We can open this with the chrome browser by right clicking and clicking open with and select chrome. This will then launch to a page like shown below:

ResponseText

SO now to check the response text just write in to the console variable name html. And this is where you will find the html variable response text.

You can parse the data once it is saved in the local drive you can parse the data from the response text to the data of the profile name, number of employees, location, followers, about us section, website, company size, headquarters, specialties and much more all without logging in or having an account.

Now if you require multiple pages you can use a for loop. You don't have to open the browser multiple times, you have to send a request with a different url because a cookie is already saved and cookie_dict{} is already applied here so you don't have to open the page again and again.

So you can change the linkedin profile url and either you can create a list and send different urls and save pages. You Can change the html path with 1,2,3 incremental value.

However if you have some issues trying to extract data from a database I suggest you should have a look at this video here.

Conclusion

Now to wrap up this article:

Why is this useful?

Web Scraping sites with Python on LinkedIn is useful in the hands of some Developers as it is an easy way to extract data in mass quantities and have it formed to structured web data that you would not be able to get normally via an API. It is also high speed and cuts time once you have learned how to do it once and can use again for what other projects you may want to use in the future.

I hope you enjoyed this Tutorial.

For more information on this Visit this link:

https://nubela.co/proxycurl/?utm_campaign=affiliate_marketing&utm_source=affiliate_link&utm_medium=affiliate&utm_content=malcolm

Thanks for reading,

Malcz/Mika

Top comments (0)