Hey folks! I'm starting a series to cover popular code challenges that are often used on technical interviews. My intent is to make this a weekly series and that way we could share our solutions in the comments. As a reference many of these challenges are taken from websites such as Hackerrank, Leetcode, InterviewCake and Codewars. (etc...)

What is Data Structures? Well, it is a particular way of organizing data in a computer/program so that it can be used effectively. Then, What is an algorithm? is a step-by-step procedure that takes an input instance (problem) as input/s and produces output for the problem (instance).

Warning: It's not guaranteed that you will be asked any of the coding or data structure / algorithmic questions, but they will give you an idea of what kind of questions you can expect in a real interview.

If you have no experience whatsoever in Data Structure and Algorithms, then you should visit either interview cake, Udemy Colt Steele or even Freecodecamp to get the basics down.

My intention is to cover the following during this series:

- Big O 🔗

- Arrays 🔗

- Linked list 🔗

- Stacks & Queues 🔗

- Hashes 🔗

- Sorting 🔗

- Trees (Binary Search Tree) 🔗

- Graphs 🔗

- System Design (Extra) 🔗

Note: Challenges will be presented like this => e.g: Big-O (Title) [Difficulty]

Ah...yes. Before I forget, All algorithms can be categorized in one of these paradigms:

- Brute Force Algorithm - check all possible solutions and select the best one.

- Dynamic Programming Alg. - solve the problem based on all the previous solutions.

- Greedy - Choosing the best solution at the moment, regardless of the consequences in the future.

- Divide & Conquer - divide the problem into a smaller set of issues to resolve and get the overall solution at the end.

Let's begin introducing our first guest Big O.

Big O

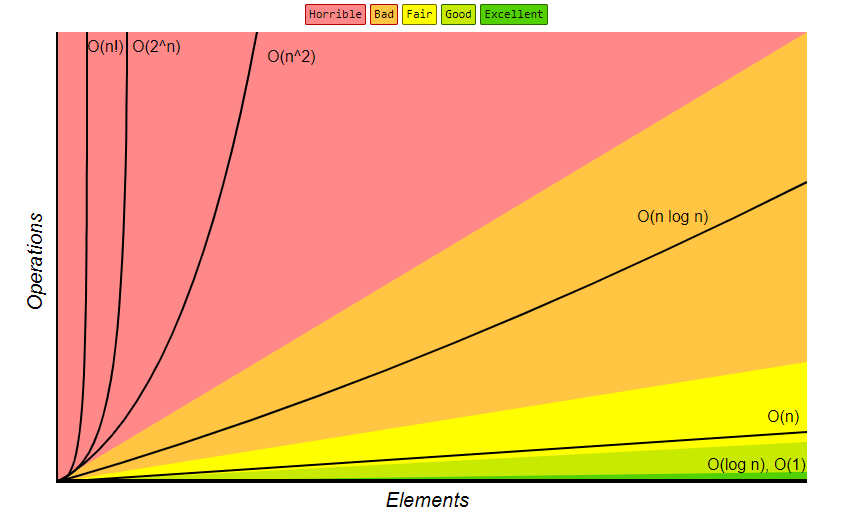

Allows us to determine the scalability of our code, this refers to how we measure the efficiency of our code. How can we exactly calculate the performance of our code? is it runtime speed? complexity/simplicity? Regardless the differences of our computer how do we calculate scalability again? We are able to measure it by how large the input size is and how much this slow down our function or algorithm (algorithmic efficiency).

Linear Time

const yoda = ['Baby Yoda']

const friends = ['Mandolorian', 'Luke', 'Leila', 'Clone A','Baby Yoda','Dark Vader']

const large = new Array(10000).fill('Baby Yoda')

const findBabyYoda = arr=> {

for(let i=0; i < arr.length; i++){

if(arr[i] === 'Baby Yoda'){

console.log('FOUND YODA!')

}

}

}

findBabyYoda(friends) // O(n) - Linear time

// The num of outputs increases proportionally with the num of inputs

Constant Time

const pineapples = [0,1,2,3,4,5]

const logFirstsPineapples = pineapples => {

console.log(pineapples[0]) // O(1) - constant time

console.log(pineapples[1]) // 0(1) - constant time

}

logFirstsPineapples(pineapples) // O(2)

1- What is the Big O of the below function? Solution

const firstChallenge => input => {

let a = 10;

a = 50 + 3;

for (let i = 0; i < input.length; i++) {

ramdomFunction();

let stranger = true;

a++;

}

return a;

}

2- What is the Big O of the below function? Solution

function secondChallenge(input) {

let a = 5;

let b = 10;

let c = 50;

for (let i = 0; i < input; i++) {

let x = i + 1;

let y = i + 2;

let z = i + 3;

}

for (let j = 0; j < input; j++) {

let p = j * 2;

let q = j * 2;

}

let whoRu= "I don't know";

}

Rules to help you Big(O) a bit better:

Worst Case:

Resource

Thanks for making it to the end of our first stop, what we saw today seemed pretty basic but this is only the beginning and it will get more challenging as we progress in this topic. If you have any questions, suggestions or anything to discuss in regards this topic please comment below.

Hope to see you in the comments!

![Cover image for Intro to Data Structures + Algo [Part 1]](https://media2.dev.to/dynamic/image/width=1000,height=420,fit=cover,gravity=auto,format=auto/https%3A%2F%2Fimages.unsplash.com%2Fphoto-1500964757637-c85e8a162699%3Fixlib%3Drb-1.2.1%26ixid%3DeyJhcHBfaWQiOjEyMDd9%26auto%3Dformat%26fit%3Dcrop%26w%3D1378%26q%3D80)

Top comments (3)

nice explaination, wait for next post

Good post lots of useful information here thanks.

Thanks that was helpful.