Overview

OpenShift Origin (OKD) is the community distribution of Kubernetes that powers Red Hat OpenShift. Built around a core of OCI container packaging and Kubernetes container cluster management, OKD is also augmented by application lifecycle management functionality and DevOps tooling. OKD provides a complete open-source container application platform.

🔰1. Prerequisites

Plan for three physical machines or VMs, all connected to the Internet:

Distribution: CentOS 7 Linux (minimal installation)

IP Address: 192.168.31.182 (master) with 8 GB RAM, 2 cores

IP Address: 192.168.31.144 (compute) with 4 GB RAM, 2 cores

IP Address: 192.168.31.94 (infra) with 4 GB RAM, 2 cores

Login username: root

Password: 123456

Note: The IP addresses and credentials above are examples only; use your own values. Please also check the official OKD 3.11 prerequisites.

🔰2. Install and enable OpenSSH for remote access with username and password

📗 Install the OpenSSH and net-tools packages, start the SSH service and check its status, enable it at boot, and finally show the machine's IP address.

yum -y install openssh-server openssh-clients net-tools

systemctl start sshd

systemctl status sshd

systemctl enable sshd

ifconfig

Note: You can now connect to each machine over SSH from a terminal or PuTTY.
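ifconfig prints every interface. If you only need the address to note down, here is a small sketch that uses the ip command (from the iproute package) instead of net-tools. The function name ipv4_addresses is hypothetical, and it assumes the one-line-per-address output format of ip -4 -o addr show.

```shell
# Print "<interface> <IPv4 address>" for every non-loopback interface.
# Reads the output of `ip -4 -o addr show` on stdin.
ipv4_addresses() {
  # Field 2 is the interface name, field 4 is "address/prefix".
  awk '$3 == "inet" && $2 != "lo" { split($4, a, "/"); print $2, a[1] }'
}
```

Usage: ip -4 -o addr show | ipv4_addresses prints lines such as ens33 192.168.31.182.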

🔰3. Common steps for all machines or nodes (master, compute, infra): log in to each machine and run the following

📗 Step1: Install base packages

yum install -y wget git zile net-tools bind-utils iptables-services bridge-utils bash-completion kexec-tools sos psacct openssl-devel httpd-tools python-cryptography python2-pip python-devel python-passlib java-1.8.0-openjdk-headless "@Development Tools"

yum update -y

yum install -y docker-1.13.1

systemctl start docker && systemctl enable docker && systemctl status docker

yum -y install https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm

sed -i -e "s/^enabled=1/enabled=0/" /etc/yum.repos.d/epel.repo
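The sed command above turns every enabled=1 line in epel.repo into enabled=0, so EPEL packages are not pulled in by accident during later updates. A minimal check, assuming the default path /etc/yum.repos.d/epel.repo; the function name is hypothetical:

```shell
# Succeed (return 0) only if no section in the given .repo file is enabled.
# Returns 2 if the file is missing or unreadable.
repo_file_all_disabled() {
  local repo_file="${1:-/etc/yum.repos.d/epel.repo}"
  [ -r "$repo_file" ] || return 2
  # Also catches "enabled = 1" with spaces, which the sed above would miss.
  ! grep -Eq '^[[:space:]]*enabled[[:space:]]*=[[:space:]]*1' "$repo_file"
}
```

When a package from EPEL is needed later, it can be enabled for a single command with yum --enablerepo=epel install -y <package>.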

📗 Step2: Add all nodes to /etc/hosts so that Ansible can later reach every machine by name

Open /etc/hosts (vi /etc/hosts) and add:

192.168.31.182 master.192.168.31.182.nip.io

192.168.31.144 compute.192.168.31.144.nip.io

192.168.31.94 infra.192.168.31.94.nip.io

Now, restart the network service:

service network restart
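Re-running the /etc/hosts step by hand easily leaves duplicate lines. Here is a hedged sketch of an idempotent version; add_nipio_hosts and its role:ip argument format are made up for this example.

```shell
# Hypothetical helper: append "<ip> <role>.<ip>.nip.io" lines to a hosts
# file, skipping any IP that already has an entry, so re-running it does
# not add duplicates.
# Usage: add_nipio_hosts <hosts_file> <role>:<ip> [<role>:<ip> ...]
add_nipio_hosts() {
  local hosts_file="$1"; shift
  local pair role ip
  for pair in "$@"; do
    role="${pair%%:*}"
    ip="${pair#*:}"
    # awk exits 0 only if some line's first field is exactly this IP.
    if [ -f "$hosts_file" ] &&
       awk -v ip="$ip" '$1 == ip { found = 1 } END { exit(found ? 0 : 1) }' "$hosts_file"; then
      continue
    fi
    printf '%s %s.%s.nip.io\n' "$ip" "$role" "$ip" >> "$hosts_file"
  done
}
```

Usage: add_nipio_hosts /etc/hosts master:192.168.31.182 compute:192.168.31.144 infra:192.168.31.94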

📗 Step3: Log in to each machine and set its hostname

hostnamectl set-hostname <role>.<machine-ip>.nip.io

For example, on each machine respectively:

hostnamectl set-hostname master.192.168.31.182.nip.io

hostnamectl set-hostname compute.192.168.31.144.nip.io

hostnamectl set-hostname infra.192.168.31.94.nip.io

Note: Each machine has exactly one hostname, so run only the matching command on each machine; don't set all three hostnames on a single machine.
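Because each hostname follows the <role>.<ip>.nip.io pattern, a small helper can build it and catch typos before hostnamectl is called. This is a sketch; nipio_fqdn and the three accepted role names are assumptions based on this guide's layout.

```shell
# Hypothetical helper: print "<role>.<ip>.nip.io" after validating inputs.
nipio_fqdn() {
  local role="$1" ip="$2"
  # Four dot-separated groups of 1-3 digits (does not range-check 0-255).
  local re='^[0-9]{1,3}(\.[0-9]{1,3}){3}$'
  case "$role" in
    master|compute|infra) ;;
    *) echo "unknown role: $role" >&2; return 1 ;;
  esac
  if ! [[ "$ip" =~ $re ]]; then
    echo "not an IPv4 address: $ip" >&2
    return 1
  fi
  printf '%s.%s.nip.io\n' "$role" "$ip"
}
```

Usage: name="$(nipio_fqdn master 192.168.31.182)" && hostnamectl set-hostname "$name", then hostnamectl status to confirm. The && keeps hostnamectl from running with an empty name if validation fails.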

📗 Step4: Edit /etc/NetworkManager/NetworkManager.conf so that NetworkManager stops rewriting the DNS settings (for example, each time the Wi-Fi router reboots).

Open the file (vi /etc/NetworkManager/NetworkManager.conf) and include:

[main]

#plugins=ifcfg-rh,ibft

dns=none
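Editing NetworkManager.conf works. As an alternative, NetworkManager also reads drop-in *.conf files from /etc/NetworkManager/conf.d/ (see the NetworkManager.conf man page), which keeps the change in its own file. A sketch, where the function name and the file name 90-dns-none.conf are arbitrary choices:

```shell
# Hypothetical helper: write a NetworkManager drop-in containing
# "[main] dns=none" so NetworkManager leaves /etc/resolv.conf alone.
# Prints the path of the file it wrote.
write_nm_dns_none() {
  local conf_dir="${1:-/etc/NetworkManager/conf.d}"
  local conf_file="$conf_dir/90-dns-none.conf"
  mkdir -p "$conf_dir"
  printf '[main]\ndns=none\n' > "$conf_file"
  printf '%s\n' "$conf_file"
}
```

Usage: write_nm_dns_none && systemctl restart NetworkManager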

📗 Step5: Configure the network interface to use Google DNS

ifconfig

cd /etc/sysconfig/network-scripts

Here, my interface was ens33, so edit ifcfg-ens33 (vi ifcfg-ens33) and include:

PEERDNS="no"

DNS1="8.8.8.8"
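The ifcfg edit can also be scripted so it is safe to repeat. A minimal sketch; set_ifcfg_var is a hypothetical helper, and it assumes the value contains no | or & characters (they are special in the sed replacement).

```shell
# Hypothetical helper: set KEY="value" in an ifcfg file, replacing an
# existing KEY= line or appending one, so re-running adds no duplicates.
set_ifcfg_var() {
  local file="$1" key="$2" value="$3"
  if grep -q "^${key}=" "$file"; then
    # Replace the whole existing line ("|" is the sed delimiter).
    sed -i "s|^${key}=.*|${key}=\"${value}\"|" "$file"
  else
    printf '%s="%s"\n' "$key" "$value" >> "$file"
  fi
}
```

Usage: set_ifcfg_var /etc/sysconfig/network-scripts/ifcfg-ens33 PEERDNS no and set_ifcfg_var /etc/sysconfig/network-scripts/ifcfg-ens33 DNS1 8.8.8.8 (replace ens33 with your interface name).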

📗 Step6: Edit /etc/resolv.conf to set the nameserver and search domain

Open /etc/resolv.conf (vi /etc/resolv.conf) and include:

search nip.io

nameserver 8.8.8.8

📗 Step7: Restart NetworkManager and the network service

systemctl restart NetworkManager

service network restart

📗 Step8: Configure SELinux on master, compute, and infra (OKD requires SELinux to be enabled)

Open /etc/selinux/config (vi /etc/selinux/config) and make sure it contains:

SELINUX=enforcing

SELINUXTYPE=targeted
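The same SELinux edit as a repeatable sketch; ensure_selinux_enforcing is a hypothetical helper that defaults to /etc/selinux/config.

```shell
# Hypothetical helper: force SELINUX=enforcing and SELINUXTYPE=targeted,
# replacing existing lines or appending them if absent.
ensure_selinux_enforcing() {
  local cfg="${1:-/etc/selinux/config}"
  local pair key value
  for pair in SELINUX=enforcing SELINUXTYPE=targeted; do
    key="${pair%%=*}"
    value="${pair#*=}"
    # "^SELINUX=" does not match "SELINUXTYPE=" because of the "=".
    if grep -q "^${key}=" "$cfg"; then
      sed -i "s/^${key}=.*/${key}=${value}/" "$cfg"
    else
      printf '%s=%s\n' "$key" "$value" >> "$cfg"
    fi
  done
}
```

The new mode takes effect after the reboot in Step9; getenforce should then print Enforcing.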

📗 Step9: Reboot machine

reboot

Note: If networking behaves unexpectedly after the reboot, restart NetworkManager and the network service again.

🔰4. Finally, log in to the master machine and follow the instructions below

📗 Step1: Create an SSH key and copy the public key to every node (including the master itself), so Ansible can connect over SSH without a username and password.

ssh-keygen

for host in master.192.168.31.182.nip.io \
    compute.192.168.31.144.nip.io \
    infra.192.168.31.94.nip.io; do
  ssh-copy-id -i ~/.ssh/id_rsa.pub "$host"
done
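To avoid the interactive prompts, the key can be generated with ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa (empty passphrase), and the copy loop wrapped so a host listed twice is only processed once. This is a sketch; copy_key_to_hosts and the SSH_PUBKEY and SSH_COPY_ID variables are hypothetical.

```shell
# Hypothetical helper: copy a public key to each distinct host once.
# SSH_PUBKEY overrides the key path; SSH_COPY_ID overrides the command
# (for example "echo" for a dry run).
copy_key_to_hosts() {
  local key="${SSH_PUBKEY:-$HOME/.ssh/id_rsa.pub}"
  local host
  # sort -u drops duplicate host names. A for loop is used instead of
  # "while read" because ssh-copy-id (via ssh) may consume stdin.
  for host in $(printf '%s\n' "$@" | sort -u); do
    ${SSH_COPY_ID:-ssh-copy-id} -i "$key" "$host" || return 1
  done
}
```

Usage: copy_key_to_hosts master.192.168.31.182.nip.io compute.192.168.31.144.nip.io infra.192.168.31.94.nip.io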

📗 Step2: After all machines have booted, check connectivity with ping, from the machines themselves and from an external machine's terminal

ping google.com

ping master.192.168.31.182.nip.io

ping compute.192.168.31.144.nip.io

ping infra.192.168.31.94.nip.io

Expected results:

VM to VM: OK

Host to VM: OK

www.google.com: OK
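ping only shows that a name resolves somewhere. Since a nip.io name embeds its own IP, you can also check that each name resolves to the expected address. A sketch with hypothetical function names; it uses getent, which follows the system lookup order from /etc/nsswitch.conf (normally /etc/hosts, then DNS).

```shell
# Hypothetical helper: print the IPv4 address embedded in a nip.io name,
# e.g. master.192.168.31.182.nip.io -> 192.168.31.182 (empty if none).
nipio_ip() {
  printf '%s\n' "$1" |
    sed -nE 's/^(.*\.)?([0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3})\.nip\.io\.?$/\2/p'
}

# Compare the resolver's first IPv4 answer with the embedded address.
check_nipio_resolution() {
  local name expected actual rc=0
  for name in "$@"; do
    expected="$(nipio_ip "$name")"
    actual="$(getent ahostsv4 "$name" | awk 'NR == 1 { print $1 }')"
    if [ -n "$expected" ] && [ "$actual" = "$expected" ]; then
      echo "ok   $name -> $actual"
    else
      echo "FAIL $name -> '${actual}' (expected '${expected}')"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: check_nipio_resolution master.192.168.31.182.nip.io compute.192.168.31.144.nip.io infra.192.168.31.94.nip.io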

📗 Step3: Install Ansible 2.7 on the master node

rpm -Uvh https://releases.ansible.com/ansible/rpm/release/epel-7-x86_64/ansible-2.7.10-1.el7.ans.noarch.rpm

ansible --version
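A quick sanity check that ansible --version reports the 2.7.x release installed above. It only inspects the version string; ansible_is_2_7 is a hypothetical helper.

```shell
# Hypothetical helper: succeed only if the first line of the given
# `ansible --version` output reports a 2.7.x release.
ansible_is_2_7() {
  local first_line re='^ansible[[:space:]]+2\.7\.[0-9]+'
  first_line="$(printf '%s\n' "$1" | head -n 1)"
  [[ "$first_line" =~ $re ]]
}
```

Usage: ansible_is_2_7 "$(ansible --version)" || echo "unexpected Ansible version"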

📗 Step4: Clone the openshift-ansible repository and switch to the release-3.11 branch

git clone https://github.com/openshift/openshift-ansible.git

cd openshift-ansible && git fetch && git checkout release-3.11

📗 Step5: Create the Ansible inventory file hosts.ini inside the openshift-ansible directory:

# Create an OSEv3 group that contains the masters, nodes, and etcd groups

[OSEv3:children]

masters

nodes

etcd

# Set variables common for all OSEv3 hosts

[OSEv3:vars]

# SSH user, this user should allow ssh based auth without requiring a password

ansible_ssh_user=root

# If ansible_ssh_user is not root, ansible_become must be set to true

ansible_become=true

openshift_master_default_subdomain=app.192.168.31.94.nip.io

deployment_type=origin

[nodes:vars]

openshift_disable_check=disk_availability,memory_availability,docker_storage

[masters:vars]

openshift_disable_check=disk_availability,memory_availability,docker_storage

# enable htpasswd authentication; without this, the default is DenyAllPasswordIdentityProvider

openshift_master_identity_providers=[{'name': 'htpasswd_auth', 'login': 'true', 'challenge': 'true', 'kind': 'HTPasswdPasswordIdentityProvider'}]

# host group for masters

[masters]

192.168.31.182

# host group for etcd

[etcd]

192.168.31.182

# host group for nodes, includes region info

[nodes]

192.168.31.182 openshift_node_group_name='node-config-master'

192.168.31.144 openshift_node_group_name='node-config-compute'

192.168.31.94 openshift_node_group_name='node-config-infra'

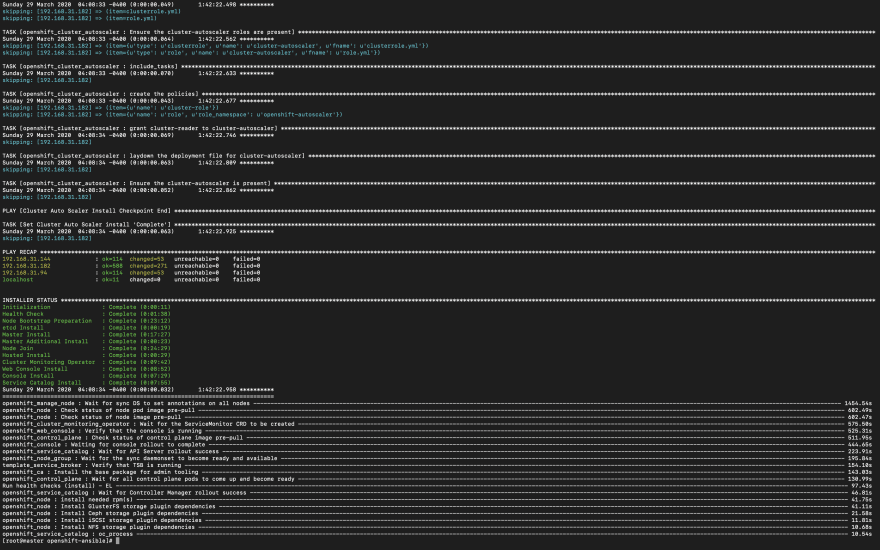
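If your IP addresses differ from the examples, the inventory above can be regenerated from three values instead of edited by hand. A sketch that writes the same settings with most comments dropped; write_okd_inventory and its argument order are hypothetical.

```shell
# Hypothetical helper: write the hosts.ini shown above for the given IPs.
# Usage: write_okd_inventory <file> <master_ip> <compute_ip> <infra_ip>
write_okd_inventory() {
  local out="$1" master_ip="$2" compute_ip="$3" infra_ip="$4"
  cat > "$out" <<EOF
[OSEv3:children]
masters
nodes
etcd

[OSEv3:vars]
ansible_ssh_user=root
ansible_become=true
# Wildcard app subdomain points at the infra node (where the router runs).
openshift_master_default_subdomain=app.${infra_ip}.nip.io
deployment_type=origin

[nodes:vars]
openshift_disable_check=disk_availability,memory_availability,docker_storage

[masters:vars]
openshift_disable_check=disk_availability,memory_availability,docker_storage
openshift_master_identity_providers=[{'name': 'htpasswd_auth', 'login': 'true', 'challenge': 'true', 'kind': 'HTPasswdPasswordIdentityProvider'}]

[masters]
${master_ip}

[etcd]
${master_ip}

[nodes]
${master_ip} openshift_node_group_name='node-config-master'
${compute_ip} openshift_node_group_name='node-config-compute'
${infra_ip} openshift_node_group_name='node-config-infra'
EOF
}
```

Usage, inside the openshift-ansible directory: write_okd_inventory hosts.ini 192.168.31.182 192.168.31.144 192.168.31.94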

📗 Step6: Run the Ansible playbooks with the hosts.ini inventory, from the openshift-ansible directory

ansible-playbook -i hosts.ini playbooks/prerequisites.yml

ansible-playbook -i hosts.ini playbooks/deploy_cluster.yml

Note: If prerequisites.yml finishes without errors, you're ready to run deploy_cluster.yml. Some hours later, you should see this screen. I hope your face will be glowing like mine! 🤩

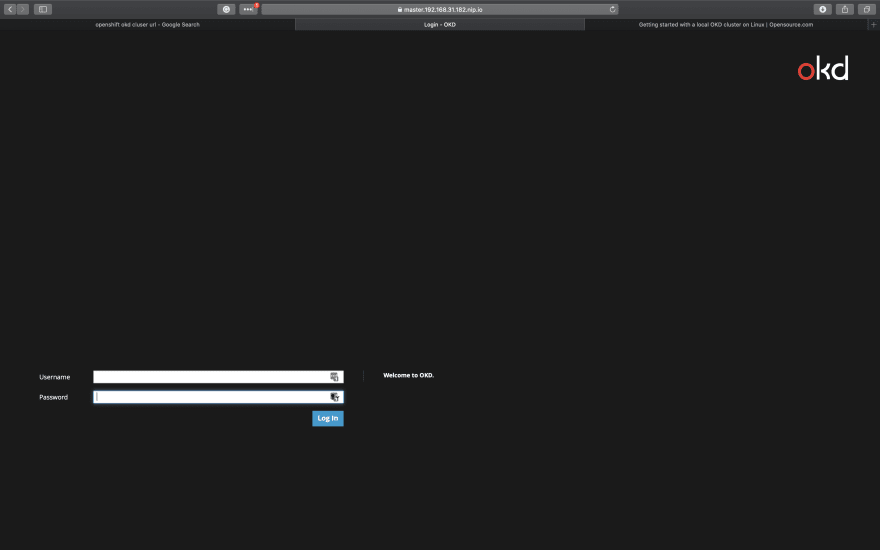
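Because deploy_cluster.yml should only run after prerequisites.yml succeeds, the two commands can be chained. A sketch with a hypothetical wrapper, run from the openshift-ansible directory; the OKD_LOG and ANSIBLE_PLAYBOOK variables are assumptions for this example, and output goes to a log file rather than the terminal.

```shell
# Hypothetical wrapper: run prerequisites.yml, then start the long
# deploy_cluster.yml run only if the first playbook succeeded.
# OKD_LOG sets the log file; ANSIBLE_PLAYBOOK overrides the command.
run_okd_install() {
  local inventory="${1:-hosts.ini}"
  local log="${OKD_LOG:-okd-install.log}"
  local pb
  for pb in playbooks/prerequisites.yml playbooks/deploy_cluster.yml; do
    echo "==> running ${pb} (log: ${log})"
    if ! ${ANSIBLE_PLAYBOOK:-ansible-playbook} -i "$inventory" "$pb" >> "$log" 2>&1; then
      echo "failed: ${pb}, see ${log}" >&2
      return 1
    fi
  done
}
```

Usage: run_okd_install hosts.ini, then tail -f okd-install.log in a second terminal to follow progress.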

📗 Step7: Create an admin user so you can log in to the web console; oc status also shows the web console URL

htpasswd -c /etc/origin/master/htpasswd admin

oc status

Note: Log in to the web console with the admin credentials you just created.

After about 5 hours (this depends on internet speed and node resources), I successfully installed a private cloud on an open-source container application platform using CentOS 7 with 3 nodes. It's lots of fun. Errors can occur if your network connection is poor or the machines don't have enough resources (CPU, RAM).

👌 Congratulations, and thanks for your time and passion.

Feel free to comment if you have any issues or questions.

Top comments (3)

I'm getting the error below! I've tried several things and nothing worked!!

TASK [openshift_node_group : Copy templates to temp directory] **********************************************

changed: [10.0.0.20] => (item=/root/openshift-ansible/roles/openshift_node_group/files/sync-policy.yaml)

changed: [10.0.0.20] => (item=/root/openshift-ansible/roles/openshift_node_group/files/sync.yaml)

changed: [10.0.0.20] => (item=/root/openshift-ansible/roles/openshift_node_group/files/sync-images.yaml)

TASK [openshift_node_group : Update the image tag] **********************************************************

changed: [10.0.0.20]

TASK [openshift_node_group : Ensure the service account can run privileged] *********************************

ok: [10.0.0.20]

TASK [openshift_node_group : Remove the image stream tag] ***************************************************

changed: [10.0.0.20]

TASK [openshift_node_group : Remove existing pods if present] ***********************************************

ok: [10.0.0.20]

TASK [openshift_node_group : Apply the config] **************************************************************

changed: [10.0.0.20]

TASK [openshift_node_group : Remove temp directory] *********************************************************

ok: [10.0.0.20]

TASK [openshift_node_group : Wait for the sync daemonset to become ready and available] *********************

FAILED - RETRYING: Wait for the sync daemonset to become ready and available (69 retries

FAILED - RETRYING: Wait for the sync daemonset to become ready and available (2 retries left).

FAILED - RETRYING: Wait for the sync daemonset to become ready and available (1 retries left).

fatal: [10.0.0.20]: FAILED! => {"attempts": 80, "changed": false, "module_results": {"cmd": "/usr/bin/oc get daemonset sync -o json -n openshift-node", "results": [{"apiVersion": "extensions/v1beta1", "kind": "DaemonSet", "metadata": {"annotations": {"image.openshift.io/triggers": "[\n {\"from\":{\"kind\":\"ImageStreamTag\",\"name\":\"node:v3.11\"},\"fieldPath\":\"spec.template.spec.containers[?(@.name==\\"sync\\")].image\"}\n]\n", "kubectl.kubernetes.io/last-applied-configuration": "{\"apiVersion\":\"apps/v1\",\"kind\":\"DaemonSet\",\"metadata\":{\"annotations\":{\"image.openshift.io/triggers\":\"[\n {\\"from\\":{\\"kind\\":\\"ImageStreamTag\\",\\"name\\":\\"node:v3.11\\"},\\"fieldPath\\":\\"spec.template.spec.containers[?(@.name==\\\\"sync\\\\")].image\\"}\n]\n\",\"kubernetes.io/description\":\"This daemon set provides dynamic configuration of nodes and relabels nodes as appropriate.\n\"},\"name\":\"sync\",\"namespace\":\"openshift-node\"},\"spec\":{\"selector\":{\"matchLabels\":{\"app\":\"sync\"}},\"template\":{\"metadata\":{\"annotations\":{\"scheduler.alpha.kubernetes.io/critical-pod\":\"\"},\"labels\":{\"app\":\"sync\",\"component\":\"network\",\"openshift.io/component\":\"sync\",\"type\":\"infra\"}},\"spec\":{\"containers\":[{\"command\":[\"/bin/bash\",\"-c\",\"#!/bin/bash\nset -euo pipefail\n\nfunction md5()\n{\n local md5result=($(md5sum $1))\n echo \\"$md5result\\"\n}\n\n# set by the node image\nunset KUBECONFIG\n\ntrap 'kill $(jobs -p); exit 0' TERM\n\n# track the current state of the config\nif [[ -f /etc/origin/node/node-config.yaml ]]; then\n md5 /etc/origin/node/node-config.yaml \u003e /tmp/.old\nelse\n touch /tmp/.old\nfi\n\nif [[ -f /etc/origin/node/volume-config.yaml ]]; then\n md5 /etc/origin/node/volume-config.yaml \u003e /tmp/.old-volume.config\nelse\n touch /tmp/.old-volume-config\nfi\n\n# loop until BOOTSTRAP_CONFIG_NAME is set\nwhile true; do\n file=/etc/sysconfig/origin-node\n if [[ -f /etc/sysconfig/atomic-openshift-node ]]; then\n 
file=/etc/sysconfig/atomic-openshift-node\n elif [[ -f /etc/sysconfig/origin-node ]]; then\n file=/etc/sysconfig/origin-node\n else\n echo \\"info: Waiting for the node sysconfig file to be created\\" 2\u003e\u00261\n sleep 15 \u0026 wait\n continue\n fi\n name=\\"$(sed -nE 's|^BOOTSTRAP_CONFIG_NAME=([^#].+)|\\1|p' \\"${file}\\" | head -1)\\"\n if [[ -z \\"${name}\\" ]]; then\n echo \\"info: Waiting for BOOTSTRAP_CONFIG_NAME to be set\\" 2\u003e\u00261\n sleep 15 \u0026 wait\n continue\n fi\n # in the background check to see if the value changes and exit if so\n pid=$BASHPID\n (\n while true; do\n if ! updated=\\"$(sed -nE 's|^BOOTSTRAP_CONFIG_NAME=([^#].+)|\\1|p' \\"${file}\\" | head -1)\\"; then\n echo \\"error: Unable to check for bootstrap config, exiting\\" 2\u003e\u00261\n kill $pid\n exit 1\n fi\n if [[ \\"${updated}\\" != \\"${name}\\" ]]; then\n echo \\"info: Bootstrap configuration profile name changed, exiting\\" 2\u003e\u00261\n kill $pid\n exit 0\n fi\n sleep 15\n done\n ) \u0026\n break\ndone\nmkdir -p /etc/origin/node/tmp\n# periodically refresh both node-config.yaml and relabel the node\nwhile true; do\n if ! oc extract \\"configmaps/${name}\\" -n openshift-node --to=/etc/origin/node/tmp --confirm --request-timeout=10s --config /etc/origin/node/node.kubeconfig \\"--token=$( cat /var/run/secrets/kubernetes.io/serviceaccount/token )\\" \u003e /dev/null; then\n echo \\"error: Unable to retrieve latest config for node\\" 2\u003e\u00261\n sleep 15 \u0026\n wait $!\n continue\n fi\n\n # does the openshift-ca.crt exist\n oldca=/etc/pki/ca-trust/source/anchors/openshift-ca.crt\n newca=/run/secrets/kubernetes.io/serviceaccount/ca.crt\n if ! cmp -s \\"${oldca}\\" \\"${newca}\\" ; then\n cp \\"${newca}\\" \\"${oldca}\\"\n update-ca-trust extract\n fi\n\n KUBELET_HOSTNAME_OVERRIDE=$(cat /etc/sysconfig/KUBELET_HOSTNAME_OVERRIDE 2\u003e/dev/null) || :\n if ! 
[[ -z \\"$KUBELET_HOSTNAME_OVERRIDE\\" ]]; then\n #Patching node-config for hostname override\n echo \\"nodeName: $KUBELET_HOSTNAME_OVERRIDE\\" \u003e\u003e /etc/origin/node/tmp/node-config.yaml\n fi\n\n # detect whether the node-config.yaml or volume-config.yaml has changed, and if so trigger a restart of the kubelet.\n if [[ ! -f /etc/origin/node/node-config.yaml ]]; then\n cat /dev/null \u003e /tmp/.old\n fi\n\n if [[ ! -f /etc/origin/node/volume-config.yaml ]]; then\n cat /dev/null \u003e /tmp/.old-volume-config\n fi\n md5 /etc/origin/node/tmp/node-config.yaml \u003e /tmp/.new\n\n if [[ ! -f /etc/origin/node/tmp/volume-config.yaml ]]; then\n cat /dev/null \u003e /tmp/.new-volume-config\n else\n md5 /etc/origin/node/tmp/volume-config.yaml \u003e /tmp/.new-volume-config\n fi\n\n trigger_restart=false\n if [[ \\"$( cat /tmp/.old )\\" != \\"$( cat /tmp/.new )\\" ]]; then\n mv /etc/origin/node/tmp/node-config.yaml /etc/origin/node/node-config.yaml\n trigger_restart=true\n fi\n\n if [[ \\"$( cat /tmp/.old-volume-config )\\" != \\"$( cat /tmp/.new-volume-config )\\" ]]; then\n mv /etc/origin/node/tmp/volume-config.yaml /etc/origin/node/volume-config.yaml\n trigger_restart=true\n fi\n\n if [[ \\"$trigger_restart\\" = true ]]; then\n SYSTEMD_IGNORE_CHROOT=1 systemctl restart tuned || :\n echo \\"info: Configuration changed, restarting kubelet\\" 2\u003e\u00261\n # TODO: kubelet doesn't relabel nodes, best effort for now\n # github.com/kubernetes/kubernetes/i... if args=\\"$(openshift-node-config --config /etc/origin/node/node-config.yaml)\\"; then\n labels=$(tr ' ' '\\n' \u003c\u003c\u003c$args | sed -ne '/^--node-labels=/ { s/^--node-labels=//; p; }' | tr ',\\n' ' ')\n if [[ -n \\"${labels}\\" ]]; then\n echo \\"info: Applying node labels $labels\\" 2\u003e\u00261\n if ! 
oc label --config=/etc/origin/node/node.kubeconfig \\"node/${NODE_NAME}\\" ${labels} --overwrite; then\n echo \\"error: Unable to apply labels, will retry in 10\\" 2\u003e\u00261\n sleep 10 \u0026\n wait $!\n continue\n fi\n fi\n else\n echo \\"error: The downloaded node configuration is invalid, retrying later\\" 2\u003e\u00261\n sleep 10 \u0026\n wait $!\n continue\n fi\n if ! pkill -U 0 -f '(^|/)hyperkube kubelet '; then\n echo \\"error: Unable to restart Kubelet\\" 2\u003e\u00261\n sleep 10 \u0026\n wait $!\n continue\n fi\n fi\n # annotate node with md5sum of the config\n oc annotate --config=/etc/origin/node/node.kubeconfig \\"node/${NODE_NAME}\\" \\\n node.openshift.io/md5sum=\\"$( cat /tmp/.new )\\" --overwrite\n cp -f /tmp/.new /tmp/.old\n cp -f /tmp/.new-volume-config /tmp/.old-volume-config\n sleep 180 \u0026\n wait $!\ndone\n\"],\"env\":[{\"name\":\"NODE_NAME\",\"valueFrom\":{\"fieldRef\":{\"fieldPath\":\"spec.nodeName\"}}}],\"image\":\" \",\"name\":\"sync\",\"securityContext\":{\"privileged\":true,\"runAsUser\":0},\"volumeMounts\":[{\"mountPath\":\"/etc/origin/node/\",\"name\":\"host-config\"},{\"mountPath\":\"/etc/sysconfig\",\"name\":\"host-sysconfig-node\",\"readOnly\":true},{\"mountPath\":\"/var/run/dbus\",\"name\":\"var-run-dbus\",\"readOnly\":true},{\"mountPath\":\"/run/systemd/system\",\"name\":\"run-systemd-system\",\"readOnly\":true},{\"mountPath\":\"/etc/pki\",\"name\":\"host-pki\"},{\"mountPath\":\"/usr/share/pki\",\"name\":\"host-pki-usr\"}]}],\"hostNetwork\":true,\"hostPID\":true,\"priorityClassName\":\"system-node-critical\",\"serviceAccountName\":\"sync\",\"terminationGracePeriodSeconds\":1,\"tolerations\":[{\"operator\":\"Exists\"}],\"volumes\":[{\"hostPath\":{\"path\":\"/etc/origin/node\"},\"name\":\"host-config\"},{\"hostPath\":{\"path\":\"/etc/sysconfig\"},\"name\":\"host-sysconfig-node\"},{\"hostPath\":{\"path\":\"/var/run/dbus\"},\"name\":\"var-run-dbus\"},{\"hostPath\":{\"path\":\"/run/systemd/system\"},\"name\":\"run-systemd-syste
m\"},{\"hostPath\":{\"path\":\"/etc/pki\",\"type\":\"\"},\"name\":\"host-pki\"},{\"hostPath\":{\"path\":\"/usr/share/pki\",\"type\":\"\"},\"name\":\"host-pki-usr\"}]}},\"updateStrategy\":{\"rollingUpdate\":{\"maxUnavailable\":\"50%\"},\"type\":\"RollingUpdate\"}}}\n", "kubernetes.io/description": "This daemon set provides dynamic configuration of nodes and relabels nodes as appropriate.\n"}, "creationTimestamp": "2020-06-18T18:50:14Z", "generation": 1, "name": "sync", "namespace": "openshift-node", "resourceVersion": "641", "selfLink": "/apis/extensions/v1beta1/namespaces/openshift-node/daemonsets/sync", "uid": "8ba1e579-b194-11ea-b899-000c29e336e1"}, "spec": {"revisionHistoryLimit": 10, "selector": {"matchLabels": {"app": "sync"}}, "template": {"metadata": {"annotations": {"scheduler.alpha.kubernetes.io/critical-pod": ""}, "creationTimestamp": null, "labels": {"app": "sync", "component": "network", "openshift.io/component": "sync", "type": "infra"}}, "spec": {"containers": [{"command": ["/bin/bash", "-c", "#!/bin/bash\nset -euo pipefail\n\nfunction md5()\n{\n local md5result=($(md5sum $1))\n echo \"$md5result\"\n}\n\n# set by the node image\nunset KUBECONFIG\n\ntrap 'kill $(jobs -p); exit 0' TERM\n\n# track the current state of the config\nif [[ -f /etc/origin/node/node-config.yaml ]]; then\n md5 /etc/origin/node/node-config.yaml > /tmp/.old\nelse\n touch /tmp/.old\nfi\n\nif [[ -f /etc/origin/node/volume-config.yaml ]]; then\n md5 /etc/origin/node/volume-config.yaml > /tmp/.old-volume.config\nelse\n touch /tmp/.old-volume-config\nfi\n\n# loop until BOOTSTRAP_CONFIG_NAME is set\nwhile true; do\n file=/etc/sysconfig/origin-node\n if [[ -f /etc/sysconfig/atomic-openshift-node ]]; then\n file=/etc/sysconfig/atomic-openshift-node\n elif [[ -f /etc/sysconfig/origin-node ]]; then\n file=/etc/sysconfig/origin-node\n else\n echo \"info: Waiting for the node sysconfig file to be created\" 2>&1\n sleep 15 & wait\n continue\n fi\n name=\"$(sed -nE 
's|^BOOTSTRAP_CONFIG_NAME=([^#].+)|\1|p' \"${file}\" | head -1)\"\n if [[ -z \"${name}\" ]]; then\n echo \"info: Waiting for BOOTSTRAP_CONFIG_NAME to be set\" 2>&1\n sleep 15 & wait\n continue\n fi\n # in the background check to see if the value changes and exit if so\n pid=$BASHPID\n (\n while true; do\n if ! updated=\"$(sed -nE 's|^BOOTSTRAP_CONFIG_NAME=([^#].+)|\1|p' \"${file}\" | head -1)\"; then\n echo \"error: Unable to check for bootstrap config, exiting\" 2>&1\n kill $pid\n exit 1\n fi\n if [[ \"${updated}\" != \"${name}\" ]]; then\n echo \"info: Bootstrap configuration profile name changed, exiting\" 2>&1\n kill $pid\n exit 0\n fi\n sleep 15\n done\n ) &\n break\ndone\nmkdir -p /etc/origin/node/tmp\n# periodically refresh both node-config.yaml and relabel the node\nwhile true; do\n if ! oc extract \"configmaps/${name}\" -n openshift-node --to=/etc/origin/node/tmp --confirm --request-timeout=10s --config /etc/origin/node/node.kubeconfig \"--token=$( cat /var/run/secrets/kubernetes.io/serviceaccount/token )\" > /dev/null; then\n echo \"error: Unable to retrieve latest config for node\" 2>&1\n sleep 15 &\n wait $!\n continue\n fi\n\n # does the openshift-ca.crt exist\n oldca=/etc/pki/ca-trust/source/anchors/openshift-ca.crt\n newca=/run/secrets/kubernetes.io/serviceaccount/ca.crt\n if ! cmp -s \"${oldca}\" \"${newca}\" ; then\n cp \"${newca}\" \"${oldca}\"\n update-ca-trust extract\n fi\n\n KUBELET_HOSTNAME_OVERRIDE=$(cat /etc/sysconfig/KUBELET_HOSTNAME_OVERRIDE 2>/dev/null) || :\n if ! [[ -z \"$KUBELET_HOSTNAME_OVERRIDE\" ]]; then\n #Patching node-config for hostname override\n echo \"nodeName: $KUBELET_HOSTNAME_OVERRIDE\" >> /etc/origin/node/tmp/node-config.yaml\n fi\n\n # detect whether the node-config.yaml or volume-config.yaml has changed, and if so trigger a restart of the kubelet.\n if [[ ! -f /etc/origin/node/node-config.yaml ]]; then\n cat /dev/null > /tmp/.old\n fi\n\n if [[ ! 
-f /etc/origin/node/volume-config.yaml ]]; then\n cat /dev/null > /tmp/.old-volume-config\n fi\n md5 /etc/origin/node/tmp/node-config.yaml > /tmp/.new\n\n if [[ ! -f /etc/origin/node/tmp/volume-config.yaml ]]; then\n cat /dev/null > /tmp/.new-volume-config\n else\n md5 /etc/origin/node/tmp/volume-config.yaml > /tmp/.new-volume-config\n fi\n\n trigger_restart=false\n if [[ \"$( cat /tmp/.old )\" != \"$( cat /tmp/.new )\" ]]; then\n mv /etc/origin/node/tmp/node-config.yaml /etc/origin/node/node-config.yaml\n trigger_restart=true\n fi\n\n if [[ \"$( cat /tmp/.old-volume-config )\" != \"$( cat /tmp/.new-volume-config )\" ]]; then\n mv /etc/origin/node/tmp/volume-config.yaml /etc/origin/node/volume-config.yaml\n trigger_restart=true\n fi\n\n if [[ \"$trigger_restart\" = true ]]; then\n SYSTEMD_IGNORE_CHROOT=1 systemctl restart tuned || :\n echo \"info: Configuration changed, restarting kubelet\" 2>&1\n # TODO: kubelet doesn't relabel nodes, best effort for now\n # github.com/kubernetes/kubernetes/i... if args=\"$(openshift-node-config --config /etc/origin/node/node-config.yaml)\"; then\n labels=$(tr ' ' '\n' <<<$args | sed -ne '/^--node-labels=/ { s/^--node-labels=//; p; }' | tr ',\n' ' ')\n if [[ -n \"${labels}\" ]]; then\n echo \"info: Applying node labels $labels\" 2>&1\n if ! oc label --config=/etc/origin/node/node.kubeconfig \"node/${NODE_NAME}\" ${labels} --overwrite; then\n echo \"error: Unable to apply labels, will retry in 10\" 2>&1\n sleep 10 &\n wait $!\n continue\n fi\n fi\n else\n echo \"error: The downloaded node configuration is invalid, retrying later\" 2>&1\n sleep 10 &\n wait $!\n continue\n fi\n if ! 
pkill -U 0 -f '(^|/)hyperkube kubelet '; then\n echo \"error: Unable to restart Kubelet\" 2>&1\n sleep 10 &\n wait $!\n continue\n fi\n fi\n # annotate node with md5sum of the config\n oc annotate --config=/etc/origin/node/node.kubeconfig \"node/${NODE_NAME}\" \\n node.openshift.io/md5sum=\"$( cat /tmp/.new )\" --overwrite\n cp -f /tmp/.new /tmp/.old\n cp -f /tmp/.new-volume-config /tmp/.old-volume-config\n sleep 180 &\n wait $!\ndone\n"], "env": [{"name": "NODE_NAME", "valueFrom": {"fieldRef": {"apiVersion": "v1", "fieldPath": "spec.nodeName"}}}], "image": " ", "imagePullPolicy": "IfNotPresent", "name": "sync", "resources": {}, "securityContext": {"privileged": true, "runAsUser": 0}, "terminationMessagePath": "/dev/termination-log", "terminationMessagePolicy": "File", "volumeMounts": [{"mountPath": "/etc/origin/node/", "name": "host-config"}, {"mountPath": "/etc/sysconfig", "name": "host-sysconfig-node", "readOnly": true}, {"mountPath": "/var/run/dbus", "name": "var-run-dbus", "readOnly": true}, {"mountPath": "/run/systemd/system", "name": "run-systemd-system", "readOnly": true}, {"mountPath": "/etc/pki", "name": "host-pki"}, {"mountPath": "/usr/share/pki", "name": "host-pki-usr"}]}], "dnsPolicy": "ClusterFirst", "hostNetwork": true, "hostPID": true, "priorityClassName": "system-node-critical", "restartPolicy": "Always", "schedulerName": "default-scheduler", "securityContext": {}, "serviceAccount": "sync", "serviceAccountName": "sync", "terminationGracePeriodSeconds": 1, "tolerations": [{"operator": "Exists"}], "volumes": [{"hostPath": {"path": "/etc/origin/node", "type": ""}, "name": "host-config"}, {"hostPath": {"path": "/etc/sysconfig", "type": ""}, "name": "host-sysconfig-node"}, {"hostPath": {"path": "/var/run/dbus", "type": ""}, "name": "var-run-dbus"}, {"hostPath": {"path": "/run/systemd/system", "type": ""}, "name": "run-systemd-system"}, {"hostPath": {"path": "/etc/pki", "type": ""}, "name": "host-pki"}, {"hostPath": {"path": "/usr/share/pki", "type": 
""}, "name": "host-pki-usr"}]}}, "templateGeneration": 1, "updateStrategy": {"rollingUpdate": {"maxUnavailable": "50%"}, "type": "RollingUpdate"}}, "status": {"currentNumberScheduled": 0, "desiredNumberScheduled": 0, "numberMisscheduled": 0, "numberReady": 0}}], "returncode": 0}, "state": "list"}

NO MORE HOSTS LEFT ******************************************************************************************

to retry, use: --limit @/root/openshift-ansible/playbooks/deploy_cluster.retry

PLAY RECAP **************************************************************************************************

10.0.0.20 : ok=433 changed=88 unreachable=0 failed=1

10.0.0.22 : ok=106 changed=16 unreachable=0 failed=0

10.0.0.23 : ok=106 changed=16 unreachable=0 failed=0

localhost : ok=11 changed=0 unreachable=0 failed=0

INSTALLER STATUS ********************************************************************************************

Initialization : Complete (0:00:55)

Health Check : Complete (0:00:41)

Node Bootstrap Preparation : Complete (0:03:18)

etcd Install : Complete (0:01:06)

Master Install : In Progress (0:20:33)

This phase can be restarted by running: playbooks/openshift-master/config.yml

Failure summary:

Have you solved that? I encountered the same problem and have tried many solutions.

Thank you so much. It is working after changing it to openshift_node_group_name='node-config-master-infra'.