Introduction

Demand for processing power keeps growing. From scientific research and complex simulations to data analytics and artificial intelligence, many fields rely on high-performance computing (HPC) to tackle problems that are too large for a single machine. At the core of HPC lies the HPC cluster, a system designed to harness the collective power of multiple interconnected computing resources. In this article, we look at HPC clusters: their components, how they operate, the challenges they face, and the latest trends shaping the industry.

What is an HPC Cluster?

An HPC cluster refers to a collection of interconnected computing nodes, working collaboratively to perform complex computations and handle massive amounts of data. It leverages parallel processing techniques, dividing tasks into smaller sub-tasks that can be executed simultaneously across multiple computing nodes. By combining the processing power of individual nodes, HPC clusters enable researchers, scientists, engineers, and organizations to tackle computationally demanding workloads efficiently.
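To make the idea of dividing a task into sub-tasks concrete, here is a minimal single-machine sketch. It is not how a real cluster is programmed: worker threads stand in for computing nodes, and the function names (`split_range`, `partial_sum_of_squares`, `parallel_sum_of_squares`) are made up for this illustration. On an actual cluster the sub-tasks would run as separate processes on separate machines, typically through a framework such as MPI.

```python
from concurrent.futures import ThreadPoolExecutor


def split_range(n, parts):
    """Split range(n) into `parts` contiguous (start, stop) chunks."""
    step, extra = divmod(n, parts)
    chunks, start = [], 0
    for i in range(parts):
        # The first `extra` chunks get one additional element each.
        stop = start + step + (1 if i < extra else 0)
        chunks.append((start, stop))
        start = stop
    return chunks


def partial_sum_of_squares(chunk):
    """Sub-task: the work one 'node' would do on its own slice."""
    start, stop = chunk
    return sum(i * i for i in range(start, stop))


def parallel_sum_of_squares(n, workers=4):
    """Divide the task, run the sub-tasks concurrently, combine the results."""
    chunks = split_range(n, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    return sum(partials)
```

The pattern is the same at any scale: split the input, compute partial results independently, then combine them in a final reduction step.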

Main Components of an HPC Cluster:

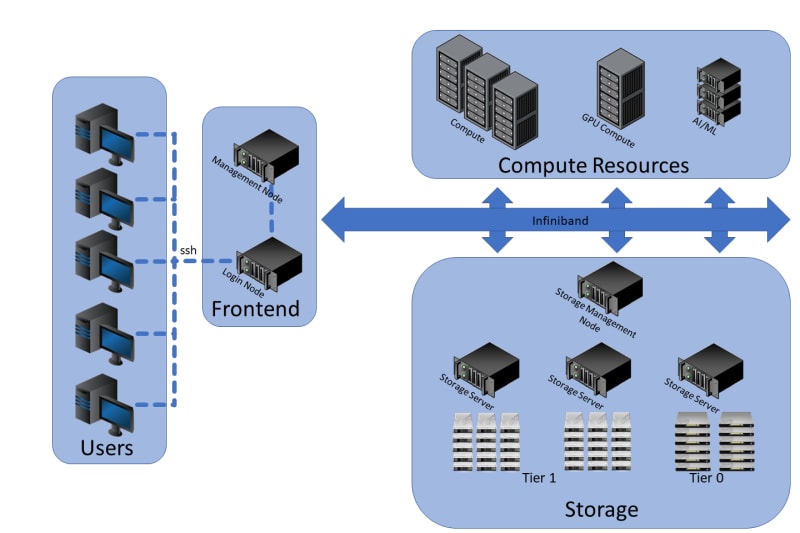

- Login Node: A login node is a dedicated computing node within an HPC cluster that serves as the entry point for users to access and interact with the cluster. It acts as a gateway or interface between users and the rest of the cluster's resources. Users typically connect to the login node through secure shell (SSH) or other remote login protocols.

- Head Node: The head node, also known as the control node or management node, is another key component in an HPC cluster. It is responsible for overseeing and coordinating the cluster's operation, managing various system-level tasks, and facilitating communication between different components of the cluster.

- Computing Nodes: These are individual machines within the cluster, often equipped with multicore processors, high-speed memory, and specialized accelerators such as GPUs (Graphics Processing Units) or TPUs (Tensor Processing Units).

- Network Fabric: The network fabric connects the computing nodes, enabling communication and data transfer between them. High-bandwidth, low-latency interconnects such as InfiniBand or high-speed Ethernet are commonly used to facilitate efficient data exchange and synchronization.

- Storage Infrastructure: HPC clusters require robust storage systems capable of handling massive datasets. This may include high-performance parallel file systems, distributed storage solutions, or cloud-based storage.

- Scheduler and Resource Manager: To optimize resource allocation and workload management, an HPC cluster relies on a scheduler and resource manager. These components ensure that computing resources are efficiently utilized and that jobs are executed in a timely manner.

How Do HPC Cluster Systems Work?

HPC clusters leverage parallelism to distribute computational tasks across multiple computing nodes, enabling faster processing and increased efficiency. The workflow typically involves the following steps:

- Job Submission: Users submit computational tasks, often referred to as jobs, to the cluster's scheduler.

- Resource Allocation: The scheduler analyzes the available resources, taking into account factors such as job priority, resource requirements, and system load, to allocate the necessary computing nodes and storage resources.

- Job Execution: Once resources are allocated, the scheduler initiates the execution of the job on the designated nodes. The nodes work in parallel, processing their respective portions of the workload.

- Data Management: HPC clusters often employ distributed file systems or object storage solutions to handle large datasets. These systems enable efficient data access and sharing among the computing nodes.

- Job Monitoring and Completion: Throughout the job execution process, the scheduler monitors the progress and resource utilization. Once the job is completed, the results are returned to the user or stored for further analysis.
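The submission, allocation, execution, and completion steps above can be sketched with a toy scheduler. This is an illustration under simplifying assumptions, not the behavior of any real product: the `ToyScheduler` class and its methods are hypothetical, lower priority numbers run first, and a job that does not fit blocks everything queued behind it. Production schedulers such as Slurm add features like backfill and fair-share policies that are left out here.

```python
import heapq


class ToyScheduler:
    """Strict-priority scheduler over a pool of identical nodes (illustrative only)."""

    def __init__(self, total_nodes):
        self.free_nodes = total_nodes
        self._queue = []    # heap of (priority, submit order, name, nodes needed)
        self._seq = 0       # tie-breaker so equal priorities run in submit order
        self.running = {}   # job name -> number of nodes it holds

    def submit(self, name, nodes_needed, priority=0):
        """Job submission: place the job in the pending queue."""
        heapq.heappush(self._queue, (priority, self._seq, name, nodes_needed))
        self._seq += 1

    def schedule(self):
        """Resource allocation: start queued jobs while the next one fits."""
        started = []
        while self._queue and self._queue[0][3] <= self.free_nodes:
            _, _, name, nodes = heapq.heappop(self._queue)
            self.free_nodes -= nodes
            self.running[name] = nodes
            started.append(name)
        return started

    def complete(self, name):
        """Job completion: release the nodes the job was holding."""
        self.free_nodes += self.running.pop(name)
```

A short usage example: on a 4-node cluster, submitting `sim` (3 nodes, priority 1), `train` (2 nodes, priority 0), and `post` (1 node, priority 2) and calling `schedule()` starts only `train`, because `sim` is next in line and needs more nodes than remain free. After `complete("train")`, the next `schedule()` call starts `sim` and then `post`.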

Challenges in HPC Clusters Today:

While HPC clusters offer immense computational power, they also face several challenges:

- Scalability: As workloads become increasingly complex and data-intensive, scaling HPC clusters to handle larger and more diverse tasks can be challenging. Balancing computational resources, minimizing communication overhead, and optimizing performance at scale require careful system design and management.

- Energy Efficiency: HPC clusters consume substantial amounts of power, leading to high operational costs and environmental impact. Improving energy efficiency is a critical challenge, necessitating advancements in hardware, cooling technologies, and power management strategies.

- Data Management: With the explosive growth of data, efficiently managing, accessing, and processing large datasets poses significant challenges. Storage infrastructure must keep pace with the ever-increasing demand for performance and capacity.

- Complexity: HPC clusters are complex systems that require a high level of expertise to design, build, and manage. This makes qualified personnel hard to find and hire, and it makes problems harder to troubleshoot when they occur.

- Cost: HPC clusters are expensive to purchase and maintain. Hardware, software, and networking equipment all require significant investment, and ongoing energy consumption adds to the operating cost.
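One common way to reason about the scalability challenge is Amdahl's law, which the article does not name but which illustrates why adding nodes gives diminishing returns. The sketch below assumes a workload where a fixed fraction of the runtime can be parallelized perfectly and the rest is serial; it ignores communication overhead, so real speedups are usually lower. The function names are chosen for this example.

```python
def amdahl_speedup(parallel_fraction, nodes):
    """Ideal speedup on `nodes` nodes when `parallel_fraction` of the
    single-node runtime can be parallelized (Amdahl's law)."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / nodes)


def parallel_efficiency(parallel_fraction, nodes):
    """Speedup per node: 1.0 means every added node is fully useful."""
    return amdahl_speedup(parallel_fraction, nodes) / nodes
```

For example, with 95% of the work parallelizable, the speedup can never exceed 1 / 0.05 = 20, no matter how many nodes are added, and the efficiency per node falls steadily as the cluster grows.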

Latest Trends in the HPC Cluster Industry:

The HPC cluster industry is continuously evolving, with several exciting trends shaping its future:

- Convergence of HPC and AI: HPC clusters are increasingly being integrated with artificial intelligence (AI) frameworks, enabling the training and deployment of AI models on large-scale computing infrastructures. This convergence unlocks new possibilities for scientific discovery, deep learning, and data-driven insights.

- Hybrid and Cloud Computing: Hybrid architectures, combining on-premises HPC clusters with cloud-based resources, provide flexibility and scalability. Organizations can leverage the cloud for bursting workloads, accessing specialized hardware, and managing peak demands, while still maintaining control over critical data and infrastructure.

- Exascale Computing: The race toward exascale computing, meaning systems capable of a quintillion (10^18) floating-point operations per second, is gaining momentum. HPC clusters are being developed to achieve unprecedented performance levels, enabling breakthroughs in areas such as climate modeling, drug discovery, and cosmology.
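To get a feel for what a quintillion operations per second means, the following back-of-the-envelope sketch compares ideal runtimes at petascale and exascale rates. The workload size is a made-up figure for illustration, and the calculation assumes the machine sustains its full rate for the whole run, which real applications rarely achieve.

```python
PETA = 10**15  # operations per second at petascale
EXA = 10**18   # operations per second at exascale (a quintillion)


def ideal_runtime_seconds(total_operations, ops_per_second):
    """Runtime if the system sustained `ops_per_second` with no overhead."""
    return total_operations / ops_per_second


# Hypothetical workload of 10**21 operations (an assumption, not a real benchmark).
workload = 10**21
petascale_seconds = ideal_runtime_seconds(workload, PETA)
exascale_seconds = ideal_runtime_seconds(workload, EXA)
```

Under these assumptions the workload takes a million seconds (roughly 11.6 days) at petascale and a thousand seconds (under 17 minutes) at exascale.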

Conclusion:

HPC clusters are the backbone of modern scientific and computational research, offering the processing power required to solve complex problems. Understanding their components, how they work, and the challenges and trends in the industry is essential for researchers, engineers, and organizations looking to unlock the full potential of high-performance computing. Stay tuned for the next article, where we will talk about the stages of building an HPC cluster.
