Originally posted on matt.si

In July I attended this year's Open Rights Group Conference, a one-day, multi-track conference organised by the Open Rights Group (ORG). ORG is the UK's biggest digital rights advocacy group, campaigning on issues like surveillance, net neutrality and censorship. Despite being a member for many years, this was only the second time I'd attended an ORGCon, and it was honestly one of the best tech-related events I've been to all year. It's not exclusively for techies; on the contrary, I was in the minority, with many more journalists, activists and lawyers there. I'll dive into what made it great, describing each fascinating session I attended.

Session one- Keynote by Edward Snowden

Image from @greekemmy

For the first session of the day, American whistleblower Edward Snowden gave a keynote speech followed by a Q&A, via video call from Russia. No-one in the room missed the irony of relying on Google's Hangouts service to talk at a digital rights conference, and the audio was appropriately unreliable. In spite of that, Snowden delivered an enlightening, inspiring speech. Discussing his whistleblowing, he made an interesting clarification: his motivation was about democracy, not surveillance. What disturbed him more was not the Orwellian surveillance of hundreds of millions of innocent people, but the fact that none of them had agreed to it. There had been no debate and no chance to object before the policy was implemented. Other highlights from the session:

- He heavily criticised the Ghost Proposal, a GCHQ proposal that would give the government a way to read our encrypted communications [1]. In particular, he pointed to how the NSA lost control of some of its most valuable digital assets, which malicious actors then used to cause huge amounts of damage [2], and questioned whether the "rinky-dink UK government" (with tongue firmly in cheek, I'm sure) would be able to protect a set of keys that anyone could use to decrypt millions of people's communications.

- "The law does not protect society; society protects the law." Snowden argued that laws are tools, effective only as long as conscientious citizens use and uphold them.

- He further argued that technology is another tool that, like law, can and should be used to enforce human rights. One obvious example is encryption: it should surely be illegal to eavesdrop on your communications without due process, but encrypting your communications is another way to enforce that principle.

- He ended his speech on an inspiring note: “We will lose this year and the next one, but we will win: it’s a way of life, a system of beliefs that will not go away, and what we did to resist governmental abuse of surveillance will matter to our children” (paraphrased to the best of my memory).

Session two- "How Can I Trust What I See Online?" panel from Teen AI

Image from Acorn Aspirations

This panel consisted of four teenagers from Teen AI who've been studying computers, security and AI as well as attending various tech events for young people. It was really inspiring to see young people so interested in technology; they were each well ahead of where I'd been at their age. Unfortunately, I've forgotten exactly which panelist said what, having misplaced some notes. Nonetheless, my highlights were:

- One panelist made the point that deepfakes may actually make us trust what we see less, turning us into more skeptical consumers of media. That could be a positive change for society, in contrast to the bleak forecasts we usually associate with deepfake tech.

- One panelist, when asked whether young people need to be taught how to be safe online, responded "No, old people do". They went on to explain that young people tend to be more tech-savvy and more likely to understand what they're looking at, whereas older people may be more susceptible to misleading or malicious content.

- "If we don't understand technology, we will be controlled by people who do." A wonderful response from one of the panelists when discussing their motivations for learning about technology.

- Another panelist said something that was new to me while making the point that technology is not inherently good or bad. I've always thought of facial recognition as an inherently Orwellian and oppressive tool, but they cited a much more positive example: police in New Delhi are using it to locate missing children [3]. This is one of those wonderfully simple yet effective ideas: take pictures of children at orphanages, then use facial recognition to compare them with photos of missing children that their families have posted online. In just the first four days, they identified 3,000 missing children.
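For the curious, here's a rough sketch of the matching step the panelist described. It's a simplified illustration, not how the New Delhi police system actually works: it assumes each photo has already been turned into a numeric "embedding" by some face recognition model, and the vectors, case IDs and the 0.95 threshold below are all invented.

```python
import math

# Hypothetical database: case ID -> face embedding from a missing-child photo.
# Real embeddings come from a trained model and have hundreds of dimensions;
# these 3-D vectors are invented purely for illustration.
MISSING_CHILDREN = {
    "case-001": [0.9, 0.1, 0.3],
    "case-002": [0.2, 0.8, 0.5],
}


def cosine_similarity(a, b):
    """1.0 means the two embeddings point the same way; lower means less alike."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def find_matches(photo_embedding, database, threshold=0.95):
    """Return case IDs scoring at or above `threshold`, best match first.
    The 0.95 default is an arbitrary illustrative value."""
    scored = sorted(
        ((cosine_similarity(photo_embedding, emb), case_id)
         for case_id, emb in database.items()),
        reverse=True,
    )
    return [case_id for score, case_id in scored if score >= threshold]


# Embedding of a new photo taken at an orphanage (also invented).
new_photo = [0.88, 0.12, 0.31]
print(find_matches(new_photo, MISSING_CHILDREN))  # ['case-001']
```

Where the threshold sits is the crux: set it too low and children get matched to the wrong families; set it too high and real matches are missed.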

Session three- Can Tech be Truly Ethical?

Image from David Raho

This was a fantastic session that really began to open my eyes to how complex ethics in technology can get. We had Paul Dourish (University of California), Lilian Edwards (Newcastle University), Ann Light (University of Sussex) and Gina Neff (University of Oxford) discussing the topic, and each demonstrated obvious expertise in the subject. Among numerous enlightening moments were:

- "Ethics washing" was a new term to me. It refers to the tendency of organisations to create an ethics board, often entirely internal with no public oversight or transparency, and then declare themselves ethical. The accusation is that such organisations are trying to escape real scrutiny and regulation by saying "We're ethical now, so there's no need to regulate us".

- Ethical behaviour can be difficult to enforce because of combinatorial complexity. This is an especially significant issue with Internet of Things devices, which fill our homes and interact with one another. You may make sure your software behaves ethically in every scenario you can think of, but it will frequently find itself in situations you hadn't imagined. When a thousand different devices can interact with one another (counting only pairs, that's already 499,500 combinations), we can't predict every interaction ahead of time.

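- This may be a good use case for property-based testing, where you state rules that must always hold and let a tool generate large numbers of random scenarios to check them.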

- One person in the audience was a member of a worker-owned software business, the first time I'd heard of such a thing. It sounds like a great model.

- "How do we stop a raging bull?" While we're clamouring to figure out technology's role in society and how it affects all of us, technology continues to develop rapidly, crossing into new parts of our lives every day. It's a lot easier to start a fire than to put it out; how can ethicists and human rights campaigners avoid always being caught on the back foot?

- It was suggested that we look at business models and the way different revenue sources shape a business's motivations. Right now, huge swathes of the tech industry are profiting from automated surveillance of their users. What revenue sources could offer more ethical motivations?

- It's one rule for them, another for us: San Francisco has banned its city agencies, including the police, from using facial recognition [4]. Yet many of the city's residents are affluent technologists making their money by continually improving the very technologies they're now shielded from, and that much of the rest of the world is increasingly subjected to.

- Langdon Winner's essay "Do Artifacts Have Politics?" was dropped in as recommended reading.
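To make the property-based testing idea concrete, here's a minimal hand-rolled sketch in Python. The two "devices" (a thermostat and an automatic window opener), their rules and the property are all hypothetical: each device behaves sensibly on its own, but together they can leave the heating running with the window open. Rather than writing individual test cases, the test generates a thousand random event sequences and checks the property after every step. Real tools such as Hypothesis do this far more cleverly, including shrinking failures down to minimal examples.

```python
import random

# Hypothetical smart-home model: two devices, each with a simple rule.
EVENTS = ["temperature_drop", "temperature_rise", "air_stale", "air_fresh"]


def step(state, event, coordinated):
    """Apply one event and return the new (heating_on, window_open) state.

    If `coordinated` is False, each device follows only its own rule.
    If True, the devices take each other into account before acting.
    """
    heating_on, window_open = state
    if event == "temperature_drop":
        heating_on = True                   # thermostat: it's cold, heat up
        if coordinated:
            window_open = False             # ...and close the window too
    elif event == "temperature_rise":
        heating_on = False
    elif event == "air_stale":
        if not (coordinated and heating_on):
            window_open = True              # window opener: let fresh air in
    elif event == "air_fresh":
        window_open = False
    return heating_on, window_open


def never_heats_an_open_room(state):
    """The property every reachable state must satisfy."""
    heating_on, window_open = state
    return not (heating_on and window_open)


def find_counterexample(coordinated, trials=1000, max_events=6, seed=0):
    """Try random event sequences; return the first one that breaks the
    property (cut off at the failing step), or None if every trial passes."""
    rng = random.Random(seed)
    for _ in range(trials):
        events = [rng.choice(EVENTS) for _ in range(rng.randint(1, max_events))]
        state = (False, False)              # heating off, window closed
        for i, event in enumerate(events):
            state = step(state, event, coordinated)
            if not never_heats_an_open_room(state):
                return events[: i + 1]
    return None


print("independent devices:", find_counterexample(coordinated=False))
print("coordinated devices:", find_counterexample(coordinated=True))
```

The independent version fails in exactly the way described above: neither device is wrong on its own, and the problem only shows up in combination.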

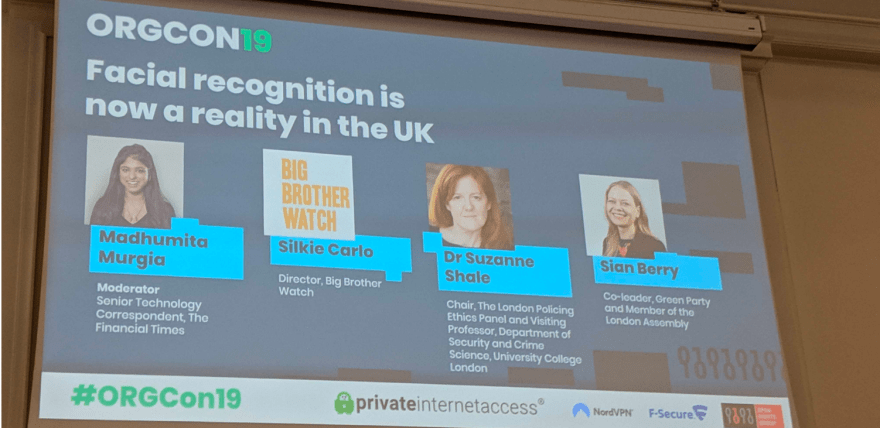

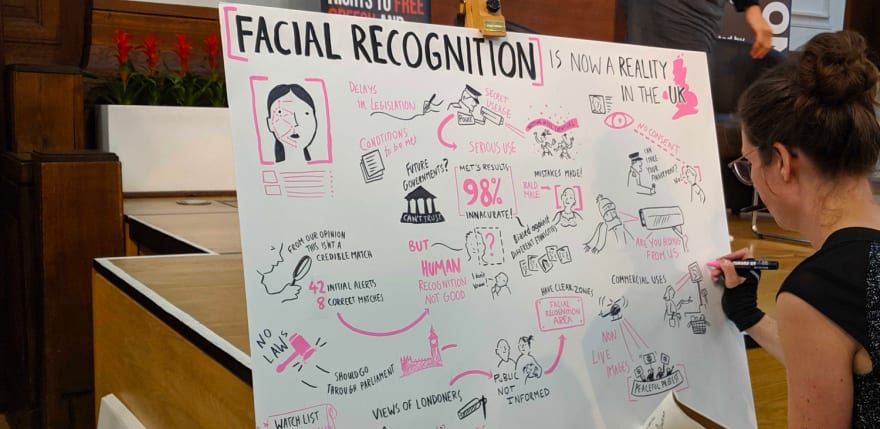

Session four- Facial Recognition is Now a Reality in the UK

This panel was packed with talented and interesting people: a senior technology correspondent at the Financial Times, the director of Big Brother Watch, the chair of the London Policing Ethics Panel, and Sian Berry, co-leader of the Green Party. The topic was facial recognition in the UK, and in particular how it's been deployed at Notting Hill Carnival in recent years. The Met Police started using the technology without notice or discussion, and studies have found that it's grossly ineffective [5]. My notes from the session:

- The Met Police didn't admit to using the technology until Big Brother Watch pushed the issue.

- There is no basis in law for the use of facial recognition. No-one knows whether it is something that needs to be consented to under UK law, or what limitations there are on it.

- There is an ongoing legal battle, brought by Big Brother Watch, to decide the legitimacy of facial recognition in UK law [6].

- Suzanne Shale was a great balancing voice representing the Policing Ethics Panel, and the discussion was much less partisan or one-sided for having her there.

- Sian Berry assured the audience that progress was being made, and that the Met Police would be putting a memorandum in place on the use of facial recognition technology.

- Another issue raised was the regulation of private facial recognition, with the cameras in self-checkout machines as a particular example. They constantly face outward from behind the screen and have a clear view of the customer. They could be, and may already be, used to build facial recognition databases and link them to our cards and purchase histories. There's currently little, if any, regulation to prevent this.

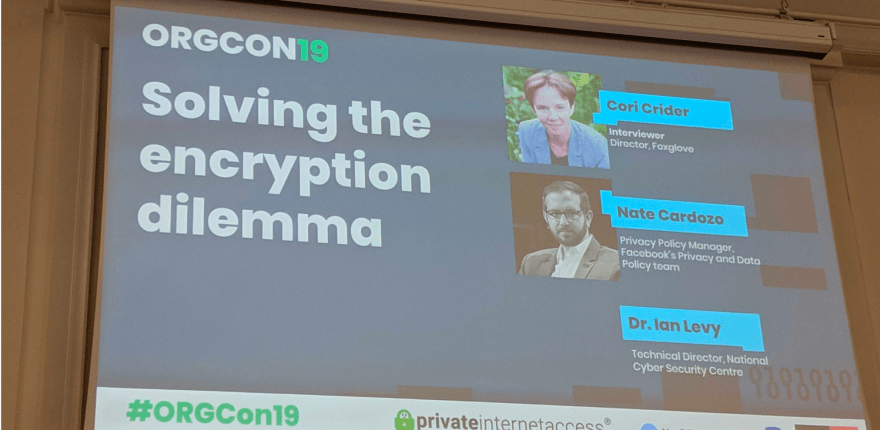

Session five- The Encryption Dilemma

This was perhaps my favourite session. It was really remarkable and speaks to the integrity of the organisers and the whole community. The first thing to notice in the picture is that Dr. Ian Levy is missing a photograph, because he was added at the last possible minute. This session was supposed to be a one-on-one interview in which Cori Crider and the audience asked questions of Nate Cardozo, privacy policy manager at Facebook and former senior policy-maker at the Electronic Frontier Foundation (EFF), the biggest digital rights advocacy group in the United States.

Today Nate mostly works on WhatsApp, which is setting new standards for private digital communications by popularising end-to-end encryption. This model infuriates the likes of GCHQ because intercepted messages can no longer be read: only the people in the conversation hold the keys. GCHQ's Ghost Proposal is pitched as a middle ground: messages would still be end-to-end encrypted, but the service provider would silently add the state to a conversation as an extra, invisible participant, letting it read everything. Nate is a prominent digital privacy advocate and a very outspoken critic of the Ghost Proposal. He even wrote a well-publicised article for the EFF in which he called it a "backdoor by another name" [7].

Enter Dr. Ian Levy, co-author of the Ghost Proposal and Technical Director of the National Cyber Security Centre (part of GCHQ). I gather that he found out about this interview and argued that, as it was obviously going to centre on the Ghost Proposal, he ought to have an opportunity to defend it. So at the eleventh hour the planned interview turned into a debate, and Ian was added to the session. I think it's really wonderful that we can have an open debate like this. I really admire Ian for coming to the event knowing that the audience would largely disagree with him, and ORG, Cori and Nate for doing the right thing by giving Ian this chance and keeping the conference from becoming an echo chamber. Here are my highlights:

- Ian went to great lengths to make the point that he genuinely wants to engage with the digital rights community, to reach a common understanding and find a resolution to the encryption debate that satisfies everyone. Needless to say, GCHQ and the like have an extremely poor track record here, so it's great to see someone trying to change that. "The fact I'm here should tell you something," Ian said, and it does.

- Ian insists that the Ghost Proposal is nothing more than a proposal and says that he wants to use it to trigger debate, to iterate on this idea and get public discussions moving.

- The end-to-end encryption model is coming to Facebook Messenger too.

- Questioned on WhatsApp's security, Nate reassured the audience and added: "If I leave in a huff, stop using WhatsApp".

- Ian responded really well to a difficult question: "If we introduce a backdoor, won't terrorists just write their own encryption?" He said he'd love that. Cryptography, he argued, is hard. Unless you're an expert cryptographer, when writing your own encrypted communication software you're almost certain to make a mistake that intelligence services can exploit.

- Ian, perhaps as part of trying to humanise his work and reach out to people, recommends the "Top Secret" exhibition at the Science Museum as a great way to learn the history of GCHQ.

- There was a great question from the crowd criticising the Ghost Proposal: "Are you leading the way by using it in all internal GCHQ messaging? Do the royals use it?" This goes back to the point of "one rule for us, another for them": GCHQ would obviously resist any attempt to weaken its own communication privacy, as would the more affluent members of society.

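- The discussion also touched briefly on Cloudflare's DNS over HTTPS service, which sends DNS lookups (the requests that translate website names into addresses) inside encrypted HTTPS traffic rather than in plain text. Ian and GCHQ are not at all fond of it, as it once again makes tracking people on the internet more difficult. I, on the other hand, am quite enamoured with the idea.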
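Ian's point that cryptography is hard is easy to demonstrate. Below is a hypothetical home-made cipher in Python with one classic mistake: it reuses the same keystream for every message. This is my own illustration of the kind of error he meant, not something he presented. An eavesdropper who never learns the key can still XOR two ciphertexts together to cancel out the keystream, and then recover one message by guessing the other.

```python
import hashlib


def keystream(key: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256 of key + counter. Not a vetted cipher."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return out[:length]


def xor(a: bytes, b: bytes) -> bytes:
    """XOR two byte strings, truncating to the shorter one."""
    return bytes(x ^ y for x, y in zip(a, b))


def encrypt(key: bytes, message: bytes) -> bytes:
    # THE MISTAKE: no per-message nonce, so every message encrypted under
    # this key is XORed with exactly the same keystream.
    return xor(message, keystream(key, len(message)))


key = b"our shared secret"                      # never seen by the eavesdropper
c1 = encrypt(key, b"meet at the station at 9pm")
c2 = encrypt(key, b"bring the documents, alone")

# The eavesdropper only has c1 and c2. XORing them cancels the keystream,
# leaving message1 XOR message2...
leak = xor(c1, c2)
# ...so a correct guess at one message reveals the other outright.
guess = b"meet at the station at 9pm"
recovered = xor(leak, guess)
print(recovered)  # b'bring the documents, alone'
```

Vetted designs avoid this by using a unique nonce for every message together with authenticated encryption such as AES-GCM or ChaCha20-Poly1305, which is exactly the kind of detail that's easy to get wrong when rolling your own.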

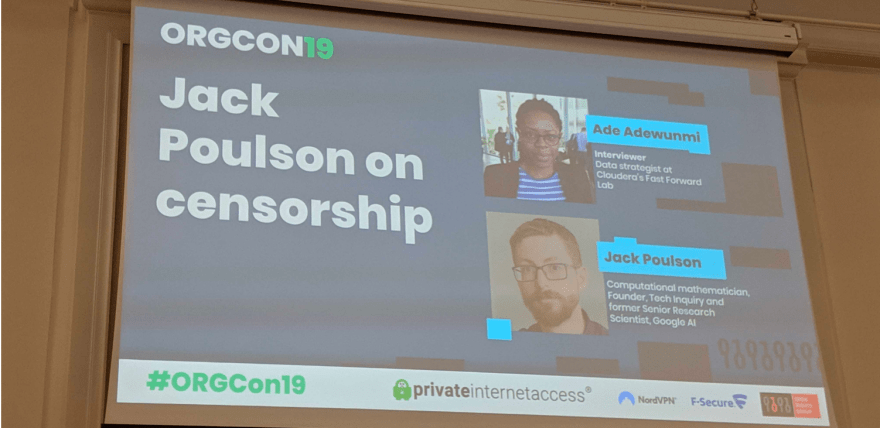

Session six- Jack Poulson on Censorship

Jack Poulson is known for publicly leaving Google, where he was a Senior Research Scientist, over its "Dragonfly" project to censor search results in China [8]. The project would have blocked results for searches like "human rights", "Nobel Prize" and "student protest" [9]. My notes:

- Challenged on what he had expected his resignation to achieve, Jack pointed to the successes Google staff have had in changing their employer's policy [10].

- Jack took a similar stand when he worked in academia, refusing to support closed-access journals. He was threatened with losing his tenure if he didn't comply, and resigned two days later.

- Google first shut down Google.cn after China attacked the company, compromising its source code and more in order to track human rights activists [11].

- Jack suggested that we too often discuss ethics in the future tense, as if it were theoretical ("Oh, what if technology were to harm society…"), when tech is already harming society. It's better, he argued, to refer to actual historical human rights abuses, such as when Cisco wrote software for the Chinese government knowing that it would be used to detain and torture dissidents [12].

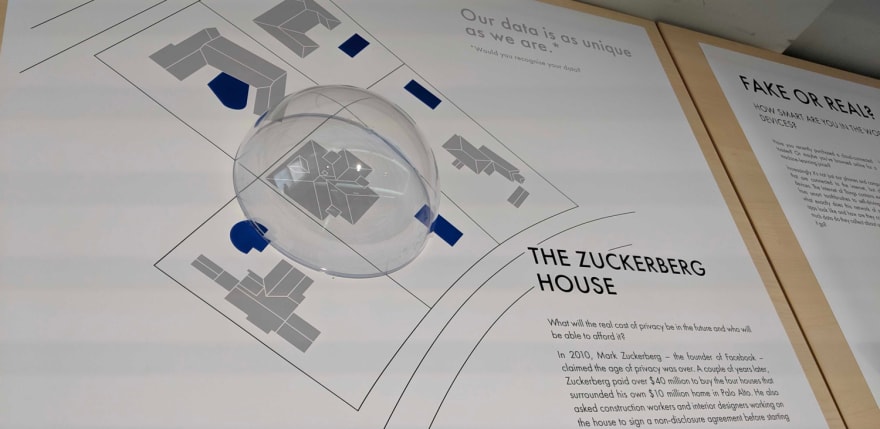

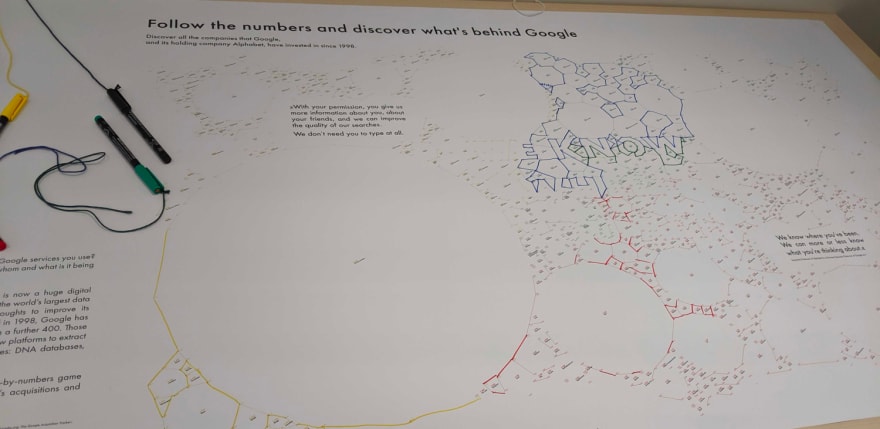

Art installations

Lastly, there were a number of interesting art installations around the conference. I particularly liked these:

This display showed the amount of land that Mark Zuckerberg has bought, at great expense, around his home to ensure his privacy. The artist asks: are we entering a world where only the rich can afford privacy?

A children's connect-the-dots game in which each dot is a subsidiary of Alphabet, Google's parent company. A great way to visually demonstrate the sheer scale that technology corporations are reaching.

Each talk in the biggest room was accompanied by an artist drawing a wonderful piece to summarise the topics covered in the talk.

Conclusion

ORGCon is great. I never imagined I'd learn so much about ethics in technology. There were some really unexpected and interesting sessions. I definitely recommend going next year, and perhaps you'll consider joining the Open Rights Group.

Footnotes

1. Alex Hern, The Guardian, "Apple and WhatsApp Condemn GCHQ Plans to Eavesdrop on Encrypted Chats"
2. Nicole Perlroth and David E. Sanger, The New York Times, "How Chinese Spies Got the N.S.A.’s Hacking Tools, and Used Them for Attacks"
3. Anthony Cuthbertson, The Independent, "Indian Police Trace 3,000 Missing Children in Just Four Days Using Facial Recognition Technology"
4. Dave Lee, BBC News, "San Francisco is first US city to ban facial recognition"
5. Matt Burgess, Wired UK, "The Met Police's facial recognition tests are fatally flawed"
6. Big Brother Watch, "Big Brother Watch Begins Landmark Legal Challenge to Police Use of Facial Recognition Surveillance"
7. Nate Cardozo, EFF, "Give Up the Ghost: A Backdoor by Another Name"
8. Ryan Gallagher, The Intercept, "Senior Google Scientist Resigns Over 'Forfeiture of Our Values' in China"
9. Anthony Cuthbertson, The Independent, "Google Employees Suspect It Is Still Working on Secret Chinese 'Dragonfly' Project"
10. Daisuke Wakabayashi and Scott Shane, The New York Times, "Google Will Not Renew Pentagon Contract That Upset Employees"
11. Elinor Mills, CNET, "Behind the China attacks on Google"
12. Stephen Lawson, CIO, "EFF says Cisco shouldn't get off the hook for torture in China"
