photo credit: james-wheeler

After using a GitHub Action to assess web page performance with Google PageSpeed, I found out that PageSpeed relies on a tool called Lighthouse for most of what it now reports. I also remembered that a GitHub Action I had configured to check for JavaScript library security vulnerabilities used a package called Lighthouse, too.

🤔

According to Google, the Lighthouse project provides . . .

"Automated auditing, performance metrics, and best practices for the web"

NOTE: This is the same suite of tests that execute when you launch Google Chrome and use the audits tab in Developer Tools, and the same suite of tests that now provide a large portion of what is reported back by Google PageSpeed.

Some quick scouting on GitHub revealed existing efforts to make Lighthouse easier to plug into CI/CD and to package it as a GitHub Action.

To assert and maintain a baseline performance level on this site, we're going to try the Lighthouse GitHub Action. Thanks to Aleksey and the contributors!

The GitHub Action

First, add a workflow file at .github/workflows/lighthouse.yml in the GitHub repository to trigger Lighthouse:

```yaml
name: Lighthouse

# Run the audit on every push to master
on:
  push:
    branches:
      - master

jobs:
  lighthouse:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v1
      - name: Audit URLs using Lighthouse
        uses: treosh/lighthouse-ci-action@v2
        with:
          urls: 'https://mattorb.com/'
      # Keep the generated reports as a downloadable artifact
      - name: Save results
        uses: actions/upload-artifact@v1
        with:
          name: lighthouse-results
          path: '.lighthouseci'
```

Once that workflow file is committed, the workflow runs, and we can download the artifact generated by Lighthouse to see what our scores look like.

That zip file contains both a JSON and an HTML representation of the report.
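If you would rather read the scores from the JSON report than open the HTML one, a minimal sketch follows. It assumes the report uses Lighthouse's usual layout, where each entry under `categories` carries a `score` between 0 and 1. The function names and the sample values are made up for illustration, and the path you pass to `load_report` depends on how the file is named inside your artifact.

```python
import json
from pathlib import Path


def load_report(path: str) -> dict:
    """Parse one Lighthouse JSON report from disk."""
    return json.loads(Path(path).read_text(encoding="utf-8"))


def category_scores(report: dict) -> dict:
    """Map each Lighthouse category id to its 0-1 score."""
    return {
        category_id: category["score"]
        for category_id, category in report["categories"].items()
    }


# Tiny stand-in for a real report, which carries many more fields.
# These numbers are invented, not this site's actual scores.
sample_report = {
    "categories": {
        "performance": {"score": 0.97},
        "accessibility": {"score": 0.85},
        "best-practices": {"score": 1.0},
        "seo": {"score": 0.92},
    }
}

print(category_scores(sample_report))
```

For a real report, call `category_scores(load_report(...))` with the path to the extracted JSON file.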

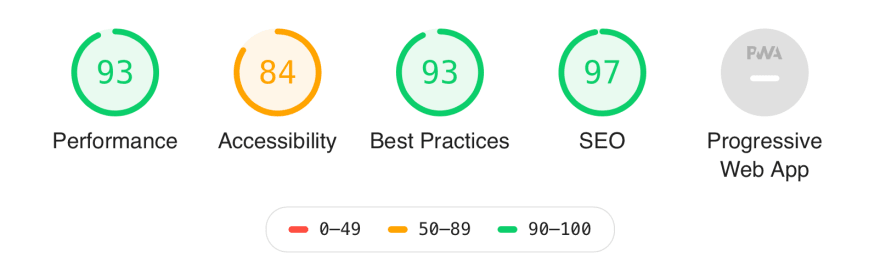

Inspecting the summary portion of the HTML report gives us overall scores in various areas, with much more detail below the fold.

Based on those numbers, decide where to draw the line and set a minimum score for each category. Leave some room for variability in server performance, though. Nobody wants to deal with false alerts regularly.
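One way to turn observed scores into thresholds with some breathing room is sketched below. The `suggest_thresholds` helper and its default `headroom` of 0.05 are assumptions chosen for illustration, not a recommendation from Lighthouse. The output follows the `categories:<id>` / `minScore` shape used by Lighthouse CI assertions.

```python
import json


def suggest_thresholds(scores: dict, headroom: float = 0.05) -> dict:
    """Build assertions that sit `headroom` below each observed score."""
    assertions = {}
    for category_id, score in scores.items():
        # Never go below zero, and keep two decimals like the report does.
        min_score = round(max(score - headroom, 0.0), 2)
        assertions[f"categories:{category_id}"] = ["error", {"minScore": min_score}]
    return {"ci": {"assert": {"assertions": assertions}}}


# Invented scores for illustration; substitute the ones from your own report.
observed = {"performance": 0.97, "seo": 1.0}
print(json.dumps(suggest_thresholds(observed), indent=2))
```

A larger `headroom` trades sensitivity for fewer false alarms, so it is worth tuning after watching a few runs.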

Create a .github/lighthouse/lighthouserc.json file in the repository with Lighthouse assertions you want to enforce:

```json
{
  "ci": {
    "assert": {
      "assertions": {
        "categories:performance": ["error", {"minScore": 0.90}],
        "categories:accessibility": ["error", {"minScore": 0.80}],
        "categories:best-practices": ["error", {"minScore": 0.92}],
        "categories:seo": ["error", {"minScore": 0.90}]
      }
    }
  }
}
```

Update the Lighthouse step in .github/workflows/lighthouse.yml to enforce those assertions by pointing it to that config file:

✂️

```yaml
steps:
  - uses: actions/checkout@v1
  - name: Audit URLs using Lighthouse
    uses: treosh/lighthouse-ci-action@v2
    with:
      urls: 'https://mattorb.com/'
      configPath: '.github/lighthouse/lighthouserc.json' # Assertions config file
```

✂️

Next time the action executes, it will check that we don't drop below these thresholds.
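To make the idea of a `minScore` check concrete, here is a rough sketch of the comparison involved. It is not Lighthouse CI's actual implementation: `failed_assertions` is a hypothetical helper, the scores are invented, and treating a missing score as a failure is my own assumption.

```python
def failed_assertions(scores: dict, min_scores: dict) -> list:
    """Return a message for every category whose score is below its minimum."""
    failures = []
    for category_id, min_score in min_scores.items():
        score = scores.get(category_id)
        # Assumption: a category with no score counts as a failure.
        if score is None or score < min_score:
            failures.append(f"{category_id}: {score} is below minScore {min_score}")
    return failures


# Thresholds match the lighthouserc.json in this post; the scores are made up.
min_scores = {"performance": 0.90, "accessibility": 0.80, "best-practices": 0.92, "seo": 0.90}
scores = {"performance": 0.87, "accessibility": 0.91, "best-practices": 0.93, "seo": 0.90}

failures = failed_assertions(scores, min_scores)
for failure in failures:
    print(failure)
```

A score exactly equal to its minimum passes, which is why `seo` at 0.90 is not reported here.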

If one of those assertions fails, it will fail the workflow with a message:

You can fail the workflow based on overall scores or make even finer-grained assertions using items called out in the detail of the report. Check out the Lighthouse CI documentation on assertions for examples.

Going Further

- Run a Lighthouse CI server, a purpose-built tool for tracking these metrics over time.

- Use multiple runs to average out server performance and draw more manageable thresholds.

- Use the concept of a page performance budget to set guardrails and limits around the size and quantity of external resources (think .js and .css).

- Shift left and assess a site change while it is still in the PR stage.

- Design specific test suites and set unique performance and SEO requirements based on what kind of page is being probed.

- Leverage a Lighthouse plugin to assess pages that have opinionated and strict design requirements for their target platforms (e.g., AMP).
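As an illustration of the multiple-runs idea in the list above, the sketch below collapses several runs into one score per category. It uses the median rather than a mean, since a single slow run skews a mean more. The `median_scores` helper and the sample numbers are hypothetical, and this is not a description of how Lighthouse CI aggregates runs.

```python
from statistics import median


def median_scores(runs: list) -> dict:
    """Collapse per-run category scores into one median score per category."""
    category_ids = runs[0].keys()
    return {cid: median(run[cid] for run in runs) for cid in category_ids}


# Invented scores from three runs against the same URL.
runs = [
    {"performance": 0.91, "seo": 0.90},
    {"performance": 0.78, "seo": 0.92},  # one slow run
    {"performance": 0.93, "seo": 0.92},
]

print(median_scores(runs))
```

With the slow run damped out, a threshold can sit closer to the typical score without triggering false alerts.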
