[Update: July 2, 2021] The Safari issue discussed below disappeared when I deployed another Next.js site (with static generation) to Cloudflare Pages rather than to Netlify. The root cause is still unclear, though.

TL;DR

Apparently it’s best NOT to self-host Google Fonts, because the latest versions of Safari fail to render them.

My own experience

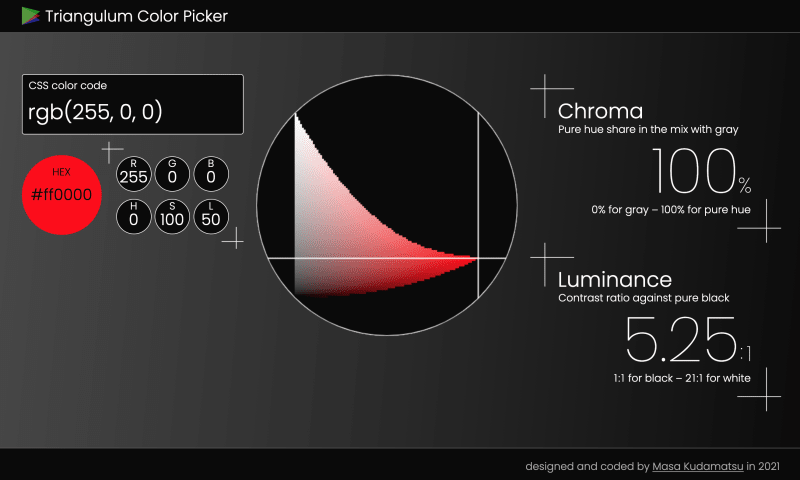

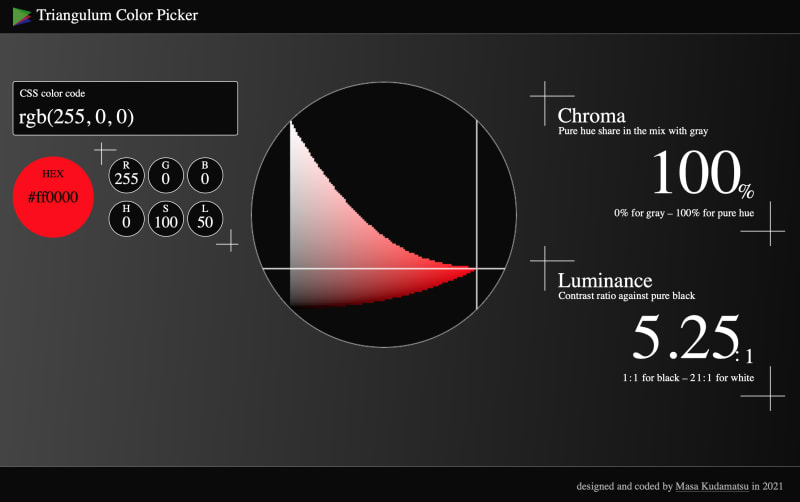

With my little web app (Triangulum Color Picker--an original color picker), I tried self-hosting Google Fonts, after being convinced that there is now no performance gain in any situation by using the Google Fonts server (Wicki 2020; see also Pollard 2020).

So I wrote up the @font-face declaration myself, downloaded the .ttf files from Google Fonts, converted them into .woff2 files (with the help of Google Webfonts Helper) to reduce the file size, and uploaded them by pushing with Git LFS to my GitHub repo, and deployed the site with Netlify.

With Chrome (and Edge, Firefox, and Opera) , it looked like:

Poppins, a geometric sans serif font from Google Fonts to go well with the geometric design of my website, is beautifully rendered.

With Safari, however, it looked like:

Oh, no. Times New Roman, the default font of the web since Day 1. I should have at least specified sans-serif as a fallback for the font-family CSS property.

Still, it’s strange that only Safari fails to render self-hosted Google Fonts.
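For reference, the self-hosted setup described above boils down to an @font-face rule whose src points at your own .woff2 file, plus a font-family stack that ends in a generic fallback (the sans-serif I had left out). The TypeScript sketch below generates such CSS. The helper names (fontFaceRule, fontFamilyDeclaration) and the path /fonts/poppins-regular.woff2 are hypothetical; this is not the actual code of my site.

```typescript
// Minimal sketch: generate CSS for a self-hosted web font.
// Helper names and the font file path are hypothetical.

interface FontFaceOptions {
  family: string; // name referenced by font-family, e.g. "Poppins"
  url: string; // path to the self-hosted .woff2 file
  weight: number; // e.g. 400
  style: "normal" | "italic";
}

// Build an @font-face rule that downloads the font from our own server via url().
function fontFaceRule(opts: FontFaceOptions): string {
  return [
    "@font-face {",
    `  font-family: "${opts.family}";`,
    `  font-style: ${opts.style};`,
    `  font-weight: ${opts.weight};`,
    `  src: url("${opts.url}") format("woff2");`,
    "}",
  ].join("\n");
}

// Build a font-family declaration with a generic fallback, so that a browser
// that cannot use the web font shows a sans-serif face instead of Times New Roman.
function fontFamilyDeclaration(family: string, fallback = "sans-serif"): string {
  return `font-family: "${family}", ${fallback};`;
}

const css: string = [
  fontFaceRule({
    family: "Poppins",
    url: "/fonts/poppins-regular.woff2",
    weight: 400,
    style: "normal",
  }),
  `body {\n  ${fontFamilyDeclaration("Poppins")}\n}`,
].join("\n\n");

console.log(css);
```

With the generic fallback in place, a browser that fails to load Poppins would at least show a sans-serif face rather than Times New Roman.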

Other people’s experiences

It turns out I’m not alone. Searching Twitter, I found several tweets reporting the same problem.

DeeC Digital Solutions (@deecdigital), Nov. 25, 2019:

I wonder why safari doesn’t play nice with font-face. IE is usually the one causing trouble 🤔 #webdevelopment #Safari #CSS #ugh #alwayssomething

Seth Wright (@crosse3), Sep. 11, 2020:

My latest "what the—?!?" moment: Safari won't use user-installed fonts when they show up in CSS "font-face" attributes, because...fingerprinting? What even? stackoverflow.com/a/63208227

(Another tweet I found, written in Japanese, says: "@font-face fails to work only for Safari...")

A WordPress plugin for self-hosting Google Fonts appears to have this issue as well.

HELP!! Doing final optimizations on a site before going live, hopefully today, and tried moving Google Fonts to be called locally using your plugin.

It works great on all browsers except Safari.

Any idea what’s the issue here?

— Todd (@toddedelman) on Aug. 6, 2019

So what's going on with Safari?

The root cause: Fingerprinting

I finally found out why Safari behaves differently: since Safari 12 (released on Sep. 17, 2018), it renders only the default system fonts and web fonts (ravasthi 2020). TylerDurdOn (2018) points to the same fact, as does this tweet:

Cascadea (@cascadeaapp), Apr. 14, 2020:

@Shpigford You can define web fonts with @font-face rules for remote fonts! Local fonts are restricted to the system fonts, however - this is a Safari security restriction to prevent browser fingerprinting.
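The tweet above distinguishes two kinds of sources in an @font-face rule: local(), which names a font installed on the user's machine, and url(), which points to a font file fetched over the network. The TypeScript sketch below parses a src value and applies a simplified model of the restriction as the tweet describes it (local() resolves only for system fonts). The model, the helper names (parseSrc, allowedInSafari), and the system-font list are assumptions for illustration, not Safari's actual implementation.

```typescript
// Sketch: classify @font-face src entries and apply a simplified model of
// Safari's restriction as described in the tweet (an assumption, not WebKit code).

type FontSource = { kind: "local" | "url"; value: string };

// Extract local(...) and url(...) entries, in order, from an @font-face src value.
// Quoted and unquoted arguments are both accepted; format(...) hints are ignored.
function parseSrc(src: string): FontSource[] {
  const sources: FontSource[] = [];
  const re = /(local|url)\(\s*(['"]?)([^'")]+)\2\s*\)/g;
  let m: RegExpExecArray | null;
  while ((m = re.exec(src)) !== null) {
    sources.push({ kind: m[1] as FontSource["kind"], value: m[3] });
  }
  return sources;
}

// Simplified model: local() works only for system fonts; url() names a remote web font.
function allowedInSafari(source: FontSource, systemFonts: ReadonlySet<string>): boolean {
  return source.kind === "url" || systemFonts.has(source.value);
}

const systemFonts = new Set(["Helvetica Neue"]); // hypothetical system-font list
const srcValue =
  'local("Poppins Regular"), url("/fonts/poppins-regular.woff2") format("woff2")';

for (const source of parseSrc(srcValue)) {
  const verdict = allowedInSafari(source, systemFonts) ? "allowed" : "restricted";
  console.log(`${source.kind}(${source.value}): ${verdict}`);
}
```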

The reason is to prevent browser fingerprinting, which Steiner (2020) explains in a very approachable way:

… the list of fonts a user has installed can be pretty identifying. A lot of companies have their own corporate fonts that are installed on employees' laptops. For example, Google has a corporate font called Google Sans. … An attacker can try to determine what company someone works for by testing for the existence of a large number of known corporate fonts like Google Sans. The attacker would attempt rendering text set in these fonts on a canvas and measure the glyphs. If the glyphs match the known shape of the corporate font, the attacker has a hit. If the glyphs do not match, the attacker knows that a default replacement font was used since the corporate font was not installed.
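The detection trick in the quote can be sketched as follows: measure a test string set in the candidate font (with a generic fallback), measure it again in the generic fallback alone, and compare the widths. In a browser the measuring would happen on a canvas (for example with CanvasRenderingContext2D.measureText); here measureWidth is a hypothetical function passed in by the caller, and the fake measurer, test string, and font names are made up so the sketch runs anywhere. Safari's restriction on locally installed fonts is meant to make this kind of test come out negative for non-system fonts.

```typescript
// Illustrative sketch of canvas-style font detection (the technique Safari's
// restriction is meant to defeat). All names below are hypothetical.

// Stand-in for "render text on a canvas and measure its width".
type MeasureWidth = (text: string, fontFamilyList: string) => number;

const TEST_TEXT = "mmmmmmmmmmlli";
const BASE_FAMILIES = ["monospace", "serif", "sans-serif"];

// If text set in `candidate` (with a generic fallback) measures differently from
// the generic fallback alone, the candidate font was used, so it is installed.
function isFontInstalled(candidate: string, measure: MeasureWidth): boolean {
  return BASE_FAMILIES.some(
    (base) => measure(TEST_TEXT, `"${candidate}", ${base}`) !== measure(TEST_TEXT, base),
  );
}

// Fake measurer for demonstration: pretends "Google Sans" is installed and
// renders wider than the generic fallbacks, which all share one width here.
const installedFonts = new Set(["Google Sans"]);
const fakeMeasure: MeasureWidth = (text, fontFamilyList) => {
  const names = fontFamilyList.split(",").map((f) => f.trim().replace(/"/g, ""));
  // Like a browser, use the first family in the list that is available.
  const used =
    names.find((n) => installedFonts.has(n) || BASE_FAMILIES.includes(n)) ?? "serif";
  return text.length * (used === "Google Sans" ? 9.5 : 8);
};

console.log(isFontInstalled("Google Sans", fakeMeasure)); // true
console.log(isFontInstalled("Acme Corp Sans", fakeMeasure)); // false
```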

Surprisingly, the lack of support for self-hosted fonts in the latest versions of Safari is rarely mentioned in the articles I’ve seen that advocate self-hosting Google Fonts for the best performance. That’s why I decided to write this article.

Steiner (2020) introduces a workaround that is currently (as of January 2021) in active development: the Local Font Access API. Until it is launched and supported by all the major browsers, we should avoid self-hosting Google Fonts (and any other font files purchased from font foundries) to ensure cross-browser consistency in how fonts are rendered.

Good news: Web fonts are no longer that slow

Performance improvement is the main motivation for self-hosting Google Fonts and other non-system fonts. But web fonts are no longer as slow as they used to be, thanks to (1) “preconnecting” to the font file server and (2) “preloading” the font stylesheet. For details, see another article of mine (Kudamatsu 2021).
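The two techniques above amount to a few link tags in the page's head. The TypeScript sketch below generates them for a Google Fonts stylesheet: preconnect opens the connection to fonts.gstatic.com (where Google serves the font files) before the browser discovers it needs it, and preload starts fetching the stylesheet early. The helper name googleFontsLinkTags and the Poppins stylesheet URL are illustrative assumptions, and the markup is not necessarily identical to what Kudamatsu (2021) recommends.

```typescript
// Hypothetical helper: <link> tags that preconnect to the Google Fonts file
// server and preload the font stylesheet before applying it.
function googleFontsLinkTags(stylesheetUrl: string): string[] {
  return [
    // Set up the connection (DNS, TCP, TLS) to the font-file server early.
    // crossorigin matters because font files are fetched in CORS mode; without
    // it, the browser cannot reuse this connection for the font requests.
    `<link rel="preconnect" href="https://fonts.gstatic.com" crossorigin>`,
    // Start downloading the stylesheet early, before the parser reaches it.
    `<link rel="preload" as="style" href="${stylesheetUrl}">`,
    // Apply the stylesheet (reusing the preloaded response if it has arrived).
    `<link rel="stylesheet" href="${stylesheetUrl}">`,
  ];
}

const poppinsStylesheet = "https://fonts.googleapis.com/css2?family=Poppins&display=swap";
console.log(googleFontsLinkTags(poppinsStylesheet).join("\n"));
```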

Moreover, the Google Fonts server has two additional benefits: (1) it customizes the @font-face declaration for each browser, and (2) it minimizes the size of the font files to be downloaded. For details on both points, see Pollard (2020).

Conclusion: Don’t host Google Fonts locally for the sake of cross-browser consistency

That’s my conclusion after researching this issue for the past two days. The fact that it’s rarely mentioned makes me wonder if I’m missing something important. If you know how to work around Safari’s behaviour, please let me know by posting a comment.

References

Kudamatsu, Masayuki (2021) “Loading Google Fonts and any other web fonts as fast as possible in early 2021”, Dev.to, Feb. 1, 2021.

Pollard, Barry (2020) “Should you self-host Google Fonts?”, Tune The Web, Jan. 12, 2020.

ravasthi (2020) “An answer to ‘Safari user installed fonts don't render’”, Stack Overflow, Aug. 1, 2020.

Steiner, Thomas (2020) “Use advanced typography with local fonts”, web.dev, Aug. 24, 2020.

TylerDurdOn (2018) “PSA: Safari 12 disabled/nerfed setting custom fonts via CSS or defaults”, Reddit, Sep. 29, 2018.

Wicki, Simon (2020) “Time to Say Goodbye to Google Fonts”, wicki.io, Nov. 30, 2020.

First published on 1 February, 2021.

Last updated on 3 February, 2021.

This version: 1.0.1

Comments

Hey Masa, thanks for referencing my 'Time to Say Goodbye to Google Fonts' post.

Could it be possible that we misunderstood each other?

In my post I'm basically advocating for self-hosting fonts. Local fonts, which you refer to in your post, would instead require the user to have them already installed on their system. This is where Safari pretty much kills that feature for the sake of privacy (fingerprinting). Cheers!