People have different, often subjective opinions of what good software architecture is. We want it to be elegant. We want it to be intuitive. Most of the time, we want it to feel good, without knowing what that means in terms of measurable metrics.

IMHO, software architecture is solely a scaling problem. Good architectural design, pragmatically, ensures that multiple developers will be able to conveniently work on the same code base, without screwing things up left and right. In other words, when you have good design:

- 💡 One can easily find out how to make changes.

- 🚀 One can make those changes efficiently.

- ☂️ Performing those changes doesn't cause errors (often).

- 🧠 The code structure and conventions are memorable.

Of course, a good architectural design has other measurable properties as well, but I am focusing on these for a reason: they perfectly mirror the main pillars of Usability. If you are not familiar with the term, Usability is about ensuring a system (e.g. interface, app, etc.) is usable (people can do stuff with it). It is the cornerstone of UX, so much so that the two terms are often used interchangeably. Usability is mainly composed of the following:

💡Learnability:

How fast/easy is it for users to learn what they can do?

🚀 Efficiency:

How fast can users perform tasks?

☂️ Error-Rate:

How often do users encounter errors?

🧠 Memorability:

How easy is it to get back into the system/software after some time away?

As you can see, good architecture implies good UX, where our users (the U) are developers working on some code base, and the use-case (the experience, the X) is maintaining / updating the code. Since those users are developers and their usage is developing, it is safe to use the term DX (Developer Experience) here, which means:

Good architecture provides good DX for maintainers.

How does that help? Well, we know how to measure and evaluate UX/DX pretty well, and by looking at software architecture as a UX/DX problem, we can apply the same tools and measurements and make practical and data-driven decisions.

Example: Change Propagation

Imagine we have this function:

    export function doSomething(user: User, opts: Options) {
      ...
    }

And we need to change the function signature so that it also accepts a boolean flag, e.g.:

    export function doSomething(
      user: User,
      opts: Options,
      really: boolean
    ) {
      ...
    }

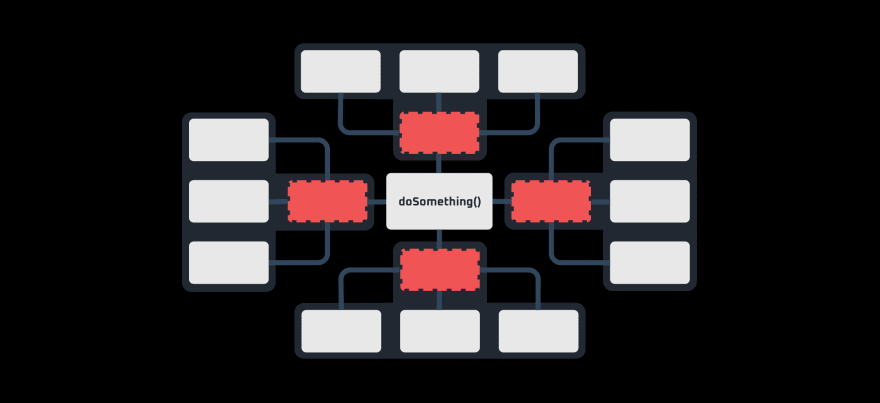

Beyond the change itself, we need to update every other code module (segment, unit) that is using doSomething(), which might look like this:

Here we would have to modify 8 other modules for making the intended change. We could make this change more efficient if we had less coupling between different modules, for example by introducing intermediary modules/wrappers:

For example, the three modules on the left might all use doSomething() with the same set of options, so our intermediary module could look something like this:

    import { doSomething } from '...'

    export function doSomethingWithTheseOpts(user: User) {
      const opts = { ... }
      return doSomething(user, opts)
    }

And our intended change would be isolated to this:

return doSomething(user, opts, false)

Note that in the second architectural design, we have more modules, but making changes such as our example is easier. If such changes are far less frequent than the creation of new functions similar to doSomething(), then the overhead of the second architecture might outweigh its benefits.

Generally speaking, lowering the coupling between different modules limits the propagation of any particular change, which in turn makes that change more efficient to perform.

In this design, a change to module A results in 6 other modules being changed. But by lowering the coupling (and increasing cohesion), we can limit it to 2:

This means that for good architectural design, generally speaking, we should try to identify the most probable changes, and then increase cohesion and reduce coupling in a way that isolates the propagation of those changes.
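As a rough way to put numbers on change propagation, the sketch below counts how many modules import a given module directly, before and after introducing a wrapper. The module names, the `DependencyGraph` shape and the `directDependents` helper are all hypothetical, and the sketch assumes the wrapper's own signature stays stable, so the change stops at direct importers.

```typescript
// Hypothetical dependency map: each module lists the modules it imports directly.
type DependencyGraph = Record<string, string[]>;

// Returns the modules that import `target` directly, i.e. the ones that
// must be edited when the signature of something exported by `target` changes.
function directDependents(graph: DependencyGraph, target: string): string[] {
  return Object.keys(graph)
    .filter((mod) => graph[mod].indexOf(target) !== -1)
    .sort();
}

// Tightly coupled layout: every caller imports doSomething directly.
const coupled: DependencyGraph = {
  doSomething: [],
  a: ["doSomething"],
  b: ["doSomething"],
  c: ["doSomething"],
  d: ["doSomething"],
};

// Same callers, but a, b and c now go through a (hypothetical) wrapper module.
const wrapped: DependencyGraph = {
  doSomething: [],
  wrapper: ["doSomething"],
  a: ["wrapper"],
  b: ["wrapper"],
  c: ["wrapper"],
  d: ["doSomething"],
};

const coupledImpact = directDependents(coupled, "doSomething");
const wrappedImpact = directDependents(wrapped, "doSomething");
console.log(coupledImpact.length, wrappedImpact.length);
```

In this toy graph the wrapper cuts the number of modules touched by the signature change from 4 to 2, at the cost of one extra module to maintain.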

Example: Layered Structures

Imagine a frontend project with the following layered structure:

For changes that affect all things related to A (marked in red), we would need to constantly navigate between all A files. This interaction is made particularly difficult by the distance these files have from each other.

Detailed analysis of this navigation interaction depends on the environment setup (e.g. the IDE's file explorer), but to see how the distance affects the difficulty of the interaction, we can assume the simple interaction of opening two of the files in succession, i.e. clicking on one and then clicking on the other. The difficulty of this interaction is governed by Fitts's law, i.e.:

ID = log2(2 * D / W)

👉 ID is the index of difficulty; the time needed grows linearly with it

👉 D is the distance that the cursor needs to travel

👉 W is the width of the clicked element
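To get a feel for the formula, here is a small numeric sketch. The pixel values are made up for illustration (a 20px target, with files 20px, 200px and 400px apart), and `indexOfDifficulty` is just a hypothetical helper name.

```typescript
// Index of difficulty per Fitts's law as written above: log2(2 * D / W).
// D and W must be in the same unit (pixels in this sketch).
function indexOfDifficulty(distance: number, width: number): number {
  return Math.log((2 * distance) / width) / Math.LN2;
}

// Hypothetical target size in pixels.
const targetWidth = 20;

const adjacent = indexOfDifficulty(20, targetWidth); // files next to each other
const far = indexOfDifficulty(200, targetWidth); // files 200px apart
const farther = indexOfDifficulty(400, targetWidth); // files 400px apart

console.log(adjacent.toFixed(2), far.toFixed(2), farther.toFixed(2));
```

With these numbers the index of difficulty comes out at roughly 1, 4.3 and 5.3: doubling an already large distance adds only one unit, while bringing the files right next to each other removes most of the difficulty.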

In our case, W is determined by the environment (e.g. the width of the IDE file explorer), so the only parameter that can be optimized is D, i.e. the distance between the files. Since the index of difficulty scales logarithmically with distance, the only meaningful optimization here would be to have really small Ds, i.e. if the files were adjacent, for example in a structure like this:

Both structures do have cohesion: the first one has logical cohesion (things that do the same logical thing are put alongside each other), while the latter will eventually arrive at functional cohesion (things that are about the same task are put alongside each other).

👉 Functional cohesion is generally costlier than logical cohesion to achieve, as the latter only requires logically bundling units (e.g. everything related to DB, everything related to HTML) while the former requires a proper conceptual model of the project.

A DX-driven approach allows for a cost-efficient, data-driven, iterative way of reaching functional cohesion: observe which parts of the code change together (under the umbrella of one task) most often, and reduce their relative distance.
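One possible way to make that observation concrete is to count how often pairs of files change within the same task. The sketch below is a minimal illustration, not a prescribed tool: the file paths and the `changeHistory` data are invented, and in practice each change set would come from something like your version control history.

```typescript
// One entry per task/commit: the files that were changed together.
type ChangeSet = string[];

// Counts how often each pair of files appears in the same change set.
function coChangeCounts(history: ChangeSet[]): Record<string, number> {
  const counts: Record<string, number> = {};
  for (const changeSet of history) {
    // Deduplicate and sort so that each pair gets one stable key.
    const files = changeSet
      .filter((file, index) => changeSet.indexOf(file) === index)
      .sort();
    for (let i = 0; i < files.length; i++) {
      for (let j = i + 1; j < files.length; j++) {
        const key = `${files[i]} <-> ${files[j]}`;
        counts[key] = (counts[key] || 0) + 1;
      }
    }
  }
  return counts;
}

// Hypothetical history for a layered project (file names are made up).
const changeHistory: ChangeSet[] = [
  ["components/A.tsx", "styles/A.css", "services/A.ts"],
  ["components/A.tsx", "styles/A.css"],
  ["components/B.tsx", "styles/B.css"],
  ["components/A.tsx", "services/A.ts"],
];

const pairCounts = coChangeCounts(changeHistory);
console.log(pairCounts);
```

Pairs with the highest counts are the first candidates for being moved closer together; here the files related to A co-change more often than anything else, which hints that they belong in the same folder.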

Example: Mental Models

So far, we've only focused on the efficiency (🚀) aspect of DX. Mental models and design patterns start to play a role when we start to consider the learnability (💡) and memorability (🧠) aspects as well.

What is a mental model / design pattern? MVC is a famous example, where you imagine some modules as responsible for data (i.e. Model), some others for how the data looks (i.e. View), and others for how data is updated (i.e. Controllers). Instead of controllers, you might assume modules whose job is to translate business data to presentable data and vice-versa, which would give you MVVM, and so on.

An important function of these mental models is to facilitate learning and recalling the design. A common pitfall is to try to evaluate them by how intuitive they are (or conversely, pursuing intuitive mental models and design patterns). The exact same pitfall exists in UX land, as designers pursue the mythical intuitive design, where the interface is so learnable that users know it by heart.

The solution here is also similar: the best practical way to minimize the learning curve is to draw on things that people have already learned. In other words, a pragmatic approach to intuitiveness is pursuing familiarity instead, in UX, in DX, and in architectural design.

For example: the best mental model for a particular project might be an actor model. However, if your developers are familiar with MVC, and if the overhead of modeling the project with MVC is not much higher than that of using the actor model, you would be better off using MVC.

Conclusion

DX-Driven Architecture (DXDA, if I may 😎) is NOT a ground-breaking, flashy, new design pattern or anything. It is a method of pragmatically contextualizing already existing patterns (e.g. MVC), techniques (e.g. access abstraction) and metrics (e.g. coupling or cohesion), by considering the experience of the maintainers. This allows for making quantitative and data-driven decisions about software architecture and evaluating those decisions empirically.

As mentioned earlier, it is worth noting that DXDA does not offer an all-encompassing perspective. Good design has properties that are not covered by translating UX principles to developers maintaining a project (i.e., DX), at least not trivially. This means blindly optimizing DX will not lead to the best architecture either, as other goals and concerns should also be considered.

All-in-all though, I feel DXDA provides a really solid basis for thinking about software architecture practically and pragmatically. Whatever our subjective notion of ideal architecture and design is, I think it is universally agreeable that it MUST reduce maintenance/improvement cost, which logically entails that optimizing DX is always a key component of good architectural design, whether we actively consider it or not.
