Written by Chimezie Enyinnaya ✏️

Starting as a software developer, it's recommended to follow a more pragmatic approach to developing software.

As time goes on, when you start building more complex software that serves thousands or millions of users, it becomes crucial to be more concerned with concepts such as architecture, system design, testing, security, deployment, and most especially performance. Furthermore, on performance, it is common practice to follow key performance metrics such as average response time, traffic rate, and CPU usage of your application and the server.

The main issue now stems from acquiring the best profiler tool that measures your Node.js application performance based on these metrics and doesn’t affect the software responsiveness.

N.B., CPU profiling and CPU monitoring are often used interchangeably but are different.

In this article, you’ll learn about the inbuilt profiling tool recommended in Node.js.

Why profiling?

Profiling is collecting and analyzing data on how functions in your code perform when executed, regarding performance measurement parameters such as time complexity, throughput, input workloads, garbage collection, and time to invoke and execute a function.

The purpose here is to identify bottlenecks in your code. Bottlenecks happen when a line/block of code or function is not performing as well as the rest of the program. Some of these bottlenecks might be obvious but most of them not so much.

So in essence, a profiler tells you, "Hey, don’t stress in guessing where the bug that slows down your application is hiding. Let me help you do this programmatically."

The Node.js profiler

The easiest way in Node.js to profile applications is by using the inbuilt profiler, which collects all the data from functions and logs it into a file. Node.js implements this by introducing the --prof flag, which communicates with the V8 profiler and then logs the data.

Let’s illustrate how this works with a sample Node.js app. This example uses the Node.js framework Fastify. Fastify claims to be the "fastest web framework in town!" so I felt using this would be perfect for a demo.

The Fastify API performs CRUD operations for a bookstore app. To follow along, you can clone the GitHub repo.

Let’s examine the handler functions in controllers/bookController.js and profile them:

//controllers/bookController.js

const boom = require('boom')

const Book = require('../models/Book')

// get all books

exports.getAllBooks = async (req, reply) => {

try {

const books = await Book.find()

return reply.code(200)

.send(

{

Message: "Success",

data: books

}

)

} catch (err) {

throw boom.boomify(err)

}

}

// get a single book by id

exports.getSingleBook = async (req, reply) => {

try {

const id = req.params.id

const book = await Book.findById(id)

return reply.code(200)

.send({

Message: "Success",

data: book

})

} catch (err) {

throw boom.boomify(err)

}

}

// add a new book

exports.addNewBook = async (req, reply) => {

try {

const book = new Book(req.body)

const newBook = await book.save()

return reply.code(200)

.send({

Message: "New Book added successfully",

data: newBook

})

}

catch (err) {

throw boom.boomify(err)

}

}

// edit a book

exports.updateBook = async (req, reply) => {

try {

const id = req.params.id

const updatedBook = await Book.findByIdAndUpdate(id, req.body, {

new: true

})

return reply.code(200)

.send({

Message: "Book updated successfully",

data: updatedBook

})

} catch (err) {

throw boom.boomify(err)

}

}

// delete a book

exports.deleteBook = async (req, reply) => {

try {

const id = req.params.id

const deletedBook = await Book.findByIdAndDelete(id);

return reply.code(200)

.send({

Message: `${deletedBook.title} has been deleted successfully`,

data: id

})

} catch (err) {

throw boom.boomify(err)

}

}

You could add some data to the MongoDB like this:

> db.user.insert({ title: "The book for old people", genre: "Self Help" })

We are adding some data to help us simulate a scenario where users have latency issues when making requests to your app. So you can go ahead and create as much data in the database as you can.

Run this command on your terminal:

node --prof controllers/bookController.js

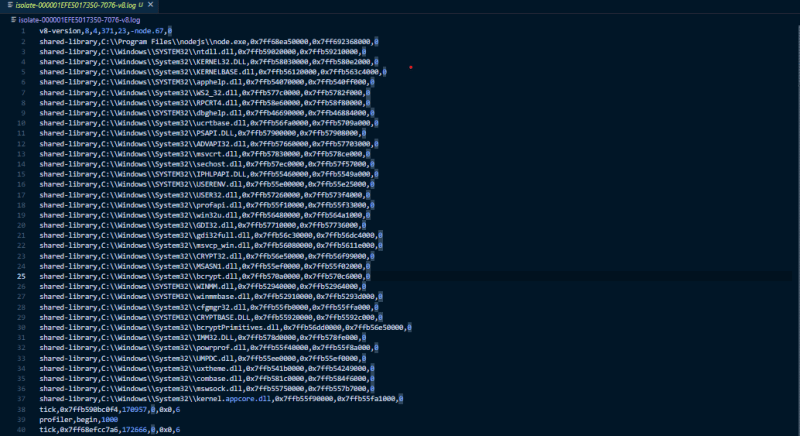

This command creates an isolate-0xnnnnnnnnn-v8.log (where n is a digit) log file, and it should look like this:

Unreadable, right? This is why we will run the --prof-process flag to covert that log file to a more readable file.

Run this command on your terminal:

node --prof-process isolate-000001EFE5017350-7076-v8.log > processed.txt

This will create a processed.txt file that has the logs.

Let’s examine the logs, starting from the summary section.

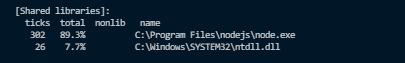

This shows that 97 percent of samples gathered were obtained from shared libraries. So let’s focus on the [Shared Libraries] section and ignore the rest.

We can see that 89 percent of CPU time is taken up by the Node.js runtime environment and 8 percent by Windows kernel functions. Also, it clearly shows that it’s not ideal to profile code on your local development server. Running tests from an HTTP server is preferable. So, let’s simulate a production environment with Ngrok.

Ngrok enables you to create public URLs for exposing your development web server. You can learn how to set up Ngrok here.

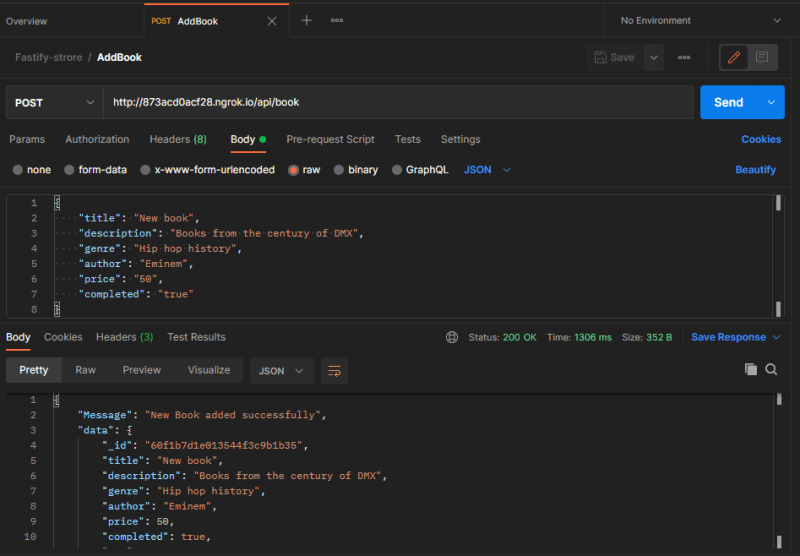

A typical Ngrok URL looks like http://873acd0acf28.ngrok.io.

Now we are no more relying on Node.js runtime and OS kernels to run our Node server. We will run the --prof attribute again. First, add some load to the server. I use Postman as an API client to test my API endpoints, but you can use whatever tool you want.

You can go ahead and add more books:

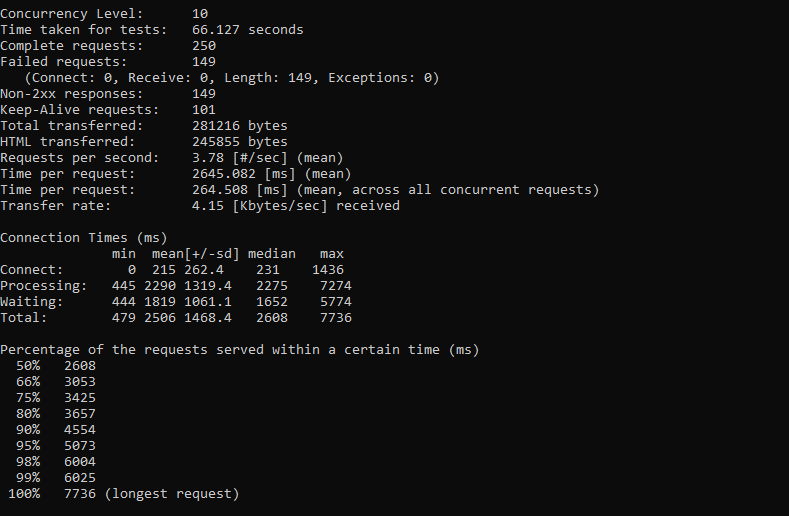

Let’s also get the AB output using the Apache benchmarking tool. If you don’t know how to install this tool, I dropped a link in the resources section below.

The AB output:

It’s interesting to note that in our simple sample here, each request (successful or not) averages almost three seconds to make a round trip and serves approximately four requests per second. In a real-world application, you would want to come up with strategies to improve these numbers so users won't have issues with your application responsiveness when traffic rises.

This is good so far. Now, let’s re-run the --prof-process command and try analyzing the report.

Run the following on your terminal:

NODE_ENV=production node --prof index.js

node --prof-process isolate-000001E540DA5730-19636-v8.log

At this point, you should be able to read, analyze, and implement solutions if there are any bottlenecks.

Conclusion

The inbuilt Node.js profiler is the easiest and most suitable for profiling your Node.js applications. In this article, we discussed why profiling is important, and how to use the --prof and --prof-process flags for debugging bottlenecks.

I hope you’ve learned something new from reading this. If you have questions or suggestions, please drop them in the comment section.

200’s only ✔️ Monitor failed and slow network requests in production

Deploying a Node-based web app or website is the easy part. Making sure your Node instance continues to serve resources to your app is where things get tougher. If you’re interested in ensuring requests to the backend or third party services are successful, try LogRocket.

LogRocket is like a DVR for web apps, recording literally everything that happens on your site. Instead of guessing why problems happen, you can aggregate and report on problematic network requests to quickly understand the root cause.

LogRocket instruments your app to record baseline performance timings such as page load time, time to first byte, slow network requests, and also logs Redux, NgRx, and Vuex actions/state. Start monitoring for free.

Top comments (0)