This piece was originally posted on my blog at legobox.

Background

Many people think of Nginx as just a web server. Yes, it's a web server, but it's so much more: Nginx is first and foremost a proxy engine and a load balancer, and it can work as a web server alongside whichever backend interpreter or application server you'd like, be it uWSGI, php-fpm, etc.

Many of us have heard of nginx, and some of us are looking forward to finding out what it is. In this piece we take a good look at how to use nginx as a load balancer and as a general-purpose proxy.

Prerequisites

Before getting up and running with nginx, it helps (though it isn't compulsory) if you meet the following conditions:

- You want to learn and understand more about DevOps

- You have an understanding of what a web server is in general

- You’ve used a computer before. 😏

Got all these down? Then you are good to go.

Proxying

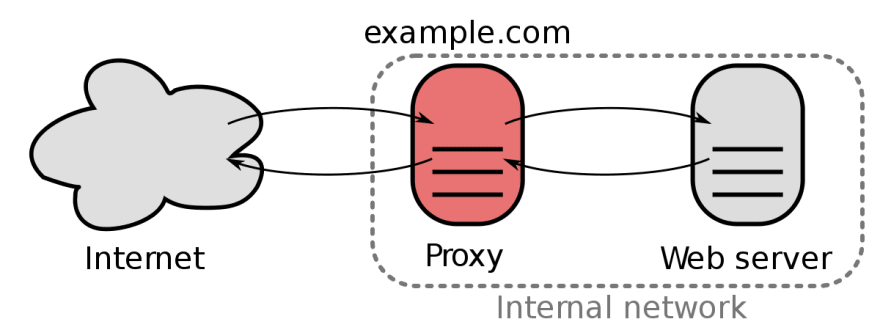

The first scenario we are going to check out is proxying. In general terms, proxying simply means positioning one server in front of another so that every request reaches the second server through the first one.

In nginx, this is achieved using the proxy_pass directive.

```nginx
# basic proxy pass
location / {
    proxy_pass http://ourserver2here.com;
}
```

There are a few proxy_pass tricks that are important to understand when setting up a proxy, so let's have a look at some of them.

Matching prefixes

```nginx
location /match/here {
    proxy_pass http://ourserver2here.com;
}
```

In the setup above, no URI is given after the server address in the proxy_pass definition. In this case, a request such as /match/here/please is sent to ourserver2here.com as http://ourserver2here.com/match/here/please, with the original URI passed along unchanged. Pretty convenient, right?
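The rule is easier to see side by side with the opposite case: when proxy_pass does include a URI (even just a trailing `/`), nginx replaces the part of the request URI that matched the location with that URI. Below is a minimal Python model of both cases, a sketch only: `upstream_uri` is a hypothetical helper, and it ignores query strings, URI normalization, regex locations, and variables in proxy_pass.

```python
from typing import Optional


def upstream_uri(location_prefix: str, request_uri: str,
                 proxy_pass_uri: Optional[str]) -> str:
    """Model the URI nginx sends upstream for a prefix `location`.

    proxy_pass_uri is None when proxy_pass has no URI part
    (http://ourserver2here.com), or the URI part itself, e.g. "/" for
    http://ourserver2here.com/.
    """
    if not request_uri.startswith(location_prefix):
        raise ValueError("request URI does not match this location")
    if proxy_pass_uri is None:
        # No URI in proxy_pass: the request URI is passed through unchanged.
        return request_uri
    # URI in proxy_pass: the matched prefix is swapped for that URI.
    return proxy_pass_uri + request_uri[len(location_prefix):]


print(upstream_uri("/match/here", "/match/here/please", None))
# /match/here/please
print(upstream_uri("/match/here/", "/match/here/please", "/"))
# /please
```

In other words, `location /match/here/ { proxy_pass http://ourserver2here.com/; }` would strip the prefix, which is handy when the backend doesn't know it lives under a sub-path.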

Reverse Proxying

This is a particularly interesting type of proxying. Requests meant for one server (let's call it A) are actually received by another server (let's call it B), which sits in front of A. B relays each request to A and passes A's response back, so the client never talks to A directly.

Here's a more formal description.

In computer networks, a reverse proxy is a type of proxy server that retrieves resources on behalf of a client from one or more servers. These resources are then returned to the client as if they originated from the Web server itself.
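To make that definition concrete, here is a toy reverse proxy built only from Python's standard library. It is an illustration of the idea, not how nginx works internally and nowhere near production-ready. `BackendHandler` plays server A and `make_proxy_handler` builds server B; both names and the echo behavior are hypothetical, and B forwards only GET requests and their paths, dropping most headers for brevity.

```python
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def make_proxy_handler(upstream: str):
    """Build a handler (server B) that relays GET requests to `upstream` (A)."""

    class ReverseProxyHandler(BaseHTTPRequestHandler):
        def do_GET(self) -> None:
            # Forward the path unchanged, like proxy_pass without a URI part.
            try:
                with urllib.request.urlopen(upstream + self.path, timeout=5) as resp:
                    status, body = resp.status, resp.read()
                    ctype = resp.headers.get("Content-Type", "text/plain")
            except urllib.error.HTTPError as err:
                # Relay upstream 4xx/5xx responses instead of crashing.
                status, body = err.code, err.read()
                ctype = err.headers.get("Content-Type", "text/plain")
            # The client only ever sees B answering.
            self.send_response(status)
            self.send_header("Content-Type", ctype)
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format, *args) -> None:
            pass  # keep the demo quiet

    return ReverseProxyHandler


class BackendHandler(BaseHTTPRequestHandler):
    """Stand-in for server A: echoes the path it received."""

    def do_GET(self) -> None:
        body = f"A saw {self.path}".encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args) -> None:
        pass


def start(server: ThreadingHTTPServer) -> int:
    """Serve in a background thread and return the bound port."""
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server.server_address[1]


if __name__ == "__main__":
    a_port = start(ThreadingHTTPServer(("127.0.0.1", 0), BackendHandler))
    b = ThreadingHTTPServer(("127.0.0.1", 0),
                            make_proxy_handler(f"http://127.0.0.1:{a_port}"))
    b_port = start(b)
    with urllib.request.urlopen(f"http://127.0.0.1:{b_port}/match/here/please") as r:
        print(r.read().decode())  # A saw /match/here/please
```

The client only ever connects to B's port; A could just as well be on another machine, which is exactly the arrangement nginx's proxy_pass gives you.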


With nginx we can achieve this and even take it further. Ever heard of ngrok? Maybe you have, maybe not; either way:

Ngrok is a service that allows you to serve your localhost application via a domain proxy, exposing it via a public URL.

With a reverse proxy and a subdomain on your site set aside for this purpose, you can run your own ngrok, without the domain name changing every time you start it.

To pull this off, first set up nginx on your server to proxy connections for a specific subdomain or domain (whichever you find convenient) to a specific port on the server.

```nginx
server {
    listen 80;
    server_name coolsubdomain.mainname.com;

    location / {
        proxy_pass http://127.0.0.1:5000; # in this case we picked port 5000
    }
}
```

On your computer, assuming the application is served on localhost port 8000 (it could be any port, really), use ssh to open a reverse tunnel from the server's port 5000 back to that local port.

```shell
# open a reverse tunnel to your server via ssh
ssh -R 5000:127.0.0.1:8000 youruser@mainname.com
```

This completes the reverse proxy: any request to coolsubdomain.mainname.com goes through nginx to port 5000 on the server, then through the SSH tunnel to port 8000 on your local machine, for as long as the SSH session stays open.

Load balancing

Load balancing is another problem nginx is well positioned to solve. It's built to handle a lot of requests, and if we have several servers running our project, we can balance the load between them using upstreams.

Nginx uses upstreams to balance the load, and it ships with a few algorithms for deciding which server handles each request.

These algorithms are selected with directives, as follows.

round robin (default): The load balancing algorithm used when no other balancing directive is present. Each server defined in the upstream context is passed requests sequentially, in turn.

least_conn: New connections always go to the backend with the fewest active connections. This is especially useful when connections to the backend may persist for some time.

ip_hash: Requests are distributed based on the client's IP address. For IPv4 clients, the first three octets are used as the key for deciding which server handles the request (IPv6 addresses are used whole). As a result, a client tends to be served by the same server each time, which helps with session consistency.

hash: This algorithm is mainly used with Memcached proxying. Servers are chosen based on the value of an arbitrary, user-provided hash key, which can be text, variables, or a combination. It is the only balancing method that requires the user to provide data: the key to use for the hash.
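To get a feel for how these four strategies differ, here is a simplified Python sketch of each. The helper names (`RoundRobin`, `least_conn`, `ip_hash`, `key_hash`) are hypothetical, weights and failed servers are ignored, and `zlib.crc32` is an assumed stand-in hash, not the exact hash nginx uses internally.

```python
import itertools
import zlib
from typing import Dict, List


class RoundRobin:
    """Default strategy: hand out servers strictly in turn."""

    def __init__(self, servers: List[str]) -> None:
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


def least_conn(active: Dict[str, int]) -> str:
    """least_conn: the server with the fewest active connections wins.

    Ties go to the server listed first (a simplification).
    """
    return min(active, key=active.get)


def ip_hash(servers: List[str], client_ip: str) -> str:
    """ip_hash: key on the first three octets of an IPv4 client address."""
    key = ".".join(client_ip.split(".")[:3])
    return servers[zlib.crc32(key.encode()) % len(servers)]


def key_hash(servers: List[str], key: str) -> str:
    """hash: the chosen server depends only on a user-supplied key."""
    return servers[zlib.crc32(key.encode()) % len(servers)]


servers = ["host1", "host2", "host3"]
rr = RoundRobin(servers)
print([rr.pick() for _ in range(4)])  # ['host1', 'host2', 'host3', 'host1']
print(least_conn({"host1": 4, "host2": 1, "host3": 7}))  # host2
# Clients in the same /24 network land on the same server.
print(ip_hash(servers, "203.0.113.10") == ip_hash(servers, "203.0.113.99"))  # True
print(key_hash(servers, "/images/logo.png"))  # same server every time for this key
```

The trade-off shows up directly: round robin and least_conn spread load but don't keep a client on one server, while ip_hash and hash give stickiness at the cost of possibly uneven load.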

When set up for load balancing, the configuration may look like this.

```nginx
# http context
upstream backend_hosts {
    least_conn;

    server host1.example.com;
    server host2.example.com;
    server host3.example.com;
}

# next we set up our server
server {
    listen 80;
    server_name example.com;

    location /proxy-me {
        proxy_pass http://backend_hosts;
    }
}
```

We can even add weights to specific hosts so that they handle proportionally more connections than the rest. Here host1 gets weight=3, while the other hosts keep the default weight of 1.

```nginx
# ...
upstream backend_hosts {
    least_conn;

    server host1.example.com weight=3;
    server host2.example.com;
    server host3.example.com;
}
# ...
```
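To see how a weight of 3 plays out, here is a simplified sketch of the "smooth" weighted round-robin scheme nginx uses for its default round-robin balancing. It ignores failed servers and the effective-weight adjustments nginx makes, and `smooth_weighted_rr` is a hypothetical name. Note that the snippet above pairs the weight with least_conn, where nginx instead compares each server's active connection count relative to its weight, so the exact order would differ there.

```python
from typing import Dict, List


def smooth_weighted_rr(weights: Dict[str, int], picks: int) -> List[str]:
    """Simplified smooth weighted round robin.

    Each round, every server's running score grows by its weight; the
    highest score wins and is then reduced by the total weight, so heavier
    servers win more often without being picked in long bursts.
    """
    total = sum(weights.values())
    current = {name: 0 for name in weights}
    order = []
    for _ in range(picks):
        for name, weight in weights.items():
            current[name] += weight
        # max() keeps the first server on ties, like a strict ">" scan.
        best = max(current, key=current.get)
        current[best] -= total
        order.append(best)
    return order


print(smooth_weighted_rr({"host1": 3, "host2": 1, "host3": 1}, 5))
# ['host1', 'host2', 'host1', 'host3', 'host1']
```

Out of every five requests, host1 gets three, but they are interleaved with the other hosts rather than sent as one burst.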

Conclusion

There are many other things we can achieve with nginx; this is just the tip of the iceberg. Setting up web servers and proxy servers can be a bit of a hassle, but it doesn't have to be.

In the next post in this category, I'll explore how to get this up and running using Caddy, a web server that aims to make setting up web servers and proxy engines a whole lot easier.
