Airflow is one of the great orchestrator today. It is a highly scalable and available framework for orchestrating, and more importantly, it is developed in Python. Therefore, developers can develop in Python based on the various operators provided by Airflow. In addition, the Airflow community is quite active, and many operators are constantly added to the ecosystem.

These advantages make Airflow the preferred choice for data engineers working on ETL or ELT, although there are many other frameworks for orchestrating workflows, such as Argo workflow, but for engineers who rely heavily on Python development, Airflow is much easier to harness and maintain.

But Airflow is not without drawbacks, one of the biggest problems is it is a monolith. When workflows are continuously added, this monolith will sooner or later become a big ball of mud. Another problem is that Airflow is a distributed framework and it is not easy to verify a complete workflow in local development. There are many tools that improve on Airflow's shortcomings, such as Dagster, but the fact of the monolith remains unresolved.

Although Airflow has been widely used in a diversity of data engineering domains, the role of the data domain has been further differentiated as the domain has become increasingly popular. In addition to the traditional Data Engineer, Data Analyst and ML Engineer, a new role has recently become more common and is called Analytical Engineer.

Let's briefly describe the responsibilities and capabilities of these roles.

- Data Engineers: are responsible for developing and managing the entire data pipeline lifecycle, i.e. they are the main developers of ETL and ELT. They are mainly proficient in distributed architectures, programming (mainly Python), and data storage and its declarative language (mainly SQL).

- Data Analyst: End-user of data, using already structured data for analysis in various business situations. Very good at using SQL and various data visualization tools.

- ML Engineer: This role is interesting and has a different position in each organization, but in general, work related to machine learning, such as tagging data, data cleansing, data normalization will be involved, so there are opportunities to develop ETL/ELT, but the main responsibility is still the framework and model of machine learning, of course, is mainly in Python.

So, what is the position of an Analytical Engineer?

They are also responsible for managing the lifecycle of the data pipeline, but the biggest difference between them and data engineers is their lack of the concept of distributed architecture and the ability to develop programs.

Well, how to develop a data pipeline without programming? Thanks to the various tools in the data ecosystem, especially dbt, even if you don't know programming, you can still implement data pipelines as long as you are familiar with SQL.

The emergence of such a role brings a new perspective into the data world.

Traditionally, in an Airflow-based data pipeline, although most of the business logic is made up of SQL, there is a lot of code that must surround the SQL in order to make it work. For example

- the declaration of the DAG.

- database connections, including credentials, connection pools, etc.

- implementation of SQL calls through PythonOperator or the corresponding database operator.

- defining the upstream and downstream dependencies of the DAG

In Airflow, all these must be developed in Python, but it is undoubtedly a big challenge for those who do not know how to program.

Decomposing the data pipeline

It's time to decompose Airflow, but before we talk about decomposition, let's talk about why we need Airflow still instead of replacing it.

Airflow is a competent orchestrator, i.e., workflow manager. Have you wondered before

What exactly is an orchestrator?

Let me illustrate with a simple example.

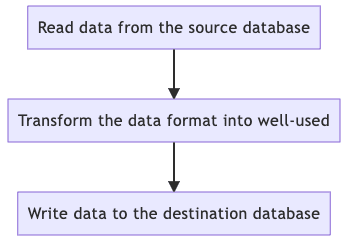

From the above diagram, this is a typical ETL, the following tasks cannot be executed until the previous task is done, and there is a dependency relationship between all tasks, which is a workflow. The purpose of the orchestrator is to ensure that each task can follow the instructions, one after the other.

What if the previous job fails? Then it automatically retries. The orchestrator will make sure that the entire workflow follows the direction you have planned. If it does fail, it can also send a notification to inform the developer something wrong.

From the above description, we know it cannot be solved by replacing Airflow, although it is feasible to move all the business logic from Airflow to dbt. If we put all the processing into dbt, we will immediately encounter a problem: what should we do if something goes wrong after executing dbt run?

Do we execute dbt run again?

A huge package of retries has several issues as follows.

- It can take a long time

- The development must be done with extra care, all models must be idempotence.

- The cost of accessing the database and the cost of network transmission are not low when the volume of data is high.

Therefore, decomposing Airflow seems to be a more reliable solution.

The tasks can be delivered to dbt are done by dbt, but the whole workflow is still under Airflow's control.

Oops, that's a loop, isn't it? We still need Airflow and we still need programming. No, not really, we can still do complete development on dbt, we just need a mechanism to enable Airflow to understand the model composition and relationships within dbt, and then Airflow can trigger dbt.

Back to the topic

What we want to do is exactly as the title is orchestrating DBT with Airflow.

In fact, we have surveyed many solutions in this process, all of which are not ideal. But finally, we found a relatively reliable solution.

Here is the reference we found, which is a trilogy and similar to the problem we are trying to solve.

- Building a Scalable Analytics Architecture With Airflow and dbt

- Building a Scalable Analytics Architecture With Airflow and dbt: Part 2

- Building a Scalable Analytics Architecture With Airflow and dbt: Part 3

Let me summarize briefly.

First, we still use dbt for complete development, which also solves the problem that Airflow is not good at local development.

Then, the CI/CD pipeline is triggered to execute dbt compile when we commit the dbt-related code into the version control system, in order to get the artifact manifest.json under the target folder.

With manifest.json, we can get the dbt model dependencies by reading the file in Airflow, then we only need to write the basic conversion framework, and then we can call the dbt corresponding models through Airflow's BashOperator.

The whole development process can be dbt-based, and take full advantage of Airflow's workflow management capabilities.

Conslusion

Decomposing monoliths is the trend of complex software development nowadays, just like the rise of microservices architecture, there will be a need for decomposing in the big data area.

However, in the big data area, decomposition is not just a clear cut of each domain based on domain-driven development. After all, when doing data analysis, we always want to have a centralized data warehouse.

Therefore, the data engineer's approach to decomposition will be different from the software engineer's. In this case, although we are decomposing Airflow, we still have a new dbt as a monolith, except Airflow calls dbt by model.

The decomposition is done at the level of the model and the execution period, which is a new attempt. I feel this concept can also be introduced into software development.

There is also a decomposition approach that is introduced from microservices architecture into the big data area, i.e. data mesh. But this article is already too long, let's save the data mesh for the future.

Top comments (0)