This post is about Artificial Neural Networks. Computer Science != Biology.

Summary

- What is a Neural Network?

- What are Neural Networks used for?

- Structure of a Neural Network

- How Neural Networks work

- How to improve the learning process

What is a Neural Network?

A Neural Network is a computational model inspired by biological neural networks, like yours and mine.

A key concept here is that these models learn from examples (inputs) instead of being programmed with task-specific rules.

What are they used for?

- Image and voice recognition.

- Text generation.

- Genetic analysis.

- Language translation.

- Autonomous driving.

- Fraud prevention.

Structure of a Neural Network

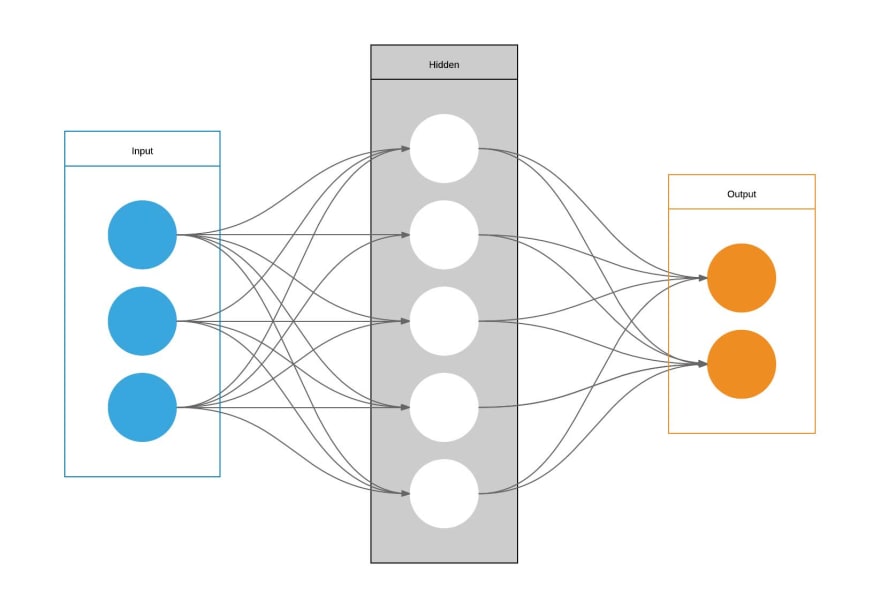

Different layers

- Input

- Hidden (interconnected)

- Output

Each layer has a specific number of neurons. Every neuron connects to neurons in a different layer (usually the next one); it can’t connect to a neuron in the same layer unless you use a Recurrent Neural Network.
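To make the layer structure concrete, here is a minimal Python sketch, assuming a fully connected feedforward network (every neuron linked to every neuron in the next layer) with made-up sizes of 4 input, 3 hidden and 2 output neurons. The function name `build_network` is hypothetical, and biases are left out for brevity. The weights are stored as one matrix per pair of consecutive layers, so there is simply no place to store a connection between two neurons of the same layer.

```python
import random


def build_network(layer_sizes, seed=0):
    """Return one weight matrix per pair of consecutive layers.

    weights[k][j][i] is the weight of the connection from neuron i in
    layer k to neuron j in layer k + 1. Neurons in the same layer get
    no weights between them, which is what makes this a feedforward
    (not recurrent) network.
    """
    rng = random.Random(seed)
    weights = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        matrix = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        weights.append(matrix)
    return weights


# Hypothetical sizes: 4 input neurons, 3 hidden, 2 output.
network = build_network([4, 3, 2])
for k, matrix in enumerate(network):
    print(f"layer {k} -> layer {k + 1}: {len(matrix)} x {len(matrix[0])} weights")

total = sum(len(m) * len(m[0]) for m in network)
print("connections:", total)  # 4*3 + 3*2 = 18
```

The number of connections grows with the product of neighbouring layer sizes, so adding neurons quickly adds weights to learn.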

How Neural Networks work

Explaining this is not a simple task.

First of all, your Neural Network must “learn”. In other words, you must train it.

How do you train a Neural Network?

Well, you pass information that has already been processed (labeled) to the input layer. This is easier to understand with an example.

Let’s say you pass 500 cat pictures to the input layer, each tagged ‘cat pictures’, so the Neural Network knows that those are cat pictures. Then you start passing it untagged cat pictures, and the network will tag them on its own. You can also pass it car pictures, bird pictures, a nonsense picture, whatever, and your network should not tag them as ‘cat pictures’.

If it tags some images wrong, you can tell the network about its mistakes, and it will learn from them.

You can repeat this process as many times as you need until you’re happy with how your Neural Network performs, and there you have it: a trained Neural Network, ready to work.
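The “tag, check, correct, repeat” loop above can be sketched with the simplest possible network: a single artificial neuron trained with the classic perceptron learning rule. This is a swapped-in textbook technique, not how large image networks are trained (they use backpropagation), and the data is entirely hypothetical: 4-pixel black-and-white “pictures” tagged as “cat” (1) when the first two pixels are on. The names `predict` and `train` are illustrative.

```python
from itertools import product


def predict(weights, bias, pixels):
    """Return 1 ("cat") if the weighted sum is positive, else 0."""
    total = sum(w * p for w, p in zip(weights, pixels)) + bias
    return 1 if total > 0 else 0


def train(examples, n_inputs, learning_rate=1.0, max_epochs=1000):
    """Perceptron rule: on every wrong answer, nudge the weights toward the right one."""
    weights = [0.0] * n_inputs
    bias = 0.0
    for epoch in range(max_epochs):
        mistakes = 0
        for pixels, label in examples:
            error = label - predict(weights, bias, pixels)  # 0 if right, +1 or -1 if wrong
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * p for w, p in zip(weights, pixels)]
                bias += learning_rate * error
        if mistakes == 0:  # a full pass with no mistakes: training is done
            return weights, bias, epoch + 1
    return weights, bias, max_epochs


# Hypothetical toy "pictures": 4 black/white pixels each.
# Made-up tagging rule: it's a "cat" when the first two pixels are both on.
examples = [(list(p), int(p[0] == 1 and p[1] == 1)) for p in product([0, 1], repeat=4)]

weights, bias, epochs = train(examples, n_inputs=4)
correct = sum(predict(weights, bias, x) == y for x, y in examples)
print(f"{correct}/{len(examples)} correct after {epochs} epochs")
```

Because this made-up rule is linearly separable, the perceptron rule is guaranteed to stop making mistakes on these examples eventually; real pictures need many more neurons and layers.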

Now use it! Pass some untagged pictures to the input layer and see how well it does.

If the output is not what you expected, you should train it more or improve the learning process. I’ll talk more about this below.

But now, let’s get nerdy.

Your Neural Network works because it identifies a pattern in the ‘cat pictures’ images and starts to “memorize” that pattern. Somehow it learns that this particular set of pixels (a bunch of ones and zeros) belongs under the ‘cat pictures’ tag, and then it can tag cat images without our help.

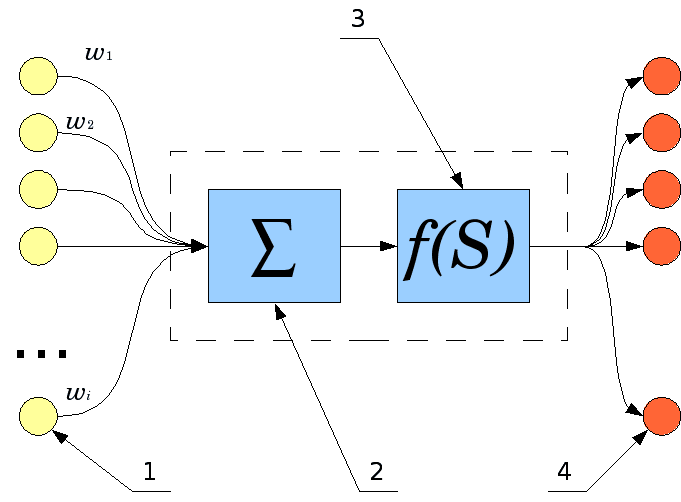

Every neuron receives these inputs, multiplies each one by a weight, and sums the results (usually adding a bias). Before the result goes to the next layer, it passes through an activation function, which decides how strongly the neuron “fires”, in effect telling the next neurons how important this data is.

Inputs coming in, sum, activation function, outputs coming out.
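Written as a formula, a neuron computes output = activation(w₁x₁ + w₂x₂ + … + wₙxₙ + b), where the x values are its inputs, the w values its weights and b its bias. Here is a small Python sketch, assuming the sigmoid function as the activation (one common choice among several, such as ReLU or tanh) and hand-picked hypothetical numbers so the arithmetic can be checked by hand.

```python
import math


def sigmoid(z):
    """Squash any number into (0, 1): how strongly the neuron "fires"."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of the inputs plus a bias, then an activation."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(weighted_sum)


# Hypothetical numbers, chosen only to make the arithmetic easy to follow.
inputs = [1.0, 0.0, 1.0]
weights = [0.5, -1.0, 1.5]
bias = -1.0
# weighted sum = 1*0.5 + 0*(-1.0) + 1*1.5 - 1.0 = 1.0
print(round(neuron(inputs, weights, bias), 3))  # 0.731
```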

This is how neurons process data. But wait, there’s more!

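During training, information also flows backward. After the network produces an output, the error (how far that output is from the correct tag) is sent from the output layer back toward the input layer, and every weight along the way is adjusted a little to shrink that error.

This backward pass is known as backpropagation, and it is how the network actually learns from its mistakes. Networks whose outputs feed back into earlier layers (or into the same layer) while processing data are a different design, the Recurrent Neural Networks mentioned above; whether that kind of feedback helps you or not depends on the problem you want to solve with your network.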
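Here is a minimal backpropagation sketch in plain Python, assuming a tiny network of 3 inputs, 2 hidden neurons and 1 output neuron, sigmoid activations, no biases, squared error as the measure of “how wrong” the output is, and plain gradient descent with a hypothetical learning rate of 0.5. All names and numbers are illustrative. The forward pass computes an output, the error is measured at the output neuron, pushed back to the hidden neurons through the weights that connect them, and every weight is nudged against its gradient.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w1, w2):
    """Input -> hidden layer -> single output neuron (biases omitted for brevity)."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w1]
    output = sigmoid(sum(w * h for w, h in zip(w2, hidden)))
    return hidden, output


def loss(x, target, w1, w2):
    """Squared error: 0.5 * (output - target)^2."""
    _, output = forward(x, w1, w2)
    return 0.5 * (output - target) ** 2


def backprop_step(x, target, w1, w2, learning_rate=0.5):
    """One forward pass, then send the error backward and adjust every weight."""
    hidden, output = forward(x, w1, w2)
    # Error signal at the output neuron: derivative of the squared error
    # times the sigmoid's derivative, output * (1 - output).
    delta_out = (output - target) * output * (1 - output)
    # Push that error back to each hidden neuron through its outgoing weight
    # (using the weights from before this update).
    delta_hidden = [delta_out * w2[j] * hidden[j] * (1 - hidden[j]) for j in range(len(hidden))]
    # Gradient descent: move each weight a small step against its gradient.
    new_w2 = [w2[j] - learning_rate * delta_out * hidden[j] for j in range(len(w2))]
    new_w1 = [[w1[j][i] - learning_rate * delta_hidden[j] * x[i] for i in range(len(x))]
              for j in range(len(w1))]
    return new_w1, new_w2


rng = random.Random(1)
w1 = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(2)]  # 3 inputs -> 2 hidden
w2 = [rng.uniform(-1, 1) for _ in range(2)]                      # 2 hidden -> 1 output
x, target = [1.0, 0.0, 1.0], 1.0  # one hypothetical tagged example ("cat" = 1)

before = loss(x, target, w1, w2)
for _ in range(100):
    w1, w2 = backprop_step(x, target, w1, w2)
after = loss(x, target, w1, w2)
print(f"loss before: {before:.4f}, after: {after:.4f}")
```

Running it should print a smaller loss after the updates than before. Real libraries compute these gradients automatically, but the backward flow of the error is the same idea.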

Also, a friendly piece of advice: size matters. If your network is too small, it won’t be able to capture the patterns in the data (underfitting); if it’s too big, it may memorize the training examples instead of learning patterns that generalize (overfitting). Either way, its outputs on new data will be wrong, so you need to find the right size for your network.

In most cases, you’ll find it through trial and error, until you build your intuition.

How to improve the learning process

Through regularization.

This is a broad concept that includes a bunch of techniques. The most common ones are weight regularization, early stopping, learning rate decay, data augmentation, and dropout.

These are all measures that mainly help prevent overfitting (used too aggressively, they can cause underfitting instead).
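Here is a rough Python sketch of the five techniques named above, written as small standalone helpers. They are generic textbook versions with arbitrary default parameters, not code from any particular library, and every function name is hypothetical; L2 is assumed as the form of weight regularization and an inverse-time schedule as the form of learning rate decay. In a real training loop, the penalty is added to the loss, dropout is applied to hidden activations only while training, the learning rate shrinks each epoch, augmented copies of images join the training set, and early stopping watches the loss on a validation set the network never trains on.

```python
import random


def l2_penalty(weights, strength=0.01):
    """Weight regularization (L2): extra loss that grows with the squared weights,
    nudging the network toward smaller, simpler weights."""
    return strength * sum(w * w for w in weights)


def dropout(activations, rate, rng):
    """Inverted dropout (training only): zero each activation with probability `rate`
    and scale the survivors by 1 / (1 - rate) to keep the expected value unchanged."""
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def decayed_learning_rate(initial_rate, decay, epoch):
    """Learning rate decay (inverse-time schedule): take smaller steps as training goes on."""
    return initial_rate / (1.0 + decay * epoch)


def flip_horizontal(image):
    """Data augmentation: a mirrored cat picture is still a cat picture."""
    return [list(reversed(row)) for row in image]


def early_stopping_epoch(val_losses, patience=3):
    """Early stopping: keep the epoch with the lowest validation loss and stop
    once `patience` epochs in a row fail to beat it."""
    best_epoch, best_loss = 0, float("inf")
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss
        elif epoch - best_epoch >= patience:
            break
    return best_epoch


rng = random.Random(0)
print(l2_penalty([1.0, -2.0, 3.0]))                       # 0.01 * (1 + 4 + 9)
print(dropout([0.2, 0.4, 0.6, 0.8], rate=0.5, rng=rng))   # each value is 0.0 or doubled
print(decayed_learning_rate(0.1, decay=0.5, epoch=2))      # 0.1 / 2
print(flip_horizontal([[1, 0, 0], [1, 1, 0]]))             # [[0, 0, 1], [0, 1, 1]]
print(early_stopping_epoch([0.9, 0.7, 0.6, 0.65, 0.66, 0.7, 0.5]))  # 2
```

The early-stopping call returns 2: the validation loss fails to improve for three epochs in a row, so training stops before ever reaching the 0.5 at the end. A longer patience trades extra training time for a better chance of catching such late improvements.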

Do you have any questions? Do you think that I may be missing something? Write it down in the comments!

See ya!
