When you want to analyze data stored in MongoDB, you can use MongoDB’s powerful aggregation framework to do so. Today I’ll give you a high level overview of the aggregation framework and show you how to use it.

If you’re just joining us in this Quick Start with MongoDB and Node.js series, we’re just over halfway through. So far, we’ve covered how to connect to MongoDB and perform each of the CRUD—create, read, update, and delete—operations. The code we write today will use the same structure as the code we built in the first post in the series, so, if you have any questions about how to get started or how the code is structured, head back to that first post.

And, with that, let’s dive into the aggregation framework!

Get started with an M0 cluster on Atlas today. It's free forever, and it’s the easiest way to try out the steps in this blog series.

What is the Aggregation Framework?

The aggregation framework allows you to analyze your data in real time. Using the framework, you can create an aggregation pipeline that consists of one or more stages. Each stage transforms the documents and passes the output to the next stage.

If you’re familiar with the Linux pipe |, you can think of the aggregation pipeline as a very similar concept. Just as output from one command is passed as input to the next command when you use piping, output from one stage is passed as input to the next stage when you use the aggregation pipeline.

The aggregation framework has a variety of stages available for you to use. Today, we’ll discuss the basics of how to use $match, $group, $sort, and $limit. Note that the aggregation framework has many other powerful stages including $count, $geoNear, $graphLookup, $project, $unwind, and others.

How Do You Use the Aggregation Framework?

I’m hoping to visit the beautiful city of Sydney, Australia soon. Sydney is a huge city with many suburbs, and I’m not sure where to start looking for a cheap rental. I want to know which Sydney suburbs have, on average, the cheapest one bedroom Airbnb listings.

I could write a query to pull all of the one bedroom listings in the Sydney area and then write a script to group the listings by suburb and calculate the average price per suburb. Or I could write a single command using the aggregation pipeline. Let’s use the aggregation pipeline.

There are a variety of ways you can create aggregation pipelines. You can write them manually in a code editor or create them visually inside of MongoDB Atlas or MongoDB Compass. In general, I don’t recommend writing pipelines manually as it’s much easier to understand what your pipeline is doing and spot errors when you use a visual editor. Since you’re already setup to use MongoDB Atlas for this blog series, we’ll create our aggregation pipeline in Atlas.

Navigate to the Aggregation Pipeline Builder in Atlas

The first thing we need to do is navigate to the Aggregation Pipeline Builder in Atlas.

- Navigate to Atlas and authenticate if you’re not already authenticated.

- In the CONTEXT menu in the upper-left corner, select the project you are using for this Quick Start series.

- In the right pane for your cluster, click COLLECTIONS.

- In the list of databases and collections that appears, select listingsAndReviews.

- In the right pane, select the Aggregation view to open the Aggregation Pipeline Builder.

The Aggregation Pipeline Builder provides you with a visual representation of your aggregation pipeline. Each stage is represented by a new row. You can put the code for each stage on the left side of a row, and the Aggregation Pipeline Builder will automatically provide a live sample of results for that stage on the right side of the row.

Build an Aggregation Pipeline

Now we are ready to build an aggregation pipeline.

Add a $match Stage

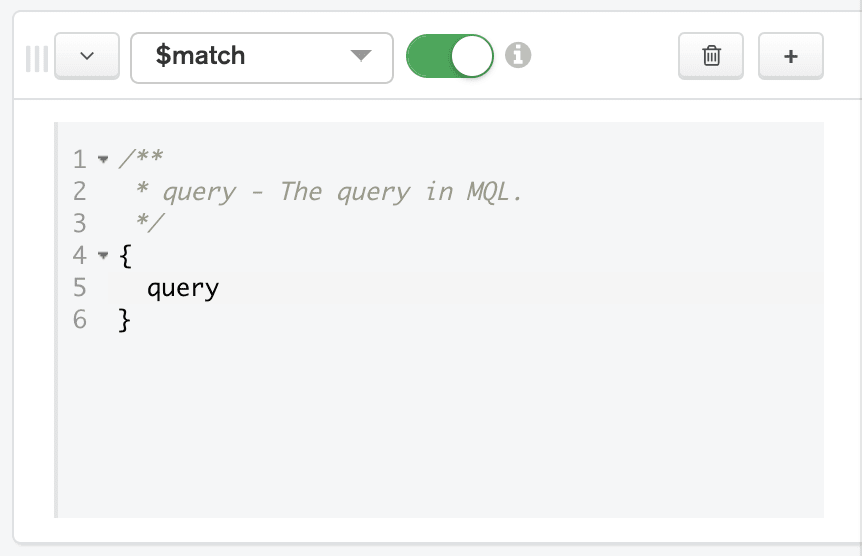

Let’s begin by narrowing down the documents in our pipeline to one bedroom listings in the Sydney, Australia market where the room type is Entire home/apt. We can do so by using the $match stage.

-

On the row representing the first stage of the pipeline, choose $match in the Select… box. The Aggregation Pipeline Builder automatically provides sample code for how to use the $match operator in the code box for the stage.

-

Now we can input a query in the code box. The query syntax for

$matchis the same as thefindOne()syntax that we used in a previous post. Replace the code in the $match stage’s code box with the following:

{ bedrooms: 1, "address.country": "Australia", "address.market": "Sydney", "address.suburb": { $exists: 1, $ne: "" }, room_type: "Entire home/apt" }Note that we will be using the

address.suburbfield later in the pipeline, so we are filtering out documents whereaddress.suburbdoes not exist or is represented by an empty string.

The Aggregation Pipeline Builder automatically updates the output on the right side of the row to show a sample of 20 documents that will be included in the results after the $match stage is executed.

Add a $group Stage

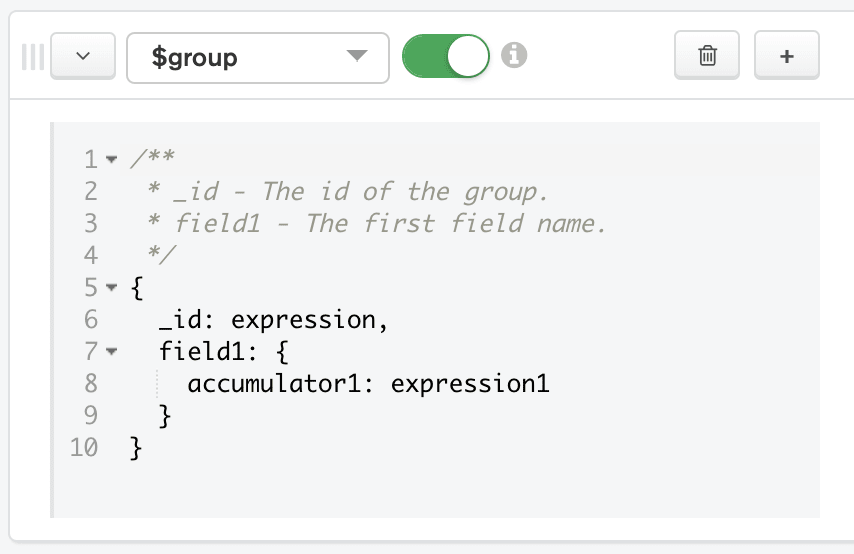

Now that we have narrowed our documents down to one bedroom listings in the Sydney, Australia market, we are ready to group them by suburb. We can do so by using the $group stage.

- Click ADD STAGE. A new stage appears in the pipeline.

-

On the row representing the new stage of the pipeline, choose $group in the Select… box. The Aggregation Pipeline Builder automatically provides sample code for how to use the

$groupoperator in the code box for the stage. -

Now we can input code for the

$groupstage. We will provide an_id, which is the field that the Aggregation Framework will use to create our groups. In this case, we will use$address.suburbas our_id.Inside of the $group stage, we will also create a new field namedaveragePrice. We can use the $avg aggregation pipeline operator to calculate the average price for each suburb. Replace the code in the $group stage’s code box with the following:

{ _id: "$address.suburb", averagePrice: { "$avg": "$price" } }

The Aggregation Pipeline Builder automatically updates the output on the right side of the row to show a sample of 20 documents that will be included in the results after the $group stage is executed. Note that the documents have been transformed. Instead of having a document for each listing, we now have a document for each suburb. The suburb documents have only two fields: _id (the name of the suburb) and averagePrice.

Add a $sort Stage

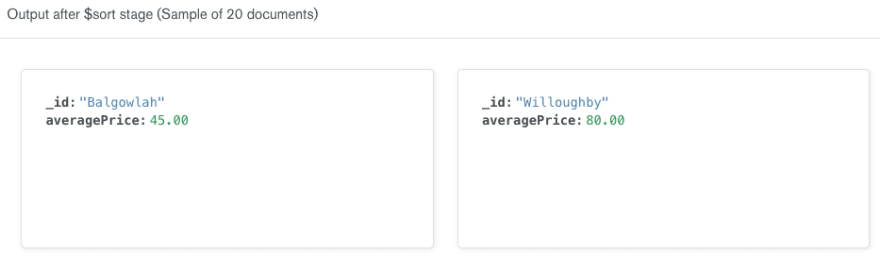

Now that we have the average prices for suburbs in the Sydney, Australia market, we are ready to sort them to discover which are the least expensive. We can do so by using the $sort stage.

- Click ADD STAGE. A new stage appears in the pipeline.

-

On the row representing the new stage of the pipeline, choose $sort in the Select… box. The Aggregation Pipeline Builder automatically provides sample code for how to use the

$sortoperator in the code box for the stage. -

Now we are ready to input code for the

$sortstage. We will sort on the$averagePricefield we created in the previous stage. We will indicate we want to sort in ascending order by passing1. Replace the code in the $sort stage’s code box with the following:

{ "averagePrice": 1 }

The Aggregation Pipeline Builder automatically updates the output on the right side of the row to show a sample of 20 documents that will be included in the results after the $sort stage is executed. Note that the documents have the same shape as the documents in the previous stage; the documents are simply sorted from least to most expensive.

Add a $limit Stage

Now we have the average prices for suburbs in the Sydney, Australia market sorted from least to most expensive. We may not want to work with all of the suburb documents in our application. Instead, we may want to limit our results to the ten least expensive suburbs. We can do so by using the $limit stage.

- Click ADD STAGE. A new stage appears in the pipeline.

-

On the row representing the new stage of the pipeline, choose $limit in the Select… box. The Aggregation Pipeline Builder automatically provides sample code for how to use the

$limitoperator in the code box for the stage. -

Now we are ready to input code for the

$limitstage. Let’s limit our results to ten documents. Replace the code in the $limit stage’s code box with the following:

10

The Aggregation Pipeline Builder automatically updates the output on the right side of the row to show a sample of ten documents that will be included in the results after the $limit stage is executed. Note that the documents have the same shape as the documents in the previous stage; we’ve simply limited the number of results to ten.

Execute an Aggregation Pipeline in Node.js

Now that we have built an aggregation pipeline, let’s execute it from inside of a Node.js script.

Get a Copy of the Node.js Template

To make following along with this blog post easier, I’ve created a starter template for a Node.js script that accesses an Atlas cluster.

- Download a copy of template.js.

- Open template.js in your favorite code editor.

- Update the Connection URI to point to your Atlas cluster. If you’re not sure how to do that, refer back to the first post in this series.

- Save the file as

aggregation.js.

You can run this file by executing node aggregation.js in your shell. At this point, the file simply opens and closes a connection to your Atlas cluster, so no output is expected. If you see DeprecationWarnings, you can ignore them for the purposes of this post.

Create a Function

Let’s create a function whose job it is to print the cheapest suburbs for a given market.

-

Continuing to work in aggregation.js, create an asynchronous function named

printCheapestSuburbsthat accepts a connected MongoClient, a country, a market, and the maximum number of results to print as parameters.

async function printCheapestSuburbs(client, country, market, maxNumberToPrint) { } -

We can execute a pipeline in Node.js by calling Collection’s aggregate(). Paste the following in your new function:

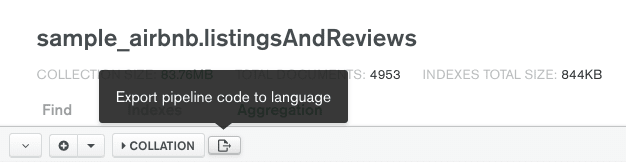

const pipeline = []; const aggCursor = client.db("sample_airbnb").collection("listingsAndReviews") .aggregate(pipeline); aggregate()has one required param: a pipeline of type object. We could manually create the pipeline here. Since we’ve already created a pipeline inside of Atlas, let’s export the pipeline from there. Return to the Aggregation Pipeline Builder in Atlas. Click the Export pipeline code to language button.

The Export Pipeline To Language dialog appears. In the Export Pipleine To selection box, choose NODE.

In the Node pane on the right side of the dialog, click the copy button.

-

Return to your code editor and paste the pipeline in place of the empty object currently assigned to the

pipelineconstant.

const pipeline = [ { '$match': { 'bedrooms': 1, 'address.country': 'Australia', 'address.market': 'Sydney', 'address.suburb': { '$exists': 1, '$ne': '' }, 'room_type': 'Entire home/apt' } }, { '$group': { '_id': '$address.suburb', 'averagePrice': { '$avg': '$price' } } }, { '$sort': { 'averagePrice': 1 } }, { '$limit': 10 } ]; -

This pipeline would work fine as written. However, it is hardcoded to search for ten results in the Sydney, Australia market. We should update this pipeline to be more generic. Make the following replacements in the pipeline definition:

- Replace

’Australia’withcountry - Replace

’Sydney’withmarket - Replace

10withmaxNumberToPrint

- Replace

-

aggregate()will return an AggregationCursor, which we are storing in theaggCursorconstant. An AggregationCursor allows traversal over the aggregation pipeline results. We can use AggregationCursor’s forEach() to iterate over the results. Paste the following insideprintCheapestSuburbs()below the definition ofaggCursor.

await aggCursor.forEach(airbnbListing => { console.log(`${airbnbListing._id}: ${airbnbListing.averagePrice}`); });

Call the Function

Now we are ready to call our function to print the ten cheapest suburbs in the Sydney, Australia market. Add the following call in the main() function beneath the comment that says Make the appropriate DB calls.

await printCheapestSuburbs(client, "Australia", "Sydney", 10);

Running aggregation.js results in the following output:

Balgowlah: 45.00

Willoughby: 80.00

Marrickville: 94.50

St Peters: 100.00

Redfern: 101.00

Cronulla: 109.00

Bellevue Hill: 109.50

Kingsgrove: 112.00

Coogee: 115.00

Neutral Bay: 119.00

Now I know what suburbs to begin searching as I prepare for my trip to Sydney, Australia.

Wrapping Up

The aggregation framework is an incredibly powerful way to analyze your data. Creating pipelines may seem a little intimidating at first, but it’s worth the investment. The aggregation framework can get results to your end-users faster and save you from a lot of scripting.

Today, we only scratched the surface of the aggregation framework. I highly recommend MongoDB University’s free course specifically on the aggregation framework: M121: The MongoDB Aggregation Framework. The course has a more thorough explanation of how the aggregation framework works and provides detail on how to use the various pipeline stages.

This post included many code snippets that built on code written in the first post of this MongoDB and Node.js Quick Start series. To get a full copy of the code used in today’s post, visit the Node.js Quick Start GitHub Repo.

Be on the lookout for the next post in this series where we’ll discuss change streams.

Series Versions

The examples in this article were created with the following application versions:

| Component | Version used |

|---|---|

| MongoDB | 4.0 |

| MongoDB Node.js Driver | 3.3.2 |

| Node.js | 10.16.3 |

All posts in the Quick Start: Node.js and MongoDB series:

- How to connect to a MongoDB database using Node.js

- How to create MongoDB documents using Node.js

- How to read MongoDB documents using Node.js

- How to update MongoDB documents using Node.js

- How to delete MongoDB documents using Node.js

- Video: How to perform the CRUD operations using MongoDB & Node.js

- How to analyze your data using MongoDB's Aggregation Framework and Node.js (this post)

- How to implement transactions using Node.js

- How to react to database changes with change streams and triggers

Top comments (2)

awesome article series. keep up the good work. 🙏

Thank you!