Docker containers (also known as OCI containers) are now one of the most popular ways to build and ship applications, whether through plain Docker, Docker Compose, Kubernetes, or hosted services like Heroku and Google Cloud Run. The ease of building and deploying containers made adopting them a no-brainer for developers and ops teams.

But what about IoT devices? In this post, we will look into what's good about Docker containers on the edge and things to watch out for.

Are Docker containers ready for IoT devices?

Yes. The devices themselves are now powerful enough to run a regular Linux distro, have a Docker engine installed, and run your containers without much overhead. Many devices out there, even those with dated hardware, are more powerful than the small VMs provided by major cloud services.

Synpse has been deployed on large fleets of devices comparable to the Raspberry Pi 2 (much weaker than the current generation), and we haven't observed any performance-related issues so far.

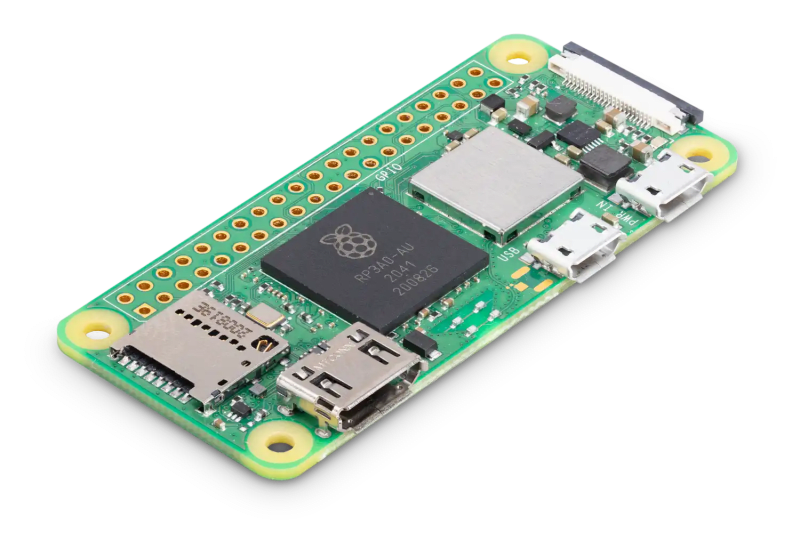

For example, the Raspberry Pi Zero 2, priced as low as $15, offers a quad-core 64-bit ARM Cortex-A53 processor clocked at 1GHz and 512MB of SDRAM. A regular Raspberry Pi 4 or a Jetson Xavier provides considerably more computing power.

Software deployment and OTA updates

The biggest advantage of Docker is the ability to package the application the way you want and not worry about the host machine too much (you still need to think about the OS, but a lot less). Before writing software in Go, I used to write backend applications in Python. Application deployment was complicated, updates were brittle, and once in a while we would hit a dependency clash. Docker solved this by packaging the whole filesystem with the dependencies already installed: your packages and static files all live inside the image.

This way you can build the container, run automated tests against it, and then deploy it to the servers. It also paves the way for a simple rollback mechanism: if things go wrong, you can just start the older version of the Docker container.

For IoT products with tiny applications and fewer packages, the OTA update process is usually lightweight and simple: a new image is downloaded, the running container is stopped, and a new container is started from the new Docker image.
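The update sequence described above can be sketched as a list of Docker CLI calls. This is only an illustration under a few assumptions: the container name and image tags are made up, the commands are built but not executed, and a real updater would also need health checks and error handling.

```python
from typing import List


def ota_update_commands(name: str, new_image: str) -> List[List[str]]:
    """Return the Docker CLI calls for a pull / stop / start update, in order."""
    return [
        # 1. Download the new image while the old container keeps running.
        ["docker", "pull", new_image],
        # 2. Stop and remove the old container so its name is free again.
        ["docker", "stop", name],
        ["docker", "rm", name],
        # 3. Start a fresh container from the new image.
        ["docker", "run", "-d", "--name", name,
         "--restart", "unless-stopped", new_image],
    ]


def rollback_commands(name: str, previous_image: str) -> List[List[str]]:
    """A rollback is the same sequence pointed at the older image tag."""
    return ota_update_commands(name, previous_image)


if __name__ == "__main__":
    # "sensor-app" and the registry URL are hypothetical names.
    for cmd in ota_update_commands(
        "sensor-app", "registry.example.com/sensor-app:1.1.0"
    ):
        print(" ".join(cmd))
```

Pulling before stopping keeps downtime short, since the slow part (the download) happens while the old version is still serving.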

Preparing multi-arch (or at least arm) images

The main difference between x86/amd64 Docker images and the ones that you will probably need to deploy to IoT devices is their architecture. This can be a gotcha when trying to run your containers on a Raspberry Pi or some other device, as the errors are often cryptic (typically something like "exec format error").

Thankfully, Docker Hub and other registries support multi-arch images, where you can push x86, 32-bit arm, and 64-bit arm variants under a single tag. This greatly simplifies deployment, as the Docker daemon figures out on its own which variant to download. We have written an article on building multi-arch images.
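As a rough sketch of how this fits together, the snippet below maps the machine type a device reports to the matching Docker platform string and assembles a `docker buildx build` command for several platforms at once. The mapping covers only a few common values, and the image name in the usage note is hypothetical.

```python
from typing import List

# Common `uname -m` values and the Docker platform they correspond to.
MACHINE_TO_PLATFORM = {
    "x86_64": "linux/amd64",
    "aarch64": "linux/arm64",
    "armv7l": "linux/arm/v7",
    "armv6l": "linux/arm/v6",
}


def docker_platform(machine: str) -> str:
    """Translate a machine type (e.g. from platform.machine()) to a platform."""
    try:
        return MACHINE_TO_PLATFORM[machine]
    except KeyError:
        raise ValueError(f"no known Docker platform for {machine!r}")


def buildx_command(image: str, platforms: List[str]) -> List[str]:
    """Build and push one tag that contains a variant per platform."""
    return [
        "docker", "buildx", "build",
        "--platform", ",".join(platforms),
        "--tag", image,
        "--push", ".",
    ]
```

For example, `buildx_command("example/app:1.0", ["linux/amd64", "linux/arm64", "linux/arm/v7"])` yields a single build invocation that covers a typical mix of servers and Raspberry Pi devices.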

Persisting data

An application that performs AI tasks such as image processing, or that has a local database, needs to persist data. When people first start using Docker containers, storage can be confusing, because anything saved inside the container is lost when the container is recreated during an update. The way to persist data is to mount a volume from the device's filesystem into the container. You can read more about persistence in Docker here.
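A minimal sketch of such a mount, expressed as a helper that builds the `docker run` arguments. The paths, container name, and image in the usage note are assumptions for illustration only.

```python
from typing import Dict, List


def run_with_volumes(image: str, name: str, volumes: Dict[str, str]) -> List[str]:
    """Build a `docker run` command that bind-mounts host paths into the container.

    `volumes` maps a path on the device to the path the application sees.
    """
    cmd = ["docker", "run", "-d", "--name", name]
    for host_path, container_path in volumes.items():
        # Data written under container_path lands in host_path on the device,
        # so it survives the container being removed and recreated.
        cmd += ["--volume", f"{host_path}:{container_path}"]
    cmd.append(image)
    return cmd
```

Calling `run_with_volumes("example/app:1.0", "app", {"/data/app": "/var/lib/app"})` keeps everything the application writes to `/var/lib/app` in `/data/app` on the device across updates.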

Write/read-heavy directories and extending the device's filesystem lifetime

When preparing a device for an extended time in service, you need to think not only about updates but also about the longevity of its components. SD cards and built-in storage can last a long time, but there are ways to reduce the load on them.

If your application needs to write and read large amounts of temporary data, it's often good to also mount /dev/shm, which is backed by RAM rather than by the SD card, so those writes never touch flash storage. Keep in mind that anything stored there is lost on reboot. You can read more about shared memory here.
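One way to give a container RAM-backed scratch space is sketched below. It uses Docker's `--tmpfs` and `--shm-size` options rather than a bind mount of the host's /dev/shm, which is a substitution on my part; the directory and sizes are placeholders to tune for your device's memory.

```python
from typing import List, Optional


def run_with_ram_scratch(
    image: str,
    scratch_dir: str,
    scratch_mb: int,
    shm_mb: Optional[int] = None,
) -> List[str]:
    """Build a `docker run` command with RAM-backed scratch space."""
    cmd = [
        "docker", "run", "-d",
        # Mount a tmpfs at scratch_dir; data there lives in RAM only.
        "--tmpfs", f"{scratch_dir}:rw,size={scratch_mb}m",
    ]
    if shm_mb is not None:
        # Raise the container's own /dev/shm above Docker's 64 MB default.
        cmd += ["--shm-size", f"{shm_mb}m"]
    cmd.append(image)
    return cmd
```

Capping the size matters on small boards: a tmpfs without a limit can eat into the memory the application itself needs.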

Exposing hardware devices into the containers

Quite often you will need to use serial devices. Containerized applications can access them without any problems but you need to let Docker know that you want to expose these devices to your containers. On the Docker CLI it looks like this:

docker run -t -i --device=/dev/ttyACM0 ubuntu bash

Alternatively, in Synpse you can add the following into your application spec:

devices:
  - hostPath: /dev/ttyACM0
    containerPath: /dev/ttyACM0

Security

When deploying software to thousands of devices you need to think about "day two" operations. Even before releasing your application, it's crucial to plan and test both OS and application updates. How will you update the application? If the device is connected to the internet, what are the best methods to keep it secure even years later?

By reducing the number of packages that need to be installed on the device, you also drastically reduce the attack surface. For example, if you are deploying Node.js, Python, or Ruby applications, your device OS doesn't need to have the runtime installed and can be as bare-bones as possible. This helps a lot: whatever is not installed doesn't need to be updated.

You can also use tools to automatically scan your images for vulnerabilities:

- https://github.com/marketplace/actions/container-image-scan

- https://docs.docker.com/docker-hub/vulnerability-scanning/

Developer productivity

Another important aspect when deploying containerized applications to the edge is reusing the tools that are already well known to your developers and ops teams. By choosing Docker for the edge, you aren't introducing a new packaging system. You can also reuse your CI pipelines.

Summing up

If your hardware and OS allow it, it's almost always better to choose Docker containers for deploying your applications. Doing so can improve security, simplify the deployment process, and reduce the number of issues over a long time in operation. The important part then becomes a solid strategy for updating your containers.

OTA updates with Synpse

Synpse was built for managing large fleets of containers. It provides everything you might need to successfully deploy and run a large-scale operation:

- Declarative application deployment: store configuration in GitHub or any other source control management system

- Simple rollbacks

- GPU support

- Volume support

- SSH support with Ansible integration for OS updates

- Application logs for each device

- Metrics collection

You can try out Synpse for free (up to 5 devices). Check out our quick start.
