The title of this post might seem odd coming from a guy who helps run a site on Visual Regression Testing (and has given many talks on the subject).

But I really do believe it.

I'll explain why in just a moment, but first, a primer if you're not familiar with the term.

What is 'Visual Regression Testing'?

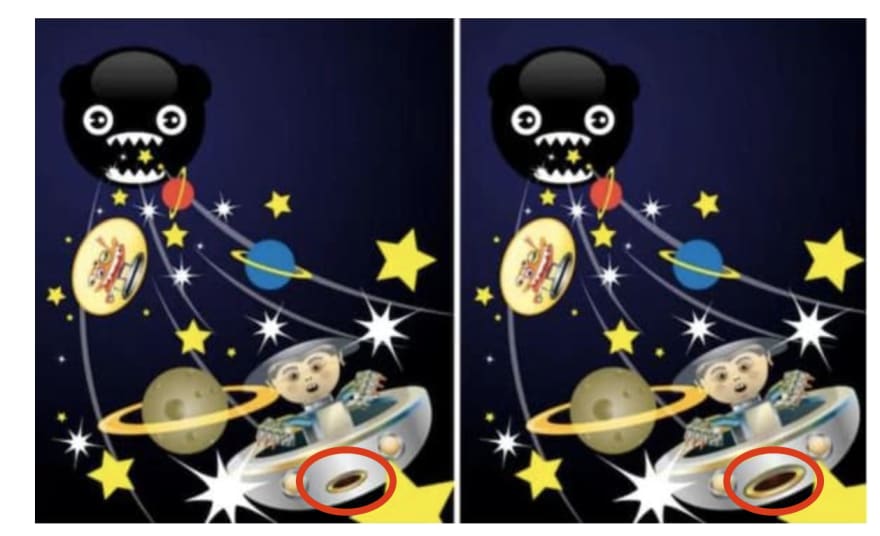

You know those "spot the difference" puzzles? The ones where you need to find five changes between two nearly identical pictures?

Testers do that sort of thing every day.

Anytime the CSS or HTML changes, we're stuck playing "spot the difference".

But we're really bad at it. Like, really bad. (It's how magicians make their magic work.)

Visual Regression Testing aims to help us here by having a computer detect the differences for us.

You start by storing a screenshot of the page in a good state. Then, after an update, you take a fresh screenshot and have the computer compare the two.

Any difference between these two images causes the test to fail. The computer is actually really good at this, in a really dumb way.
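Here's roughly what that comparison boils down to. This is a minimal sketch, not any particular library's implementation: it assumes screenshots have already been decoded into raw RGBA bytes, and the `Screenshot` type and function names are made up for illustration.

```typescript
// A screenshot reduced to its essentials: raw RGBA bytes, 4 per pixel.
// (Hypothetical type; real tools decode PNG files into something similar.)
interface Screenshot {
  width: number;
  height: number;
  pixels: Uint8Array; // length = width * height * 4
}

// Count the pixels whose RGBA bytes differ between the two screenshots.
function countChangedPixels(baseline: Screenshot, fresh: Screenshot): number {
  if (baseline.width !== fresh.width || baseline.height !== fresh.height) {
    throw new Error("Screenshots must have the same dimensions");
  }
  let changed = 0;
  for (let i = 0; i < baseline.pixels.length; i += 4) {
    const same =
      baseline.pixels[i] === fresh.pixels[i] &&
      baseline.pixels[i + 1] === fresh.pixels[i + 1] &&
      baseline.pixels[i + 2] === fresh.pixels[i + 2] &&
      baseline.pixels[i + 3] === fresh.pixels[i + 3];
    if (!same) changed++;
  }
  return changed;
}

// The "dumb" test: any changed pixel at all is a failure.
function naiveVisualTestPasses(baseline: Screenshot, fresh: Screenshot): boolean {
  return countChangedPixels(baseline, fresh) === 0;
}

// Helper for the example: an image filled with a single colour.
function solidImage(
  width: number,
  height: number,
  rgba: [number, number, number, number]
): Screenshot {
  const pixels = new Uint8Array(width * height * 4);
  for (let i = 0; i < pixels.length; i += 4) pixels.set(rgba, i);
  return { width, height, pixels };
}

const baselineShot = solidImage(10, 10, [255, 255, 255, 255]);
const freshShot = solidImage(10, 10, [255, 255, 255, 255]);
freshShot.pixels[0] = 254; // one channel of one pixel is off by one

console.log(naiveVisualTestPasses(baselineShot, baselineShot)); // true
console.log(naiveVisualTestPasses(baselineShot, freshShot)); // false
```

One channel of one pixel shifts by a single step, which no human would ever notice, and the test fails.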

Why it's stupid

Basic image comparison is dumb.

It just can't understand the subtleties of design, and how that design can change without breaking. This leads to a ton of false positives.

Because web pages change. A lot.

The content is always being updated; new features are added, old features removed. The design is always being tweaked.

And don't even get me started on all the ways marketing wants to tinker with the site.

If you're running basic image comparison, your tests are going to fail. All. The. Time.

Believe me, I've been there. It sucks.

It's like trying to detect earthquakes with a seismometer... a seismometer that's in a car hurtling down a washed-out dirt road.

You're going to get a lot of useless noise.

To get around this, some libraries offer the option of defining an allowed 'diff percentage'. It's the amount of difference between the two screenshots, expressed as a percentage of the size of the image itself.

If it's below that number, it'll say, "Hey, that's okay, we'll just accept that change because surely it means nothing..."

What type of madness is that?!

We're just going to define an arbitrary and completely subjective number that has no basis in reality and go with it?!

Even the seismologist in the car thinks that's idiotic.
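To put numbers on the diff percentage problem, here's a small sketch. It assumes the percentage is calculated as changed pixels divided by total pixels (a common approach, though individual libraries may differ), and the page size, button size, and 0.5% threshold are all made up for the example.

```typescript
// Percentage of the image's pixels that changed.
function diffPercent(changedPixelCount: number, width: number, height: number): number {
  return (changedPixelCount * 100) / (width * height);
}

// The threshold check: anything at or below the allowed percentage passes.
function passesThreshold(
  changedPixelCount: number,
  width: number,
  height: number,
  allowedPercent: number
): boolean {
  return diffPercent(changedPixelCount, width, height) <= allowedPercent;
}

// A long full-page screenshot (hypothetical dimensions).
const pageWidth = 1280;
const pageHeight = 4000;
const allowedPercent = 0.5; // picked out of thin air, which is the point

// A 120x40 submit button disappears entirely: 4,800 changed pixels.
const missingButtonPixels = 120 * 40;
// Font rendering shifts slightly across the page: say 1% of all pixels.
const fontNoisePixels = (pageWidth * pageHeight) / 100;

console.log(diffPercent(missingButtonPixels, pageWidth, pageHeight)); // 0.09375
// A real bug slips through because it is small relative to the page.
console.log(passesThreshold(missingButtonPixels, pageWidth, pageHeight, allowedPercent)); // true
// Harmless rendering noise fails because it is spread over a large area.
console.log(passesThreshold(fontNoisePixels, pageWidth, pageHeight, allowedPercent)); // false
```

The missing button sails through and the font noise fails the build. The threshold measures how much changed, not whether the change matters.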

How to learn it some good

All this may seem pretty harsh, and you may be thinking that it's all a lost cause, but I have some decent news.

Just as scientists are smart enough to cancel out the noise from their measuring devices, we can improve our signal-to-noise ratio with a few tricks.

Take smaller screenshots

The first and simplest method I recommend is to take smaller screenshots. That is, only take screenshots of individual components, and never of the entire webpage.

The tool you use needs to support snapping individual sections of the page (this is usually done by passing in a CSS selector for a specific element).

By reducing the size of your images, you're able to quickly identify what failed ('oh, the screenshot of the submit button').

You also get to block out sections of the website constantly in motion (for instance, that third-party 'recommended items' widget).
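Here's a toy illustration of both ideas: cropping to a single component and blocking out a noisy region. In a real tool you'd hand over a CSS selector and let it work out the rectangle for you; this sketch assumes the rectangles are already known and works on raw RGBA bytes. All the names (`Bitmap`, `cropToRect`, `maskRect`, and so on) are hypothetical.

```typescript
interface Rect { x: number; y: number; width: number; height: number; }
interface Bitmap { width: number; height: number; pixels: Uint8Array; } // RGBA bytes

// Copy one rectangular region out of a larger bitmap.
function cropToRect(source: Bitmap, rect: Rect): Bitmap {
  const out = new Uint8Array(rect.width * rect.height * 4);
  for (let row = 0; row < rect.height; row++) {
    const start = ((rect.y + row) * source.width + rect.x) * 4;
    out.set(source.pixels.subarray(start, start + rect.width * 4), row * rect.width * 4);
  }
  return { width: rect.width, height: rect.height, pixels: out };
}

// Paint a region opaque black so whatever it contains can't cause a diff.
function maskRect(source: Bitmap, rect: Rect): Bitmap {
  const pixels = source.pixels.slice(); // leave the original untouched
  for (let row = 0; row < rect.height; row++) {
    const start = ((rect.y + row) * source.width + rect.x) * 4;
    for (let i = start; i < start + rect.width * 4; i += 4) {
      pixels[i] = 0;
      pixels[i + 1] = 0;
      pixels[i + 2] = 0;
      pixels[i + 3] = 255;
    }
  }
  return { width: source.width, height: source.height, pixels };
}

function bitmapsEqual(a: Bitmap, b: Bitmap): boolean {
  if (a.width !== b.width || a.height !== b.height) return false;
  return a.pixels.every((byte, i) => byte === b.pixels[i]);
}

// An all-white 8x8 "page" for the example.
function blankPage(): Bitmap {
  return { width: 8, height: 8, pixels: new Uint8Array(8 * 8 * 4).fill(255) };
}

const submitButton: Rect = { x: 0, y: 0, width: 4, height: 2 };
const recommendedWidget: Rect = { x: 4, y: 4, width: 4, height: 4 };

const pageBefore = blankPage();
const pageAfter = blankPage();
// Simulate the third-party widget changing: alter the pixel at (5, 5).
pageAfter.pixels[(5 * 8 + 5) * 4] = 0;

console.log(bitmapsEqual(pageBefore, pageAfter)); // false: full page fails
console.log(
  bitmapsEqual(cropToRect(pageBefore, submitButton), cropToRect(pageAfter, submitButton))
); // true: the button itself is unchanged
console.log(
  bitmapsEqual(maskRect(pageBefore, recommendedWidget), maskRect(pageAfter, recommendedWidget))
); // true: the widget is blocked out
```

The full-page comparison fails because of the widget, while the cropped button and the masked page both pass.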

Test Static Pages

Generated style guides are incredibly useful for visual testing. They remove much of the dynamic nature of sites and replace them with predictable and reliable pages.

Tools like Storybook have made a ton of progress in getting style guides/pattern libraries set up. Sure, you're not testing the actual site, but it's certainly helping the cause.

Testing your components in isolation and succeeding is much better than trying to test everything all at once and constantly failing.

Use better algorithms

This is the "you get what you pay for" section. Open-source tools are neat, but I haven't found one that offers what paid tools can provide (I'm always open to suggestions though).

There's a myriad of paid tools out there, but I'm going to focus on the two that I have the most experience with here.

Percy

The team at Percy has continuously improved the product over the past three years.

They've added intelligent features like "screenshot stabilization", which helps remove false positives due to font rendering and animation.

They've also built out a slick interface for managing screenshots through the software development lifecycle.

A friendly interface for managing screenshots is not something I've mentioned before, but it's an absolute necessity. It's hard to explain without getting into too much detail, but a good interface streamlines the complexities of this type of testing.

Simple demos can't account for projects that have multiple test environments and multiple code branches going at the same time.

When you try it in a real environment, you quickly realize that the basic tools aren't functional enough to be manageable long-term (or usable by a wide range of folks).

And that's where paid tools really pay off and why I recommend you check them out.

Applitools

One alternative to Percy is Applitools, which bills itself as "AI-powered visual testing and monitoring". The AI part comes into play in the way they manage their image comparison.

Instead of blindly (heh) comparing the before and after screenshots, their code intelligently understands different types of changes.

Have a component that has different text, but the same layout? You can define that as a 'Layout match level', which allows for different text as long as the general geometry of the area is the same.

That's super helpful if you don't have complete control over the text shown on the site.
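To get a feel for what comparing geometry instead of pixels means, here's a toy sketch. To be clear, this is not how Applitools implements its Layout match level (that's their own technology) and it doesn't use their SDK. It's a made-up illustration that assumes each element has already been measured into a box, and every name in it is hypothetical.

```typescript
// Hypothetical shape: one measured element on the page.
interface ElementBox {
  name: string;
  x: number;
  y: number;
  width: number;
  height: number;
  text: string;
}

// True when two numbers are within `tolerancePx` of each other.
function closeEnough(a: number, b: number, tolerancePx: number): boolean {
  return Math.abs(a - b) <= tolerancePx;
}

// Compare geometry only. The `text` field is deliberately ignored.
function sameLayout(
  baseline: ElementBox[],
  current: ElementBox[],
  tolerancePx: number
): boolean {
  if (baseline.length !== current.length) return false;
  return baseline.every((expected, i) => {
    const actual = current[i];
    if (!actual || actual.name !== expected.name) return false;
    return (
      closeEnough(actual.x, expected.x, tolerancePx) &&
      closeEnough(actual.y, expected.y, tolerancePx) &&
      closeEnough(actual.width, expected.width, tolerancePx) &&
      closeEnough(actual.height, expected.height, tolerancePx)
    );
  });
}

const baselineBoxes: ElementBox[] = [
  { name: "headline", x: 0, y: 0, width: 600, height: 48, text: "Summer sale" },
  { name: "cta", x: 0, y: 64, width: 160, height: 40, text: "Shop now" },
];

// Marketing changed the copy, but everything sits in the same place.
const newCopyBoxes: ElementBox[] = [
  { name: "headline", x: 0, y: 0, width: 600, height: 48, text: "Winter sale starts today" },
  { name: "cta", x: 0, y: 64, width: 160, height: 40, text: "Browse deals" },
];

// The headline wrapped onto a second line and pushed the button down.
const wrappedBoxes: ElementBox[] = [
  { name: "headline", x: 0, y: 0, width: 600, height: 96, text: "Winter sale starts today" },
  { name: "cta", x: 0, y: 112, width: 160, height: 40, text: "Browse deals" },
];

console.log(sameLayout(baselineBoxes, newCopyBoxes, 2)); // true
console.log(sameLayout(baselineBoxes, wrappedBoxes, 2)); // false
```

New text in the same boxes passes. Text that breaks the layout fails. A pixel-by-pixel comparison would have failed both.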

And like Percy, they also provide a hosted dashboard of test results for you, which again, is essential in any solution.

Along with that, they've also done a lot of work to integrate with tools out there like Storybook and test frameworks.

They've put a ton of work into their product so you don't have to. Why not take advantage of that?

Not so stupid after all

I stated at the beginning that I think Visual Regression Testing is stupid. I still believe that.

But it doesn't have to be. I write this as a reality check, rather than a condemnation.

Just because I believe it's "stupid" doesn't mean it can't be smart.

Just as web frameworks and programming languages have improved tremendously over time, visual regression tooling is doing the same.

Please do add visual regression testing to your test suites. It's incredibly powerful.

But be aware that the basic functionality won't be enough for professional use.

You'll need to add some intelligence to your process.

Give those quick-start tutorials a try, but also put in the extra work to make the testing effective.

Don't give up when the first attempts are a bit too bumpy for your needs.

Find ways to smooth out the process by simplifying your scenarios. Look into paid solutions that offer functionality not available yet in the open-source world.

And understand that while it can make testing appear easier, website testing is, and always will be, a complex process that requires a little brainpower.

Disclaimer: I have done paid work for Applitools in the past, but they are in no way compensating me for writing this.

