I’ve been using Honeycomb.io for a small project and I thought I would take some time to share my thoughts. Overall I think Honeycomb is a good product. It is expensive, but it would be hard to replicate for cheaper until you are a huge company (and then you probably get good discounts).

I’m not going to focus too much on all of the specific things that you can do with Honeycomb; check out their marketing material for that. Instead I will focus on my overall impressions of their offerings as well as specific complaints that significantly affect my usage of the product.

Disclaimer: I am currently using the free plan. Honeycomb has a generous forever-free plan with just a few features blocked off. I used a paid Honeycomb account at a past workplace and we are considering using Honeycomb at my current workplace, but I don’t currently have a paid account to test if any of my complaints are free-only problems. I don’t think they are, but I can’t be sure.

What is Honeycomb?

Honeycomb is an application visibility tool. Its goal is to let you understand what is happening in your running application. It does this via “event-based observability”. Basically structured log messages. You post Honeycomb a bunch of events and it allows you to use that data to derive information.

For example if for every HTTP request my application receives I post an object like this to Honeycomb:

    {
      "duration_ms": 1.23,
      "http.method": "GET",
      "http.request.header.origin": "https://example.com",
      "http.request.header.referer": "https://example/subscriptions",
      "http.status_code": 200,
      "http.target": "/subscriptions/:id",
      "http.user_agent": "Mozilla/5.0 (X11; Linux x86_64; rv:101.0) Gecko/20100101 Firefox/101.0",
      "message_id": "teeZei5o",
      "timestamp": "2022-06-20T11:45:29.191127734Z"
    }

Then I can produce nice graphs of the most popular endpoints on my site.

But this is just the basics. The main benefit of Honeycomb is that the data isn’t pre-aggregated. So we can dig into the raw information as well as decide what aggregations are helpful on-the-fly. We can see any specific request and dig into it, which is invaluable for debugging low-frequency errors or trying to understand the impact caused by a specific problem.
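To make the "no pre-aggregation" point concrete, here is a minimal Python sketch of the idea, not Honeycomb's actual implementation. The event list and the helper names `count_by` and `slowest` are made up for illustration; the field names are borrowed from the example object above.

```python
from collections import Counter

# Hypothetical raw events, shaped like the example object above.
events = [
    {"http.target": "/subscriptions/:id", "http.status_code": 200, "duration_ms": 1.23},
    {"http.target": "/subscriptions/:id", "http.status_code": 500, "duration_ms": 40.0},
    {"http.target": "/login", "http.status_code": 200, "duration_ms": 3.5},
]


def count_by(events, field):
    """Group raw events by any field, chosen at query time, and count them."""
    return Counter(e.get(field) for e in events)


def slowest(events, n=1):
    """Nothing was aggregated away, so individual events are still reachable."""
    return sorted(events, key=lambda e: e["duration_ms"], reverse=True)[:n]


popular = count_by(events, "http.target")        # most popular endpoints
by_status = count_by(events, "http.status_code")  # a different grouping, same data
```

The same raw events answer both questions, and `slowest(events)` still returns the full event behind an outlier, which is what a pre-aggregated counter could not do.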

Honeycomb also provides basic dashboarding where you can put charts and tables onto a page. You get to choose between a one or two column layout and that is about it. There is also simple sharing support. While very basic, the dashboards serve their purpose well as a way to collect and organize your favourite visualizations.

Intro to Tracing

Simply getting individual events is nice, but it can be hard to understand exactly what occurred. The step from logs to tracing is small, but critical. We just add a few attributes to our events (which are now called spans).

- trace.trace_id: This is the “trace” that this event is a part of. A trace typically corresponds to a logical event such as a web request or a periodic job starting. It is used to collect related spans together.

- trace.span_id: This is the ID of the specific span. It is used for identifying spans within a trace.

- trace.parent_id: This is the ID of the span that directly caused this one.

With this information you can then build up a tree. (You don’t really need trace.trace_id but it is helpful for performance, ID entropy and resilience to lost spans.)
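As a rough illustration of how those three attributes are enough to rebuild the tree, here is a small Python sketch. The `name` field, the example spans and the helper names are assumptions for the example, not anything Honeycomb prescribes.

```python
from collections import defaultdict

# Hypothetical spans from a single trace; "name" is an assumed field.
spans = [
    {"trace.trace_id": "t1", "trace.span_id": "a", "trace.parent_id": None, "name": "http_request"},
    {"trace.trace_id": "t1", "trace.span_id": "b", "trace.parent_id": "a", "name": "feeds_find"},
    {"trace.trace_id": "t1", "trace.span_id": "c", "trace.parent_id": "b", "name": "parse_entry"},
    {"trace.trace_id": "t1", "trace.span_id": "d", "trace.parent_id": "b", "name": "parse_entry"},
]


def build_trace_tree(spans):
    """Return (roots, children) where children maps a span ID to its child spans."""
    known_ids = {s["trace.span_id"] for s in spans}
    children = defaultdict(list)
    roots = []
    for span in spans:
        parent = span.get("trace.parent_id")
        # A span whose parent is missing (for example a lost span) becomes a root.
        if parent is None or parent not in known_ids:
            roots.append(span)
        else:
            children[parent].append(span)
    return roots, children


def render(span, children, depth=0):
    """Render a span and its descendants as indented lines."""
    lines = ["  " * depth + span["name"]]
    for child in children[span["trace.span_id"]]:
        lines.extend(render(child, children, depth + 1))
    return lines


roots, children = build_trace_tree(spans)
print("\n".join(render(roots[0], children)))
```

Treating spans with an unknown parent as roots is one way to stay resilient to lost spans; a real trace viewer may handle that case differently.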

Now we have a pretty powerful tool. We can identify problems, such as errors or slow requests, and dig into a trace of what caused them. Depending on the language and library that you are using this may even be easier than regular logging.

Tracing and Honeycomb

So tracing is the primary source of ingest into Honeycomb and it has a trace viewer, so Honeycomb is a tracing tool?

Well, no. It was surprising to me as well. But there are basically two groups of tools that Honeycomb provides.

- Charting, querying, alerting, SLOs and more based on spans.

- A trace viewer.

While these tools are closely integrated (when viewing spans there is a link to the trace viewer and the trace viewer has options to add filters to your current chart or query) they are also annoyingly distinct.

For example if I want to know the average number of parse_entry calls per feeds_find call I am out of luck. I can query for parse_entry spans and take the average, but I can’t select only the calls that occurred as a child of a feeds_find call. This is because the query engine fundamentally isn’t trace aware. It only works within a particular span, it has no access to data from the rest of the trace.

This isn’t a huge problem, as you can make a huge number of valuable dashboards and queries using the span-based queries. You also learn to “duplicate” important metrics down the trace. For example, I often duplicate the Feed ID down the call stack as far as reasonable so that I can answer questions about particular feeds and see if any are unusually expensive, flaky or something else that may cause concern. But oftentimes more complex questions can’t be answered with this limitation in place.

I would love to see true tracing-aware queries. Although I can imagine how this can become slow and expensive if not implemented carefully.
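For the sake of illustration, this is roughly what the parse_entry per feeds_find question looks like when computed offline over raw spans in Python. It is only a sketch of the kind of trace-aware query I mean: the `name` field, the `span` helper and the sample data are assumptions, and it says nothing about how Honeycomb could implement this efficiently.

```python
def span(trace_id, span_id, parent_id, name):
    """Build a hypothetical span dict; "name" is an assumed field."""
    return {"trace.trace_id": trace_id, "trace.span_id": span_id,
            "trace.parent_id": parent_id, "name": name}


def avg_descendants_per_call(spans, parent_name, child_name):
    """Average number of child_name spans under each parent_name span."""
    by_key = {(s["trace.trace_id"], s["trace.span_id"]): s for s in spans}
    counts = {key: 0 for key, s in by_key.items() if s["name"] == parent_name}
    for s in spans:
        if s["name"] != child_name:
            continue
        # Walk up the parent chain to the nearest enclosing parent_name span.
        seen = set()
        key = (s["trace.trace_id"], s["trace.parent_id"])
        while key in by_key and key not in seen:
            seen.add(key)
            ancestor = by_key[key]
            if ancestor["name"] == parent_name:
                counts[key] += 1
                break
            key = (ancestor["trace.trace_id"], ancestor["trace.parent_id"])
    return sum(counts.values()) / len(counts) if counts else 0.0


spans = [
    span("t1", "r1", None, "http_request"),
    span("t1", "f1", "r1", "feeds_find"),
    span("t1", "p1", "f1", "parse_entry"),
    span("t1", "p2", "f1", "parse_entry"),
    span("t2", "r2", None, "http_request"),
    span("t2", "f2", "r2", "feeds_find"),
    span("t2", "x1", "f2", "fetch"),
    span("t2", "p3", "x1", "parse_entry"),   # indirect descendant of feeds_find
    span("t2", "p4", "r2", "parse_entry"),   # not under any feeds_find call
]

average = avg_descendants_per_call(spans, "feeds_find", "parse_entry")
```

Note that every parse_entry span needs access to its ancestors, which is exactly the cross-span access that a per-span query engine does not have.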

Query Building

The Honeycomb query builder is fairly simple. It basically allows you to generate something in the form of:

    SELECT FROM dataset
    WHERE "filters"
    GROUP BY "groups"
    HAVING "having"
    ORDER BY "order"

Where you have control over the things in quotes.

However, the primary limitation is that each of these is restricted to simple operations on columns.

For example the WHERE clause is controlled by a global AND vs OR mode. This makes it impossible to do a query like WHERE message_id = "abcdef" AND (http.target LIKE '/login/%' OR http.target LIKE '/user/%'). Similar restrictions appear on other clauses: simple queries such as GROUP BY http.url LIKE 'https:%' cannot be entered.

The workaround for this is creating a derived column. Derived columns are global across the dataset. This can be very helpful for cases where having a common definition is useful, such as my http.user_agent_looks_human column. It can be annoying for less reusable logic, where the derived column is many clicks away to edit and adds noise to other dashboards and the span viewer (as every derived column is visible in the list of fields for every span).

Interestingly it looks like the query engine has full support for on-the-fly logic here as some of Honeycomb’s pre-made dashboards use more complicated logic.

While you can edit this query in some ways you can’t change that “pill” in any way other than removing it. The editor forces you to select an auto-complete option and an expression like that will never appear in the suggestions.

Annoyingly this shows a transformation that I commonly want to do! Convert a bool value (or expression) into a 0/1 integer so that I can get an average.

This also reinforces the impression that these derived columns are computed on-the-fly, so it is likely just a frontend limitation.
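For readers unfamiliar with the bool-to-integer trick mentioned above, here is a minimal Python sketch of why it is useful: averaging a 0/1 column gives the fraction of events for which the expression is true. The events and the `fraction_true` helper are made up for illustration and are not Honeycomb syntax.

```python
def fraction_true(events, predicate):
    """Average a boolean expression over events after mapping True/False to 1/0."""
    values = [int(bool(predicate(e))) for e in events]
    return sum(values) / len(values) if values else 0.0


# Hypothetical events; only the status code matters here.
events = [
    {"http.status_code": 200},
    {"http.status_code": 200},
    {"http.status_code": 503},
    {"http.status_code": 404},
]

# Fraction of requests that returned a 5xx response.
error_rate = fraction_true(events, lambda e: e["http.status_code"] >= 500)
```

With this in hand an "error rate" is just an average, so it can be grouped and charted like any other numeric column.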

Visualizations

Honeycomb has some basic visualizations and I have found that they get the job done for most scenarios. The charts are interactive and easy to explore, including clicking to view a nearby trace which is very convenient for investigating outliers. I also like that page titles are set and it is easy to bookmark and share any URL.

But I still have a few complaints that significantly impact my experience.

Units

Honeycomb doesn’t deal with units at all. This makes many things difficult to quickly understand. For example viewing a field with values in milliseconds will show you 100k, which is of course 100 kilo-milli-seconds, AKA 100 seconds. This is also annoying for cases like measuring bytes where you would prefer to use binary prefixes instead of SI.

In addition to the general lack of units they also don’t scale their charts in the most reasonable ways. For example the hover-tooltip for a graph of a rare event may tell you that it happens 0.002 times per second. It would be much more useful to say that it occurs 7.2 times per hour.
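The kind of unit-aware formatting I have in mind is not complicated. Here is a small Python sketch; the function names and the rule of "pick the smallest unit where the value is at least 1" are my own assumptions about what would read well, not anything Honeycomb offers.

```python
RATE_UNITS = [("second", 1), ("minute", 60), ("hour", 3600), ("day", 86400)]


def humanize_rate(per_second):
    """Express a rate in the smallest time unit where it is at least 1."""
    for name, seconds in RATE_UNITS:
        value = per_second * seconds
        if value >= 1:
            return f"{value:.3g} per {name}"
    # Rarer than once per day: fall back to the largest unit.
    name, seconds = RATE_UNITS[-1]
    return f"{per_second * seconds:.3g} per {name}"


def humanize_ms(ms):
    """Show large millisecond values in seconds instead of "kilo-milliseconds"."""
    if ms >= 1000:
        return f"{ms / 1000:.3g} s"
    return f"{ms:.3g} ms"


print(humanize_rate(0.002))   # 7.2 per hour
print(humanize_ms(100_000))   # 100 s
```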

I also find it quite annoying that the left axis is scaled based on the “granularity” (the duration of each time-slice). Especially since this is often automatically chosen by the frontend, it means that you need to look both at the left axis and the granularity setting to understand how often something is occurring. If you are viewing multiple aggregations (which each get their own graph) the granularity indicator can also be far away (or even offscreen) so the scale on the left becomes basically meaningless until you scroll around.

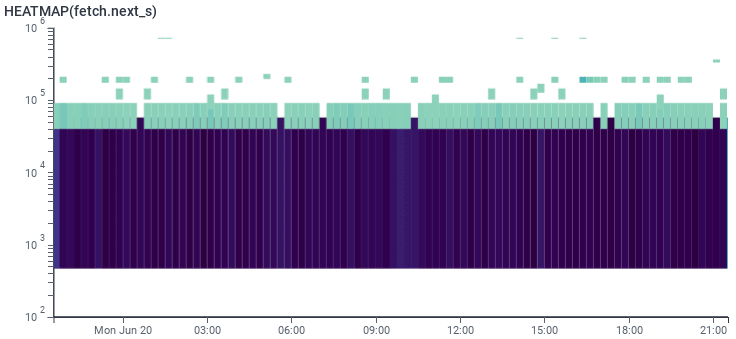

Logarithmic Scale

Lots of data comes in an exponential distribution: latencies, request sizes and others. A logarithmic scale is great for getting an idea of patterns in different sections of this distribution. It provides finer detail on the small end and more range on the big end.

Almost always I want to use a log scale with Honeycomb’s distribution visualization. Unfortunately its log scale mode is completely useless. It appears that the buckets are not set up logarithmically, the UI just scales the bucket so that half of the chart is just one giant bucket for all of the small values, instead of the extra detail that you hoped to see.

One workaround is viewing different percentiles on different graphs, which gives you back some detail but doesn’t give the intuitive view that the heatmap visualization does. The other workaround, which I find myself doing frequently, is manually “zooming in” by filtering for ranges of values. This way I can focus on a small range for small values then manually get larger ranges for the high end. I’m basically manually making exponential buckets at this point.
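For comparison, this is what I mean by buckets that are actually set up logarithmically, as a minimal Python sketch. The function names and the `buckets_per_decade` parameter are assumptions for illustration; I have no knowledge of how Honeycomb computes its buckets.

```python
import math


def log_bucket_edges(lo, hi, buckets_per_decade=2):
    """Bucket edges spaced evenly in log10 space, covering at least [lo, hi]."""
    if lo <= 0 or hi <= lo:
        raise ValueError("need 0 < lo < hi")
    # Round before ceil so float noise does not add a spurious bucket.
    n = math.ceil(round(math.log10(hi / lo) * buckets_per_decade, 9))
    return [lo * 10 ** (i / buckets_per_decade) for i in range(n + 1)]


def histogram(values, edges):
    """Count values falling into each half-open bucket [edges[i], edges[i+1])."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            if edges[i] <= v < edges[i + 1]:
                counts[i] += 1
                break
    return counts


# Hypothetical latencies in milliseconds.
latencies_ms = [1.5, 2, 8, 50, 400]
edges = log_bucket_edges(1, 1000, buckets_per_decade=1)  # roughly 1, 10, 100, 1000
counts = histogram(latencies_ms, edges)
```

Each bucket covers the same ratio rather than the same width, so the small values get their own buckets instead of being lumped into one.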

Alerting

Honeycomb also supports a basic alerting system. Basically you can create an expression that is evaluated periodically. If the expression crosses a target you get an email. For small projects this is likely sufficient for most of your alerts. The main limitation is the 2 alerts on the free plan, but the paid plan gives you 100 alerts which should keep you well covered.

Cost

While Honeycomb has a generous free plan, their paid plan is a big step up to $120/month. If your company has employees this will likely be small compared to the cost of labour; however, for a small project that is a big step. For my project we want to stay profitable, so we are unlikely to upgrade soon as this would quickly put us in the red.

https://www.honeycomb.io/pricing/

Despite being too expensive for my current project I am very happy with their pricing model.

Quota

The most important thing is that Honeycomb implements a fixed-cost model. You purchase a plan upfront and pay that amount at the end of the month. This does mean that you may be paying for capacity that you didn’t use, but more importantly it means that you won’t be hit with a surprise bill after a DoS attack or just a bug in your code that results in excessive events being logged.

They also have a simple usage dashboard that is clear and concise. It highlights your daily “target” as well as the current billing period and a bit of history.

If you approach your usage limit Honeycomb will automatically start sampling events (discussed further below) and only start dropping events in extreme cases. This means that even if you are approaching the limit you will still have most of your visibility. This is unlike many SaaS tools where hard budget caps leave you completely in the dark until the next billing cycle starts. So while you won’t have every trace available you will still be able to understand what is going on.

Sampling

Log-based visibility is fundamentally more expensive than metrics-based because you retain a lot of information rather than just a small delta per update. However, sampling can keep the costs under control by sacrificing only a small amount of visibility.

The best part of sampling with Honeycomb is that Honeycomb is sampling native. This means that each event knows its sample rate and the query and visualization tools correct for this.

For example, we have a health-check endpoint that is used to verify that each instance of our application is running correctly. This endpoint is hit a lot. We have Kubernetes configured to check each instance every second. There are also external services periodically hitting this endpoint from various locations on the public internet. If we traced these health checks just like regular HTTP requests we would be burning most of our quota on these uninteresting requests. We could exclude these, but then we would have no visibility at all. How long do these health checks take? How often do they fail? It is nice to have some insight. So we simply sample these 1/256th of the time. This way we can still see the volume, latency and sources (for example Kubernetes vs checks from the public internet) even if we can’t investigate the trace of every health check. Most importantly, when we query for our most popular HTTP requests the health checks are scaled properly, so we are not misled.

For example I can still see valuable metrics for my health checks like response time distribution and total rate. I can also easily view the traces of some slow health-checks to see why they took so long (assuming that your sampler is trace-aware).
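The correction itself is simple: an event kept at a rate of 1 in N stands in for N original events. Here is a minimal Python sketch of that idea. The `sample_rate` field name, the `/healthz` path and the helper functions are assumptions for illustration and are not Honeycomb's actual schema or query engine.

```python
from collections import defaultdict


def estimated_count(events):
    """Each kept event represents sample_rate original events (default 1)."""
    return sum(e.get("sample_rate", 1) for e in events)


def estimated_counts_by(events, field):
    """Sample-rate-corrected counts grouped by a field."""
    totals = defaultdict(int)
    for e in events:
        totals[e.get(field)] += e.get("sample_rate", 1)
    return dict(totals)


# Hypothetical stored events: two sampled health checks, 100 unsampled requests.
events = (
    [{"http.target": "/healthz", "sample_rate": 256}] * 2
    + [{"http.target": "/subscriptions/:id", "sample_rate": 1}] * 100
)

by_target = estimated_counts_by(events, "http.target")
```

Only 2 health check events are stored, but the corrected count is 512, so they still rank above the 100 regular requests in a "most popular endpoints" query.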

In theory you can sample based on just about anything. For example sample 10% of HTTP requests but 100% if they return a 5xx response. Of course, you need to be careful to set the reported sample rate correctly, otherwise the “correction” will actually lead to incorrect results. This can be easy to mess up for dynamic sample rates, but a good library will handle this for you so that you get it correct every time.
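As a sketch of the "10% of requests but 100% of 5xx" example, here is what a simple per-event sampler could look like in Python. The function names and the `sample_rate` field are hypothetical, and this sampler decides per event rather than per trace, so it is not trace-aware.

```python
import random


def sample_rate_for(status_code):
    """Keep every 5xx response (rate 1) and 1 in 10 of everything else."""
    return 1 if status_code >= 500 else 10


def maybe_sample(event, rng=random):
    """Return the event tagged with its sample rate, or None if it is dropped."""
    rate = sample_rate_for(event["http.status_code"])
    # Keep with probability 1/rate. The reported rate must match that
    # probability, otherwise the later correction gives wrong results.
    if rng.random() < 1 / rate:
        return {**event, "sample_rate": rate}
    return None


rng = random.Random(0)  # seeded so the example is repeatable
kept = [e for e in (maybe_sample({"http.status_code": 200}, rng) for _ in range(10_000)) if e]
estimated_total = sum(e["sample_rate"] for e in kept)  # close to 10,000
```

The important detail is that the rate used for the keep decision and the rate recorded on the event come from the same place, which is the part that is easy to get wrong with dynamic rates.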

With some basic tuning it is easy to use sampling to collect most of the data you need while keeping costs low. This is an excellent tool to make sure that you are getting good value from Honeycomb.

Ingest

Honeycomb supports the standard OpenTelemetry Collection API. OpenTelemetry is widely supported and you just need to point it at api.honeycomb.io with some custom headers for your API key. This keeps the lock-in low, which is fantastic.
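As a rough sketch, most OpenTelemetry SDKs can be pointed at Honeycomb through the standard OTLP exporter environment variables. The Python helper below only builds those variables. `honeycomb_otlp_env` and `HONEYCOMB_API_KEY` are names I made up for the example, and you should check Honeycomb's documentation for the exact endpoint and headers that apply to your account and region.

```python
import os


def honeycomb_otlp_env(api_key):
    """Standard OTLP exporter settings pointing at Honeycomb (a sketch)."""
    return {
        # Base OTLP endpoint; the exporter appends signal paths such as /v1/traces.
        "OTEL_EXPORTER_OTLP_ENDPOINT": "https://api.honeycomb.io",
        # The API key travels in a custom header.
        "OTEL_EXPORTER_OTLP_HEADERS": f"x-honeycomb-team={api_key}",
    }


if __name__ == "__main__":
    # HONEYCOMB_API_KEY is a hypothetical variable name for this example.
    key = os.environ.get("HONEYCOMB_API_KEY", "YOUR_API_KEY")
    os.environ.update(honeycomb_otlp_env(key))
```

Because these are vendor-neutral settings, switching to a different OpenTelemetry backend should mostly mean changing the endpoint and headers, which is where the low lock-in comes from.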

Support

While I am only using the free plan and have access to the lowest-priority support, I have still been quite happy with what I have received. While the responses may take a while to come in, the agents were knowledgeable and friendly. Not perfect, but they listened to what I said and gave thoughtful responses. I also have experience with a dedicated representative at a past company and they were excellent as well. I have definitely gotten the impression that the support team is a valued part of Honeycomb.

My only minor complaint with support is that while it said I would get replies in my email I never did. I had to remember to check back in the support widget on their website.
