I frequently see posts and hear conversations about MongoDB performance issues. They are a hot topic on sites like Quora, Hacker News, and Reddit. Many of these "hits" against MongoDB are based on outdated data and older versions of MongoDB.

There was a movie from the late 1980s called Crocodile Dundee II.

Similarly, many complaints about older versions of MongoDB still linger. Someone who had a bad experience with an old version will answer a thread somewhere and claim "I used it once, didn't like it, it's garbage." Much like Mick Dundee, they are basing their entire opinion on outdated knowledge.

Let's take a look at some performance issues that are often raised and where things sit now with the latest version of MongoDB, 3.4.6. I raised some of these aspects in a previous post, but let's take a deeper dive.

The Jepsen Test & Performance Issues of Old

From a "documented issue" standpoint, many of the performance issues that plague MongoDB in social reviews are covered in a Jepsen test result post from 20 April 2015, or in an even older article from 18 May 2013 that was based on version 2.4.3. Clearly, there were some issues with data scalability and data concurrency in those earlier versions.

In fact, Jepsen has tested MongoDB extensively for lost updates and for dirty and stale reads. Without getting too deep into the hows and whys of what was happening to the data, there were issues with writes when a primary went down, and with read and write consistency. These issues have been addressed as of version 3.4.1.

Product Enhancements

With the new data enhancements, MongoDB version 3.4.1 passed all of the Jepsen tests. Kyle Kingsbury, the creator of Jepsen, offered the following conclusions:

MongoDB has devoted significant resources to improved safety in the past two years, and much of that ground-work is paying off in 3.2 and 3.4.

MongoDB 3.4.1 (and the current development release, 3.5.1) currently pass all MongoDB Jepsen tests… These results hold during general network partitions, and the isolated & clock-skewed primary scenario.

You can read more about his conclusions in his published results.
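To make those results a little more concrete: as I understand the Jepsen write-up, the guarantees apply to majority-acknowledged writes, and clients have to ask for that behavior. Here is a minimal sketch, not an official recipe, of a connection string that requests majority write concern and majority read concern. The host names, the replica set name `rs0`, and the helper `build_mongo_uri` are made up for illustration; `replicaSet`, `w`, `readConcernLevel`, and `journal` are standard connection string options.

```python
from urllib.parse import urlencode


def build_mongo_uri(hosts, replica_set, database="test"):
    """Build a MongoDB connection string that requests majority
    write concern and majority read concern.

    hosts and replica_set are placeholders supplied by the caller.
    """
    options = {
        "replicaSet": replica_set,
        "w": "majority",                 # wait for a majority of members to acknowledge writes
        "readConcernLevel": "majority",  # only read data acknowledged by a majority
        "journal": "true",               # wait for the write to reach the on-disk journal
    }
    return "mongodb://{}/{}?{}".format(",".join(hosts), database, urlencode(options))


# Hypothetical three-node replica set.
uri = build_mongo_uri(
    ["db1.example.com:27017", "db2.example.com:27017", "db3.example.com:27017"],
    "rs0",
)
print(uri)
```

A string like this can be handed to any driver that accepts a standard MongoDB URI. Stricter settings cost some latency, so whether they are worth it depends on the workload.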

Beyond data safety, customers are finding huge performance benefits in the more current releases of MongoDB. Improvements to, or the introduction of, technologies such as replication compression, the WiredTiger storage engine, in-memory cache, and performance enhancements to sharding and replica sets have been a win for users.

WiredTiger Case Study

A friend who works at Wanderu.com, a MongoDB user, was very generous and forthcoming with some information about their MongoDB experience. When choosing a database option, they felt that NoSQL, and MongoDB specifically, fit their business and data model better than a relational model would. They process a very diverse set of data for their bus and train travel application.

They take information from a vast assortment of bus and train vendors, which arrives in XML, JSON, PDF, CSV, and other formats. Data is then ingested and transformed so that everything works with price checking and booking calls in vendor-specific formats. The data model was determined to be too complex and fragile to implement in a relational database.

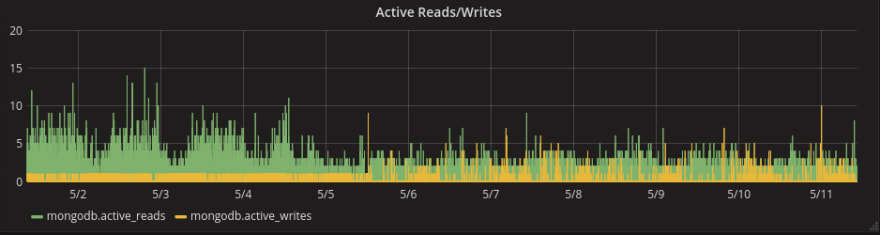

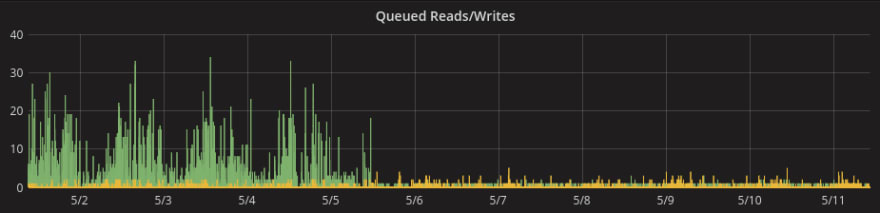

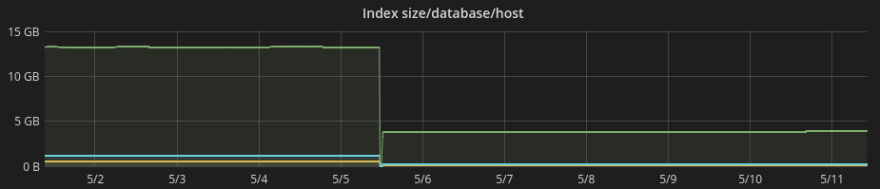

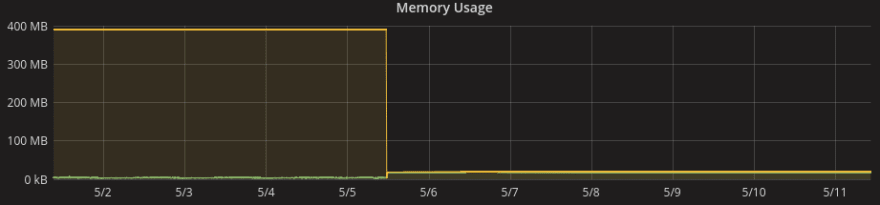

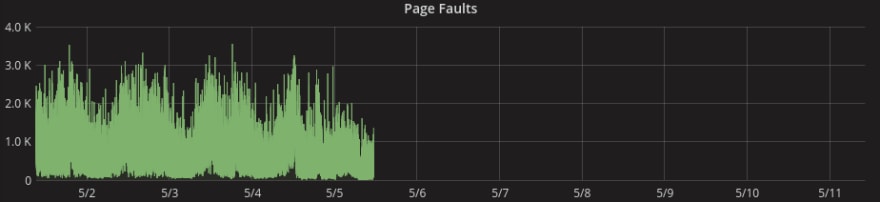

In May 2017, Wanderu migrated to the WiredTiger storage engine in MongoDB 3.4. They took screenshots of some of their performance graphs covering a 10-day period, five days before and five days after their migration on 5/5. They were kind enough to share these images with me and approved their use in this article.

Before WiredTiger, the write load had a very limited max. After migration, writes spiked as necessary.

Writes stayed fairly constant while queued reads fell dramatically.

Index size decreased dramatically as well.

Not surprisingly, memory usage dropped too.

Page fault improvements.

If there was any doubt, replication lag improved as well.

In the four years since Wanderu launched, it has relied heavily on MongoDB to store the station and trip information for each local, regional, and national carrier. With the new $graphLookup capability in MongoDB version 3.4, they are looking at the possibility of using it for their graph needs as well.
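For readers who have not seen $graphLookup yet, here is a rough sketch of what an aggregation pipeline using it might look like for station connections. This is not Wanderu's schema or code. The `routes` collection, the `station` and `connects_to` fields, and the helper name are all hypothetical; only the `$match` and `$graphLookup` stage names and their options come from MongoDB itself.

```python
def build_reachable_stations_pipeline(origin, max_depth=1):
    """Return an aggregation pipeline that starts at one station and
    recursively follows connections to other stations.

    Assumes hypothetical documents shaped like:
        {"station": "BOS", "connects_to": ["NYC", "PVD"]}
    """
    return [
        # Start from the document for the origin station.
        {"$match": {"station": origin}},
        {
            "$graphLookup": {
                "from": "routes",                   # collection to search (hypothetical)
                "startWith": "$connects_to",        # first values to look up
                "connectFromField": "connects_to",  # field followed on each recursion
                "connectToField": "station",        # field matched against those values
                "as": "reachable",                  # output array of matched documents
                "maxDepth": max_depth,              # 0 means direct connections only
                "depthField": "hops",               # recursion depth of each match, from 0
            }
        },
    ]


pipeline = build_reachable_stations_pipeline("BOS", max_depth=1)
print(pipeline)
```

With a driver such as PyMongo, a pipeline like this would be passed to the `aggregate` method on the collection holding the station documents.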

Further Industry Thoughts

MongoDB is a widely used NoSQL database. It is used by companies large and small, for a variety of reasons. I reached out to a few other known MongoDB users to get some real world feedback and product experiences.

CARFAX, for example, has been using MongoDB in production since version 1.8. They load over a billion documents a year and generate over 20,000 reports per second. Jai Hirsch, a Senior Systems Architect at CARFAX, wrote a nice write-up about why they decided on MongoDB. They have achieved some tremendous performance benefits from compressed replication.

GHX switched from MMAPv1 to WiredTiger with the 3.2 release of MongoDB. Jeff Sherard, their Database Engineering Manager, had another very positive experience.

Definitely the switch to WiredTiger in 3.2 was a huge boost. Especially on the compression side - we experience about 50% compression. Document level locking vs. Collection level locking also improved performance for us significantly.
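If you want a rough feel for your own compression numbers, one approach is to compare the `size` (uncompressed data size) and `storageSize` (space allocated on disk) fields that the `collStats` command reports for a collection. The sketch below is an assumption-laden estimate, not how GHX measured their 50%: the helper name and the sample numbers are made up, and `storageSize` can include allocated-but-unused space.

```python
def compression_savings(coll_stats):
    """Estimate the fraction of space saved by on-disk compression.

    coll_stats is a dict shaped like collStats output, using its
    "size" and "storageSize" fields (both in bytes).
    """
    data_size = coll_stats["size"]
    on_disk = coll_stats["storageSize"]
    if data_size == 0:
        return 0.0  # empty collection, nothing to compare
    return 1.0 - on_disk / data_size


# Made-up numbers for illustration only.
sample_stats = {"size": 2_000_000, "storageSize": 1_000_000}
print("{:.0%}".format(compression_savings(sample_stats)))
```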

He also saw benefits with sharding and replica sets after an upgrade to 3.4.4.

We recently upgraded to 3.4.4 and are particularly pleased with the improvements in balancing on shards (the parallelism makes balancing really fast). And the initial sync improvements in replicaSets [sic] have been really useful too.

Tinkoff Bank landed on using MongoDB instead of Oracle based on their finding that Oracle's CLOBs are not as fast and are not searchable. They are able to process approximately 1,500 requests per second using their three-node replica set. These queries put a load of 5-10% on the CPU of the primary node.

Wrap Up

I'm sure that the SQL vs. NoSQL debate will live on, much the same as Windows vs. Mac, or cats vs. dogs. I hope, however, that based on the information and testimonies provided here we can lay to rest the notion that MongoDB isn't "enterprise ready." If we are going to argue the virtues of MongoDB, we should at least be talking about the most current version. As in the scene with Mick Dundee, he comes across looking foolish for basing his entire view of a product on something he experienced years ago.

There are several MongoDB-specific terms in this post. I created a MongoDB Dictionary skill for the Amazon Echo line of products. Check it out and you can say "Alexa, ask MongoDB what is a document?" and get a helpful response.

Follow me on Twitter @kenwalger to get the latest updates on my postings.
