Recently, I had to use a third-party API service that takes at least 30 seconds to respond.

Users don't request the information this service provides very often, but they do need it at some point.

I can wait for the response to come back from the third-party service, but are users willing to wait? A user may not want to wait for a reply and may cancel the request before a response is returned.

If the user can't wait for the response, they will never get the information, since every request takes 30 seconds or more to complete.

Every request to the API service costs money. If the user cancels the request before a response comes back, I still pay for it 😑. Some users will retry, which means more money spent on getting nothing.

I had to come up with a way to reduce the number of requests to the third-party API, run the operation in the background in case the user cancels the request, and improve response time.

For this, I wrote a function that calls the API only once per unique info_id. Other requests with the same info_id wait for that first call to complete. When the response arrives, every waiting request gets it. The returned value is also saved in a DB/cache to speed up future requests.
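Stripped of the API details, this is per-key call deduplication. Below is a minimal standard-library sketch of the idea, separate from the implementation in this post: the names `callGroup`, `inflight`, `Do`, and `demo` are hypothetical, and a 200 ms sleep stands in for the slow API. The `golang.org/x/sync/singleflight` package offers a maintained version of the same pattern.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
	"time"
)

// inflight tracks one in-progress call and its eventual result (hypothetical name).
type inflight struct {
	wg  sync.WaitGroup
	val string
	err error
}

// callGroup deduplicates concurrent calls that share a key (hypothetical name).
type callGroup struct {
	mu    sync.Mutex
	calls map[string]*inflight
}

// Do runs fn at most once per key at a time; concurrent callers with the
// same key block until that single call finishes and then share its result.
func (g *callGroup) Do(key string, fn func() (string, error)) (string, error) {
	g.mu.Lock()
	if g.calls == nil {
		g.calls = make(map[string]*inflight)
	}
	if c, ok := g.calls[key]; ok {
		// a call for this key is already running: wait for it
		g.mu.Unlock()
		c.wg.Wait()
		return c.val, c.err
	}
	c := &inflight{}
	c.wg.Add(1)
	g.calls[key] = c
	g.mu.Unlock()

	c.val, c.err = fn() // only the first caller for this key runs fn
	c.wg.Done()         // release every waiter

	g.mu.Lock()
	delete(g.calls, key) // the next caller starts a fresh call
	g.mu.Unlock()
	return c.val, c.err
}

// demo fires n concurrent requests for the same key and reports how many
// times the slow function actually ran and how many callers got the value.
func demo(n int) (calls, answered int32) {
	var g callGroup
	var wg sync.WaitGroup
	slow := func() (string, error) {
		atomic.AddInt32(&calls, 1)
		time.Sleep(200 * time.Millisecond) // stand-in for the 30-second API
		return "John Doe", nil
	}
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			if v, err := g.Do("same_info", slow); err == nil && v == "John Doe" {
				atomic.AddInt32(&answered, 1)
			}
		}()
	}
	wg.Wait()
	return calls, answered
}

func main() {
	calls, answered := demo(5)
	fmt.Println("API calls:", calls, "answered:", answered)
}
```

Because waiters block on a `sync.WaitGroup` rather than on individual channels, there is no list of pending callers to maintain. The trade-off is that a waiter cannot give up early on its own, so a caller that must honor cancellation would run `Do` in a goroutine and `select` on its context.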

This way, a user waits no longer than the first request takes to complete. Requests made before the first request completes wait for its response, while requests made after it completes get the value from the DB/cache.
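The read path described here is the cache-aside pattern: look in the DB/cache first, and only on a miss pay for the slow call and store its result. A minimal sketch, assuming an in-memory map as a stand-in for the DB/cache and a hypothetical `fetchSlow` function for the third-party API; `infoCache`, `getOrFetch`, and `lookupTimes` are also hypothetical. In the full design, the miss branch would go through the deduplicating requester rather than calling the API directly.

```go
package main

import (
	"fmt"
	"sync"
)

// infoCache is an in-memory stand-in for the DB/cache (hypothetical).
type infoCache struct {
	mu    sync.RWMutex
	items map[string]string
}

func (c *infoCache) get(id string) (string, bool) {
	c.mu.RLock()
	defer c.mu.RUnlock()
	v, ok := c.items[id]
	return v, ok
}

func (c *infoCache) set(id, v string) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.items[id] = v
}

// getOrFetch returns the cached value if present; otherwise it calls fetch
// (the slow API) and stores the result so later requests skip the API.
func getOrFetch(c *infoCache, id string, fetch func(string) (string, error)) (string, error) {
	if v, ok := c.get(id); ok {
		return v, nil // cache hit: no API cost
	}
	v, err := fetch(id)
	if err != nil {
		return "", err // failures are not cached, so a retry can succeed
	}
	c.set(id, v)
	return v, nil
}

// lookupTimes performs n sequential lookups of the same id and returns how
// many times the slow API was actually called.
func lookupTimes(n int) int {
	cache := &infoCache{items: map[string]string{}}
	apiCalls := 0
	fetchSlow := func(id string) (string, error) { // hypothetical slow API
		apiCalls++
		return "info for " + id, nil
	}
	for i := 0; i < n; i++ {
		if _, err := getOrFetch(cache, "same_info", fetchSlow); err != nil {
			panic(err)
		}
	}
	return apiCalls
}

func main() {
	fmt.Println("API calls:", lookupTimes(3)) // prints "API calls: 1"
}
```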

Implementation

```go
package main

import (
	"log"
	"sync"
	"time"
)

// duration simulates how long the slow third-party API takes to respond.
var duration = time.Second * 5

type infoModel struct {
	// INFO FIELDS
	Name    string
	Age     int
	Sex     string
	Hobbies []string
	InfoID  string
}

// infoSaver defines the interface required to save infoModel to a db.
type infoSaver interface {
	CreateInfo(i *infoModel) error
}

// infoChan is the struct passed in the channel;
// it contains the info model and an error.
type infoChan struct {
	info infoModel
	err  error
}

// infoBatchRequester handles requests to the slow API/operation and
// ensures only the first request for an infoID calls the API/op.
type infoBatchRequester struct {
	mx      sync.Mutex
	pending map[string][]chan<- infoChan // holds pending requests per infoID
	infoSaver
}

// Request makes the request to the API. Only the first caller for an infoID
// performs the call; later callers are queued and receive the same result.
// Result channels should be buffered (capacity 1) so delivery never blocks
// when a caller has already given up.
func (i *infoBatchRequester) Request(infoID string, result chan<- infoChan) {
	// Check and register under a single lock, so two concurrent
	// "first" requests cannot both start an API call.
	i.mx.Lock()
	if _, ok := i.pending[infoID]; ok {
		// a call is already in flight: join the list of pending requests
		i.pending[infoID] = append(i.pending[infoID], result)
		i.mx.Unlock()
		return
	}
	// create the first pending request
	i.pending[infoID] = []chan<- infoChan{result}
	i.mx.Unlock()

	// pretend to do the long-running task (a real call would set err)
	log.Println("DOING LONG RUNNING TASK. CALLED ONCE FOR", infoID)
	time.Sleep(duration)
	value := infoChan{info: infoModel{
		Name:    "John Doe",
		Age:     50,
		Sex:     "Male",
		Hobbies: []string{"eating"},
		InfoID:  infoID,
	}}

	// Save before releasing the key, so a request that arrives after the
	// key is deleted finds the value in the DB instead of calling the API.
	if value.err != nil {
		log.Println("Error returned", value.err)
	} else if err := i.infoSaver.CreateInfo(&value.info); err != nil {
		log.Println("error saving to db:", err)
	} else {
		log.Println("SAVED TO DB")
	}

	// Take the waiters and delete the key in one step, so no request can
	// join after the results are sent and then wait forever.
	i.mx.Lock()
	results := i.pending[infoID]
	delete(i.pending, infoID)
	i.mx.Unlock()

	for _, r := range results {
		r <- value
		close(r)
	}
}
```
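The fake task above takes no context. If the real API client accepts a `context.Context`, the shared call should probably not use the first user's request context, or that user's cancellation would cancel the call for everyone waiting on it. One option, assuming Go 1.21 or later, is `context.WithoutCancel` combined with a timeout of its own. The helper names `detachedCallContext` and `demo` below are hypothetical.

```go
package main

import (
	"context"
	"fmt"
	"time"
)

// detachedCallContext (hypothetical helper) derives a context for the shared
// API call: it keeps the caller's values but ignores the caller's
// cancellation, and bounds the call with its own timeout instead.
func detachedCallContext(userCtx context.Context, limit time.Duration) (context.Context, context.CancelFunc) {
	return context.WithTimeout(context.WithoutCancel(userCtx), limit)
}

// demo cancels the "user" context and reports both contexts' errors.
func demo() (userErr, callErr error) {
	userCtx, cancelUser := context.WithCancel(context.Background())
	callCtx, cancelCall := detachedCallContext(userCtx, time.Minute)
	defer cancelCall()

	cancelUser() // the user gives up
	return userCtx.Err(), callCtx.Err()
}

func main() {
	userErr, callErr := demo()
	fmt.Println("user:", userErr)        // user: context canceled
	fmt.Println("shared call:", callErr) // shared call: <nil>
}
```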

Using it within an HTTP handler

```go
package main

import (
	"encoding/json"
	"errors"
	"log"
	"net/http"
)

// mockInfoSaver is a mock implementation of infoSaver.
type mockInfoSaver struct{}

func (b mockInfoSaver) CreateInfo(i *infoModel) error {
	log.Println("saving to db", i)
	return nil
}

var (
	ErrNotFound = errors.New("info does not exist")

	defaultInfoBatchRequester = &infoBatchRequester{
		pending:   map[string][]chan<- infoChan{},
		infoSaver: mockInfoSaver{},
	}
)

// getInfo acts like a failed db get operation.
func getInfo(infoID string) (infoModel, error) {
	return infoModel{}, ErrNotFound
}

// handleSlowThirdParty is the HTTP handler that makes the call to the third-party API.
func handleSlowThirdParty(w http.ResponseWriter, r *http.Request) {
	infoID := r.URL.Query().Get("info_id")

	// try the DB/cache first (this fake lookup always misses)
	info, err := getInfo(infoID)
	if err != nil {
		// buffered, so Request can deliver even after this handler returns
		result := make(chan infoChan, 1)
		// run in a goroutine so the API call continues if the user cancels
		go defaultInfoBatchRequester.Request(infoID, result)

		select {
		case <-r.Context().Done():
			// the request was canceled early; there is no one to reply to
			log.Println("request terminated")
			return
		case rs := <-result:
			if rs.err != nil {
				http.Error(w, rs.err.Error(), http.StatusBadRequest)
				return
			}
			info = rs.info
		}
	}

	b, err := json.Marshal(info)
	if err != nil {
		http.Error(w, err.Error(), http.StatusInternalServerError)
		return
	}
	w.Header().Set("Content-Type", "application/json")
	w.Write(b)
}
```
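The handler relies on two details: the shared call runs in its own goroutine, and `result` has a buffer of one, so the sender never blocks (and its goroutine never leaks) after the handler has given up. A minimal sketch of just that pattern, assuming a hypothetical `slowCall` in place of the third-party API and a 50 ms timeout in place of the user canceling; `waitOrGiveUp` and `demo` are also hypothetical names.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"time"
)

// slowCall is a hypothetical stand-in for the third-party API.
func slowCall() string {
	time.Sleep(200 * time.Millisecond)
	return "John Doe"
}

// waitOrGiveUp starts slowCall in the background and waits for it until ctx
// is done. finished is closed once the background goroutine has delivered
// its result, even if nobody is listening anymore.
func waitOrGiveUp(ctx context.Context, finished chan<- struct{}) (string, error) {
	// capacity 1: the send below succeeds even after this function returns
	result := make(chan string, 1)
	go func() {
		result <- slowCall() // never blocks thanks to the buffer
		close(finished)
	}()

	select {
	case <-ctx.Done():
		return "", ctx.Err() // the caller gave up; the call keeps running
	case v := <-result:
		return v, nil
	}
}

// demo runs one caller whose deadline (50ms) is shorter than slowCall
// (200ms) and reports whether the caller timed out and whether the
// background call still completed afterwards.
func demo() (callerTimedOut, backgroundFinished bool) {
	ctx, cancel := context.WithTimeout(context.Background(), 50*time.Millisecond)
	defer cancel()

	finished := make(chan struct{})
	_, err := waitOrGiveUp(ctx, finished)
	callerTimedOut = errors.Is(err, context.DeadlineExceeded)

	select {
	case <-finished:
		backgroundFinished = true
	case <-time.After(time.Second):
	}
	return callerTimedOut, backgroundFinished
}

func main() {
	timedOut, finished := demo()
	fmt.Println("caller timed out:", timedOut, "background finished:", finished)
}
```

With an unbuffered channel, the background goroutine would block forever on its send once the caller had returned, which is exactly the leak the buffer avoids.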

A test file to check that it works as expected:

```go
package main

import (
	"context"
	"log"
	"sync"
	"testing"
	"time"
)

var infoBatcher *infoBatchRequester

func Test_infoFetchPoll_ResolveINFO(t *testing.T) {
	infoBatcher = &infoBatchRequester{
		pending:   map[string][]chan<- infoChan{},
		infoSaver: mockInfoSaver{},
	}

	var wg sync.WaitGroup
	now := time.Now()

	// make 5 requests for the same info_id; request i gives up after 4+i seconds
	for i := 0; i < 5; i++ {
		wg.Add(1)
		go func(i int) {
			defer wg.Done()
			waitTime := time.Second * time.Duration(4+i)
			_, err := run("same_info", i, waitTime)
			// indexes 0 and 1 (4s and 5s deadlines) may time out against the
			// 5s call; no error is expected for index greater than 1
			if i > 1 && err != nil {
				t.Errorf("not expecting error %v on index %d", err, i)
			}
		}(i)
	}

	wg.Wait()
	log.Println("completed in", time.Since(now).Seconds())
}

// run calls infoBatcher.Request for an infoID.
// It takes the infoID, the index of the call, and the duration for the deadline;
// t simulates the user canceling the request after time t.
func run(infoID string, id int, t time.Duration) (infoModel, error) {
	ctx, cancel := context.WithTimeout(context.Background(), t)
	defer cancel()

	// buffered so Request never blocks if no receiver is available
	result := make(chan infoChan, 1)
	// run in the background, like the HTTP handler, so this caller can
	// give up at its deadline while the shared call keeps going
	go infoBatcher.Request(infoID, result)

	select {
	case <-ctx.Done():
		log.Println("CLOSED", id, infoID)
		return infoModel{}, ctx.Err()
	case r := <-result:
		log.Println("PASSED", infoID, id, r)
		return r.info, r.err
	}
}
```

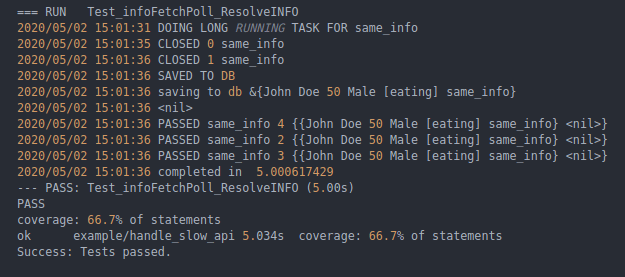

Running the test file produces the output shown in the image above. It shows the requests completed in approximately 5 seconds, the time it takes our fake request to complete.

We can see that the slow fake service was called only once, and even when the request that made the call timed out, the other requests with the same info_id still got a response.

That's money saved on every remaining request for the same info_id. We can now use the extra cash to buy something for ourselves.

Conclusion

I don't know what to conclude with. I have run out of wise words to say 🤔.

Let me just say that using the right algorithm can save money, time, and all the other things good algorithms save.

The End.
