A quick guide on how to back up the sample sakila database with dbForge Studio for MySQL. Probably, would be useful for beginners.

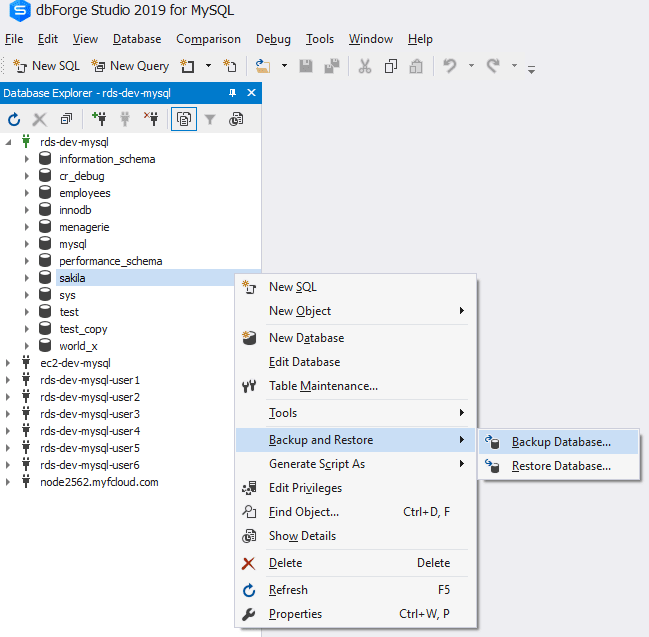

Let’s go straight to the business. Here, we are connected to an Amazon RDS MySQL instance. We are interested in backing up the sample sakila database (which is not large - but we will get to that very shortly). We right click on the database and then choose “Backup and Restore” > “Backup Database...” option from the context menu:

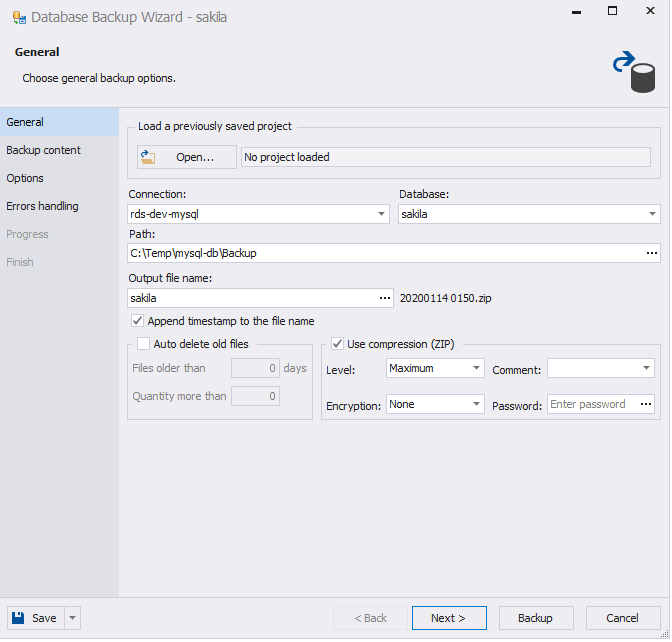

This starts the Database Backup Wizard. From the first screen, we can see the database connection and the database is already selected for us. We can see dbForge Studio has selected a default backup path and file name for us as well. The file name defaults to the database name and we can also opt to append a timestamp at the end of the file name. Also, there’s an option to delete backup files older than a configurable period of time. There are options to compress the backup file and to encrypt it:

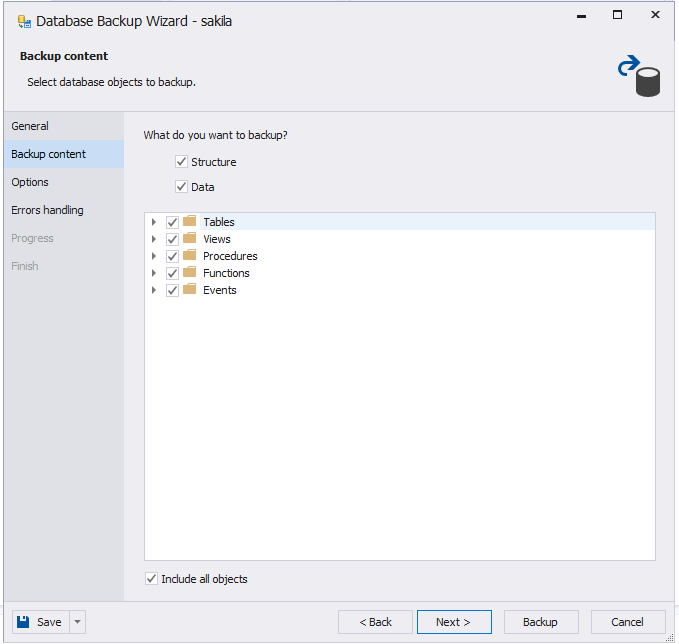

With the options chosen, we click “Next”. In the next screen, we can choose if we want to backup only the database structure, only the data or both. In this case, we are backing up both. Note how we can include or exclude different types of objects from our backup. Again, we are including all objects and then clicking “Next”:

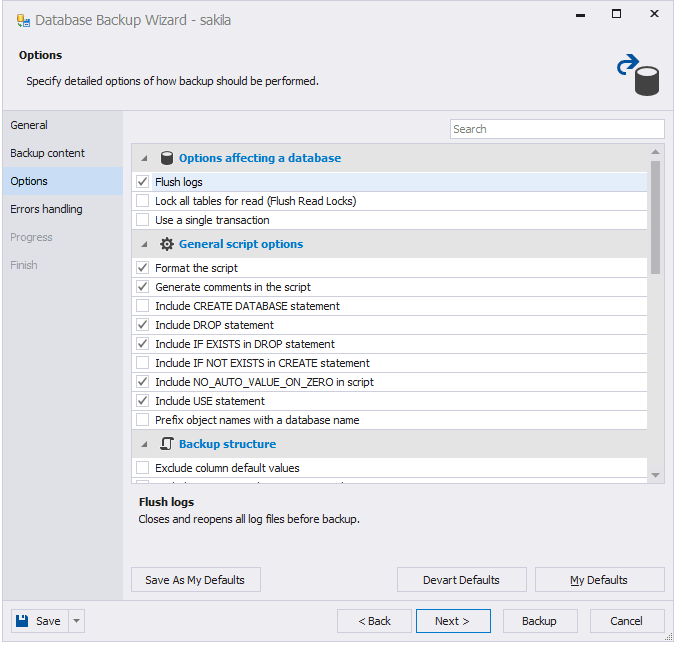

In the next screen, we can choose some extra options. Here, we want dbForge Studio to flush the logs to disk before the backup. We can also choose other options (not visible here) like including database users and roles. Once happy with the options, we click “Next”:

The final screen asks us how we want to handle any errors. For manual backups, we can choose the first option: to prompt us. For automated backups, we can choose the third option (abort on first error) and also write to a log file:

Now, clicking on the “Backup” button will start the backup, and it will be a manual backup. Most tools in the market would give you this type of facility.

What sets dbForge apart is that you can save the options you have chosen so far as a command line script.

Why would you want that? Well, backing up large databases can take time and when you do it manually, any client application can seem to have become unresponsive. Particularly GUI tools seem frozen. In reality though, the backup client is waiting for a synchronous operation to complete, sometimes delayed by network latency. Also, you would want a command-line alternative so you can schedule the backup the task.

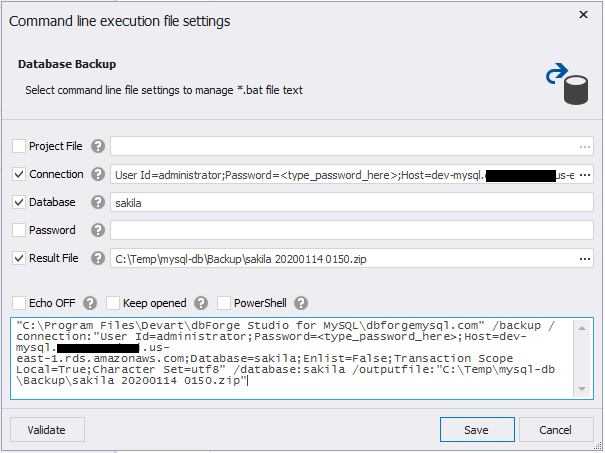

Instead of clicking the “Backup” button, If we click the down arrow key beside the “Save” button, we will see an option “Save Command Line…”. Selecting that option will open the dialog box below:

From here, you can copy the generated command with all the options and save it as a Windows command file. In the snippet below, we are calling a script file. This script file has a single line, and that line is this generated command. The output is also shown:

c:\Temp>backup.cmd

c:\Temp>"C:\Program Files\Devart\dbForge Studio for MySQL\dbforgemysql.com" /backup /connection:"User Id=administrator;Password=xxxxxxxx;Host=dev-mysql.xxxxxxxxi.us-east-1.rds.amazonaws.com;Database=sakila;Enlist=False;Transaction Scope Local=True;Character Set=utf8" /database:sakila /outputfile:"C:\Temp\mysql-db\Backup\sakila 20200114 0150.zip"

=================================================================

Devart dbForge Studio 2019 for MySQL version 8.1.45.0

Copyright 2020 Devart. All rights reserved.

=================================================================

Database backup started at: 14/01/2020 4:06:16 AM

Exporting (100%): Done

Objects processed: 26

Rows processed: 25020

Backup file: C:\Temp\mysql-db\Backup\sakila 20200114 0150.zip

Database backup finished at: 14/01/2020 4:07:16 AM

As you can imagine, we could have scheduled this command script. This would be ideal for large databases because we would not have to worry about any timeouts or any unresponsive clients.

Top comments (0)