In this article I will share my team's experience with setting up infrastructure on BitBucket Pipelines for running integration tests.

I will go over the challenges we faced when orchestrating our service's external dependencies as Docker containers and making them communicate with each other, both locally and during the CI integration tests.

Specifically, I will outline the docker-in-docker problem, how to solve it in each environment (locally, locally within Docker, and in CI), and how to put it all together with docker-compose.yml files and a bit of Bash.

Our particular experience was with Kafka, but the broad ideas can also be applied to other stacks.

Background

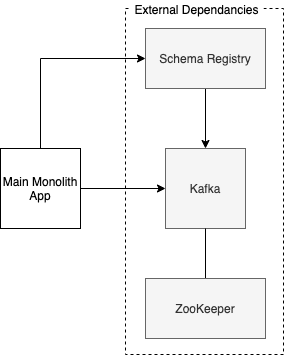

For our current (greenfield) project my team is developing an application that processes events from a Kafka stream. To make sure the team's velocity doesn't drop as the project grows, we wanted to set up a suite of integration tests that runs on each commit during the CI testing phase within BitBucket Pipelines.

We had some specific requirements on how to ensure we can easily run the tests as often as possible - not only during the CI phase, but also locally:

- Running the tests straight from our IDEs without too much wizardry was a must - PyCharm lets you click Run on a specific test which is like the best right :) Having a proper debugger is quite integral to us.

- When running the tests locally, we wanted to be able to execute them directly via a native Python interpreter, but also from within a container that resembles the one used during CI. PyCharm lets you use an interpreter from a Docker container - we do this when we want to run our tests in a CI-like container and still have a proper debugger. For normal development/testing we prefer the native Python interpreter because of the smoother development experience.

- Have an environment as close to prod as possible, both locally and in CI. I.e. it must be easy to spawn the external dependencies and to have full control over them regardless of the environment.

Before we continue, I will reiterate the three environments we'll be addressing:

- local - dev laptop using the native Python interpreter (via pyenv + pipenv)

- local docker - again on dev laptop but python is within docker

- CI - BitBucket Pipeline build which runs our scripts in a dedicated container

The tech stack

Our service is a Python 3.7 app, powered by Robinhood's Faust asynchronous framework. We use Kafka as a message broker. To enforce the format of the Kafka messages we produce and consume, we use Avro schemas, which are managed by a Schema Registry service.

Project structure

Here's the project structure, influenced by this handy faust skeleton project:

    ./project-name
      scripts/
        ...
        run-integration-tests.sh
      kafka/ # external services setup
        docker-compose.yml
        docker-compose.ci.override.yml
      project-name/
        src/...
        tests/...
      bitbucket-pipelines.yml
      docker-compose.test.yml
      ...

The kafka/docker-compose.yml has the definitions of all the necessary external dependencies of our main application. If run locally via cd kafka && docker-compose up -d, the containers will be launched and will be available on localhost:<service port> - we can then run the app and the tests with our native Python against the containerised services.

    # kafka/docker-compose.yml
    version: '3.5'
    services:
      zookeeper:
        image: "confluentinc/cp-zookeeper"
        hostname: zookeeper
        ports:
          - 32181:32181
        environment:
          - ZOOKEEPER_CLIENT_PORT=32181
      kafka:
        image: confluentinc/cp-kafka
        restart: "on-failure"
        hostname: kafka
        healthcheck:
          test: ["CMD", "kafka-topics", "--list", "--zookeeper", "zookeeper:32181"]
          interval: 1s
          timeout: 4s
          retries: 2
          start_period: 10s
        container_name: kafka
        ports:
          - 9092:9092 # used by other containers launched from this file
          - 29092:29092 # use outside of the docker-compose network
        depends_on:
          - zookeeper
        environment:
          - KAFKA_ZOOKEEPER_CONNECT=zookeeper:32181
          - KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR=1
          - KAFKA_LISTENER_SECURITY_PROTOCOL_MAP=PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT
          - KAFKA_ADVERTISED_LISTENERS=PLAINTEXT_HOST://localhost:29092,PLAINTEXT://kafka:9092
          - KAFKA_BROKER_ID=1
      schema-registry:
        image: confluentinc/cp-schema-registry
        hostname: schema-registry
        container_name: schema-registry
        depends_on:
          - kafka
          - zookeeper
        ports:
          - "8081:8081"
        environment:
          - SCHEMA_REGISTRY_KAFKASTORE_CONNECTION_URL=zookeeper:32181
          - SCHEMA_REGISTRY_HOST_NAME=schema-registry
          - SCHEMA_REGISTRY_DEBUG=true
          - SCHEMA_REGISTRY_LISTENERS=http://0.0.0.0:8081

A peculiarity when configuring the kafka container is KAFKA_ADVERTISED_LISTENERS: each listener name is mapped to exactly one host:port pair (e.g. PLAINTEXT_HOST to localhost:29092). This is the address the broker hands back to clients in its metadata response, and clients use it for all subsequent connections. So a client bootstrapping via kafka://localhost:29092 (or kafka://kafka:9092 from inside the compose network) only works if the advertised hostname is reachable from where that client runs. Setting 0.0.0.0 instead of localhost causes Kafka to fail during launch, so you need to specify an actual hostname. We will see below why this is important.
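To make the listener mapping a bit more tangible, here is a minimal Python sketch that splits a KAFKA_ADVERTISED_LISTENERS value into listener name, host and port. The function name parse_advertised_listeners is made up for this illustration and is not part of Kafka or of our project; it only mirrors the NAME://host:port,NAME://host:port format used in the compose file above.

```python
from typing import Dict, Tuple


def parse_advertised_listeners(value: str) -> Dict[str, Tuple[str, int]]:
    """Map each listener name to the (host, port) pair the broker advertises."""
    listeners = {}
    for entry in value.split(","):
        # "PLAINTEXT_HOST://localhost:29092" -> name, "localhost:29092"
        name, _, address = entry.strip().partition("://")
        host, _, port = address.rpartition(":")
        listeners[name] = (host, int(port))
    return listeners


advertised = parse_advertised_listeners(
    "PLAINTEXT_HOST://localhost:29092,PLAINTEXT://kafka:9092"
)
print(advertised["PLAINTEXT_HOST"])  # ('localhost', 29092)
print(advertised["PLAINTEXT"])       # ('kafka', 9092)
```

Each listener name ends up with exactly one address, which is why the hostname in that address has to make sense from the client's point of view.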

CI BitBucket Pipelines & Sibling Docker containers

As per the Bitbucket documentation, it's possible to launch external dependencies as containers during a Pipeline execution. This sounded appealing at first, but it would have made it difficult to set up the same test infrastructure locally, as the bitbucket-pipelines.yml syntax is custom. Since being able to easily run and control the whole stack locally was a requirement for us, we went with docker-compose to start the dockerised services.

To configure the CI we have a bitbucket-pipelines.yml with, among others, a Testing phase, which is executed in a python:3.7 container:

    definitions:
      steps:
        - step: &step-tests
            name: Tests
            image: python:3.7-slim-buster
            script:
              # ...
              - scripts/run-integration-tests.sh # launches external services + runs tests.
    ...

The run-integration-tests.sh script will be executed inside a python:3.7 container when running the tests via docker (locally & on CI) - from this script we launch the kafka & friends containers via docker-compose and then start our integration tests.

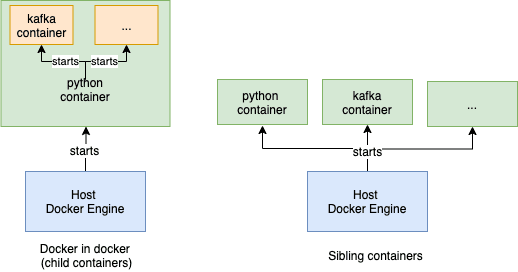

However, doing so directly, i.e. launching a container from within a container, would put us in a "docker in docker" situation, which is considered painful.

A solution to avoid "docker in docker" is to connect to the host's Docker engine, instead of an engine running inside the container, when starting additional containers from within a container. Containers started through the host engine are siblings of the current container rather than its children.

The good news is that this seems to be done out of the box when we are using BitBucket Pipelines! So it's only a concern when we're running locally in Docker.

The pain & the remedy

There are three main problems:

- how to use the host Docker engine from within a container instead of the engine within the container

- how to make two sibling containers communicate with each other

- how to configure our tests & services so that communication can happen in all three of our environments

Use host Docker engine

In the root of our project we have a docker-compose-test.yml which just starts a python container and executes the scripts/run-integration-tests.sh.

    version: '3.5'
    services:
      app:
        tty: true
        build:
          context: .
          dockerfile: ./Dockerfile-local # installs docker, copies application code, etc. nothing fancy
        entrypoint: bash scripts/run-integration-tests.sh
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock # That's the gotcha

When the above container launches, it will have docker installed, and the docker commands we run will be directed to our host's Docker engine because we mounted the host's socket file in the container.
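If you want to double check from inside the container that the socket really got mounted, a small Python check along these lines can help. This is only a sketch: is_unix_socket is a hypothetical helper name, and the path is simply the one from the volume mount above.

```python
import os
import stat


def is_unix_socket(path: str) -> bool:
    """Return True if `path` exists and is a Unix domain socket."""
    try:
        return stat.S_ISSOCK(os.stat(path).st_mode)
    except OSError:
        # Missing path or no permission to stat it.
        return False


DOCKER_SOCKET = "/var/run/docker.sock"  # path taken from the volume mount above

if is_unix_socket(DOCKER_SOCKET):
    print("Docker socket found: docker commands will go to the engine that owns it")
else:
    print("No Docker socket at", DOCKER_SOCKET)
```

Note that this only confirms the socket file is present. It does not prove that the current user is allowed to talk to the engine through it.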

To start this test container we actually combine both kafka/docker-compose.yml & docker-compose-test.yml via docker-compose --project-directory=. -f local_kafka/docker-compose.yml -f ./docker-compose-test.yml -f ... <build|up|...>. This is just an easy way to extend the compose file containing all the kafka services with one additional service. We have this in a Makefile to save ourselves from typing too much. E.g. with the Makefile snippet below we can just run make build-test to build the test container.

    command=docker-compose --project-directory=. -f local_kafka/docker-compose.yml -f ./docker-compose-test.yml

    build-test:
    	$(command) build --no-cache

Sibling inter-container communication

When running the containers locally

It's cool that we can avoid the docker-in-docker situation but there's one "gotcha" we need to address :)

How to communicate from within one sibling container to another container?

Or in our particular case, how to make the python container with our tests connect to the kafka container - we can't simply use kafka://localhost:29092 within the python container, as localhost will refer to, well, the python container itself.

The solution we found is to go through the host and use the port which the kafka container publishes on the host.

In newer versions of Docker (out of the box on Docker Desktop for Mac and Windows; on Linux the name has to be mapped explicitly, e.g. with an extra_hosts entry of host.docker.internal:host-gateway on Docker 20.10+), host.docker.internal resolves, from within a container, to the host which started the container. This is handy because if we have kafka running on the host as a container and publishing its 29092 port, then from our python tests container we can use host.docker.internal:29092 to connect to the sibling container with kafka! Good stuff!

So in essence:

- when running the tests from the host's native python we can target localhost:29092

- when running the tests from within a container, we need to configure them to use host.docker.internal:29092
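A quick way to see which of the two addresses works from where you are standing is to try a plain TCP connection. The sketch below is not part of our setup; can_connect is a hypothetical helper, and port 29092 is the one published in kafka/docker-compose.yml.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unresolvable hostnames.
        return False


if __name__ == "__main__":
    for host in ("localhost", "host.docker.internal"):
        status = "reachable" if can_connect(host, 29092) else "not reachable"
        print(f"{host}:29092 is {status}")
```

An open port only tells you that the published port is reachable. The Kafka client will still be redirected to whatever address the broker advertises, so the advertised listener has to match as well.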

When running the containers on BitBucket

Now there's another gotcha, as one might expect. When we are in CI and we start our containers, host.docker.internal does not work from within a container. Would have been nice if it did, right... The good thing is that there is an address that we can use to connect to the host (the BitBucket "host", that is).

I found it by accident when inspecting the environment variables passed to our build - the $BITBUCKET_DOCKER_HOST_INTERNAL environment variable holds the address that we can use to connect to the BitBucket "host".
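Put together, picking the right hostname for the three environments could look roughly like this in Python. Treat it as a sketch under assumptions: docker_host_address is a made-up name, and DOCKER_HOST_ADDRESS is assumed to be a variable that we set ourselves for the local test container.

```python
from typing import Mapping


def docker_host_address(environ: Mapping[str, str]) -> str:
    """Pick the hostname under which the host-published ports are reachable."""
    bitbucket_host = environ.get("BITBUCKET_DOCKER_HOST_INTERNAL")
    if bitbucket_host:
        # CI: BitBucket Pipelines provides the address of its "host".
        return bitbucket_host
    if environ.get("DOCKER_HOST_ADDRESS"):
        # Local docker: assumed marker variable set for the test container.
        return "host.docker.internal"
    # Native Python on the dev laptop.
    return "localhost"


print(docker_host_address({}))  # localhost
print(docker_host_address({"DOCKER_HOST_ADDRESS": "1"}))  # host.docker.internal
# "203.0.113.5" is a placeholder value, not a real BitBucket address.
print(docker_host_address({"BITBUCKET_DOCKER_HOST_INTERNAL": "203.0.113.5"}))  # 203.0.113.5
```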

The above are actually the significant findings that I wanted to share.

In the next section I will go over how to put the files/scripts together so that everything works in all three of our envs.

Gluing it all together

Given the above explanation, the trickiest part, in my opinion, is how to configure the kafka listeners in all three envs and how to configure the tests to use the correct kafka address (localhost, host.docker.internal or $BITBUCKET_DOCKER_HOST_INTERNAL). We need to configure the kafka listeners accordingly because our test kafka client uses a different address to connect to kafka in each env, so the kafka container must advertise the corresponding address.

To handle the kafka listener part we make use of docker-compose's ability to combine multiple compose files. We have the "main" kafka/docker-compose.yml file, which orchestrates all containers and configures them with settings that work fine when using the containers from our local python via localhost. We also added a simple docker-compose.ci.override.yml with the following content:

    version: '3.5'
    services:
      kafka:
        environment:
          - KAFKA_ADVERTISED_LISTENERS=PLAINTEXT_HOST://${BITBUCKET_DOCKER_HOST_INTERNAL:-host.docker.internal}:29092,PLAINTEXT://kafka:9092

This file is used when starting kafka & friends for tests that run within local Docker or CI. If $BITBUCKET_DOCKER_HOST_INTERNAL is set, we are in CI and its value is used. If it's not set, the default host.docker.internal is used, meaning we are in the local Docker env.
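To illustrate what the ${VAR:-default} substitution resolves to in each case, here is a small Python imitation of it. It is only a sketch: expand_with_default is a hypothetical helper that handles just this one substitution form, not the full syntax docker-compose supports.

```python
import re
from typing import Mapping

# Matches ${NAME:-default}
_DEFAULT_PATTERN = re.compile(r"\$\{(\w+):-([^}]*)\}")


def expand_with_default(template: str, environ: Mapping[str, str]) -> str:
    """Expand ${NAME:-default}, using the default when NAME is unset or empty."""
    def replace(match):
        name, default = match.group(1), match.group(2)
        return environ.get(name) or default

    return _DEFAULT_PATTERN.sub(replace, template)


template = (
    "PLAINTEXT_HOST://${BITBUCKET_DOCKER_HOST_INTERNAL:-host.docker.internal}:29092,"
    "PLAINTEXT://kafka:9092"
)

# Local docker: the variable is not set, so the default is used.
print(expand_with_default(template, {}))
# CI: the variable is set ("203.0.113.5" is a placeholder value).
print(expand_with_default(template, {"BITBUCKET_DOCKER_HOST_INTERNAL": "203.0.113.5"}))
```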

Our tests/app are configured via env vars. Our run-integration-tests.sh looks something like this:

    pip install --no-cache-dir docker-compose && docker-compose -v

    docker-compose -f local_kafka/docker-compose.yml -f docker-compose-test-ci.override.yml down
    docker-compose -f local_kafka/docker-compose.yml -f docker-compose-test-ci.override.yml up -d

    # install app dependencies

    # wait for kafka to start (polls the container healthcheck once per second)
    sleep_max=15
    sleep_for=1
    slept=0
    until [ "$(docker inspect -f '{{.State.Health.Status}}' kafka)" = "healthy" ]; do
      if [ "${slept}" -gt "${sleep_max}" ]; then
        echo "Waited for kafka to be up for ${sleep_max}s. Quitting now."
        exit 1
      fi
      sleep "${sleep_for}"
      slept=$((sleep_for + slept))
      echo "waiting for kafka..."
    done

    # !! figure out in which environment we are running and configure our tests accordingly
    if [ -z "$CI" ]; then
      if [ -z "$DOCKER_HOST_ADDRESS" ]; then
        echo "running locally natively"
      else
        echo "running locally within docker"
        export KAFKA_BOOTSTRAP_SERVER=host.docker.internal:29092
        export SCHEMA_REGISTRY_SERVER=http://host.docker.internal:8081
      fi
    else
      echo "running in CI environment"
      export KAFKA_BOOTSTRAP_SERVER="$BITBUCKET_DOCKER_HOST_INTERNAL:29092"
      export SCHEMA_REGISTRY_SERVER="http://$BITBUCKET_DOCKER_HOST_INTERNAL:8081"
    fi

    pytest src/tests/integration_tests

The script can be used in all three of our envs - where needed, it sets the KAFKA_BOOTSTRAP_SERVER and SCHEMA_REGISTRY_SERVER env vars (our tests' configuration) accordingly.
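On the Python side, the tests then only need to read these variables and fall back to the localhost addresses when nothing is set (the native case). A minimal sketch, assuming the defaults below match the ports published in kafka/docker-compose.yml; IntegrationSettings and load_settings are illustrative names, not our actual code.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class IntegrationSettings:
    kafka_bootstrap_server: str
    schema_registry_server: str


def load_settings(environ: Mapping[str, str] = os.environ) -> IntegrationSettings:
    """Read the test configuration, defaulting to the native (localhost) setup."""
    return IntegrationSettings(
        kafka_bootstrap_server=environ.get("KAFKA_BOOTSTRAP_SERVER", "localhost:29092"),
        schema_registry_server=environ.get("SCHEMA_REGISTRY_SERVER", "http://localhost:8081"),
    )


settings = load_settings({})
print(settings.kafka_bootstrap_server)  # localhost:29092
print(settings.schema_registry_server)  # http://localhost:8081
```

Passing the environment in as a parameter keeps the function easy to exercise without touching the real process environment.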

FYI: in the Makefile example a couple of paragraphs ago, I've omitted the ci.override.yml file for brevity.

Thanks for reading the article - I hope you found useful information in it!

Please let me know if you know better ways to solve the problems from above! I couldn't find any, thus wanted to save others from the pain I went through :)
