So currently I am transitioning into the tech industry after spending some time in the music industry. I graduated from the University of New Haven back in 2016 with a degree in music/sound engineering and I've been playing guitar for about 16 years. A few weeks ago I started classes at Flatiron School with hopes of one day becoming a software developer.

Right now I am learning how React works just after learning JavaScript and I can't help but constantly compare the concepts to audio engineering.

Data Flow & Signal Flow

Audio engineering is all about being able to follow the flow of the audio signal as it travels from point to point in your signal chain. The signal may start from an instrument, and then a microphone will transduce it into an electrical signal that we can manipulate by passing it through processors like compressors and EQs. From there, the signal may travel to a mixing console where it can be manipulated even further, then to a loudspeaker, and ultimately end up in your ears.

I feel as though coding works very similarly to this signal chain concept. I am mostly comfortable with following the data flow of code - which is critical for things like nested functions or loops in JavaScript, as well as components and props in React. The data flows from one place to another, being manipulated along the way, to ultimately end up in one final spot - like the DOM.
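Here's a rough sketch of how I picture it in plain JavaScript. The names (`gain`, `clip`, `chain`, `micSignal`) are all made up for this example, and a "signal" here is just an array of numbers, not real audio. Each function acts like a piece of gear: it takes a signal in and hands a new signal to the next one.

```javascript
// Each "processor" returns a function that takes a signal (an array of
// sample values) and returns a new, modified signal.
const gain = (amount) => (signal) => signal.map((sample) => sample * amount);

// Hard-limits every sample to the range [-limit, limit].
const clip = (limit) => (signal) =>
  signal.map((sample) => Math.max(-limit, Math.min(limit, sample)));

// Patches processors together in order, like cabling gear in a rack:
// the output of one becomes the input of the next.
const chain = (...processors) => (signal) =>
  processors.reduce((current, processor) => processor(current), signal);

// Hypothetical input "from the microphone".
const micSignal = [0.25, -0.5, 0.75];

// Boost by 2x, then limit anything above 1 or below -1.
const channelStrip = chain(gain(2), clip(1));
const output = channelStrip(micSignal);

console.log(output); // [ 0.5, -1, 1 ]
```

The original `micSignal` is never changed along the way; every stage produces a new array, so you can always trace back what went in at each point of the chain.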

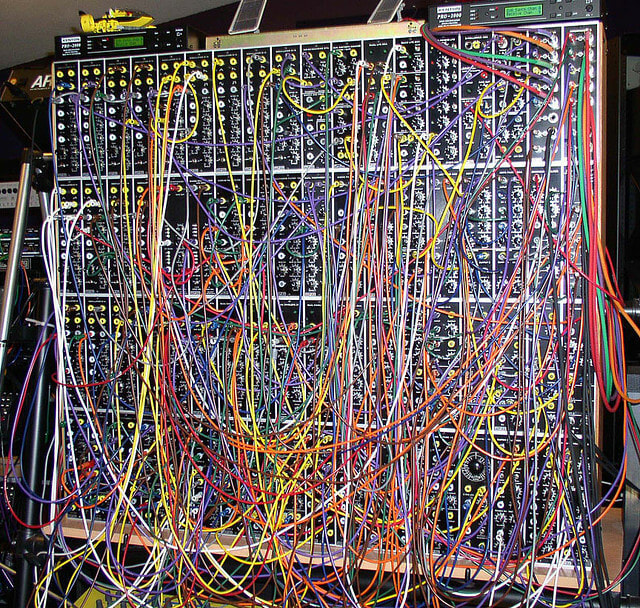

I'd also like to compare React vs. vanilla JavaScript with digital vs. analog recording and mixing. While computer programming is clearly 100% in the digital realm, back in the day sound engineers had only physical, analog equipment to work with - racks upon racks of outboard gear and a jungle of patch cables filling up a patchbay. And everything was recorded to tape because recording on a computer wasn't even a thing yet.

(this patch bay's not even that bad)

Nowadays people can accomplish the same tasks while sitting in their bedrooms with nothing but a laptop. But both analog and digital techniques are equally legitimate methods of audio engineering. It all really depends on what the project is.

I think this is comparable to the creation of React. React builds on the same JavaScript and HTML concepts but in some ways makes developing web apps easier and more efficient. Using React components allows for cleaner and more dynamic code, but that doesn't necessarily mean React is "better" than coding in vanilla JavaScript. It's just like how digital recording makes things easier because you don't have to spend time physically wiring a bunch of gear together and maintaining it, the way swear-by-analog engineers do. Obviously you can do both, just like how you can choose to code with both React and vanilla JavaScript/HTML.
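To make the component idea a bit more concrete, here's a small sketch in vanilla JavaScript that only imitates it. This is not React's actual API: `Track` and `Mixer` are hypothetical names, and they are just plain functions that take a props object and return an HTML string. Still, it shows the part that clicked for me, which is small reusable pieces with data passed down from parent to child.

```javascript
// A "component" here is just a function: props in, markup (a string) out.
// This mimics the concept of React components, not React itself.
const Track = ({ name, volume }) => `<li>${name}: ${volume} dB</li>`;

// The parent passes each track's data down to the child, like props.
const Mixer = ({ tracks }) => `<ul>${tracks.map(Track).join("")}</ul>`;

// Hypothetical session data.
const markup = Mixer({
  tracks: [
    { name: "Guitar", volume: -6 },
    { name: "Vocals", volume: -3 },
  ],
});

console.log(markup);
// <ul><li>Guitar: -6 dB</li><li>Vocals: -3 dB</li></ul>
```

In a browser you could drop that string into the page with something like `element.innerHTML`, whereas React takes care of updating the DOM for you when the data changes.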

I just think it's cool how these industries can evolve so similarly, and making these kinds of comparisons makes learning new things much easier for me. However, I am only just scratching the surface of React, so I might have a completely new perspective on all this later on.

If anybody else is going through, or has been through, a similar transition into software development, I'd love to hear about any kinds of comparisons you are making.
