Hey everyone! Pete and I recently set out to create a somewhat unconventional AWS setup. Now, to be totally transparent, we are almost certainly doing this incorrectly, but it was the only way we got it working! Enjoy.

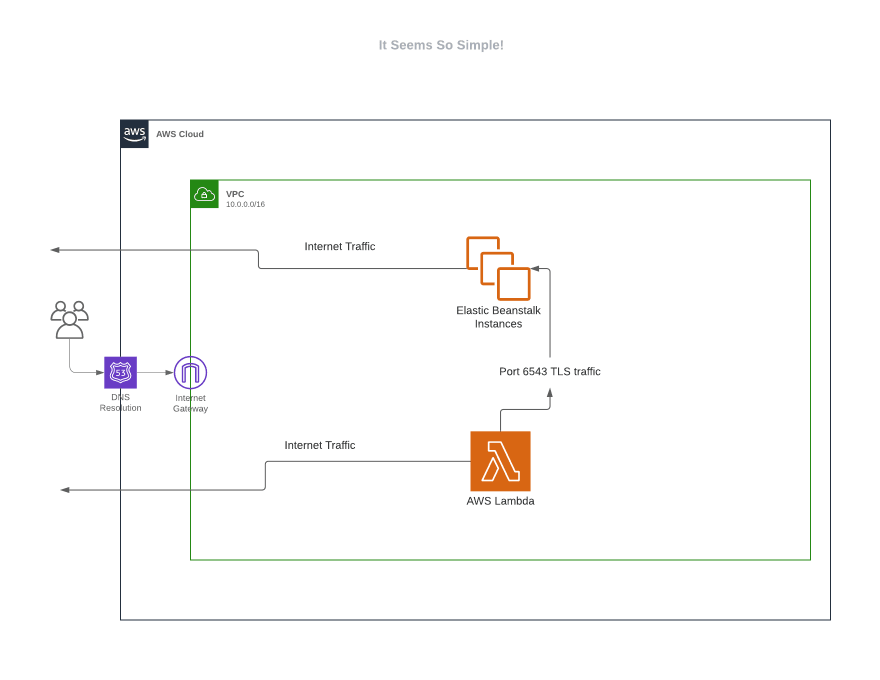

Our goal was to create an Elastic Beanstalk application that is open to the internet (ports 80 and 443) but also reachable by exactly one other AWS resource (a lambda) on one additional port.

Specifically, we needed an EB (Elastic Beanstalk) instance that is open to the world but also accepts traffic on port 6543, and only from this one specific lambda. We didn't want port 6543 open to the world or publicly accessible in any way.

Solution: a custom VPC in which the EB application, a custom load balancer, and the lambda all live.

Now you're probably thinking, why didn't y'all just use a security group to accomplish this? We couldn't rely on security groups alone because, by default, an EB app sits behind an Application Load Balancer, which only speaks HTTP/HTTPS, not raw TCP on custom ports. This meant that no matter what our security group allowed, the load balancer rejected our lambda's traffic on port 6543... Sad, I know.

Then we found out about Network Load Balancers, which work at the TCP level and can route traffic by port. We thought: OK, we can put the lambda inside a new VPC and create a custom network load balancer that routes our 6543 traffic to our Elastic Beanstalk instances. So we set out to do just that.
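For reference, here's a minimal sketch of the two Elastic Load Balancing v2 calls a setup like this boils down to: a TCP target group on port 6543 and a TCP listener on 6543 that forwards to it. It only builds the keyword arguments for boto3's `elbv2` client (`create_target_group` and `create_listener`), so it runs without AWS credentials; the VPC ID, names, and ARNs are hypothetical placeholders, not our real values. An ALB listener would only accept `HTTP` or `HTTPS` as its protocol, while an NLB listener accepts `TCP`, `TLS`, `UDP`, or `TCP_UDP`, which is exactly the difference that pushed us toward an NLB.

```python
"""Build boto3 elbv2 request parameters for a TCP-only NLB listener on port 6543.

All identifiers used here (VPC ID, names, ARNs) are hypothetical placeholders.
"""

BACKEND_PORT = 6543  # the private port our EB instances listen on


def target_group_params(vpc_id, name="eb-tcp-6543"):
    """Kwargs for elbv2.create_target_group: EC2 instance targets reached over TCP."""
    return {
        "Name": name,
        "Protocol": "TCP",         # layer-4 target group, usable by an NLB
        "Port": BACKEND_PORT,
        "VpcId": vpc_id,           # must be the same custom VPC the EB instances live in
        "TargetType": "instance",
    }


def listener_params(nlb_arn, target_group_arn):
    """Kwargs for elbv2.create_listener: forward TCP 6543 to the target group."""
    return {
        "LoadBalancerArn": nlb_arn,
        "Protocol": "TCP",
        "Port": BACKEND_PORT,
        "DefaultActions": [{"Type": "forward", "TargetGroupArn": target_group_arn}],
    }


if __name__ == "__main__":
    tg = target_group_params("vpc-0example")
    listener = listener_params("example-nlb-arn", "example-tg-arn")
    print(tg["Protocol"], tg["Port"], listener["DefaultActions"][0]["Type"])
    # With credentials, these dicts would be passed as
    # boto3.client("elbv2").create_target_group(**tg), and so on.
```

Keeping the parameters as plain dicts makes it easy to eyeball (or test) the config before anything touches a real account.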

Now bear with me, this is going to get very VPC-specific. If you're interested in spinning up VPCs, read on! We ended up creating a custom VPC with two CIDR blocks: one public, one private. When you create a VPC, you must specify a range of IPv4 addresses for it in the form of a Classless Inter-Domain Routing (CIDR) block. In our case, the CIDRs were 11.0.0.0/24 (public) and 192.168.70.0/24 (private) (https://docs.aws.amazon.com/directoryservice/latest/admin-guide/gsg_create_vpc.html).

After creating a VPC, you can add one or more subnets in each Availability Zone. When you create a subnet, you specify its CIDR block, which must be a subset of the VPC's CIDR block. We created three subnets and associated them with the VPC: two public ones, 11.0.1.0/24 in us-west-2a and 11.0.0.0/24 in us-west-2b, and one private one, 192.168.70.0/24 in us-west-2a. (Picking 2a and 2b ended up being important, since you aren't allowed to spin up t2.micros in us-west-2d.) The load balancer uses these subnets to serve traffic.

The public subnets need to be associated with an internet gateway. Here is where we got all this info. If a subnet's traffic is routed to an internet gateway, the subnet is known as a public subnet; private subnets need a NAT gateway to reach the internet. Each subnet must be associated with a route table, which specifies the allowed routes for outbound traffic leaving the subnet. So we created two route tables: a public one that routes internet-bound traffic through the internet gateway, and a private one that routes internet-bound traffic through the NAT gateway. All of the above we got from this random AWS article about how to allow your VPC to access the internet while retaining a private subnet.
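If you want to sanity-check an addressing plan like this before clicking through the console, Python's standard `ipaddress` module is enough. This sketch checks that every subnet sits inside one of the VPC's CIDR blocks and that no two subnets overlap, then mimics how a VPC route table picks a route: the most specific (longest-prefix) match wins, so traffic to VPC addresses stays on the `local` route and everything else falls through to `0.0.0.0/0` (internet gateway for the public table, NAT gateway for the private one). Note that 11.0.1.0/24 is not contained in 11.0.0.0/24, so the sketch assumes a hypothetical 11.0.0.0/16 public block; the `igw-example` / `nat-example` targets are placeholders too.

```python
"""Sanity-check a VPC addressing plan and model route-table lookups.

Assumption: the public VPC block is a hypothetical 11.0.0.0/16, because
11.0.1.0/24 does not fit inside 11.0.0.0/24. Gateway IDs are placeholders.
"""
import ipaddress
from itertools import combinations

VPC_CIDRS = [ipaddress.ip_network("11.0.0.0/16"), ipaddress.ip_network("192.168.70.0/24")]

SUBNETS = {
    "public-2a": ipaddress.ip_network("11.0.1.0/24"),
    "public-2b": ipaddress.ip_network("11.0.0.0/24"),
    "private-2a": ipaddress.ip_network("192.168.70.0/24"),
}


def check_plan(vpc_cidrs, subnets):
    """Return a list of problems: subnets outside the VPC or overlapping each other."""
    problems = []
    for name, net in subnets.items():
        if not any(net.subnet_of(block) for block in vpc_cidrs):
            problems.append(f"{name} {net} is outside every VPC CIDR")
    for (a, net_a), (b, net_b) in combinations(subnets.items(), 2):
        if net_a.overlaps(net_b):
            problems.append(f"{a} overlaps {b}")
    return problems


def route_table(default_target):
    """Destination CIDR -> target. 'local' covers every VPC block."""
    routes = {block: "local" for block in VPC_CIDRS}
    routes[ipaddress.ip_network("0.0.0.0/0")] = default_target
    return routes


PUBLIC_RT = route_table("igw-example")   # public subnets: internet via internet gateway
PRIVATE_RT = route_table("nat-example")  # private subnet: internet via NAT gateway


def next_hop(routes, destination):
    """Longest-prefix match: the most specific matching route wins."""
    ip = ipaddress.ip_address(destination)
    matches = [net for net in routes if ip in net]
    return routes[max(matches, key=lambda net: net.prefixlen)]


if __name__ == "__main__":
    print(check_plan(VPC_CIDRS, SUBNETS))
    print(next_hop(PRIVATE_RT, "11.0.1.25"))
    print(next_hop(PRIVATE_RT, "8.8.8.8"))
```

Running `check_plan` against the literal 11.0.0.0/24 block flags `public-2a` as outside the VPC, which is a quick way to catch that kind of typo.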

We then associated the public and private subnets with their route tables and created a lambda configured to run inside the VPC, on the private subnet, with a security group that allows all outbound traffic and nothing inbound (the default for a new security group). Unfortunately, we then realized that even though we hadn't put our EB app in a VPC, Elastic Beanstalk launches instances into the default VPC unless told otherwise. This meant our lambda and custom load balancer were in a different VPC than the Elastic Beanstalk app, and they couldn't communicate. UGH. And since you can't change the VPC of an EB environment after it's created, we had to tear it down and start a new one. Fret not, this wasn't too serious: we just spun our Elastic Beanstalk application back up in the new custom VPC.
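Since the VPC is locked in when the environment is created, it's worth pinning it explicitly. Here's a minimal sketch that builds the parameters for both pieces: the lambda's `VpcConfig` (the shape boto3's `lambda` client takes in `create_function` / `update_function_configuration`) and Elastic Beanstalk option settings in the `aws:ec2:vpc` namespace (`VPCId`, `Subnets`, `ELBSubnets`) for `create_environment`. All IDs are hypothetical placeholders, and putting the EB instances and EB's own load balancer in the public subnets is an assumed layout, not a copy of our exact config.

```python
"""Pin the lambda and the Elastic Beanstalk environment to the same custom VPC.

Every ID here is a hypothetical placeholder; the subnet layout is an assumption.
"""

VPC_ID = "vpc-0example"
PUBLIC_SUBNETS = ["subnet-public-2a", "subnet-public-2b"]
PRIVATE_SUBNETS = ["subnet-private-2a"]


def lambda_vpc_config(security_group_ids):
    """VpcConfig for lambda create_function / update_function_configuration."""
    return {"SubnetIds": list(PRIVATE_SUBNETS), "SecurityGroupIds": list(security_group_ids)}


def eb_vpc_option_settings():
    """OptionSettings for elasticbeanstalk create_environment (aws:ec2:vpc namespace).

    Without settings like these, EB launches into the account's default VPC.
    """
    def opt(name, value):
        return {"Namespace": "aws:ec2:vpc", "OptionName": name, "Value": value}

    return [
        opt("VPCId", VPC_ID),
        opt("Subnets", ",".join(PUBLIC_SUBNETS)),     # where the EC2 instances go (assumed)
        opt("ELBSubnets", ",".join(PUBLIC_SUBNETS)),  # where EB's own load balancer goes (assumed)
    ]


if __name__ == "__main__":
    print(lambda_vpc_config(["sg-lambda-egress-only"]))
    for setting in eb_vpc_option_settings():
        print(setting["OptionName"], "=", setting["Value"])
```

The key point is that both `VPC_ID` values come from the same place, so the lambda, the NLB, and the EB instances can't drift into different VPCs the way ours did.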

Once we configured our VPC to allow incoming traffic from anywhere and put our subnets in the correct Availability Zones, we spun up our Elastic Beanstalk application in the VPC we had just created. We added a VPC access policy to our lambda execution role (also from this doc). We then configured the custom load balancer, which also lives in the custom VPC, to point its traffic (traffic on port 6543) at the instances that the Elastic Beanstalk application spins up. We created a security group that allows 6543 from the VPC's private subnets, which is how you allow traffic from your NLB (network load balancer), since NLBs couldn't be associated with security groups at the time. We added that security group to our Elastic Beanstalk instance config, and because everything is in the same VPC, this traffic works!

Next, we created a target group for the NLB that includes our Elastic Beanstalk instance(s). (TODO: we don't know how to automatically add new EC2 instances to this NLB target group when EB autoscales up/down.)
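The security-group rule is the piece that actually keeps 6543 private, so here's a minimal sketch of it: the `IpPermissions` entry in the shape boto3's `ec2.authorize_security_group_ingress` expects, plus a tiny evaluator showing that only sources inside the private subnet (192.168.70.0/24) can reach 6543. The evaluator is a simplified model: security groups have no deny rules, so anything that doesn't match an allow rule is dropped. It ignores stateful return traffic and the NLB's own health-check traffic.

```python
"""Model the instance security group that opens TCP 6543 to the private subnet only."""
import ipaddress

PRIVATE_SUBNET_CIDR = "192.168.70.0/24"
BACKEND_PORT = 6543

# Shape accepted by boto3 ec2.authorize_security_group_ingress(GroupId=..., IpPermissions=...)
IP_PERMISSIONS = [
    {
        "IpProtocol": "tcp",
        "FromPort": BACKEND_PORT,
        "ToPort": BACKEND_PORT,
        "IpRanges": [{"CidrIp": PRIVATE_SUBNET_CIDR, "Description": "lambda via NLB on 6543"}],
    }
]


def allows(permissions, source_ip, port, protocol="tcp"):
    """True if any allow rule matches; no match means the packet is dropped."""
    ip = ipaddress.ip_address(source_ip)
    for rule in permissions:
        if rule["IpProtocol"] != protocol:
            continue
        if not rule["FromPort"] <= port <= rule["ToPort"]:
            continue
        if any(ip in ipaddress.ip_network(r["CidrIp"]) for r in rule["IpRanges"]):
            return True
    return False


if __name__ == "__main__":
    print(allows(IP_PERMISSIONS, "192.168.70.42", 6543))  # lambda in the private subnet
    print(allows(IP_PERMISSIONS, "203.0.113.7", 6543))    # some host on the internet
    print(allows(IP_PERMISSIONS, "192.168.70.42", 22))    # right source, wrong port
```

Scoping the rule to the private subnet's CIDR (rather than the whole VPC) is what makes "only our lambda" true, as long as the lambda is the only thing living in that subnet.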

Lastly, we told our lambda to connect to the public DNS name of our NLB on port 6543. That name resolves to a private IP inside the VPC, so the traffic ends up hitting our Elastic Beanstalk server on the proper private port. And... take a breath. It worked.
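On the lambda side, talking to the NLB is just plain TCP. Here's a minimal sketch of a Python handler, assuming the NLB's DNS name arrives in a hypothetical `NLB_DNS_NAME` environment variable and that whatever listens on 6543 replies and then closes the connection (the actual protocol on that port isn't covered in this post). Before sending, it resolves the name and refuses to continue if any address is not private, a cheap guard that the traffic really is taking the in-VPC path.

```python
"""Lambda-side TCP client for the NLB on port 6543 (sketch; wire protocol assumed)."""
import ipaddress
import os
import socket

BACKEND_PORT = 6543


def resolve_private(host, port=BACKEND_PORT):
    """Resolve host to IPv4 addresses and fail if any of them is not private."""
    infos = socket.getaddrinfo(host, port, socket.AF_INET, socket.SOCK_STREAM)
    addresses = sorted({info[4][0] for info in infos})
    public = [a for a in addresses if not ipaddress.ip_address(a).is_private]
    if public:
        raise RuntimeError(f"{host} resolved to non-private addresses: {public}")
    return addresses


def send_to_backend(host, port, payload, timeout=5.0):
    """Send payload over TCP and read the reply until the server closes the socket."""
    resolve_private(host, port)
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(payload)
        sock.shutdown(socket.SHUT_WR)  # signal that we're done sending
        chunks = []
        while chunk := sock.recv(4096):
            chunks.append(chunk)
    return b"".join(chunks)


def handler(event, context):
    """Lambda entry point; NLB_DNS_NAME is a hypothetical env var holding the NLB's DNS name."""
    host = os.environ["NLB_DNS_NAME"]
    reply = send_to_backend(host, BACKEND_PORT, event.get("message", "").encode())
    return {"reply": reply.decode(errors="replace")}
```

If the backend keeps the connection open instead of closing it, `recv` will block until the timeout fires, so a real handler would need to frame messages according to whatever protocol runs on 6543.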

We now had a lambda that could access the internet and send TCP traffic on port 6543 to an Elastic Beanstalk application, which could also access the internet but accepted traffic on port 6543 only from our specific lambda.

Whew! Leave a comment below if you know of a better way to do this, because believe it or not, this is the production setup for Flossbank. Hope this helps a fellow dev in the weeds of VPCs!
