Perhaps the most significant and under-appreciated aspect of Rails and Agile software development of the last roughly 15 years is the culture and discipline around testing and test-driven development.

I’ve never come to understand why testing and TDD are so often maligned by the loudest developers: It’s too slow, it takes longer, the boss didn’t want or ask for it, they’ll say.

You don’t hear about these developers or this ideology often in professional circles, but you encounter them quickly in the wild west of freelance development.

[SEIZURE WARNING: There is an animated GIF towards the bottom of this page that flashes. Please disable animated GIFs if you are susceptible to flashing lights.]

Indeed, much of the discussion in the Rails community is about a codebase’s test suite (the collection of tests for your whole application, usually called “the tests” or “the specs”): How much of your codebase is covered (as measured in %, which I discuss further below)? How easy are the tests to write? Do they use factories or fixtures? How brittle are they? Do they test the right things, and are they valuable?

All of these are the right questions. Although there is no substitute for day-in, day-out practice if you want to become great at testing, I will try to offer some broad ‘best practice’ answers to these questions.

The enlightened developers don’t ask or care about whether or not the boss told us to write a tested codebase. We just know the answers to the above questions and do what’s right for the codebase: write specs.

Testing has varying degrees, varying methods, varying strengths.

In 99 Bottles of OOP, Metz, Owen, and Stankus make this interesting observation:

Belief in the value of TDD has become mainstream, and the pressure to follow this practice approaches an unspoken mandate. Acceptance of this mandate is illustrated by the fact that it’s common for folks who don’t test to tender sheepish apologies. Even those who don’t test seem to believe they ought to do so.

(Metz, et al, 99 Bottles of OOP, Second Edition, 2020. p 43)

So testing exists in a murky space: The top dev shops and teams know it is essential, but its implementation is inconsistent. Sadly, I’ve seen lots of development happen where people either just don’t write tests, write tests blindly, use tests as a cudgel, or skip end-to-end testing altogether.

Many years in this industry have led me to what seems like an extreme position. Not writing tests should be seen as akin to malpractice in software development. Hiring someone to write untested code should be outlawed.

Having a tested codebase is absolutely the most significant benchmark in producing quality software today. If you are doing serious application development but you don’t have tests, you have already lost.

Having a good test suite is not only the benchmark of quality, it means that you can refactor with confidence.

There are two kinds of tests you should learn and write:

- Unit testing (also called model testing or black-box testing)

- End-to-end testing (also called integration testing, feature testing, or system tests)

These go by different names. Focus on the how and why of testing and don’t get lost in the implementation details of the different kinds of tests. (To learn to do testing in Ruby, you can check out my course where I go over all the details.)

Unit Testing

Unit tests are the “lowest-level” tests. In unit testing, we test only one single unit of code: typically, for Rails, a model. Unit testing means the same thing in other languages as it does in Rails, though it may be applied in other contexts.

The thing you are testing is a black box. In your test, you will give your black box some inputs, tell it to do something, and assert that a specific output has been produced. The internals (implementation details) of the black box should not be known to your unit test.
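Here is a minimal sketch of the black-box idea. It assumes Minitest (which ships with Ruby) and a hypothetical `Invoice` class that I made up for illustration; in a Rails app the unit would typically be a model. The test supplies inputs, calls one public method, and asserts on the output. It never peeks at instance variables or private methods.

```ruby
require "minitest/autorun"

# Hypothetical unit under test (illustration only, not from a real app).
class Invoice
  def initialize(line_items)
    @line_items = line_items # array of [unit_price_cents, quantity] pairs
  end

  # Public interface: the only thing the test is allowed to know about.
  def total_cents
    @line_items.sum { |price, quantity| price * quantity }
  end
end

class InvoiceTest < Minitest::Test
  def test_total_sums_price_times_quantity
    invoice = Invoice.new([[500, 2], [250, 1]]) # input
    assert_equal 1250, invoice.total_cents      # expected output
  end

  def test_total_is_zero_for_an_empty_invoice
    assert_equal 0, Invoice.new([]).total_cents
  end
end
```

Because the tests only touch `total_cents`, you could rewrite how the total is computed internally and they would keep passing, which is what makes refactoring safe.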

This fundamental tenet of unit testing is probably one of the most commonly repeated axioms in software development today.

The way to miss the boat here (unfortunately) is to follow the axiom strictly but misunderstand why you are doing it.

Testing, and especially learning to practice test-driven development (that’s when you force yourself not to write any code unless you write a test first), is in fact about much more than quality, refactoring, and black boxes. (Although if you’ve learned that much by now, you’re on the right track.)

Most people think that software, especially web software, is written once and then done. This is a fallacy: Any serious piece of software today is iterated and iterated. Even if you are writing an application for rolling out all at once, on the web there should always be a feedback loop.

Perhaps one of the worst and most problematic anti-patterns I’ve ever seen is when contractors write code, it is deployed, and nobody ever looks at any error logs. Or any stack-traces. Or even at the database records. (Typically this happens less in the context of companies hiring employees because employees tend to keep working for your company on an ongoing basis whereas contractors tend to ‘deliver’ the product and then leave.)

It’s not just about “catching a bug” here or there. Or tweaking or modifying the software once it’s live. (Which, to be fair, most developers don’t actually like to do.)

It’s about the fact that once it is live, anything and everything can and will happen. As a result, the data in your data stores might get into all kinds of states you weren’t expecting. Or maybe someone visits your website in a browser that doesn’t support the Javascript syntax you used. Or maybe this, or maybe that. It’s always something.

This is the marriage of testing & ‘real life’: You want your tests to be ‘as isolated’ as possible, yet at the same time ‘as realistic’ as they need to be in order to anticipate what your users will experience.

That’s the right balance. Your code doesn’t exist in a vacuum, and the test environment is only a figment of your imagination. The unit test is valuable to you because it is as realistic as it needs to be to mimic what will happen to your app in the real, wild world of production.

With unit testing, you aren’t actually putting the whole application through its paces: You’re just testing one unit against a set of assertions.

In the wild (that is, real live websites), all kinds of chaos happens. Your assumption that user_id would never be nil, for example, turns out not to hold in one small step of the workflow because the user hasn’t been assigned yet. (Stop me if you’ve heard this one before.)

You never wrote a spec for the user_id being nil, because you assumed that that could never happen. Well, it did. Or rather, it might.
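A hedged sketch of what that missing spec could look like. The `Order` class and its `owner_label` method are hypothetical names standing in for whatever model holds your user_id, and I am assuming Minitest again. The second test pins down the case you assumed could never happen.

```ruby
require "minitest/autorun"

# Hypothetical stand-in for a model whose user is assigned late in the workflow.
class Order
  attr_reader :user_id

  def initialize(user_id: nil)
    @user_id = user_id
  end

  # Returns a label without raising when no user has been assigned yet.
  def owner_label
    user_id.nil? ? "unassigned" : "user ##{user_id}"
  end
end

class OrderTest < Minitest::Test
  def test_owner_label_with_a_user
    assert_equal "user #42", Order.new(user_id: 42).owner_label
  end

  # The case that "could never happen" in production, until it did.
  def test_owner_label_when_user_id_is_nil
    assert_equal "unassigned", Order.new.owner_label
  end
end
```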

Many developers, especially the ones with something to prove, get too focused on unit testing. For one thing, they use the percentage of codebase covered as a badge of honor.

Percentage of Codebase Covered

When you run your tests, a special tool called a coverage reporter can track each line of code in your application to determine whether it was executed during the test run. It shows you which lines your tests ran over and which lines were ‘missed.’

Of course, it doesn’t tell you whether your test was correct, or whether it asserted the right thing. It just tells you which lines of code your tests never reached. The typical benchmark for a well-tested Rails application is about 85–95% test coverage. (Because of various nuanced factors, there are always some files that you can’t or don’t need to test, though typically these are not your application files.)
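To make the percentage concrete, here is a small sketch of the arithmetic. The `coverage_percent` helper is my own illustration, not part of any coverage tool. It assumes per-line hit counts in the shape Ruby’s built-in Coverage module reports them: an integer run count for each executable line, and nil for lines that are not executable (such as blank lines and comments).

```ruby
# Hypothetical helper: percentage of executable lines that ran at least once.
# line_hits is an array with one entry per source line:
#   Integer => how many times that executable line ran
#   nil     => the line is not executable (blank line, comment)
def coverage_percent(line_hits)
  relevant = line_hits.compact
  # Assumption: a file with nothing executable counts as fully covered.
  return 100.0 if relevant.empty?

  covered = relevant.count { |hits| hits > 0 }
  (covered * 100.0 / relevant.size).round(2)
end

# Made-up hit counts for a six-line file in which one executable line never ran.
hits = [1, 1, nil, 0, 1, nil]
puts coverage_percent(hits) # 3 of 4 executable lines ran, so this prints 75.0
```

Notice what the number leaves out: a line counts as covered if it merely ran, whether or not any assertion checked what it did.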

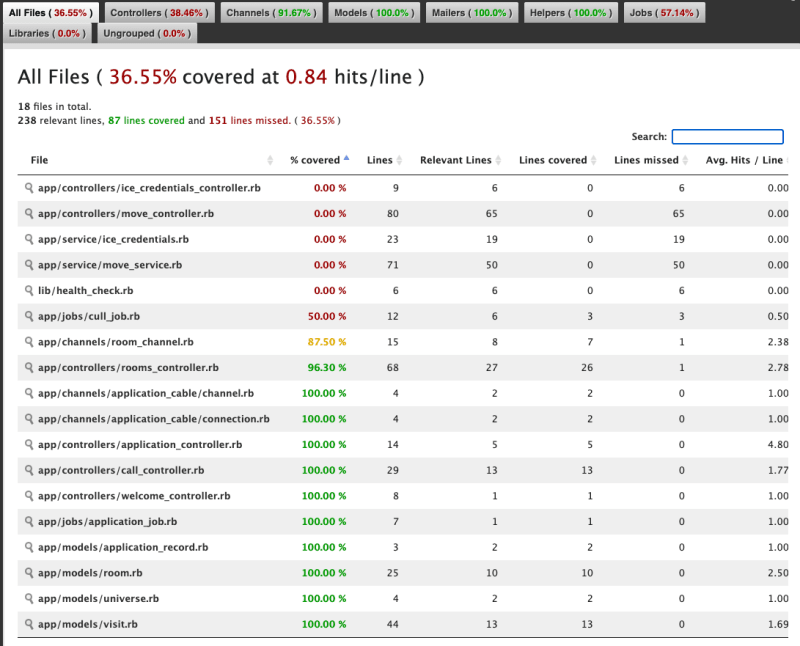

Here I use a tool in Ruby called simplecov-rcov to show which lines (precisely, line-by-line, and file-by-file) are covered. Here in this baby little project of mine, I have an unfortunate 36.55% of my codebase covered:

As you see, the files are sorted with the least covered files shown up top. The top files are in red and say “0.00 %” covered because the test suite does not go into that file.

When I click into the file, I can actually see which lines are covered and uncovered, in red & green like so:

(Here’s a great example of that “it only happens in the wild” thing I was talking about earlier. In theory, I should never be passed a room id (params[:room]) that is not in my database [see line 4], but in practice, for some reason, while I was debugging I was. So I added a small guard to catch this while debugging, which left the line of code inside the if statement uncovered by my test suite.)

Correlating the total percentage of test coverage to your code quality and/or value of the tests is often a fallacy: Look at the percentage of codebase covered, but not every day.

The problem with over-emphasis on unit testing is the dirty little secret of unit testing: Unit tests rarely catch bugs.

So why do we unit test at all then? Unit tests do catch all of your problems when you are upgrading.

You should unit test your code for the following four reasons:

(1) It helps you think about and structure your code more consistently.

(2) It will help you produce cleaner, more easily reasoned code as you refactor.

(3) Refactoring will, in turn, reveal more about the form (or shape) of your application that you couldn’t realize upfront.

(4) Your unit tests will catch bugs quickly when you upgrade Rails.

That’s it. Notice that not listed here is ‘catching regressions’ (or bugs). That’s significant because many developers think unit tests cover all of their bases. Not only do they not cover all of your bases: They don’t even catch or prevent regressions (bugs) in live production apps very often.

Testing is important. Unit testing and end-to-end testing are both important, but between the two, end-to-end testing is the most important of all.

End-To-End Testing

End-to-end testing goes by many names (system specs, integration specs) and is often referred to by the tools used to do it: Capybara, Cypress, Selenium.

End-to-end testing for Javascript applications means the following things:

- Your test starts in the database. I like factories, but fixtures are also popular.

- Your test ‘hits’ the server (Rails, Node, Java, etc)

- The server returns a front-end in Javascript

- Your test interacts in Javascript with your web page

If you do not have all four of those components, you do not have end-to-end testing. Using Capybara, you are really doing all of these things.

If you’ve never seen a Capybara test run, here’s what it looks like:

[Animated GIF: a Selenium suite running in a Rails application.]

I like to show this to people because I don’t think many people see it. Often the specs are run in headless mode, which means those things are happening, just not on the screen. (But you’re still really doing them, invisibly, which is the important part.) While headless mode is much faster (and typically preferred by developers), using Selenium to control a real browser is an extraordinarily powerful tool: not for development, but for evangelizing these techniques and spreading the good word of end-to-end testing.

Most non-developers simply don’t even know what this is. I’ve talked to countless CEOs, product people, and people who’ve worked in tech for years who have never even seen an end-to-end test run. (They’ve literally never witnessed with their own eyes what I’ve shown you in the animated GIF above.)

What these people don’t understand is that TDD, along with end-to-end testing, is a practice of web application development that is itself an advancement. It facilitates a more rapid development process, less code debt, and a lower cost of change.

Since they have never actually witnessed a test runner drive the browser, it is shocking to me how many people in positions of authority are happy to hire teams of QA people to do manual testing for every new feature or release. (This is disparagingly called “monkey testing” by the code-testing community.) With the easy and “inexpensive” availability of remote QA people, a whole industry is happy to keep monkey testing until judgment day. What they don’t know is that those of us who are code-testing are already in the promised land of sweet milk and honey.

My biggest disappointment personally in moving from Rails to the Javascript world (Vue, Ember, Angular, React) is the lack of end-to-end testing in Javascript. It’s not that JSers never do end-to-end testing; it’s that it might not be possible in your setup or on your team.

If you are only working on the frontend, by definition you don’t have access to the database or the backend.

The fundamental issue with the shift away from Rails monoliths and towards microservices is: How are these apps tested?

I don’t know about you, but after years of being a user of microservices, I’m not entirely sold.

Don’t get me wrong: I am not categorically opposed to microservices. (Your database, and Redis, both probably already in your app, could be thought of as microservices and they work very well for us Rails developers.)

But designing applications around microservices is a paradigm ideal for huge conglomerate platforms that simultaneously want to track you, show you ads, and curate massive amounts of content using algorithms.

Most apps aren’t Facebook. I hypothesize that the great apps of the 2020s and 2030s won’t be like Facebook either.

That’s why having the power to do database migrations without involving “a DBA” (or a separate database team), and without having to get the change through a backend team, has been so powerful for the last 15 years. This is normal for smaller startups and Rails.

The social media companies are well poised for leveraging microservices, but most small-medium (even large) Rails apps are not, and here’s why: Doing end-to-end testing with a suite of microservices is a huge headache.

It’s a lot of extra work, and because it’s so hard many developers just don’t do it. Instead, they fall back lazily to their unit testing and run their test coverage reports and say they have tested code. What? The API sent a field to the React Native app that it couldn’t understand so there’s a bug?

Oh well, that was the React Native developer’s problem. Or that was the services layer’s problem.
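Here is a plain-Ruby sketch of how that kind of bug slips through. The names (`UserSerializer`, `GreetingScreen`) are hypothetical stand-ins for the API and the client app, and the renamed field is an invented scenario. Each side passes its own unit-level check against a hand-written fixture. Only a check that feeds the real output of one into the other exposes the mismatch.

```ruby
# Hypothetical backend serializer: the API team renamed the field to "full_name".
module UserSerializer
  def self.call(user)
    { "id" => user[:id], "full_name" => user[:name] }
  end
end

# Hypothetical client code: still expects the old "name" field.
module GreetingScreen
  def self.render(payload)
    "Hello, #{payload.fetch("name")}!"
  end
end

# Unit-level checks: each side passes against its own hand-written fixture.
raise "serializer broken" unless UserSerializer.call({ id: 1, name: "Ada" })["full_name"] == "Ada"
raise "screen broken" unless GreetingScreen.render({ "name" => "Ada" }) == "Hello, Ada!"

# End-to-end check: feed the real serializer output into the real client code.
def end_to_end_ok?
  GreetingScreen.render(UserSerializer.call({ id: 1, name: "Ada" }))
  true
rescue KeyError
  false # the contract mismatch only shows up here
end

puts end_to_end_ok? # prints false
```

Both teams can truthfully report green unit tests and high coverage while the product is broken for the user.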

It’s a slow, creeping NIMBY (not-in-my-backyard) or NIH (not-invented-here) kind of psychology that I see more and more as I learn about segregated, siloed teams where there’s a backend in Rails, a frontend in React or another JS framework, and a mobile app — all written by segregated, separated teams who need to have product managers coordinate changes between them.

Already we see lots of major companies with websites made up of thousands of microservices. I don’t think our web is better because of it: for me, most of my experience using these websites is spinning and waiting to load. Every interaction feels like a mindless, aimless journey waiting for the widget to load the next set of posts to give me that dopamine-kick. Everywhere I look things kind of work, mostly, but every now and then just sort of have little half-bugs or non-responses. It’s all over Facebook, and sadly, across more and more of the web I use, this degradation in quality has gotten worse and worse over the last few years.

It’s a disaster. I blame microservices.

I hear about everybody rushing into mobile development or Node development or re-writing it all in React and I just wonder: Where are the lessons learned by the Rubyists of the last 15 years?

Does anyone care about end-to-end testing anymore? I predict the shortsightedness will be short-lived, and that testing will see a resurgence in importance and popularity in the 2020s.

I don’t know where the web or software will go next, but I do know that end-to-end testing, as pioneered by Selenium in the last 10 years, is one of the most significant stories to happen to software development. There will always be CEOs who say they don’t care about tests. Don’t listen to them (also, don’t work for and don’t fund them). Keep testing and carry on.

[Disclaimer: The conjecture made herein should be thought of in the context of web application development, specifically modern Javascript apps. I wouldn’t presume to make generalizations about other kinds of software development but I know that testing is a big deal in other software development too.]

Jason Fleetwood-Boldt runs the consulting agency VERSO COMMERCE. We can help you with site speed, analytics, competitive research, Wix, Shopify, React, or NextJS/Node apps. Get in touch today at https://versocommerce.com

more at his blog at https://jasonfleetwoodboldt.com

Top comments (1)

Good article about testing even if I don't totally agree with you about some parts.

Unit tests and E2E tests are good for what you said, but comparing UT to E2E tests is not a good idea. There are different stages when you test your application; each stage has its own main goal and may complement another stage. E2E tests alone aren't enough to protect your application from bugs, and the same goes for UT. You forgot to talk about integration or functional tests, which are also important.