⚠️ This post is a part of Learning data analysis with python series. If you haven't read the first post, some of the content won't make sense. Check it out here.

In the previous article, we talked about pandas library in python, Series data structure, different ways to create a Series object using a list,a dictionary and a numpy array. and how we can apply some functions on it to get some statistical data from it. In this post, we'll talk about how you can create a Series object from an external file(csv), what is loc and iloc and some tasks that we can achieve using Series. Also, we'll see an introduction to the DataFrame object as in real world data, almost in every case we come across tabular data(data with multiple columns and rows) rather than a single column data and thus, we'll be using DataFrame more frequently than Series.

What is loc and iloc?

loc and iloc are probably the most asked topic in the data science interviews and many newcomers and data science aspirants get confused initially about loc and iloc. However, it's not rocket science and if you understand loc and iloc in Series objects, it will be easier for you to understand how to use them for indexing the DataFrame object.

In the last post, we saw that we can give index of our choice for the convenience of accessing data using that index but sometimes we may want to use the default integer indexing that pandas provide (0,1,2,...) to access some data. For example, let's say, we have data consisting 100 rows and you want to get the first 50 rows, we can do this using slicing but now that we have custom indexing, we must know the first and 50th index to get the data. This is just an oversimplified case of such scenarios, as in real world data analysis tasks you may be working on a dataset with thousands or millions of rows. In that case, it is not feasible to find custom index and then access data.

And that's when loc and iloc come-in handy. loc and iloc are builtin pandas method for indexing data from a pandas Series or DataFrame. In plain english loc is label based indexing which means that use name(custom index) of row and column whereas iloc is integer index-based which means we use integer index(default pandas index) of row and column to access the data. Let's look at the code and it will make things more clear.

In[1]:

s = pd.Series([0.0, 1, 1, 2, 3, 5, 8], index=[1, 2, 3, 4, 5, 6, 7])

Out[1]:

1 0.0

2 1.0

3 1.0

4 2.0

5 3.0

6 5.0

7 8.0

dtype: float64

In[2]:

s.loc[3]

Out[2]:

1.0

In[3]:

s.iloc[3]

Out[3]:

2.0

As you can see, in our Series object s, we have given custom index starting from 1 to 7. And then, when we use the loc method on this Series and pass 3 as index, we get the data corresponding to the 3 in our index column. And when we use the iloc method on this Series and again pass 3 as index, we get the data corresponding to the 3 in the default pandas index and as the dafault pandas indexing starts from 0, we get the data from 4th row. We can use the reset_index method to reset the index and get the default interger indexing in our Series. Looking at both the indexing side by side will make things more clear.

In[4]:

s.reset_index()

Out[4]:

index 0

0 1 0.0

1 2 1.0

2 3 1.0

3 4 2.0

4 5 3.0

5 6 5.0

6 7 8.0

As you can see, the first unnamed column represents the default pandas indexing, second column named as 'index' represents the custom index that we provided and the third column named as '0' represents corresponding values. I hope now you get the idea of what is loc and iloc and what's the difference between them. We'll talk more about it in the DataFrame objects article.

Missing values or NaNs

Missing values or NaN(Not A Number) values are very common in real world datasets and before using such datasets we have to handle these missing values and NaNs in our dataset in the data cleaning phase. There are many different ways in which we can handle missing values such as dropping rows that contain missing value or replacing missing values with some other values such as mean of that column or 0 or any other value. It's a subjective matter that how you handle the missing values but anyways, Pandas provide very easy way to find missing values using isnan(), isna(), notna() in our data and using it's advance indexing methods, changing those missing values become very easy. Now let's look at the very basic example of handling missing values by changing it to the mean of that column.

In[5]:

'''Series of Mass of Planets, where index is the name of the planet and the corresponding value is the mass of that planet.'''

mass = pd.Series([0.33, 4.87, 5.97, 0.642, 1898, 568, 86.8, 102, 0.0146],

index=['Mercury', 'Venus', 'Earth', 'Mars', 'Jupiter', 'Saturn', 'Uranus', 'Neptune', 'Pluto'])

print(mass)

Out[5]:

Mercury 0.3300

Venus 4.8700

Earth 5.9700

Mars 0.6420

Jupiter 1898.0000

Saturn 568.0000

Uranus 86.8000

Neptune 102.0000

Pluto 0.0146

dtype: float64

In[6]:

'''Series of Diameter of Planets, where index is the name of the planet and the corresponding value is the diameter of that planet.'''

diameter = pd.Series([4879, 12104, 12756, 3475, 6792, 142984, 120536, 51118, 49528, 2370],

index=['Mercury', 'Venus', 'Earth', 'Moon', 'Mars', 'Jupiter', 'Saturn', 'Uranus', 'Neptune', 'Pluto'])

print(diameter)

Out[6]:

Mercury 4879

Venus 12104

Earth 12756

Moon 3475

Mars 6792

Jupiter 142984

Saturn 120536

Uranus 51118

Neptune 49528

Pluto 2370

dtype: int64

In[7]:

# Finding density using the mass and diameter series.

density = mass / (np.pi * np.power(diameter, 3) / 6)

print(density)

Out[7]:

Earth 5.493286e-12

Jupiter 1.240039e-12

Mars 3.913302e-12

Mercury 5.426538e-12

Moon NaN

Neptune 1.603427e-12

Pluto 2.094639e-12

Saturn 6.194402e-13

Uranus 1.241079e-12

Venus 5.244977e-12

dtype: float64

In[8]:

pd.isna(density)

Out[8]:

Earth False

Jupiter False

Mars False

Mercury False

Moon True

Neptune False

Pluto False

Saturn False

Uranus False

Venus False

dtype: bool

In[9]:

# Get the index of data in density where the value is NaN and change it to the mean of density.

density[pd.isna(density)] = np.mean(density)

print(density)

Out[9]:

Earth 5.493286e-12

Jupiter 1.240039e-12

Mars 3.913302e-12

Mercury 5.426538e-12

Moon 2.986303e-12

Neptune 1.603427e-12

Pluto 2.094639e-12

Saturn 6.194402e-13

Uranus 1.241079e-12

Venus 5.244977e-12

dtype: float64

If that was a lot to take in, let me walk you through it. First I've created two Series objects named mass and diameter containing mass and diameter of the planets respectively. The only change in these two is that diameter Series contains 'Moon' in it but mass doesn't. And thus, when we find density using these two Series we get the NaN value for Moon as mass doesn't contain it. I infer you thinking "How did this happen?". Let me explain, when we merge two Series or create a new Series by applying some functions on two different Series, what pandas does is that it matches both the Series by their indices. Also, the order of the indices doesn't matter. Thus, when we created a new Series 'density' using 'mass' and 'diameter', pandas did the calculations and merged these two Series by their indices but in the case 'Moon' it couldn't find the 'mass' and thus, it added NaN.

After creating this new 'density' Series, we see that we have NaN value in our data and to change it we have to find it's index. Now, in our example for the sake of simplicity, we had a single NaN value and also our Series had only 10 rows so we could have changed the NaN value manually using the index of 'Moon', but with large data and many more NaN values we can't go through all the rows and find the indices of each NaN values, therefore pandas has a builtin function to find all the values in which has NaN values. And using the indexing techniques that pandas offer we changed the value of Moon's diameter in one line, otherwise we would have to go through all the data in the Series using a for loop and change the NaN values with the mean value.

As you can see, pandas package provides builtin methods for all the major tasks that we have to do while working with data and it uses numpy library that makes these operations fast as numpy use vectorization methods for all the computer heavy tasks and that's the reason why numpy and pandas are the most popular python packages for data science.

Reading Data From External File & Working with Real World Data

Till now, we have created and worked with the data that we created using list and dictionaries but we don't create data by ourself in real world tasks, we use the data from external files such as csv(comma seperated values) files, text files, excels files, etc. And pandas provides many builtin methods to load the data from external files and make Series and DataFrame objects from that data very easily. Let's look at the example of loading 'csv' file and creating a Series object from it.

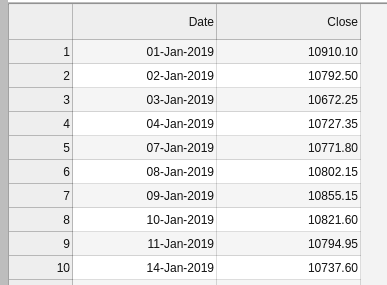

We have a real world data of closing value of Nifty index from the 1st January 2019 to 31st December 2019. Nifty is a benchmark Indian stock market index that represents the weighted average of 50 of the largest Indian companies listed on NSE. Let's see what this data looks like.

Now let's see how to load this data in our code and create a Series object. Pandas has a method named read_csv() which takes file path and some other parameters such asseperator,skip_rows,header, index_col and many more and creates a DataFrame object from it. But we want to create a Series object, thus we'll use iloc and get only the first column from the DataFrame that padas creates.

In[10]:

# Using first column as index column create a Series from 'nifty_data.csv

nifty = pd.read_csv("nifty_data.csv", index_col = 0).iloc[:,0]

print(nifty.head(5)) # Print first 5 rows

Out[10]:

Date

01-Jan-2019 10910.10

02-Jan-2019 10792.50

03-Jan-2019 10672.25

04-Jan-2019 10727.35

07-Jan-2019 10771.80

Now, let's look at some basic operations on this data such as mean, median, max and other statistical data. But instead of doing all these operations one by on the Series, we can use a builtin pandas method describe() to get all these data together using a single function.

In[11]:

nifty.describe()

Out[11]:

count 245.000000

mean 11432.632245

std 454.214611

min 10604.350000

25% 11003.050000

50% 11512.400000

75% 11841.550000

max 12271.800000

Name: Close, dtype: float64

And just like that, we can easily get all the statistics information from the data with a single line. Here 25%,50% and 75% represents percentile of the data meaning that 25% of values in our data is below 11003.050000 and so on. You may ask that here we dont' have median of the data but that's because the median is equal to 50th percentile ,thus 50% also represents median of the data. From a little statistics knowledge I can infer that there are not many significant outliers as there isn't much difference between mean and median.

Now remember I told you, when we apply some operations on two Series and get a new Series, pandas match them using their indices. Let's see that with another example and why keeping that mind is important. Let's say you want to find the total days on which the closing value was higher than the previous day's closing value. Well that's pretty simple, we can just take two slices from this Series, first being the data without the first column and second being the data without the last column and we can just subtract and see if the result is greater than zero or not.Right? Let's see what happens when we do that.

In[12]:

nifty[1:]

Out[12]:

Date

02-Jan-2019 10792.50

03-Jan-2019 10672.25

04-Jan-2019 10727.35

07-Jan-2019 10771.80

08-Jan-2019 10802.15

... ...

24-Dec-2019 12214.55

26-Dec-2019 12126.55

27-Dec-2019 12245.80

30-Dec-2019 12255.85

31-Dec-2019 12168.45

Name: Close, Length: 244, dtype: float64

In[13]:

nifty[:-1]

Out[13]:

Date

01-Jan-2019 10910.10

02-Jan-2019 10792.50

03-Jan-2019 10672.25

04-Jan-2019 10727.35

07-Jan-2019 10771.80

... ...

23-Dec-2019 12262.75

24-Dec-2019 12214.55

26-Dec-2019 12126.55

27-Dec-2019 12245.80

30-Dec-2019 12255.85

Name: Close, Length: 244, dtype: float64

In[14]:

nifty[1:] - nifty[:-1]

Out[14]:

Date

01-Apr-2019 0.0

01-Aug-2019 0.0

01-Feb-2019 0.0

01-Jan-2019 NaN

01-Jul-2019 0.0

...

31-Dec-2019 NaN

31-Jan-2019 0.0

31-Jul-2019 0.0

31-May-2019 0.0

31-Oct-2019 0.0

Name: Close, Length: 245, dtype: float64

Ooops!! That's not what we expected it to be right? Yes and that's because pandas matched indices of both the slices and subtracted their corresponding values and for the values that it couldn't find matching indices (01-Jan-2019 and 31-Dec-2019), it addded NaN values. Thus, when we want to subtract two series by element by element and don't want the index to be matched, we can use values() method on these slices and get the numpy array of values for both the slices and then subtract them.

In[15]:

(nifty.values[1:] - nifty.values[:-1]) > 0

Out[15]:

array([False, False, True, True, True, True, False, False, False,........,False])

In[16]:

np.sum((nifty.values[1:] - nifty.values[:-1]) > 0)

Out[16]:

129

Well that's pretty much it for this post and Series object. There are many more things that you can do with the pandas Series object but including each and everything in my posts is pretty much impossible. Look at the pandas documentation and see what you can do with the Series object. In the next post, We'll talk about DataFrame objects and all the good things we can do with it. Thank you for reading.

Get the full code notebook here : Github

Get the Nifty 50 data here : Data

Top comments (0)