Web scraping and crawling is the process of automatically extracting data from websites. Websites are built with HTML, so their data can look unstructured, but HTML tags, IDs, and classes give it a structure we can rely on. In this article, we will find out how to speed up this process by using concurrent methods such as multithreading. Concurrency means overlapping two or more tasks so that they make progress during the same period: while one task waits for a page to load, another can run. This reduces the total execution time and makes our web scraper faster.

In this article, we will create a bot that scrapes all quotes, authors, and tags from a given sample website, and then make it concurrent using multithreading and multiprocessing.
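To make the idea of overlapping waits concrete, here is a minimal sketch that does not touch the network. The function fake_fetch and its 0.2 second delay are assumptions made purely for illustration; time.sleep stands in for the time a real page load would spend waiting.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_fetch(page):
    """Stand-in for a network request: sleep instead of downloading."""
    time.sleep(0.2)  # assumed latency, purely illustrative
    return f"page-{page}"


def run_sequential(pages):
    # Each call finishes its wait before the next one starts
    return [fake_fetch(page) for page in pages]


def run_concurrent(pages):
    # While one thread sleeps, the others can run, so the waits overlap
    with ThreadPoolExecutor(max_workers=max(1, len(pages))) as executor:
        return list(executor.map(fake_fetch, pages))


def timed(func, pages):
    # Return the function's result together with the elapsed seconds
    start = time.perf_counter()
    result = func(pages)
    return result, time.perf_counter() - start


if __name__ == "__main__":
    pages = [1, 2, 3, 4]
    _, sequential_seconds = timed(run_sequential, pages)
    _, concurrent_seconds = timed(run_concurrent, pages)
    print(f"sequential: {sequential_seconds:.2f}s")
    print(f"concurrent: {concurrent_seconds:.2f}s")
```

Both versions return the same results in the same order; only the waiting overlaps in the concurrent one.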

Modules required 🔌 :

- Python: Setting up python, link

- Selenium: Setting up Selenium. Install Selenium using pip:

pip install selenium

- Webdriver-manager: downloads and manages the browser drivers that Selenium needs. Install Webdriver-manager using pip:

pip install webdriver-manager

Basic Setup 🗂 :

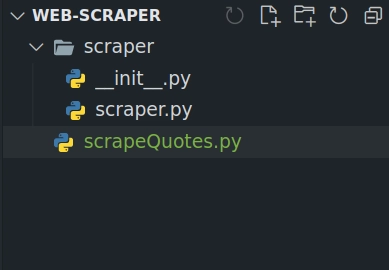

Let us create a directory named web-scraper with a subfolder named scraper. In the scraper folder, we will place our helper functions.

For creating a directory, we can use the following command.

mkdir -p web-scraper/scraper

After creating the folders, we need to create a few files using the commands below. scrapeQuotes.py contains our main scraper code:

cd web-scraper && touch scrapeQuotes.py

scraper.py contains our helper functions, and __init__.py marks the scraper folder as a package:

cd scraper && touch scraper.py __init__.py

After this, web-scraper contains scrapeQuotes.py and a scraper folder that holds scraper.py and __init__.py. Open the project in any text editor of your choice.

Editing scraper.py in the scraper Folder:

Now, let us write some helper functions for our bot.

from selenium import webdriver
from selenium.webdriver.chrome.service import Service as ChromeService
from webdriver_manager.chrome import ChromeDriverManager


def get_driver():
    # Configure Chrome to run without a visible window
    options = webdriver.ChromeOptions()
    options.add_argument("--headless")
    options.add_argument("--disable-gpu")
    # webdriver-manager downloads a matching chromedriver if needed
    return webdriver.Chrome(
        service=ChromeService(ChromeDriverManager().install()),
        options=options,
    )


def persist_data(data, filename):
    # Append each item of data to the file as its own line.
    # Returns True on success, or the exception if writing failed.
    try:
        with open(filename, "a", encoding="utf-8") as file:
            file.writelines([f"{line}\n" for line in data])
    except Exception as e:
        return e
    return True
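persist_data writes every item as a raw line. If the file is meant to be read back as CSV, fields that contain commas need quoting, which a plain join does not provide. The following is a minimal sketch of one alternative that uses Python's csv module; persist_rows and its list-of-fields row format are hypothetical and not part of the original helper.

```python
import csv


def persist_rows(rows, filename):
    """Append rows (sequences of fields) to filename as CSV."""
    # newline="" lets the csv module control line endings itself
    with open(filename, "a", newline="", encoding="utf-8") as file:
        writer = csv.writer(file)
        # Fields containing commas or quotes are escaped automatically
        writer.writerows(rows)
    return True
```

A call such as persist_rows([["Some text, with a comma", "Some Author", "tag1 tag2"]], "quotes.csv") would then produce a row that csv.reader can split back into the same three fields.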

Editing scrapeQuotes.py 📝 :

To scrape the quotes, we need an identifier that locates them on the page. On this website, each quote sits in an element with the class quote.

To get all of these elements, we will use the By.CLASS_NAME locator.

def scrape_quotes(self):
    quotes_list = []
    # Wait up to 20 seconds for the quote elements to become visible
    quotes = WebDriverWait(self.driver, 20).until(
        EC.visibility_of_all_elements_located((By.CLASS_NAME, "quote"))
    )
    for quote in quotes:
        quotes_list.append(self.clean_output(quote))
    self.close_driver()
    return quotes_list

Complete script with a few more functions:

- load_page: loads the web page at the URL the class was created with

- scrape_quotes: fetches all quotes by using the class name

- close_driver: closes the Selenium session after we get our quotes

from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

from scraper.scraper import get_driver, persist_data


class ScrapeQuotes:
    def __init__(self, url):
        self.url = url
        self.driver = get_driver()

    def load_page(self):
        self.driver.get(self.url)

    def scrape_quotes(self):
        quotes_list = []
        # Wait up to 20 seconds for the quote elements to become visible
        quotes = WebDriverWait(self.driver, 20).until(
            EC.visibility_of_all_elements_located((By.CLASS_NAME, "quote"))
        )
        for quote in quotes:
            quotes_list.append(self.clean_output(quote))
        self.close_driver()
        return quotes_list

    def clean_output(self, quote):
        # Expected lines: the quote, "by <author> (about)", "Tags: ..."
        raw_quote = quote.text.strip().split("\n")
        # Replace the curly quotation marks with straight ones
        raw_quote[0] = raw_quote[0].replace("\u201c", '"')
        raw_quote[0] = raw_quote[0].replace("\u201d", '"')
        raw_quote[1] = raw_quote[1].replace("by", "")
        raw_quote[1] = raw_quote[1].replace("(about)", "")
        raw_quote[2] = raw_quote[2].replace("Tags: ", "")
        return ",".join(raw_quote)

    def close_driver(self):
        # quit() ends the whole session, including the driver process
        self.driver.quit()


def main(tag):
    scrape = ScrapeQuotes("https://quotes.toscrape.com/tag/" + tag)
    scrape.load_page()
    quotes = scrape.scrape_quotes()
    persist_data(quotes, "quotes.csv")


if __name__ == "__main__":
    tags = ["love", "truth", "books", "life", "inspirational"]
    for tag in tags:
        main(tag)
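The cleaning step can be tried without a browser. The sketch below is a standalone variant of clean_output that works on plain text; the function name clean_quote_text and the sample string are assumptions for illustration, and str.removeprefix requires Python 3.9 or newer. It assumes the element text has the three lines the script relies on.

```python
def clean_quote_text(text):
    """Turn the three-line text of one quote element into a list of fields."""
    quote, author, tags = text.strip().split("\n")[:3]
    # Replace the curly quotation marks with straight ones
    quote = quote.replace("\u201c", '"').replace("\u201d", '"')
    # Strip the leading "by " and the trailing "(about)" around the author
    author = author.removeprefix("by ").replace("(about)", "").strip()
    tags = tags.removeprefix("Tags: ").strip()
    return [quote, author, tags]


# Assumed sample of what quote.text might look like for one element
sample = "\u201cA made-up quote.\u201d\nby Jane Doe (about)\nTags: example sample"
print(clean_quote_text(sample))
```

Removing only the leading "by " avoids touching the letters "by" inside an author's name, which a plain replace("by", "") would also delete.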

Calculating Time :

In order to see the difference between a normal web scraper and a concurrent one, we will run the scraper with the time command.

time -p /usr/bin/python3 scrapeQuotes.py

Here, we can see our script took 22.84 secs.

Configure Multithreading :

We will rewrite our script to use the concurrent.futures library. From this library we will import a class called ThreadPoolExecutor, which spawns a pool of threads for executing the main function concurrently.

# list to store the threads
threadList = []

# initialize the thread pool
with ThreadPoolExecutor() as executor:
    for tag in tags:
        threadList.append(executor.submit(main, tag))

# wait for all the threads to complete
wait(threadList)

Here, executor.submit takes the function to run, which is main in our case, followed by the arguments we need to pass to it. The wait function blocks the flow of execution until all tasks are complete.
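The behaviour of submit and wait can be sketched without Selenium. In the example below, fake_scrape is a hypothetical stand-in for main that returns rows instead of writing a file. Each call to submit returns a Future, and once wait has returned, the future can be asked for its result or for the exception it raised.

```python
from concurrent.futures import ThreadPoolExecutor, wait


def fake_scrape(tag):
    """Hypothetical stand-in for main(tag); returns rows instead of writing."""
    if not tag:
        raise ValueError("empty tag")
    return [f"{tag}-quote-{n}" for n in range(2)]


def scrape_all(tags):
    with ThreadPoolExecutor() as executor:
        # submit() schedules fake_scrape(tag) and returns a Future immediately
        futures = {executor.submit(fake_scrape, tag): tag for tag in tags}
        # wait() blocks until every future has finished or raised
        done, _ = wait(list(futures))

    results, errors = {}, {}
    for future in done:
        tag = futures[future]
        if future.exception() is not None:
            errors[tag] = future.exception()
        else:
            results[tag] = future.result()
    return results, errors
```

Collecting the results in the main thread like this is one cautious way to keep several threads from appending to the same file at once, since the write can then happen in a single place.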

Editing scrapeQuotes.py for using Multithreading 📝 :

from concurrent.futures import ThreadPoolExecutor, wait

from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

from scraper.scraper import get_driver, persist_data


class ScrapeQuotes:
    def __init__(self, url):
        self.url = url
        self.driver = get_driver()

    def load_page(self):
        self.driver.get(self.url)

    def scrape_quotes(self):
        quotes_list = []
        # Wait up to 20 seconds for the quote elements to become visible
        quotes = WebDriverWait(self.driver, 20).until(
            EC.visibility_of_all_elements_located((By.CLASS_NAME, "quote"))
        )
        for quote in quotes:
            quotes_list.append(self.clean_output(quote))
        self.close_driver()
        return quotes_list

    def clean_output(self, quote):
        # Expected lines: the quote, "by <author> (about)", "Tags: ..."
        raw_quote = quote.text.strip().split("\n")
        # Replace the curly quotation marks with straight ones
        raw_quote[0] = raw_quote[0].replace("\u201c", '"')
        raw_quote[0] = raw_quote[0].replace("\u201d", '"')
        raw_quote[1] = raw_quote[1].replace("by", "")
        raw_quote[1] = raw_quote[1].replace("(about)", "")
        raw_quote[2] = raw_quote[2].replace("Tags: ", "")
        return ",".join(raw_quote)

    def close_driver(self):
        # quit() ends the whole session, including the driver process
        self.driver.quit()


def main(tag):
    scrape = ScrapeQuotes("https://quotes.toscrape.com/tag/" + tag)
    scrape.load_page()
    quotes = scrape.scrape_quotes()
    persist_data(quotes, "quotes.csv")


if __name__ == "__main__":
    tags = ["love", "truth", "books", "life", "inspirational"]

    # list to store the threads
    threadList = []

    # initialize the thread pool
    with ThreadPoolExecutor() as executor:
        for tag in tags:
            threadList.append(executor.submit(main, tag))

    # wait for all the threads to complete
    wait(threadList)

Calculating Time :

Let us calculate the time required for running using Multithreading in this script.

time -p /usr/bin/python3 scrapeQuotes.py

Here, we can see our script took 9.27 secs.

Configure Multiprocessing :

We can easily convert a multithreading script into a multiprocessing script, because in Python multiprocessing is available through ProcessPoolExecutor, which has the same interface as ThreadPoolExecutor. Multiprocessing runs tasks in parallel in separate processes, whereas multithreading runs tasks concurrently inside a single process.

# list to store the processes
processList = []

# initialize the multiprocess interface
with ProcessPoolExecutor() as executor:
    for tag in tags:
        processList.append(executor.submit(main, tag))

# wait for all the processes to complete
wait(processList)
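Because the two executors share an interface, the pool type can even be passed around as a parameter. The sketch below illustrates this with a small CPU-bound task; count_primes and run_all are hypothetical names that are not part of the scraper.

```python
import math
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def count_primes(limit):
    """CPU-bound stand-in task (hypothetical; not part of the scraper)."""
    return sum(
        1
        for n in range(2, limit)
        if all(n % d for d in range(2, math.isqrt(n) + 1))
    )


def run_all(executor_cls, func, items):
    # Both executor classes expose the same submit()/result() interface,
    # so the same code works with either pool type.
    with executor_cls() as executor:
        futures = [executor.submit(func, item) for item in items]
        return [future.result() for future in futures]


if __name__ == "__main__":
    limits = [10, 100, 1000]
    print(run_all(ThreadPoolExecutor, count_primes, limits))
    print(run_all(ProcessPoolExecutor, count_primes, limits))
```

The function handed to a process pool has to be defined at module level so that it can be pickled, and the `if __name__ == "__main__":` guard keeps worker processes from re-running the script body on platforms that start them by spawning a fresh interpreter.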

Editing scrapeQuotes.py for using Multiprocessing 📝 :

from concurrent.futures import ProcessPoolExecutor, wait

from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

from scraper.scraper import get_driver, persist_data


class ScrapeQuotes:
    def __init__(self, url):
        self.url = url
        self.driver = get_driver()

    def load_page(self):
        self.driver.get(self.url)

    def scrape_quotes(self):
        quotes_list = []
        # Wait up to 20 seconds for the quote elements to become visible
        quotes = WebDriverWait(self.driver, 20).until(
            EC.visibility_of_all_elements_located((By.CLASS_NAME, "quote"))
        )
        for quote in quotes:
            quotes_list.append(self.clean_output(quote))
        self.close_driver()
        return quotes_list

    def clean_output(self, quote):
        # Expected lines: the quote, "by <author> (about)", "Tags: ..."
        raw_quote = quote.text.strip().split("\n")
        # Replace the curly quotation marks with straight ones
        raw_quote[0] = raw_quote[0].replace("\u201c", '"')
        raw_quote[0] = raw_quote[0].replace("\u201d", '"')
        raw_quote[1] = raw_quote[1].replace("by", "")
        raw_quote[1] = raw_quote[1].replace("(about)", "")
        raw_quote[2] = raw_quote[2].replace("Tags: ", "")
        return ",".join(raw_quote)

    def close_driver(self):
        # quit() ends the whole session, including the driver process
        self.driver.quit()


def main(tag):
    scrape = ScrapeQuotes("https://quotes.toscrape.com/tag/" + tag)
    scrape.load_page()
    quotes = scrape.scrape_quotes()
    persist_data(quotes, "quotes.csv")


if __name__ == "__main__":
    tags = ["love", "truth", "books", "life", "inspirational"]

    # list to store the processes
    processList = []

    # initialize the multiprocess interface
    with ProcessPoolExecutor() as executor:
        for tag in tags:
            processList.append(executor.submit(main, tag))

    # wait for all the processes to complete
    wait(processList)

Calculating Time :

Let's calculate the time required for running using Multiprocessing in this script.

time -p /usr/bin/python3 scrapeQuotes.py

Here, we can see our Multiprocessing script took 8.23 secs.
