In this two-part article we explore the technologies available for building a cross-platform experience that includes voice assistants. Assuming we want to build a small, simple to-do list app for Android, iOS, web and the Google Assistant, how can we provide the best experience on each platform while making sure our app can scale?

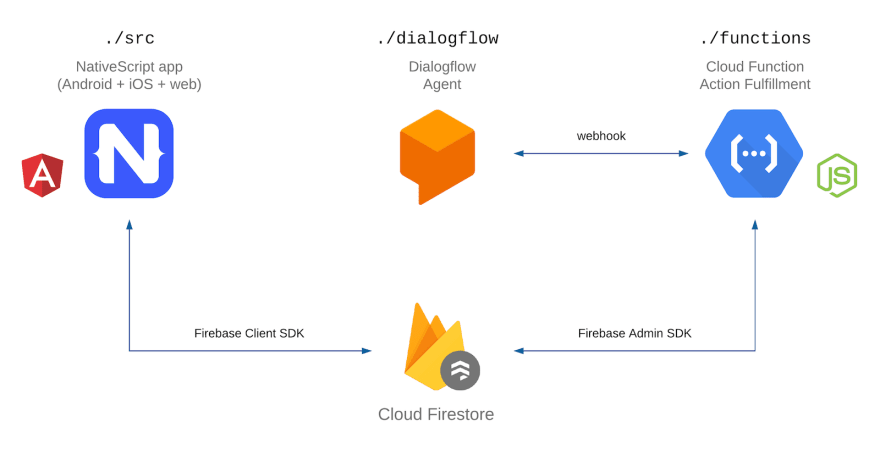

To bring to life the tech stack defined in the previous article, we’ve created VOXE (VOice X-platform Experience). VOXE is a simple proof-of-concept to-do list app for Android, iOS, web and the Google Assistant. Everything lives in a single repository, which contains:

- A NativeScript (Angular) app that compiles to Android, iOS and web.

- A Dialogflow agent.

- A Cloud Function for the Google Action fulfillment.

The app makes use of Cloud Firestore to store the data.

The repository, potatolondon/voxe, is publicly available on GitHub.

Firebase: Infrastructure and services

VOXE makes use of three Firebase services:

- Hosting: for the web app.

- Authentication: we use Google Sign-In here for simplicity, but Firebase supports many other authentication methods, such as passwords, phone numbers and popular federated identity providers like Facebook and Twitter.

- Cloud Firestore: to store the to-do items.

The first two services are easily configurable via the Firebase Console. Let’s dig into our data model and security rules.

Cloud Firestore data model

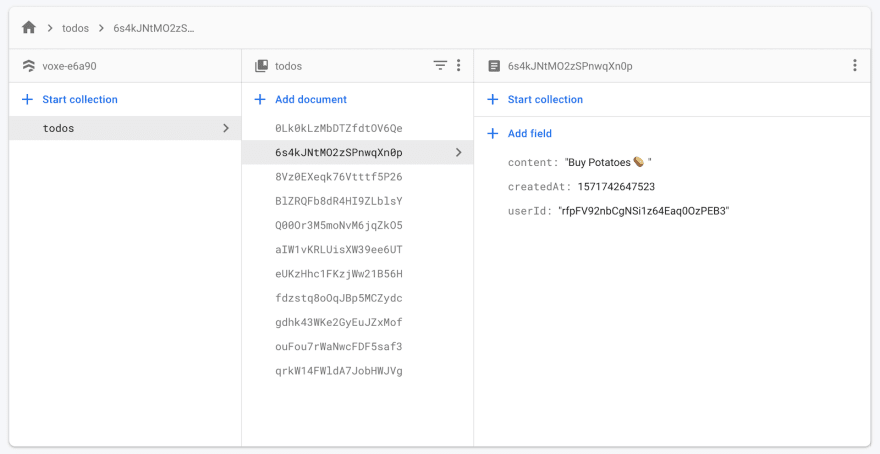

Cloud Firestore is a NoSQL, document-oriented database. Unlike a SQL database, there are no tables or rows. Instead, the data is stored in documents, which are organised into collections.

We therefore need a collection of to-do documents (todos), each of which defines the following fields: content (string), createdAt (a timestamp, stored as a number) and userId (string).
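To make the data model concrete, here is a minimal sketch of a document in the `todos` collection, built by a hypothetical `createTodo` helper (not part of VOXE). Storing `createdAt` as milliseconds since the epoch (`Date.now()`) is our assumption; the model only says the timestamp is a number.

```javascript
// Hypothetical helper that builds a to-do document matching the data model:
// content (string), createdAt (number) and userId (string).
function createTodo(content, userId, now = Date.now()) {
  if (typeof content !== 'string' || content.trim() === '') {
    throw new TypeError('content must be a non-empty string');
  }
  if (typeof userId !== 'string' || userId === '') {
    throw new TypeError('userId must be a non-empty string');
  }
  return {
    content: content.trim(),
    createdAt: now, // numeric timestamp, assumed to be ms since the epoch
    userId,
  };
}

// The object that could then be written with db.collection('todos').add(todo).
const todo = createTodo('Buy milk', 'uid-123', 1546300800000);
console.log(todo);
```

Keeping the `userId` on every document is what lets the security rules below decide ownership without any extra lookups.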

Security rules

Since we’re relying on Firebase client SDKs and not on a bespoke API, how do we make sure users only have access to to-do items created by themselves?

Every database request from a Cloud Firestore mobile/web client library is evaluated against a set of security rules before reading or writing any data. These rules define the level of access for a specific path in the database using conditions based on authentication and data fields.

Let’s have a look at the security rules for our database:

```
service cloud.firestore {
  match /databases/{database}/documents {
    match /todos/{todo} {
      allow read, delete: if request.auth.uid == resource.data.userId;
      allow create: if request.auth.uid == request.resource.data.userId;
      allow update: if request.auth.uid == resource.data.userId
                    && request.auth.uid == request.resource.data.userId;
    }
  }
}
```

- `resource.data` represents the stored document accessed by the request.

- `request.auth.uid` is the `uid` of the authenticated user.

- `request.resource.data` represents the new or updated document, as it would look after the write.

So this set of security rules will make sure that:

- Users can only read and delete documents whose `userId` field matches their user `uid`.

- Users can only create documents whose `userId` field matches their user `uid`.

- Users can only update documents whose `userId` field matches their user `uid`, and this field can’t be changed.
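One way to sanity-check the intent of these rules is to restate them as a plain function. The sketch below is a hypothetical model of the three `allow` rules above, useful for reasoning about them; it is not how Firestore evaluates rules (that happens on Google’s servers), and treating a signed-out request as a denial mirrors the fact that `request.auth` is null in that case.

```javascript
// Hypothetical model of the security rules above (NOT the real evaluator).
//   authUid  -> request.auth.uid (null/undefined when signed out)
//   existing -> resource.data (the stored document, if any)
//   incoming -> request.resource.data (the document being written)
function isAllowed(operation, authUid, existing, incoming) {
  if (!authUid) return false; // signed-out requests are denied
  switch (operation) {
    case 'read':
    case 'delete':
      return Boolean(existing) && existing.userId === authUid;
    case 'create':
      return Boolean(incoming) && incoming.userId === authUid;
    case 'update':
      // Must own the document both before and after the write,
      // so the userId field can't be reassigned.
      return Boolean(existing) && Boolean(incoming) &&
        existing.userId === authUid && incoming.userId === authUid;
    default:
      return false;
  }
}

const mine = { content: 'Buy milk', createdAt: 0, userId: 'alice' };
console.log(isAllowed('read', 'alice', mine)); // true
console.log(isAllowed('read', 'bob', mine)); // false
console.log(isAllowed('update', 'alice', mine, { ...mine, userId: 'bob' })); // false
```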

Our database is now ready to roll.

NativeScript: A cross-platform native and web app

In our previous article we discussed some of the benefits of using NativeScript for building cross-platform apps, focusing on its code-sharing capabilities using Angular.

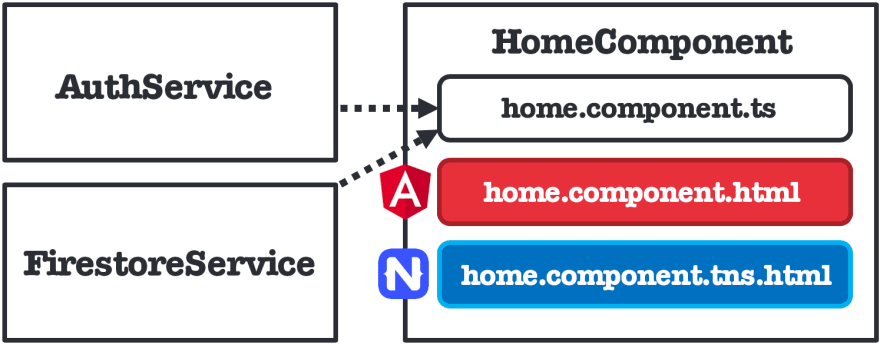

A code-sharing project is one where we keep the code for the web and mobile apps in one place. Let’s have a look at some examples of how we have benefited from this architecture on VOXE.

At component level, these files define the app homepage:

- `home.component.ts`: Angular component definition (web and native).

- `home.component.html`: web template file.

- `home.component.tns.html`: mobile template file.

The same naming convention can also be used for platform-specific styling:

- `home.component.scss`: web styles.

- `home.component.android.scss`: Android styles.

- `home.component.ios.scss`: iOS styles.
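The convention boils down to “use the most specific file that exists for the target platform”. The function below is a simplified, hypothetical model of that lookup, assuming a precedence of platform suffix (`.android.`/`.ios.`) over `.tns.` over the plain shared file; as we understand it, the real resolution is done by the NativeScript/Angular build tooling, not by code like this.

```javascript
// Hypothetical model of the code-sharing naming convention.
// Returns the file a build for `platform` ('web', 'android' or 'ios')
// would pick for `base` + `ext`, or null if none exists.
function resolvePlatformFile(files, base, ext, platform) {
  const candidates = platform === 'web'
    ? [`${base}.${ext}`]
    : [`${base}.${platform}.${ext}`, `${base}.tns.${ext}`, `${base}.${ext}`];
  return candidates.find((name) => files.includes(name)) || null;
}

const homeFiles = [
  'home.component.ts',
  'home.component.html',
  'home.component.tns.html',
  'home.component.scss',
  'home.component.android.scss',
  'home.component.ios.scss',
];

// prints home.component.html
console.log(resolvePlatformFile(homeFiles, 'home.component', 'html', 'web'));
// prints home.component.tns.html
console.log(resolvePlatformFile(homeFiles, 'home.component', 'html', 'ios'));
// prints home.component.android.scss
console.log(resolvePlatformFile(homeFiles, 'home.component', 'scss', 'android'));
// prints home.component.ts (the component class is shared by all platforms)
console.log(resolvePlatformFile(homeFiles, 'home.component', 'ts', 'android'));
```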

We’ve been able to write a single implementation of the Home component (`home.component.ts`) that works for both web and mobile, but this isn’t always possible. The Authentication and Firestore services are a case in point: the web app uses the Firebase JavaScript SDK, while the mobile apps have to use the native Firebase client SDK for each platform. To handle this, we again rely on the naming convention and define two implementations of each service. For example, the Firestore service is defined by these files:

- `firestore.service.ts`: web implementation using the `angularfire` library.

- `firestore.service.tns.ts`: mobile implementation using `nativescript-plugin-firebase`.

- `firestore.service.interface.ts`: interface shared by both implementations of the service.
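The pattern is “components depend on a contract, each platform provides an implementation”. As a hedged sketch in plain JavaScript, the hypothetical `TODO_SERVICE_METHODS` list below stands in for the TypeScript interface, and an in-memory service plays the role of one implementation. The method names (`getTodos`, `addTodo`, `removeTodo`) are assumptions, not VOXE’s actual API, and the calls are kept synchronous for brevity even though real Firestore calls are asynchronous.

```javascript
// Hypothetical contract standing in for firestore.service.interface.ts.
const TODO_SERVICE_METHODS = ['getTodos', 'addTodo', 'removeTodo'];

// Throws if `service` is missing any method of the contract.
function assertTodoService(service) {
  for (const method of TODO_SERVICE_METHODS) {
    if (typeof service[method] !== 'function') {
      throw new TypeError(`TodoService is missing ${method}()`);
    }
  }
  return service;
}

// In-memory implementation (handy in tests). The web and mobile services
// would call angularfire and nativescript-plugin-firebase instead.
class InMemoryTodoService {
  constructor() {
    this.todos = [];
    this.nextId = 1;
  }
  getTodos(userId) {
    return this.todos.filter((todo) => todo.userId === userId);
  }
  addTodo(content, userId) {
    const todo = { id: String(this.nextId++), content, createdAt: Date.now(), userId };
    this.todos.push(todo);
    return todo;
  }
  removeTodo(id) {
    this.todos = this.todos.filter((todo) => todo.id !== id);
  }
}

// The shared component only talks to the contract, so the same code works
// with whichever implementation the platform build provides.
class HomeComponent {
  constructor(todoService) {
    this.todoService = assertTodoService(todoService);
  }
  itemsFor(userId) {
    return this.todoService.getTodos(userId).map((todo) => todo.content);
  }
}

const service = new InMemoryTodoService();
service.addTodo('Buy milk', 'alice');
service.addTodo('Walk the dog', 'bob');
console.log(new HomeComponent(service).itemsFor('alice')); // [ 'Buy milk' ]
```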

Actions on Google: The voice experience

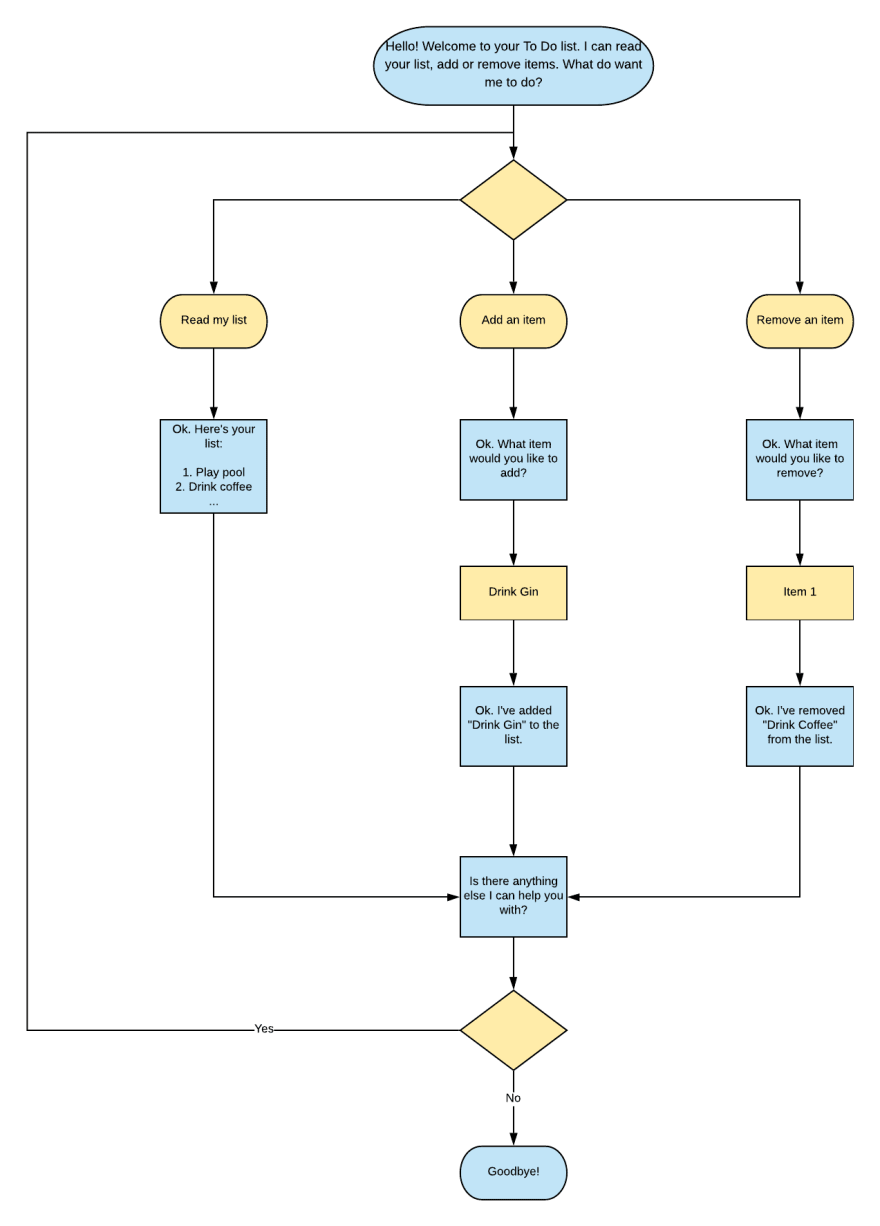

As previously discussed, some of our app features could be triggered via voice (or text) commands using the Google Assistant. Let’s assume we want to be able to read our to-do list and add or remove items. The diagram above shows the conversation flow.

In order to achieve this integration we’ll need to follow these three steps:

- Create an Action in the Google Actions Console.

- Create a Dialogflow Agent.

- Deploy a Fulfillment.

Dialogflow

Dialogflow is a natural language understanding platform that allows us to design and build conversational user interfaces. A Dialogflow agent is a virtual agent that handles these conversations with your end-users. Although the focus of this article is on the Google Assistant, it’s important to mention that Dialogflow can also be used to build text chatbots that can be integrated with different platforms.

Each thing a user may want to do is described by an intent, which categorises the end-user’s intention for one turn of the conversation. We want our Action to respond to requests like:

- Read my list

- Add a new item to my list

- Remove an item from my list

We can create these intents in Dialogflow. Here’s the list of intents in VOXE:

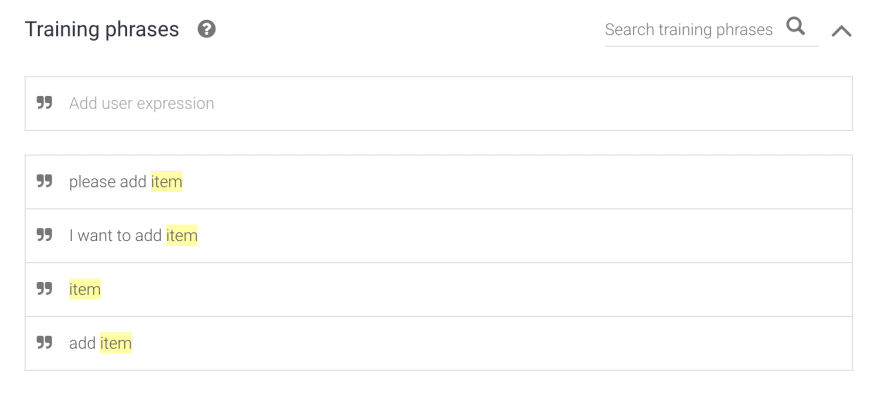

For each intent we need to provide many training phrases: example phrases for what end-users might type or say, referred to as end-user expressions. When an end-user expression resembles one of these phrases, Dialogflow matches the intent. “Please read my list”, “Remove an item from my list” and “Add an item to my list” are examples of training phrases for intents in VOXE.

Training phrases for “Add item request” intent

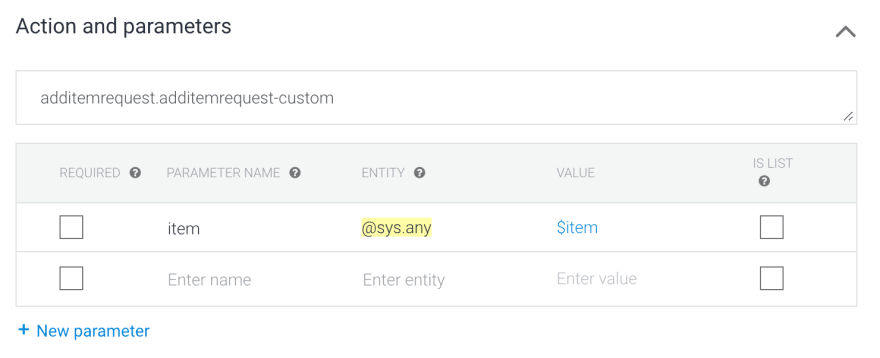
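To illustrate the idea of matching an expression against training phrases, here is a deliberately naive matcher that scores word overlap (Jaccard similarity). Dialogflow uses machine learning rather than anything like this, so treat it purely as a teaching sketch; the “remove item request” intent name and the `0.3` threshold are hypothetical.

```javascript
// Naive illustration only: Dialogflow's real matching is ML-based.
function tokenize(text) {
  return new Set(text.toLowerCase().match(/[a-z']+/g) || []);
}

// Jaccard similarity: shared words / all distinct words.
function jaccard(a, b) {
  let shared = 0;
  for (const word of a) if (b.has(word)) shared++;
  const union = a.size + b.size - shared;
  return union === 0 ? 0 : shared / union;
}

// Returns the intent whose best training phrase overlaps most with the
// expression, or 'fallback' when nothing scores above the threshold.
function matchIntent(expression, intents, threshold = 0.3) {
  const words = tokenize(expression);
  let best = { intent: null, score: 0 };
  for (const [intent, phrases] of Object.entries(intents)) {
    for (const phrase of phrases) {
      const score = jaccard(words, tokenize(phrase));
      if (score > best.score) best = { intent, score };
    }
  }
  return best.score >= threshold ? best.intent : 'fallback';
}

const intents = {
  'read list': ['Please read my list', 'What is on my list'],
  'add item request': ['Add an item to my list', 'I want to add something'],
  'remove item request': ['Remove an item from my list', 'Delete something from my list'],
};
console.log(matchIntent('read my list please', intents)); // read list
console.log(matchIntent('remove an item', intents)); // remove item request
```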

Training phrases can contain parameters of different entity types, from which Dialogflow extracts values. For example, when the user mentions the item to be added to or removed from the list, that item is extracted as a parameter.

“item” parameter for “Add item request” intent

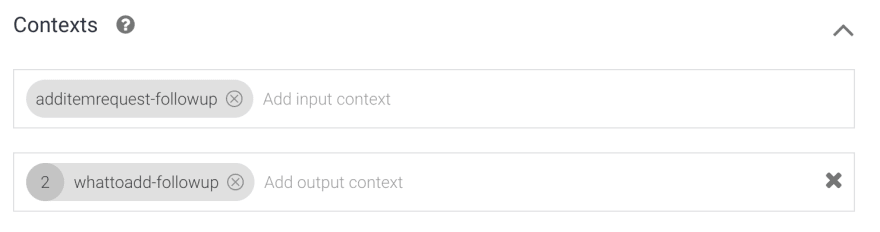
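As a rough mental model of parameter extraction, imagine each annotated training phrase as a template with an `{item}` slot that captures whatever the user said in that position. The sketch below turns hypothetical templates into regular expressions; Dialogflow’s real extraction is ML-based and driven by entity types, so this only shows the input/output shape.

```javascript
// Escape regex metacharacters in the fixed parts of a template.
function escapeRegExp(text) {
  return text.replace(/[.*+?^${}()|[\]\\]/g, '\\$&');
}

// Hypothetical template syntax: 'add {item} to my list'.
function templateToRegExp(template) {
  if (!template.includes('{item}')) {
    throw new Error(`Template has no {item} slot: ${template}`);
  }
  const [before, after] = template.split('{item}').map(escapeRegExp);
  return new RegExp(`^${before}(.+?)${after}$`, 'i');
}

// Returns { item } from the first matching template, or null.
function extractItem(expression, templates) {
  for (const template of templates) {
    const match = expression.trim().match(templateToRegExp(template));
    if (match) return { item: match[1].trim() };
  }
  return null;
}

const addItemTemplates = ['add {item} to my list', 'put {item} on my list', 'add {item}'];
console.log(extractItem('Add oat milk to my list', addItemTemplates)); // { item: 'oat milk' }
console.log(extractItem('add call mum', addItemTemplates)); // { item: 'call mum' }
```

Templates are tried in order, so the more specific ones come first.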

But how does Dialogflow know where we are in the conversation, so that only the right intent is matched? Contexts are the key to controlling the flow of the conversation and are used to define follow-up intents. When an intent is matched, any output contexts configured for that intent become active. An intent with input contexts can only be matched while those contexts are active, and while any contexts are active, Dialogflow is more likely to match intents whose input contexts correspond to them.

Input and output contexts for “Add item request” intent
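The sketch below is a simplified model of that behaviour: contexts are activated with a lifespan measured in conversational turns, intents with input contexts are only candidates while all of those contexts are active, and such intents are ranked ahead of context-free ones. It ignores details such as time-based expiry. The `read-empty-list-followup` context and its lifespan of 2 come from the fulfillment code; the intent name “read empty list - yes” is hypothetical.

```javascript
// Simplified model of Dialogflow contexts (not the real implementation).
class ContextTracker {
  constructor() {
    this.active = new Map(); // context name -> remaining turns
  }
  activate(name, lifespan) {
    this.active.set(name, lifespan);
  }
  isActive(name) {
    return (this.active.get(name) || 0) > 0;
  }
  // Call once per user turn: every active context loses one turn.
  tick() {
    for (const [name, turns] of this.active) {
      if (turns <= 1) this.active.delete(name);
      else this.active.set(name, turns - 1);
    }
  }
}

// Candidate intents for the next turn, context-gated ones first.
function candidateIntents(intents, tracker) {
  const eligible = intents.filter((intent) =>
    intent.inputContexts.every((name) => tracker.isActive(name)));
  const gated = eligible.filter((intent) => intent.inputContexts.length > 0);
  const free = eligible.filter((intent) => intent.inputContexts.length === 0);
  return [...gated, ...free].map((intent) => intent.name);
}

const intents = [
  { name: 'read list', inputContexts: [] },
  { name: 'add item request', inputContexts: [] },
  { name: 'read empty list - yes', inputContexts: ['read-empty-list-followup'] },
];

const tracker = new ContextTracker();
console.log(candidateIntents(intents, tracker));
// → [ 'read list', 'add item request' ]: the follow-up isn't reachable yet

tracker.activate('read-empty-list-followup', 2); // as the fulfillment does
console.log(candidateIntents(intents, tracker));
// → [ 'read empty list - yes', 'read list', 'add item request' ]

tracker.tick();
tracker.tick();
console.log(candidateIntents(intents, tracker));
// → [ 'read list', 'add item request' ]: the context has expired
```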

Fulfillment

We’ve discussed so far how intents represent the input of the conversational action. But what about the output? In Dialogflow, intents have a built-in response handler that can return static responses after the intent is matched. Different variations of this response can be specified, and one of them will be selected randomly.

Responses to “Add item request” intent

Most of the time, though, we need dynamic responses. The “Read list” intent is a good example: we need some code to access the database and read our list. For this we enable fulfillment for the intent, which tells Dialogflow to send an HTTP request to a webhook and use its reply as the response.
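To make the webhook contract concrete, here is a minimal sketch that skips the Actions on Google client library and works directly with the Dialogflow (v2 API) webhook JSON: it reads `queryResult.intent.displayName` and `queryResult.parameters` from the request body and answers with `fulfillmentText`. The handler table and its reply are hypothetical, and in practice `handleWebhook` would sit behind an HTTPS endpoint such as a Cloud Function.

```javascript
// Hypothetical handlers keyed by intent display name. Each receives the
// extracted parameters and returns the text the Assistant should say.
const handlers = {
  'add item request': (params) => `OK, I've added ${params.item} to your list.`,
};

// Takes a parsed WebhookRequest body and returns a WebhookResponse body.
// Only the fields used here are modelled.
function handleWebhook(body) {
  const queryResult = body.queryResult || {};
  const intentName = queryResult.intent ? queryResult.intent.displayName : undefined;
  const handler = handlers[intentName];
  if (!handler) {
    return { fulfillmentText: "Sorry, I can't help with that yet." };
  }
  return { fulfillmentText: handler(queryResult.parameters || {}) };
}

const request = {
  queryResult: {
    queryText: 'add oat milk to my list',
    parameters: { item: 'oat milk' },
    intent: { displayName: 'add item request' },
  },
};
console.log(handleWebhook(request).fulfillmentText);
// → OK, I've added oat milk to your list.
```

The client library used below does this routing (and much more) for us, which is why VOXE’s handlers are registered with `app.intent(...)` instead.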

In order to build the fulfillment for VOXE we’ve used the Node.js® client library for Actions on Google. We have deployed our code to a Cloud Function using Firebase.

This snippet shows the implementation for the “Read list” intent handler:

```javascript
'use strict';

// Import the Dialogflow module and response creation dependencies
// from the Actions on Google client library.
// (BasicCard, List and SignIn are used by the other intent handlers.)
const {
  dialogflow,
  BasicCard,
  List,
  SignIn,
  Suggestions,
} = require('actions-on-google');

// Import the firebase-functions package for deployment.
const functions = require('firebase-functions');

// Instantiate the Dialogflow client.
const app = dialogflow({
  debug: true,
  clientId: 'YOUR_CLIENT_ID_HERE',
});

// Init Firebase. Inside Cloud Functions for Firebase, initializeApp()
// picks up the project configuration automatically.
const admin = require('firebase-admin');
admin.initializeApp();

const db = admin.firestore();
const auth = admin.auth();
const todosCollection = db.collection('todos');

// Middleware to store the Firebase uid of the signed-in user.
app.middleware(async (conv) => {
  const { email } = conv.user;
  if (!email) return; // not signed in yet: nothing to look up
  const user = await auth.getUserByEmail(email);
  conv.user.storage.uid = user.uid;
});

// Handle the Read List intent
app.intent('read list', async (conv) => {
  let snapshot;
  try {
    snapshot = await todosCollection
      .where('userId', '==', conv.user.storage.uid)
      .get();
  } catch (err) {
    // Stop here: without a snapshot there is nothing to read out.
    console.error('Error reading Firestore', err);
    conv.close('Sorry. It seems we are having some technical difficulties.');
    return;
  }

  if (!snapshot.empty) {
    // SSML break times need a unit, e.g. "1s" or "500ms".
    let ssml = '<speak>This is your to-do list: <break time="1s" />';
    snapshot.forEach((doc) => {
      ssml += `${doc.data().content}<break time="500ms" />, `;
    });
    ssml += '<break time="500ms" />Would you like me to do anything else?</speak>';
    conv.ask(ssml);
    conv.ask(new Suggestions('Yes', 'No'));
    conv.contexts.set('restart-input', 2);
  } else {
    conv.ask(`${conv.user.profile.payload.given_name}, your list is empty. Do you want to add a new item?`);
    conv.ask(new Suggestions('Yes', 'No'));
    conv.contexts.set('read-empty-list-followup', 2);
  }
});

// The rest of your intent handlers here
// ...

// Set the DialogflowApp object to handle the HTTPS POST request.
exports.dialogflowFirebaseFulfillment = functions.https.onRequest(app);
```

The full version of the fulfillment can be found in the VOXE repository on GitHub.
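One refinement worth considering (our suggestion, not part of VOXE): to-do items are free text, so characters such as `&` or `<` would produce invalid SSML if concatenated as-is. A small pure helper like the hypothetical `buildListSsml` below escapes each item and keeps the string-building testable outside the Cloud Function.

```javascript
// Escape the five XML special characters so user text can't break the SSML.
function escapeSsml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&apos;');
}

// Builds a response similar to the "Read list" handler's, with a short
// pause after each (escaped) item.
function buildListSsml(items) {
  const spokenItems = items
    .map((item) => `${escapeSsml(item)}<break time="500ms"/>`)
    .join(', ');
  return '<speak>This is your to-do list: <break time="1s"/>' +
    `${spokenItems}. <break time="500ms"/>` +
    'Would you like me to do anything else?</speak>';
}

console.log(buildListSsml(['Milk & eggs', 'Call <Mum>']));
```

Inside the handler, this could be called as `conv.ask(buildListSsml(snapshot.docs.map((doc) => doc.data().content)))`.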

Multimodal interaction

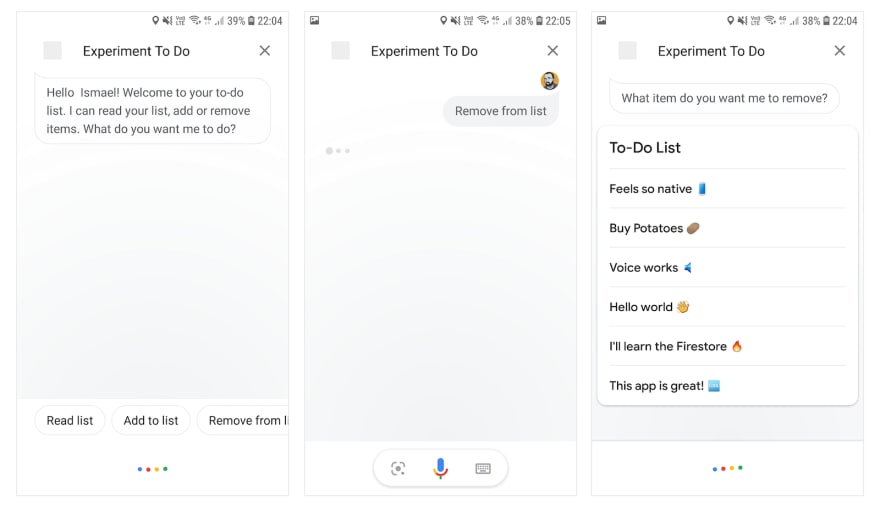

The Google Assistant is available on many devices, such as smartphones, smart speakers and smart displays. This means we’re not limited to voice and text for input and output: on devices with a screen we can also use visuals. This is known as multimodal interaction, and it takes full advantage of each device’s capabilities. Visual responses, for instance, make it easy for users to quickly scan lists or make selections.

A good example of multimodal interaction happens when we need to specify an item to be removed from the list. One option is using voice (or text) to indicate the item. However, if we’re using a touchscreen device, it feels more natural to select it from the list directly.

VOXE: Multimodal interaction to remove list item
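On screen devices, the Actions on Google Node.js library can render the items with its `List` response, whose `items` option is an object keyed by a selection key; when the user taps an item, that key is passed to the intent that handles the `actions_intent_OPTION` event. The helpers below sketch only the data plumbing (no library calls), assuming the Firestore document ID is used as the selection key; `toListItems` and `findSelectedTodo` are hypothetical names.

```javascript
// Turn to-do documents ({ id, content }) into a List `items` object:
// selection key -> { title, synonyms }.
// Using the Firestore document ID as the key is an assumption.
function toListItems(todos) {
  const items = {};
  for (const todo of todos) {
    items[todo.id] = {
      title: todo.content,
      synonyms: [todo.content.toLowerCase()],
    };
  }
  return items;
}

// Map the selection key received by the option handler back to the to-do
// to remove, or null if it no longer exists.
function findSelectedTodo(option, todos) {
  return todos.find((todo) => todo.id === option) || null;
}

const todos = [
  { id: 'a1', content: 'Buy milk' },
  { id: 'b2', content: 'Walk the dog' },
];
console.log(toListItems(todos));
console.log(findSelectedTodo('b2', todos)); // { id: 'b2', content: 'Walk the dog' }
```

In the fulfillment this could feed `conv.ask(new List({ title: 'Which item should I remove?', items: toListItems(todos) }))`, and the option handler, which receives the selected key as its third argument, would then call `findSelectedTodo` before deleting the document.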

In this two-part article we discussed how to build a cross-platform experience which includes voice assistants. The first part focused on exploring the technologies available and choosing a tech stack. In this second part we explained how we built a simple to-do list prototype app using NativeScript, Firebase and Actions on Google.
