Whether you're a novice just starting out or a highly skilled AWS ninja, you need to keep an eye on those pesky AWS bills. Billing is an important and sometimes confusing part of AWS, and if left unchecked it can cause a lot of damage.

AWS offers a one-year Free Tier, and there are lots of great tutorials out there that let new developers get started in no time. However, before you start, it is strongly recommended that you set up a billing alarm to avoid unexpected costs.

This article assumes that you have already created an AWS account.

What is a Billing Alarm?

Good question! A billing alarm is a spending limit that you set; when your estimated charges exceed that limit, AWS notifies you (for example, by email).

Let's get our hands dirty! 👩‍💻 👨‍💻

Assuming you are logged in to the console, click your username in the top-right corner and select Billing Dashboard. Alternatively, you can search for "billing" in the search bar on the main page.

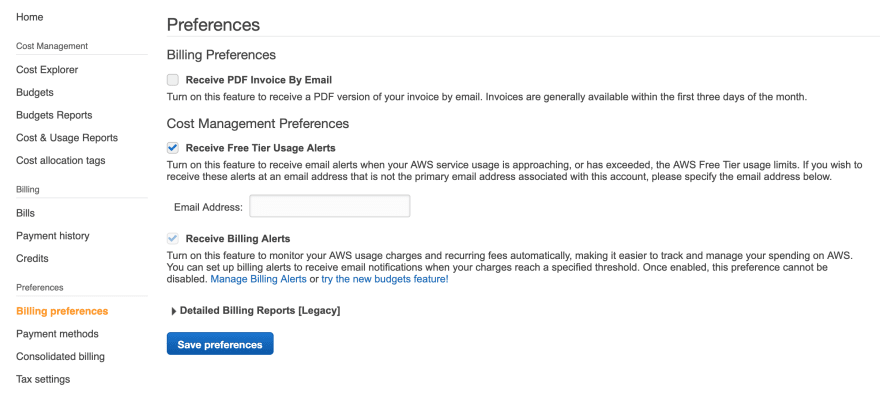

In the left navigation pane, under the Preferences section, click Billing preferences.

- Check Receive Billing Alerts and press Save Preferences.

Note: AWS recently updated its user interface (UI), and many existing tutorials online are still based on the older UI.

Next, navigate to CloudWatch: open the Services drop-down in the top bar and search for CloudWatch. It is listed under Management & Governance.

In the left navigation pane, click Billing and then the Create Alarm button.

💡 CloudWatch stores billing metric data only in the US East (N. Virginia) region, so you will have to create all billing alarms in that region.
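If you'd rather script this than click through the console, the region detail matters there too. Below is a minimal sketch assuming Python with boto3 (the article itself only uses the console): it lists which currencies have an account-wide `EstimatedCharges` metric in the `AWS/Billing` namespace. The helper name `billing_currencies` is made up for illustration, and the client is passed in so the function can be tried with a stub.

```python
# Billing metric data lives only in US East (N. Virginia), so the CloudWatch
# client must point at us-east-1 regardless of where your resources run.
BILLING_REGION = "us-east-1"
BILLING_NAMESPACE = "AWS/Billing"
BILLING_METRIC = "EstimatedCharges"


def billing_currencies(cloudwatch):
    """Return the currencies for which an account-wide EstimatedCharges metric exists.

    `cloudwatch` is assumed to be a boto3 CloudWatch client created with
    boto3.client("cloudwatch", region_name=BILLING_REGION).
    """
    currencies = set()
    paginator = cloudwatch.get_paginator("list_metrics")
    for page in paginator.paginate(Namespace=BILLING_NAMESPACE, MetricName=BILLING_METRIC):
        for metric in page["Metrics"]:
            dims = {d["Name"]: d["Value"] for d in metric["Dimensions"]}
            # The account-wide total carries only the Currency dimension;
            # per-service metrics also carry a ServiceName dimension.
            if set(dims) == {"Currency"}:
                currencies.add(dims["Currency"])
    return sorted(currencies)
```

For example, `billing_currencies(boto3.client("cloudwatch", region_name="us-east-1"))` should return something like `["USD"]` once Receive Billing Alerts is enabled and AWS has published the first data points, which can take a while.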

The Create Alarm button will take you to a setup wizard. There are four steps in the wizard:

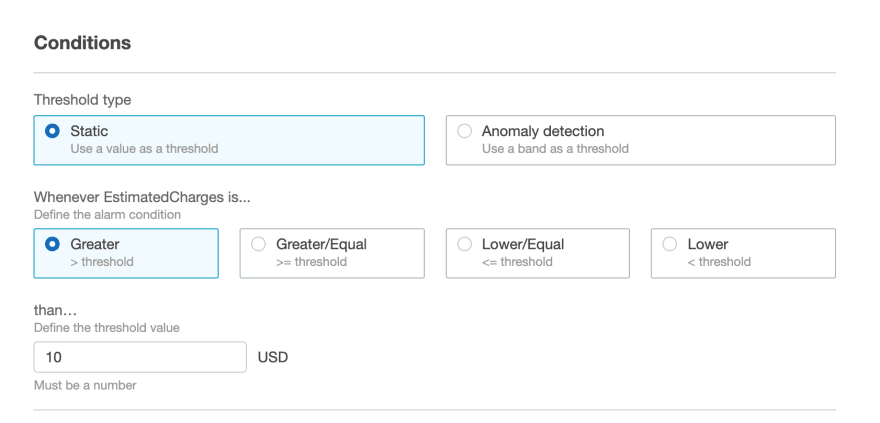

- Specify metrics and conditions

- Configure actions

- Add description

- Preview and create

In the first step, you can review the metric name and currency and set the conditions that trigger the alarm, such as the threshold amount.

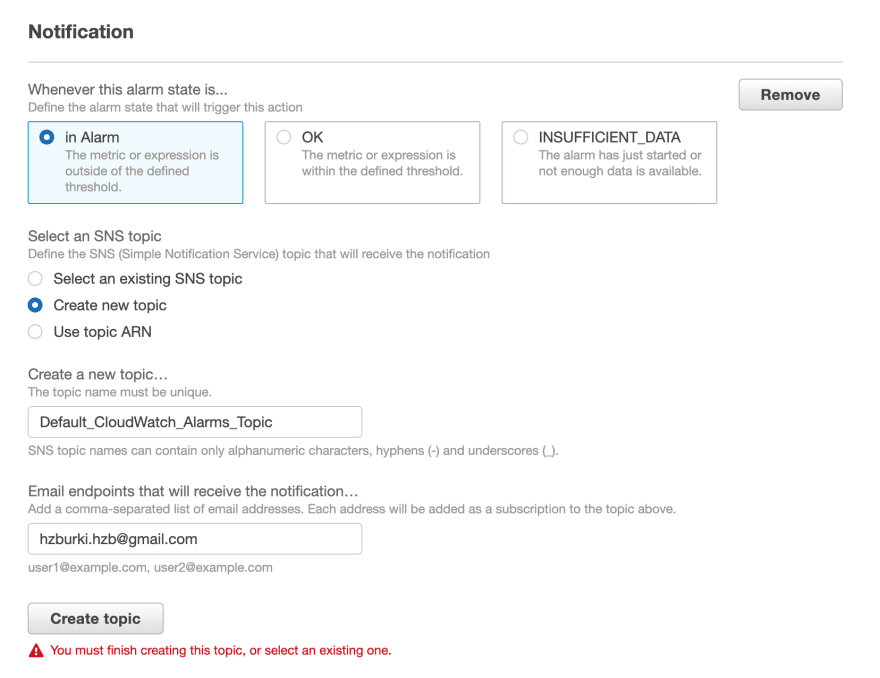

In the second step, you configure which alarm state triggers a notification and who receives it. To define the recipients, you need to create a new Amazon SNS (Simple Notification Service) topic or choose an existing one.

Complete the following steps:

- Select Create new topic under the "Select an SNS topic" subheading.

- You can change the topic name if you want.

- Add your email address. You can add multiple email addresses separated by commas (,).

- Press Create topic.
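The same topic-and-subscription setup can be sketched in Python with boto3, assuming you create the SNS client in us-east-1 so the topic sits in the same region as the billing alarm. `create_email_topic` is a hypothetical helper; it splits a comma-separated address list the way the console form accepts one.

```python
def create_email_topic(sns, topic_name, emails):
    """Create an SNS topic and subscribe each email address to it.

    `sns` is assumed to be boto3.client("sns", region_name="us-east-1").
    `emails` may be a comma-separated string (as in the console form) or a list.
    Returns the topic ARN. CreateTopic is idempotent for an existing name,
    so rerunning this returns the same topic's ARN.
    """
    if isinstance(emails, str):
        emails = emails.split(",")
    addresses = [e.strip() for e in emails if e.strip()]
    if not addresses:
        raise ValueError("at least one email address is required")

    topic_arn = sns.create_topic(Name=topic_name)["TopicArn"]
    for address in addresses:
        # Each address gets a confirmation email; nothing is delivered until it is confirmed.
        sns.subscribe(TopicArn=topic_arn, Protocol="email", Endpoint=address)
    return topic_arn
```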

In the third step, add a unique name and an optional description for the alarm. This helps you recognize the alarm among others you may create in the future.

The last step lets you preview all the information you have added in the setup wizard and create the alarm.
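For reference, the four wizard steps roughly collapse into a single CloudWatch `PutMetricAlarm` call. This is a minimal sketch with boto3, not the exact configuration the console produces: the 6-hour period, the Maximum statistic, and the USD currency are assumptions, and `create_billing_alarm` is a hypothetical helper name.

```python
def create_billing_alarm(cloudwatch, alarm_name, threshold_usd, topic_arn, description=""):
    """Create (or update) a billing alarm roughly equivalent to the console wizard.

    `cloudwatch` is assumed to be boto3.client("cloudwatch", region_name="us-east-1").
    Returns the parameters that were sent.
    """
    if threshold_usd <= 0:
        raise ValueError("threshold_usd must be positive")
    params = {
        # Step 3: name and optional description.
        "AlarmName": alarm_name,
        "AlarmDescription": description,
        # Step 1: metric, currency, and condition.
        "Namespace": "AWS/Billing",
        "MetricName": "EstimatedCharges",
        "Dimensions": [{"Name": "Currency", "Value": "USD"}],
        "Statistic": "Maximum",
        "Period": 21600,  # 6 hours; estimated charges are only updated a few times a day
        "EvaluationPeriods": 1,
        "Threshold": float(threshold_usd),
        "ComparisonOperator": "GreaterThanThreshold",
        # Step 2: notify this SNS topic when the alarm enters the ALARM state.
        "AlarmActions": [topic_arn],
    }
    # Step 4: create the alarm (an existing alarm with the same name is overwritten).
    cloudwatch.put_metric_alarm(**params)
    return params
```

Called as `create_billing_alarm(boto3.client("cloudwatch", region_name="us-east-1"), "billing-over-10-usd", 10, topic_arn)`, it would alert once the month's estimated charges pass $10. Because `PutMetricAlarm` overwrites an alarm with the same name, rerunning the script updates the alarm rather than creating a duplicate.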

Note: Once the alarm is created, check your email and confirm the subscription. SNS won't deliver alarm notifications to an address until its subscription is confirmed.
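To double-check from a script which addresses still need to click the confirmation link, a small boto3 sketch like this can help. It relies on SNS reporting unconfirmed subscriptions with the placeholder `SubscriptionArn` value `PendingConfirmation`; the helper name is hypothetical.

```python
def pending_email_subscriptions(sns, topic_arn):
    """Return the endpoints on `topic_arn` that have not confirmed their subscription.

    `sns` is assumed to be a boto3 SNS client in the topic's region.
    """
    pending = []
    paginator = sns.get_paginator("list_subscriptions_by_topic")
    for page in paginator.paginate(TopicArn=topic_arn):
        for sub in page["Subscriptions"]:
            # Unconfirmed subscriptions have no real ARN yet, only this placeholder.
            if sub["SubscriptionArn"] == "PendingConfirmation":
                pending.append(sub["Endpoint"])
    return pending
```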

Comments

If you also want to monitor your spending on a monthly, quarterly, or custom interval and receive reports, consider turning on AWS Budgets as well.

The first two budgets are free.
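A budget like the one described can also be created with boto3's Budgets client. This sketch assumes a monthly cost budget in USD that emails one address when actual spend passes 80% of the limit; the helper name, the 80% default, and the single-subscriber setup are illustrative choices, not recommendations from the thread.

```python
def create_monthly_cost_budget(budgets, account_id, name, limit_usd, email, percent=80.0):
    """Create a monthly cost budget that emails `email` when actual spend passes `percent` of the limit.

    `budgets` is assumed to be boto3.client("budgets").
    """
    budgets.create_budget(
        AccountId=account_id,
        Budget={
            "BudgetName": name,
            # The API expects the amount as a string.
            "BudgetLimit": {"Amount": f"{limit_usd:.2f}", "Unit": "USD"},
            "TimeUnit": "MONTHLY",
            "BudgetType": "COST",
        },
        NotificationsWithSubscribers=[
            {
                "Notification": {
                    "NotificationType": "ACTUAL",  # actual spend, not forecasted
                    "ComparisonOperator": "GREATER_THAN",
                    "Threshold": float(percent),
                    "ThresholdType": "PERCENTAGE",
                },
                "Subscribers": [{"SubscriptionType": "EMAIL", "Address": email}],
            }
        ],
    )
```

The account ID can come from `boto3.client("sts").get_caller_identity()["Account"]`.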

Alarms aren't free, but they are inexpensive, starting at $0.10 per alarm per month. I noticed alarm costs can pile up quickly with DynamoDB and its auto-scaling feature, since it creates at least three alarms for reads, three more for writes, and more for each index you create. My alarms for only a few DynamoDB tables were running $10-30 USD a month. In this case, you can delete the generated alarms you don't need to reduce these costs.
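To see how auto-scaling alarms add up, here is a back-of-the-envelope estimator. It assumes reads and writes are scaled separately for each table and each global secondary index, takes the number of alarms per scaled dimension as an input (the comment above says at least three), and uses $0.10 per alarm per month as an assumed price; check the current CloudWatch pricing for your region.

```python
def monthly_autoscaling_alarm_cost(tables, gsis_per_table, alarms_per_dimension, price_per_alarm=0.10):
    """Rough (alarm_count, monthly_usd) for the alarms behind DynamoDB auto scaling.

    Assumptions: every table and every GSI scales reads and writes separately,
    each scaled dimension gets `alarms_per_dimension` alarms, and each alarm
    costs `price_per_alarm` USD per month.
    """
    scaled_resources = tables * (1 + gsis_per_table)  # base tables plus their indexes
    dimensions = scaled_resources * 2                  # read capacity and write capacity
    alarms = dimensions * alarms_per_dimension
    return alarms, round(alarms * price_per_alarm, 2)
```

With made-up inputs of five tables, three indexes each, and three alarms per dimension, `monthly_autoscaling_alarm_cost(5, 3, 3)` gives `(120, 12.0)`: 120 alarms at roughly $12 a month.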

Cool! Thanks for the info. AWS Budgets looks promising; I'll explore it further.

Also, I'd heard that DynamoDB can get really expensive when it comes to scaling. This is a really cool example you provided.

Appreciate it! :D

To reduce DynamoDB write-capacity costs, a common strategy is to put SQS in front of DynamoDB to buffer the writes.

To reduce DynamoDB read-capacity costs, you can put ElastiCache in front of it.

There is also DAX (DynamoDB Accelerator), a caching solution built for DynamoDB, but I haven't crunched the numbers to see whether it's more cost-effective than ElastiCache, so I may be using an outdated caching approach.

I don't have any knowledge of DynamoDB, but putting SQS in front of DynamoDB will only limit the number of concurrent writes. The total number of requests will still remain the same. How does that reduce costs?

Also, won't this slow down writes?

It does slow down writes, and that's intended: the higher the write capacity, the more you pay.

It can be cheaper than paying for write capacity to scale up during bursts of traffic.

It really depends on how long those bursts last, so there is a sweet spot.
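The question of how buffering helps when the total number of requests stays the same can be made concrete with a toy simulation. A plain FIFO queue stands in for SQS, and the consumer is allowed at most a fixed number of writes per second, which is roughly the capacity you would need to provision. This is an illustrative model only, not a DynamoDB or SQS API.

```python
from collections import deque


def simulate_buffered_writes(arrivals_per_second, max_writes_per_second):
    """Drain a FIFO buffer (standing in for SQS) at a capped rate.

    arrivals_per_second: how many writes arrive in each second.
    max_writes_per_second: the most writes the consumer performs per second.
    Returns (writes_per_second, max_delay_seconds).
    """
    if max_writes_per_second <= 0:
        raise ValueError("max_writes_per_second must be positive")
    backlog = deque()  # each entry is the second the write arrived
    writes = []
    max_delay = 0
    second = 0
    while second < len(arrivals_per_second) or backlog:
        if second < len(arrivals_per_second):
            backlog.extend([second] * arrivals_per_second[second])
        batch = min(len(backlog), max_writes_per_second)
        for _ in range(batch):
            arrived = backlog.popleft()
            max_delay = max(max_delay, second - arrived)  # how long this write waited
        writes.append(batch)
        second += 1
    return writes, max_delay
```

For two bursts of 10 writes five seconds apart, `simulate_buffered_writes([10, 0, 0, 0, 0, 10, 0, 0, 0, 0], 4)` returns `([4, 4, 2, 0, 0, 4, 4, 2, 0, 0], 2)`: all 20 writes still happen, but the peak write rate drops from 10 to 4 per second, at the cost of some writes waiting up to 2 seconds. Where that trade-off pays off depends on how long the bursts last.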

This actually appears as a question on the AWS Solutions Architect Professional exam.

The catch is that you can only go SQS -> DynamoDB if your system is already async. Otherwise you have to do a bit of redesigning, correct?

What do you mean by "system is already async"?

Sorry, I guess this is a bit off topic from your article. The only reason @andrew Brown suggested putting SQS in front of DynamoDB is that they are most likely running their tables in Provisioned Capacity billing mode with auto scaling. Most AWS services auto scale the same way: by creating multiple CloudWatch alarms on a metric for that service. If the consumed Write Capacity Units (WCU, basically the rate at which data is being written to DynamoDB) exceed a value, the alarm triggers and performs an action. In this case, it increases the provisioned Write Capacity Units so that writes do not get throttled.

So by controlling the rate at which data is written to DynamoDB, they limit how often the CloudWatch alarms trigger auto-scaling actions. This reduces their bill; as he explains, these alarms once added up to about $30. That's a lot: you can run an EC2 t3.medium instance for an entire month for about that price.

He can only do this because his writes go to the queue and return immediately, without waiting until the data is written to DynamoDB. Their systems are async and most likely subject to eventual consistency. If you don't design your systems to be async from the start, it requires some redesign; that is all I meant.
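On the producer side, "writes to the queue, then returns immediately" might look like this minimal boto3 sketch. The queue URL and the item shape are placeholders, and `enqueue_write` is a hypothetical name; the caller gets back an SQS message ID, not a confirmation that the item is in DynamoDB.

```python
import json


def enqueue_write(sqs, queue_url, item):
    """Send `item` to SQS instead of writing it to DynamoDB directly.

    `sqs` is assumed to be a boto3 SQS client. Returns immediately with the
    message ID; the item only shows up in the table after a consumer
    processes the message (eventual consistency).
    """
    body = json.dumps(item, sort_keys=True)
    response = sqs.send_message(QueueUrl=queue_url, MessageBody=body)
    return response["MessageId"]
```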

On the reading side, I'm sure you get why a cache helps, but again you need to design that logic into your application. With something like ElastiCache (Redis), you can implement a read-through cache (and most likely write-through); this logic sits at your application level. Alternatively, you can use DAX, an AWS managed cache built specifically for DynamoDB that intercepts the API calls to DynamoDB and acts as both a read-through and a write-through cache. DynamoDB is a whole can of worms that probably shouldn't be opened in a comment about billing alarms; DM me if you want to know more.
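The read-through idea can be sketched without any AWS dependency. In this illustration a Python dict with a time-to-live stands in for Redis on ElastiCache, and `loader` stands in for a DynamoDB read; the class and its names are hypothetical, and a real setup would also have to handle invalidation across multiple application instances.

```python
import time


class ReadThroughCache:
    """Minimal in-process read-through cache with a TTL.

    `loader` is any function key -> value (a stand-in for a DynamoDB GetItem);
    `clock` is injectable so expiry can be tested.
    """

    def __init__(self, loader, ttl_seconds=60.0, clock=time.monotonic):
        self._loader = loader
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (value, expires_at)

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]              # hit: no read against the table
        value = self._loader(key)        # miss: read through to the table
        self._entries[key] = (value, now + self._ttl)
        return value

    def invalidate(self, key):
        """Drop a key (e.g. after the item is updated) so the next read is fresh."""
        self._entries.pop(key, None)
```

Only a cache miss reaches the table, so repeated reads of hot keys within the TTL stop consuming read capacity.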

I didn't suggest ElastiCache because it has to be in the same VPC, and since ElastiCache is a pain, it's likely a much more expensive solution than DAX (again, I have yet to compare the pricing). I also wasn't certain how ElastiCache would work with DynamoDB Global Tables, which is a common upgrade path for DynamoDB, so I did not want to suggest an expensive and technically dead-end solution.

Not arguing, just more food for thought, and taking this conversation well off course.

Oh I see, sorry about that. From what I remember, I did a quick comparison when DAX first came out, and it was more expensive for our specific use case at the time. But that was a while ago.

Hehe, but if you hadn't taken it off course, I wouldn't have learned something new. Thanks!

This has been really informative. I have a production-level app deployed on AWS (API, DB, frontend). I've learned every service I've needed from Google, blogs, and documentation. Unfortunately, I haven't yet had the need to tinker with DynamoDB and a number of other services.

I have started a learning path for the AWS Solutions Architect certification and will be writing more blogs as a way of keeping notes and sharing what I learn.

Hi Andrew, can you recommend a video or article with a step-by-step implementation of that process? I mean putting the record into SQS, processing it with Lambda, and saving it to DynamoDB while reducing the database cost. Thank you in advance!
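Not a video, but here is a minimal sketch of the consumer half of that flow (the producer side is simply an SQS `send_message` call), assuming Python on Lambda with an SQS event source mapping and boto3's DynamoDB resource. `TABLE_NAME` is a hypothetical environment variable, and message bodies are assumed to be JSON objects that already contain the table's key attributes.

```python
import json
import os
from decimal import Decimal


def write_sqs_records(records, table):
    """Write the JSON bodies of SQS records to a DynamoDB table in batches.

    `table` is assumed to be a boto3 DynamoDB Table resource; its batch_writer()
    groups puts into BatchWriteItem calls and resends unprocessed items.
    """
    count = 0
    with table.batch_writer() as batch:
        for record in records:
            # boto3's DynamoDB resource rejects Python floats, so parse them as Decimal.
            item = json.loads(record["body"], parse_float=Decimal)
            batch.put_item(Item=item)
            count += 1
    return count


def handler(event, context):
    """Lambda entry point for an SQS event source mapping."""
    import boto3  # imported here so write_sqs_records can be tried without AWS

    table = boto3.resource("dynamodb").Table(os.environ["TABLE_NAME"])
    return {"written": write_sqs_records(event["Records"], table)}
```

If the function raises, the whole batch becomes visible in the queue again and is retried, so writes should be safe to repeat; a plain `put_item` on the same key simply overwrites. To actually cap the write rate, and with it the capacity you pay for, you can limit how many copies of the function run at once, for example with reserved concurrency or the event source mapping's maximum concurrency setting.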