You know what I'm talking about. Those infuriating websites that present animated grey boxes while they fetch their content asynchronously. For seconds. No one has seconds. Give me the content now!

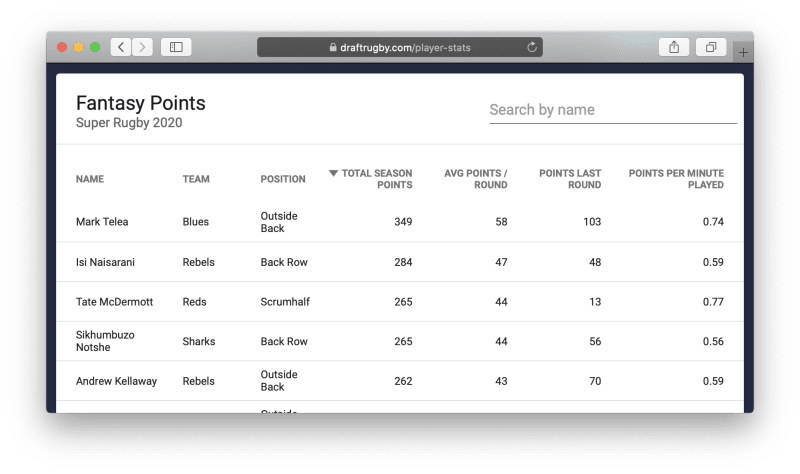

Draft Rugby is a fantasy Rugby app. It's in early development. Our main feature is the Player Stats page. This page is effectively a glorified table: It allows rapid search and sorting of the ~800 players in the Super Rugby season.

Before today, it loaded pretty quickly. Here's the process:

- A browser makes a `GET` request to `/player-stats`

- Draft Rugby replies with a bunch of HTML, CSS, and JS

- The browser runs the JS, which includes an immediate `GET` request to `/api/fantasy/player/list` via the Draft Sport JS library

- Draft Rugby replies with a bunch of JSON

- The browser eats the JSON and fills the player table

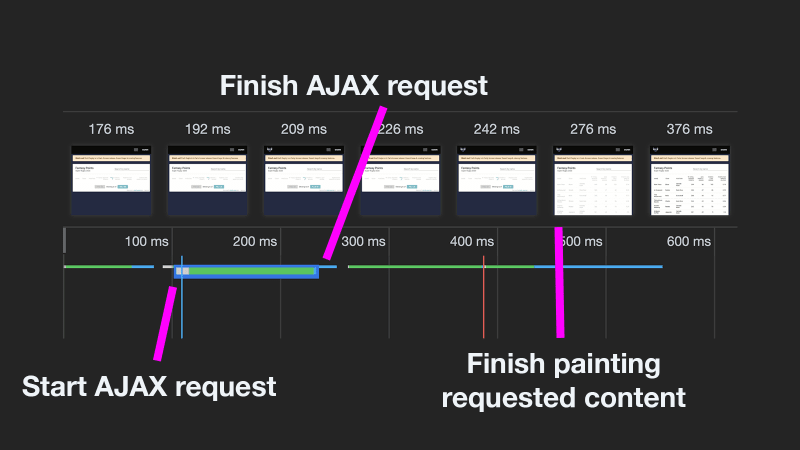

Step 3 is an asynchronous JavaScript request leading to document manipulation, commonly known as "AJAX". That's nice, because the user can now sort and search the table. Each time they do, more asynchronous requests are made to get them the data they want and refill the table.

Except it's not always nice, because of the speed of light. In an ideal case, with a client device, say, 30 kilometres from the datacenter, there might be 50 milliseconds between the start of step 1 and the start of step 3. In Draft Sport's case it was taking a whopping ~270ms to finish the whole sequence and begin animating the table.

No one has time for 270ms! A user will notice this delay, without question. And it gets worse: your user's visual processing system needs to parse your fancy loading animation while the async request is happening. Then it needs to dump that information and re-parse the actual page content.

Don't do this! It sucks! Let's shift the initial table load back onto the server. Now the sequence looks like this:

- A browser makes a `GET` request to `/player-stats`

- Draft Rugby replies with a bunch of HTML, CSS, and JS, including the content of the player stats table retrieved via Draft Sport Py

- The browser paints everything

From 5 steps to 3. Now:

- No double round-trip to the datacenter to fetch the initial data

- No loading animations for the user to parse
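As a rough illustration of the server-side sequence, here is a minimal WSGI sketch using only the standard library. It is not Draft Rugby's actual code: `fetch_players` is a hypothetical stand-in for the call made through Draft Sport Py, and the player data and markup are invented for the example.

```python
from html import escape


def fetch_players():
    """Hypothetical stand-in for the server-side Draft Sport API call."""
    return [
        {"name": "Example Player A", "points": 42},
        {"name": "Example Player B", "points": 37},
    ]


def render_rows(players):
    """Build the table rows on the server so the browser can paint them at once."""
    return "".join(
        f"<tr><td>{escape(p['name'])}</td><td>{p['points']}</td></tr>"
        for p in players
    )


def application(environ, start_response):
    """Minimal WSGI app: fetch the data first, then send one complete page."""
    if environ.get("PATH_INFO") != "/player-stats":
        start_response("404 Not Found", [("Content-Type", "text/plain")])
        return [b"Not found"]
    # This call delays the first byte; that is the tradeoff being accepted.
    rows = render_rows(fetch_players())
    body = f"<table><tbody>{rows}</tbody></table>".encode("utf-8")
    start_response("200 OK", [
        ("Content-Type", "text/html; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ])
    return [body]
```

The browser receives the filled table in the first response, so there is nothing left to fetch before painting.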

What's the tradeoff? It depends on the disposition of your API. Draft Sport API is not the fastest thing in the world, yet: it takes about 50ms to retrieve the player table. That request now blocks the time-to-first-byte, slowing the page delivery down by 50ms.

The synchronous result is still way better. The time until content is fully presented drops from about 450ms to about 200ms. As Draft Sport API matures and gets faster, that time will drop further, whereas the speed of light isn't going anywhere. And in the real world, your user is not going to be 20ms from your datacenter. The further away they are, the faster the synchronous approach becomes by comparison. Your framework can't outrun the speed of light!
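To see why distance favours the synchronous approach, here is a toy latency model. It is a sketch under stated assumptions, not a measurement: the parameter names and the example values (`page_ms`, `js_start_ms`, and the round-trip times) are made up, and it assumes the API call costs the same whether it is triggered by the browser or by the server.

```python
def async_total_ms(rtt_ms, page_ms, api_ms, js_start_ms):
    """Page request, then a second round trip for the data once the JS runs."""
    first_trip = rtt_ms + page_ms
    second_trip = rtt_ms + api_ms
    return first_trip + js_start_ms + second_trip


def sync_total_ms(rtt_ms, page_ms, api_ms):
    """One round trip; the server waits on the API before replying."""
    return rtt_ms + page_ms + api_ms


# Illustrative values only: the saving grows with the round-trip time.
for rtt in (20, 100, 250):
    a = async_total_ms(rtt, page_ms=30, api_ms=50, js_start_ms=40)
    s = sync_total_ms(rtt, page_ms=30, api_ms=50)
    print(f"rtt={rtt}ms async={a}ms sync={s}ms saved={a - s}ms")
```

In this model the saving is always one round trip plus the time the browser needs to start the JS, so it does not shrink as the API gets faster and it grows as the user gets further away.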

Conclusion? Don't be afraid to hold up returning your first byte with a server-side API request. If you know what data the client wants, your overall time to displayed content will probably be significantly lower than if you return it asynchronously via an AJAX request.

Top comments (5)

The main concern I'd have with this approach is that you're tightly coupling your response time to an external service. If their response times spike or they go down for any reason, you can potentially leave yourself open to DoS (especially if you're using WSGI and not ASGI and asyncio). Removing that coupling and loading asynchronously leaves your service in a safer place overall.

If load times are an issue, something that you could maybe do (I'm not a UI guy so it might be a bad idea) is to incur the load time up front, but store the latest data in local storage. Then, the next time the page loads, load it from local storage and then update it once the async operation loads. That way, you only get that visible hit the first time around.

Maybe you misunderstood what's being called, and from where? Draft Sport API is part of Draft Sport, it's not an external service. If Draft Sport API doesn't respond to a request from inside the Draft Sport network boundary, then it's sure as hell not going to respond to a browser, either. Either way your system is FUBAR, you have critical failures, and your user is hung. So why choose a failure mode that results in a slow page load when the failure isn't present?

DOS? Whether a request comes from within your own network or a browser does not change your DOS attack surface. Either you have a public API or you don't. If you don't then you have a totally different architecture and this post is irrelevant.

As for local storage - I don't see the relevance. You are going to have to come up with a convoluted way to invalidate your local cache, for... A benefit I can't divine.

Absolutely could be, sure. The site was 500'ing when I tried to check it out (I realize it's in alpha :)). I took a quick gander through the Github repo and saw an external request being made through the nozomi python package, so I just naively assumed that's what was being used (no idea whatsoever what nozomi is). It's also relatively late so I may just not be grok'ing the situation entirely. My assumption was that you were calling out to an external service, so sounds like I was wrong there. So based on your reply, I'm now assuming that you have (or had) a front end service and a back end service within a single network boundary (for simplicity's sake). There aren't any call outs being made to any service outside of your own. You /used/ to call the back end service from the front end JS asynchronously and have moved from that to calling inline from the front end service's server-executed code during page render.

Running with that, the DoS surface is still changed. Let's say you're using WSGI (one input -> one output) and the back end service slows down for whatever reason. Eventually (of course, depending on the load that you're dealing with), all of your available workers can become saturated due to response times (or timeouts) in the other service. So now the user is presented with an error page, whatever the web server you're using sends back once it has too many requests queued.
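A back-of-envelope version of that saturation argument, assuming each worker handles exactly one request at a time. The function name and the numbers are hypothetical, chosen only to show the shape of the problem:

```python
def max_requests_per_second(workers, ms_per_request):
    """Upper bound on throughput when each worker serves one request at a time."""
    return workers * 1000 / ms_per_request


# Illustrative numbers: 8 workers, page render normally takes 200ms.
healthy = max_requests_per_second(workers=8, ms_per_request=200)
# The back end slows down and each render now blocks for 2 seconds.
degraded = max_requests_per_second(workers=8, ms_per_request=2000)
print(healthy, degraded)
```

Once incoming traffic exceeds the degraded figure, requests queue up behind the blocked workers.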

OTOH, if you ensure that there isn't any tight coupling between the front end and back end services, you can still at least present the user with /something/. Perhaps there's only a portion of the back end service that's running slowly and they'll still have the ability to navigate through the rest of your site, create a support ticket or get in touch with you, or whatever else you may have.

Of course, you can mitigate the potential issues of synchronous requests during page render with request timeouts and such, but you'll still deal with holistic service degradation with the same potential outcome rather than just a portion of your site.
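One way the timeout mitigation could look, sketched with the standard library. The helper name `fetch_with_deadline`, the pool size, and the deadline values are assumptions for illustration; on a timeout the caller would render the page without the table and let the browser load it asynchronously instead.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout

# Small shared pool for back end calls (size is an arbitrary example value).
_pool = ThreadPoolExecutor(max_workers=4)


def fetch_with_deadline(fetch, deadline_s):
    """Return fetch()'s result, or None if it misses the deadline."""
    future = _pool.submit(fetch)
    try:
        return future.result(timeout=deadline_s)
    except FutureTimeout:
        # The pool thread keeps running until fetch() returns, so a slow
        # back end can still exhaust this pool; the deadline only protects
        # the thread that is rendering the page.
        return None


def slow_fetch():
    """Hypothetical degraded back end call."""
    time.sleep(0.5)
    return ["late data"]


print(fetch_with_deadline(lambda: ["player data"], deadline_s=0.2))
print(fetch_with_deadline(slow_fetch, deadline_s=0.05))
```

As the comment in the code notes, this bounds the page render time but does not remove the underlying coupling.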

So yes, the DoS surface does change depending on the chosen architecture. I'm not only talking about an actual malicious DoS attack, I'm talking about you accidentally DoS'ing yourself.

As for local storage - The problem statement was those "infuriating websites that present animated grey boxes while they fetch their content asynchronously". Using the local storage approach, you could conceivably:

Next visit:

(Nothing convoluted there)

Yes the initial data will be stale, but it gets rid of those annoying gray boxes. Like I said though, I'm not really a UI guy so it might be a bad idea. I guess it all depends on what's more important: not having the annoying gray boxes or the freshness of the data without a visible refresh.

These are just my thoughts after having dealt with systems that have experienced these kinds of issues at high load. It may very well be that you never run into them. I was just trying to share my thoughts and experiences, not trying to be condescending or negative at all.

We'll have to agree to disagree, though I appreciate your interest in the post. The assumptions you are making are not applicable to the system in which these synchronous requests are being made. Though I am sure they are applicable in many systems.

To anyone else reading this comment thread and thinking "oh wow, I better make everything async!": Don't overthink it. Even if your system has the characteristics of the one Demian is describing, you might well want to take the 50% speed boost anyway. Make the risk/reward tradeoff.

Fair enough :) And no worries, it's a well written post and Python and performance are both topics that are near and dear to me.

And absolutely. Risk/reward trade offs should always be considered. One of the main issues I've had with Python at scale is WSGI. With the release of ASGI servers and asyncio, many of those specific issues can be greatly mitigated if not solved entirely. I just thought that it would be helpful to address this particular issue as I've been bitten by it in the past. Having to re-architect pieces of a system under such circumstances is not a fun thing ;)