OneTick is a time-series database that is heavily used in financial services by hedge funds, brokerages and investment banks. OneMarketData, the maker of OneTick, describes it as “the premier enterprise-wide solution for tick data capture, streaming analytics, data management and research.” OneTick captures, compresses, archives, and provides uniform access to global historical data, up to and including the latest tick.

I have used OneTick for almost 5 years and found it to be a very nicely packaged solution thanks to built-in collectors for popular feeds such as Reuters’ Elektron and Bloomberg’s MBPIPE. OneTick also comes with a GUI that makes it easy for non-developers, such as researchers and portfolio managers, to write advanced queries using pre-built event processors (EPs). You can design queries in the GUI and then run them either from the GUI itself or through one of the APIs that OneTick supports, such as Python.

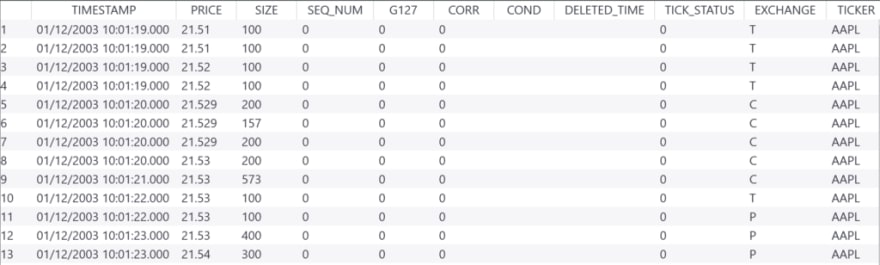

For example, here is a simple query that would select trade data for AAPL where price is greater than or equal to 21.5 (data is from 2003). In SQL, this would be select * from <database_name> where symbol="AAPL" and price >= 21.5.

OneTick comes with a DEMO database that I will be using for my examples. Here is what the query looks like in OneTick GUI:

When you run this query, you will see the result in OneTick’s GUI.

That’s useful information, but what if…

- You wanted to stream this data to your real-time event-driven applications?

- You wanted to publish the data once and have many recipients get it?

- You wanted to let downstream applications retrieve data via open APIs and message protocols?

Solace PubSub+ Event Broker makes all of that possible!

How PubSub+ Can Help OneTick Developers

Here are some of the many ways OneTick developers can leverage PubSub+:

- Consume data from a central distribution bus: Many financial companies capture market data from external vendors and distribute it to their applications internally via PubSub+. OneTick developers can now natively consume the data and store it for later use.

- Distribute data from OneTick to other applications: Instead of delivering data individually to each client or application, OneTick developers can use the pub/sub messaging pattern to publish data once and have it consumed many times.

- Migrate to the cloud: Have you been thinking about moving your applications and/or data to the cloud? With PubSub+, you can use an event mesh to give cloud-native applications access to the high-volume data stored in your on-prem OneTick instance.

- Easier integration: PubSub+ supports open APIs and protocols, which makes it a good fit for integrating applications. Your applications can now use a variety of APIs to publish data to OneTick and subscribe to data from it.

- Replay messages: Are you tired of losing critical data such as order flows when your applications crash? Replay redelivers data to applications, which lets them repair database issues caused by events such as misconfiguration, application crashes, or corruption.

- Automate notifications: It’s always a challenge to make sure every consumer is aware of data issues, whether it’s an exchange outage leading to data gaps or a delay in an ETL process. With PubSub+, OneTick developers can automate the publication of notifications related to their databases and broadcast them to interested subscribers.

Running an Instance of PubSub+ Event Broker

In the rest of this post, I am going to show you how you can take data from your OneTick database and publish it to PubSub+, and vice versa. To follow along you will need access to an instance of PubSub+. If you don’t have one, there are two free ways to set up an instance:

- Install a Docker container locally or in the cloud

- Set up a dev instance on Solace Cloud (easiest way to get started)

Please post on Solace Community if you have any issues setting up an instance.

Publishing data from OneTick to PubSub+

OneTick makes it extremely easy for you to publish data to PubSub+ by simply using a built-in event processor (EP). The EP is called WRITE_TO_SOLACE and is part of OneTick’s Output Adapters (see OneTick docs: docs/ep_guide/EP/OutputAdapters.htm) which contains other EPs such as WRITE_TEXT, WRITE_TO_RAW, and WRITE_TO_ONETICK_DB.

To publish results of our previous query to PubSub+, we would simply add WRITE_TO_SOLACE EP at the end of our query and fill in appropriate connection and other details. Here is what the EP parameters look like:

You can find details about the WRITE_TO_SOLACE EP and its parameters in OneTick docs (docs/ep_guide/EP/OMD/WRITE_TO_SOLACE.htm).

The first few fields in the EP are for your PubSub+ connection details such as HOSTNAME, VPN, USERNAME, and PASSWORD.

The EP supports both direct and guaranteed message delivery. Direct messages are suitable for high throughput/low latency use cases such as market data distribution, and guaranteed messages are appropriate for critical messages that can’t be lost, such as order flow. You can learn more about the two delivery modes here.

The EP also allows you to specify the ENDPOINT you would like to publish data to, and the ENDPOINT_TYPE where you can specify if it is a topic or a queue. Publishing to a topic supports the pub/sub messaging pattern where your application publishes once to the topic and many applications can subscribe to that topic. On the other hand, publishing to a queue is point-to-point messaging because only the consumer binding to that specific queue can consume data published to it. Needless to say, we encourage clients to use the pub/sub messaging pattern as it provides more flexibility.
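To make the difference between the two endpoint types concrete, here is a toy in-memory model in Python. It is not the Solace API and says nothing about how the broker is implemented; `ToyBroker` and its method names are hypothetical and exist only for this illustration.

```python
from collections import defaultdict, deque


class ToyBroker:
    """In-memory stand-in for a broker. NOT the Solace API (hypothetical names)."""

    def __init__(self):
        self.topic_subscribers = defaultdict(list)  # topic -> list of inboxes
        self.queues = defaultdict(deque)            # queue name -> pending messages

    def subscribe(self, topic):
        """Register a new subscriber on a topic and return its private inbox."""
        inbox = []
        self.topic_subscribers[topic].append(inbox)
        return inbox

    def publish_to_topic(self, topic, message):
        """Pub/sub: every current subscriber receives its own copy."""
        for inbox in self.topic_subscribers[topic]:
            inbox.append(message)

    def publish_to_queue(self, queue, message):
        """Point-to-point: the message waits in the queue for a single consumer."""
        self.queues[queue].append(message)

    def consume_from_queue(self, queue):
        """Remove and return the oldest message, or None if the queue is empty."""
        pending = self.queues[queue]
        return pending.popleft() if pending else None


broker = ToyBroker()
risk_app = broker.subscribe("EQ/US/AAPL")
charting_app = broker.subscribe("EQ/US/AAPL")

# One publish to the topic reaches both subscribers.
broker.publish_to_topic("EQ/US/AAPL", {"PRICE": 21.5})

# One publish to the queue can be consumed exactly once.
broker.publish_to_queue("onetick_queue", {"PRICE": 21.5})
first = broker.consume_from_queue("onetick_queue")
second = broker.consume_from_queue("onetick_queue")
```

In this model both `risk_app` and `charting_app` end up with a copy of the topic message, while the second read from the queue finds nothing left. That is the flexibility argument for pub/sub in miniature.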

For this example, I will publish to a topic of the form EQ/US/<security_name>, such as EQ/US/AAPL.
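If you generate topic names in your own tooling, a small helper keeps the hierarchy consistent. The Python sketch below is only an illustration: `build_topic` is a hypothetical function, and rejecting `/`, `*`, and `>` inside a level is my own precaution so that a symbol name cannot change the number of levels or look like a wildcard. It is not a rule taken from the OneTick EP.

```python
def build_topic(asset_class, region, security_name):
    """Join three levels into a topic such as EQ/US/AAPL (hypothetical helper)."""
    levels = [asset_class, region, security_name]
    for level in levels:
        # Precaution (assumption): keep separators and wildcard characters
        # out of individual levels.
        if not level or any(ch in level for ch in "/*>"):
            raise ValueError(f"invalid topic level: {level!r}")
    return "/".join(levels)


aapl_topic = build_topic("EQ", "US", "AAPL")
```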

Finally, you will notice that the EP allows users to specify the message format via MSG_FORMAT field. Currently, you can only pick from NAME_VALUE_PAIRS and BINARY, but OneTick will support additional message formats soon.

Configuring Subscriptions on PubSub+ Broker

Before publishing data to PubSub+, you need to determine how it will be consumed. Applications can either subscribe directly to the topic we are publishing to, or map the topic(s) to a queue and consume them from there. Using a queue introduces persistence so if your consumer disconnects abruptly, your messages won’t be lost. For our example, we will consume from a queue called onetick_queue that I have created from PubSub+’s UI:

PubSub+ allows you to map one or many topics to a queue. Your consumer then only needs to bind to that one queue, and messages published to the different topics are delivered to it, in order, through the queue.

Additionally, PubSub+ also supports rich hierarchical topics and wildcards so you can dynamically filter the data you are interested in consuming. PubSub+ supports two wildcards: * lets you abstract away one topic level, and > lets you abstract away one or more levels. So, if you wanted to subscribe to all the data for equities, you can simply subscribe to EQ/> and if you wanted to subscribe to data for AAPL from different regions/venues, you could subscribe to EQ/*/AAPL.
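To get a feel for how the two wildcards behave, here is a minimal Python sketch of the matching rules as described above. It is my own illustration, not broker code: `topic_matches` is a hypothetical name, and it covers only the two rules from this paragraph (`*` for exactly one level, `>` at the end for one or more levels).

```python
def topic_matches(subscription, topic):
    """Return True if `topic` matches `subscription` under the two rules above."""
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")

    for i, sub_level in enumerate(sub_levels):
        is_last = i == len(sub_levels) - 1
        if sub_level == ">" and is_last:
            # '>' must absorb at least one remaining level of the topic.
            return len(topic_levels) > i
        if i >= len(topic_levels):
            return False  # the subscription has more levels than the topic
        if sub_level != "*" and sub_level != topic_levels[i]:
            return False  # literal level does not match
    # Without a trailing '>', the number of levels must be identical.
    return len(sub_levels) == len(topic_levels)


all_equities = topic_matches("EQ/>", "EQ/US/AAPL")
aapl_any_region = topic_matches("EQ/*/AAPL", "EQ/UK/AAPL")
other_symbol = topic_matches("EQ/*/AAPL", "EQ/US/MSFT")
```

With these rules, EQ/> picks up every equity topic below the first level, while EQ/*/AAPL picks up AAPL in any region but nothing for other symbols.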

You can learn more about Solace topics and wildcards here.

I have subscribed my queue to topic: EQ/US/AAPL.

With that done, you’re ready to run the query!

Once you have run your OneTick query, go to the PubSub+ UI and note that messages are in the queue.

As you can see, there are 8,278 messages in our queue.

This is how easy it is to publish messages from OneTick to PubSub+!

Writing Data from PubSub+ to OneTick

Now it’s time to consume data from PubSub+ and write it to a OneTick database. To keep things simple, we can reuse the messages already sitting in onetick_queue and write them to a OneTick database.

To read data from PubSub+, OneTick provides the omd::OneTickSolaceConsumer interface via its C++ API. Use this interface to connect to a Solace broker, make subscriptions, process received messages, and propagate those messages to the Custom Serialization interface.

To make things simple for us, OneTick distribution comes with a C++ example (examples/cplusplus/solace_consumer.cpp) that shows you how to do that. You can go through the code to see how to use the OneTick/Solace API but for the purpose of this post, we will simply run the code. However, before we can write the data from PubSub+ to a OneTick database, we need to create/configure that database.

I have created a database called solace by following these steps:

- Create a data directory for the database. I have created mine at: C:/omd/one_market/data/data/solace

- Create a database entry in your locator file. Here is my locator entry for the solace db:

    <db ID="solace" SYMBOLOGY="SOLACE" >
      <LOCATIONS>
        <location access_method="file" location="C:\omd\data\solace" start_time="20000101000000" end_time="20300101000000" />
      </LOCATIONS>
      <RAW_DATA>
        <RAW_DB ID="PRIMARY" prefix="solace.">
          <LOCATIONS>
            <location mount="mount1" access_method="file" location="C:\omd\data\solace\raw_data" start_time="20000101000000" end_time="20300101000000" />
          </LOCATIONS>
        </RAW_DB>
      </RAW_DATA>
    </db>
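Locator entries are easy to get subtly wrong, so before going further it can help to confirm that the fragment is well-formed and points where you expect. The Python sketch below parses the entry above with the standard library. It is only a sanity check under my own assumptions: `summarize_locator_entry` is a hypothetical helper, and OneTick’s own locator parser may accept or require things this check does not know about.

```python
import xml.etree.ElementTree as ET

# The locator entry from this post, kept in a raw string so that the
# Windows backslashes are taken literally.
LOCATOR_ENTRY = r"""
<db ID="solace" SYMBOLOGY="SOLACE">
  <LOCATIONS>
    <location access_method="file" location="C:\omd\data\solace"
              start_time="20000101000000" end_time="20300101000000" />
  </LOCATIONS>
  <RAW_DATA>
    <RAW_DB ID="PRIMARY" prefix="solace.">
      <LOCATIONS>
        <location mount="mount1" access_method="file"
                  location="C:\omd\data\solace\raw_data"
                  start_time="20000101000000" end_time="20300101000000" />
      </LOCATIONS>
    </RAW_DB>
  </RAW_DATA>
</db>
"""


def summarize_locator_entry(xml_text):
    """Parse one <db> entry and return the fields that matter for this walkthrough."""
    db = ET.fromstring(xml_text.strip())  # raises ParseError if not well-formed
    archive = db.find("./LOCATIONS/location")
    raw = db.find("./RAW_DATA/RAW_DB/LOCATIONS/location")
    return {
        "db_id": db.get("ID"),
        "archive_location": archive.get("location"),
        "raw_location": raw.get("location"),
        "raw_mount": raw.get("mount"),
    }


summary = summarize_locator_entry(LOCATOR_ENTRY)
```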

Now that we have our database configured, we are ready to run our sample code. We will need to provide the following details to run the example:

- PubSub+ connection details: host:port, username, password, vpn

- endpoint: which topic/queue to subscribe to

- OneTick db: name of database we will be writing data to

If the queue doesn’t exist, our code will administratively create it and map topics to it. In our case, we already have a queue (onetick_queue) that we will be consuming from.
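If you prefer to launch the example from a script, the details listed above map directly onto command-line flags. The Python sketch below only assembles the argument list. The flag names are the ones used in the command shown in this post; `build_consumer_args` and the connection values are placeholders I made up for illustration.

```python
def build_consumer_args(hostname, username, password, vpn, queue, dbname,
                        executable="solace_consumer.exe"):
    """Assemble the argument list for the OneTick solace_consumer example."""
    return [
        executable,
        "-hostname", hostname,
        "-username", username,
        "-password", password,
        "-vpn", vpn,
        "-queue", queue,
        "-dbname", dbname,
    ]


# Placeholder connection details (assumptions); substitute your own.
args = build_consumer_args(
    hostname="example-host.messaging.solace.cloud",
    username="example-user",
    password="example-password",
    vpn="example-vpn",
    queue="onetick_queue",
    dbname="solace",
)

# To actually run it from the directory containing the executable:
#   import subprocess
#   subprocess.run(args, check=True)
```

Passing the arguments as a list rather than one long string avoids quoting problems if a password contains spaces or shell metacharacters.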

As soon as we run the example, it will consume all the messages from the queue:

    C:\omd\one_market_data\one_tick\examples\cplusplus>solace_consumer.exe -hostname <host_name>.messaging.solace.cloud -username <username> -password <password> -vpn himanshu-demo-dev -queue onetick_queue -dbname solace
    20200509021426 INFO: Relevant section in locator
    <DB DAY_BOUNDARY_TZ="GMT" ID="solace" SYMBOLOGY="SOLACE" TIME_SERIES_IS_COMPOSITE="YES" >
      <LOCATIONS >
        <LOCATION ACCESS_METHOD="file" END_TIME="20300101000000" LOCATION="C:\omd\data\solace" START_TIME="20000101000000" />
      </LOCATIONS>
      <RAW_DATA >
        <RAW_DB ID="PRIMARY" PREFIX="solace." >
          <LOCATIONS >
            <LOCATION ACCESS_METHOD="file" END_TIME="20300101000000" LOCATION="C:\omd\data\solace\raw_data" MOUNT="mount1" START_TIME="20000101000000" />
          </LOCATIONS>
        </RAW_DB>
      </RAW_DATA>
    </DB>

After running the example, you will notice new raw data files have been created:

    C:\omd\data\solace\raw_data>dir
     Directory of C:\omd\data\solace\raw_data
    05/11/2020  05:41 PM    <DIR>          .
    05/11/2020  05:41 PM    <DIR>          ..
    05/11/2020  05:41 PM           245,644 solace.mount1.20200511214039
    05/11/2020  05:41 PM                 0 solace_mount1_lock
                   2 File(s)        245,644 bytes
                   2 Dir(s)  172,622,315,520 bytes free

Now, we need to run OneTick’s native_loader_daily.exe to archive the data:

    C:\omd\one_market_data\one_tick\bin>native_loader_daily.exe -dbname solace -datadate 20200511
    20200511214237 INFO: Native daily loader started
    20200511214237 INFO: BuildNumber 20200420120000
    Command and arguments: native_loader_daily.exe -dbname solace -datadate 20200511
    ...
    20200511214237 INFO: Picked up file C:\omd\data\solace\raw_data/solace.mount1.20200511214039 in raw db PRIMARY
    20200511214237 INFO: Total processed messages: 8,468 (1,721,228 bytes)
    20200511214237 INFO: Messages processed in last 0.046 seconds: 8,468 (1,721,228 bytes) at 184,652.958 msg/s (37,533,046.948 bytes/s)
    20200511214237 INFO: Last measured message latency: NaN
    20200511214237 INFO: Total processed messages: 8,468 (1,721,228 bytes)
    20200511214237 INFO: Messages processed in last 0.005 seconds: 0 (0 bytes) at 0.000 msg/s (0.000 bytes/s)
    20200511214237 INFO: Last measured message latency: NaN
    20200511214237 INFO: persisting sorted batch
    20200511214237 INFO: done persisting sorted batch
    20200511214237 INFO: done persisting sorted batches for database solace, starting phase 2 of database load. Total symbols to load: 1, number of batches: 1
    20200511214237 INFO: beginning sorted files cleanup
    20200511214237 INFO: done with sorted files cleanup
    20200511214237 INFO: loading index file; total records: 1
    20200511214237 INFO: loading symbol file
    20200511214237 INFO: renaming old archive directory, if exists
    20200511214237 INFO: Load Complete for database solace for 20200511
    Total ticks 8278
    Total symbols 1
    20200511214237 INFO: Processing time: 1

As you can see, 8,278 ticks have been archived – which is the same number of messages that were in the queue. You can now go to OneTick GUI and query the data from solace db:
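If you run this load regularly, you may want to automate that comparison instead of eyeballing it. Here is a small Python sketch that pulls the totals out of the loader output and compares them with the queue depth. It assumes the summary lines look exactly like the ones in the log above; `parse_loader_totals` is a hypothetical helper, and the queue count is simply typed in from the PubSub+ UI.

```python
import re

# A few lines copied from the loader output shown above.
LOADER_LOG = """\
20200511214237 INFO: Total processed messages: 8,468 (1,721,228 bytes)
20200511214237 INFO: Load Complete for database solace for 20200511
Total ticks 8278
Total symbols 1
"""


def parse_loader_totals(log_text):
    """Return (total_ticks, total_symbols) from the loader's summary lines."""
    ticks = re.search(r"^Total ticks (\d+)$", log_text, re.MULTILINE)
    symbols = re.search(r"^Total symbols (\d+)$", log_text, re.MULTILINE)
    if ticks is None or symbols is None:
        raise ValueError("loader summary not found in log")
    return int(ticks.group(1)), int(symbols.group(1))


QUEUE_MESSAGE_COUNT = 8278  # message count seen in the PubSub+ UI earlier

total_ticks, total_symbols = parse_loader_totals(LOADER_LOG)
counts_match = total_ticks == QUEUE_MESSAGE_COUNT
```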

That’s it!

I’ve shown you how easy it is to publish data from OneTick to PubSub+, read it back, and write to a OneTick database.

We are excited to have OneTick users like yourself take advantage of features like rich hierarchical topics, wildcards, support for open APIs and protocols, and the dynamic message routing that enables an event mesh.

If you would like to learn more about the API, please reach out to your OneTick account manager or support. If you would like to learn more about how to get started with PubSub+, please reach out to us here or check out our Solace with OneTick page.

The post Using Solace PubSub+ with OneTick Time Series Database appeared first on Solace.
