... and a double NAT'ed Ubiquiti Dream Machine Pro

Wait, what? I just want to access a Kubernetes cluster in a secure way ... should be easy.

[2022-03-10] Update from Google! I just got this message: "We are writing to let you know that starting June 15, 2022, we will remove the restriction on the Internet Key Exchange (IKE) identity of peer Cloud VPN gateways."

In practice this means that NAT'ed setups will become easier to manage, because GCP will no longer require the peer's remote IP to match its remote ID.

At GOALS we are cloud native, and we are serious about security. As a consequence, our Kubernetes clusters are provisioned with private endpoints only, and all nodes have internal IP addresses. This is great, but a question immediately arises: how do we operate such a cluster when we cannot access it from outside the VPC?

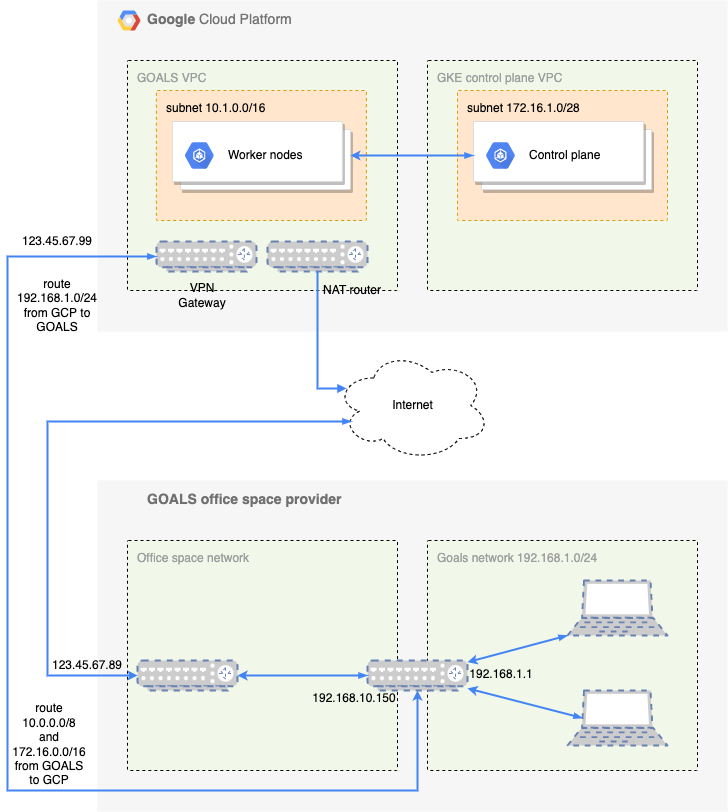

Here is a high-level overview of what we have:

At the top of the diagram we see the private Google-managed Kubernetes (GKE) cluster. A Kubernetes cluster consists of a control plane and worker nodes. In the case of GKE, Google manages the control plane (API server, etcd nodes, etc), the underlying VMs the control plane runs on, and the underlying VMs the worker nodes run on.

We have set up our own VPC and a subnet where the worker nodes are running, and Google creates a managed VPC where the control plane is running. Google automatically peers the control plane VPC with our VPC.

At the bottom of the diagram we see an overview of our office space network. Since we are a startup, we rent office space and share the network with other tenants. We have connected a Ubiquiti Dream Machine Pro to the office space network and created our own GOALS network where we connect our workstations.

Obviously, running eg `kubectl describe nodes` from my workstation in our office network doesn't work, since `kubectl` needs access to the cluster's API server. So, how can we connect our office network to our VPC in a secure way and enable management of the GKE cluster using `kubectl`?

## A note on our infrastructure

We use Terraform to provision all GCP resources. Google provides opinionated Terraform modules for managing GCP resources in the `terraform-google-modules` collection.

Our infra leverages a shared VPC and we use the project factory module to create the host project and the service projects.

The VPN will be provisioned in the host project that owns the VPC.

Our GKE clusters are created with the private cluster Terraform module.

## Prerequisites and preparations

There are a few things we need to ensure and some information we must gather before we can start.

First, we need admin access to the Ubiquiti Dream Machine (UDM) and a Google user with the Network Management Admin role.

Now you need to gather the following:

- The office space external IP address, eg 123.45.67.89

- The GOALS network subnet range, eg 192.168.1.0/24

### Ensure that the Kubernetes cluster is prepared

Two configuration entries must be right when setting up GKE to enable VPN access to the cluster.

- `master_authorized_networks` needs to include the office network subnet range, ie `192.168.1.0/24` in our case.
- VPC peering must be configured to export custom routes. In this particular case it means that the custom network route that the VPN creates to enable communication from the VPC to the office network will also be available in the Google-managed VPC that hosts the GKE control plane. This is enabled by adding the following Terraform configuration to the GKE setup:

```
module "kubernetes_cluster" {
  source  = "terraform-google-modules/kubernetes-engine/google//modules/private-cluster"
  version = "18.0.0"
  ...
}

resource "google_compute_network_peering_routes_config" "peering_gke_routes" {
  peering = module.kubernetes_cluster.peering_name
  network = var.vpc_id

  import_custom_routes = false
  export_custom_routes = true
}
```
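For the first entry, a minimal sketch of what the `master_authorized_networks` input might look like in the private cluster module is shown here. The `display_name` value is a hypothetical label, and the CIDR is the example office subnet from the prerequisites; the rest of the cluster configuration is left out.

```hcl
module "kubernetes_cluster" {
  source  = "terraform-google-modules/kubernetes-engine/google//modules/private-cluster"
  version = "18.0.0"

  # Other cluster settings are omitted in this sketch.

  # Allow the office subnet to reach the private control plane endpoint.
  master_authorized_networks = [
    {
      cidr_block   = "192.168.1.0/24" # example office subnet range
      display_name = "goals-office"   # hypothetical label, pick your own
    },
  ]
}
```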

### Shared VPN secret

Generate a shared secret that will be used for authentication of the VPN peers and put it into a new secret in GCP Secret Manager, name it **office-vpn-shared-secret**.
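If you prefer to create the secret with Terraform rather than by hand, a sketch could look like the following. It assumes the `hashicorp/random` provider is available and a 4.x `google` provider; the resource names are hypothetical. Note that the generated value is stored in the Terraform state, so the state needs to be protected accordingly.

```hcl
# Generate a random pre-shared key (assumes the hashicorp/random provider).
resource "random_password" "office_vpn" {
  length  = 32
  special = false
}

# Create the secret container in Secret Manager.
resource "google_secret_manager_secret" "office_vpn_shared_secret" {
  project   = var.project_id
  secret_id = "office-vpn-shared-secret"

  replication {
    # google provider 4.x syntax; 5.x and later use an `auto {}` block instead.
    automatic = true
  }
}

# Store the generated key as the first version of the secret.
resource "google_secret_manager_secret_version" "office_vpn_shared_secret" {
  secret      = google_secret_manager_secret.office_vpn_shared_secret.id
  secret_data = random_password.office_vpn.result
}
```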

### Create a Cloud VPN Gateway

For this part we will use the [VPN](https://github.com/terraform-google-modules/terraform-google-vpn) module, setting it up in the host project like this:

```
locals {
  name = "office-vpn"
}

resource "google_compute_address" "vpn_external_ip_address" {
  project      = var.project_id
  name         = local.name
  network_tier = "PREMIUM"
  region       = var.region
  address_type = "EXTERNAL"
}

data "google_secret_manager_secret_version" "office_vpn_shared_secret" {
  project = var.project_id
  secret  = "office-vpn-shared-secret"
}

module "office_site_to_site" {
  source  = "terraform-google-modules/vpn/google"
  version = "2.2.0"

  project_id         = var.project_id
  network            = var.vpc_id
  region             = var.region
  gateway_name       = local.name
  tunnel_name_prefix = local.name
  shared_secret      = data.google_secret_manager_secret_version.office_vpn_shared_secret.secret_data
  ike_version        = 2
  peer_ips           = [ var.office_public_ip ]
  remote_subnet      = var.office_subnet_ranges
  vpn_gw_ip          = resource.google_compute_address.vpn_external_ip_address.address
}

resource "google_compute_firewall" "allow_office_traffic" {
  project     = var.project_id
  name        = "${local.name}-allow-office-traffic"
  network     = var.vpc_id
  description = "Allow traffic from the office network"

  allow { protocol = "icmp" }

  allow {
    protocol = "udp"
    ports    = [ "0-65535" ]
  }

  allow {
    protocol = "tcp"
    ports    = [ "0-65535" ]
  }

  source_ranges = var.office_subnet_ranges
}
```

`google_compute_address.vpn_external_ip_address` creates the external static IP address which becomes the VPN endpoint on the GCP end.

`google_secret_manager_secret_version.office_vpn_shared_secret` fetches the shared secret used to authenticate the VPN peers.

The `office_site_to_site` module creates a "classic" Cloud VPN. The UDM does not support BGP yet, which means that we cannot create an "HA Cloud VPN" variant.

`google_compute_firewall.allow_office_traffic` allows traffic originating from the office subnet (`192.168.1.0/24`) to enter our VPC.
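The configuration references a handful of input variables that are not declared in the post. A sketch of possible declarations follows; the variable names come from the configuration, while the types, descriptions and the validation rule are assumptions.

```hcl
variable "project_id" {
  description = "ID of the host project that owns the shared VPC."
  type        = string
}

variable "region" {
  description = "Region for the VPN gateway and its static IP address."
  type        = string
}

variable "vpc_id" {
  description = "The shared VPC network that the VPN gateway attaches to."
  type        = string
}

variable "office_public_ip" {
  description = "External IP address of the office space, eg 123.45.67.89."
  type        = string
}

variable "office_subnet_ranges" {
  description = "Office subnet ranges in CIDR notation, eg [\"192.168.1.0/24\"]."
  type        = list(string)

  # Reject entries that are not parseable as CIDR ranges.
  validation {
    condition     = alltrue([for r in var.office_subnet_ranges : can(cidrhost(r, 0))])
    error_message = "Each entry in office_subnet_ranges must be a valid CIDR range."
  }
}
```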

After applying the configuration, the VPN tunnel will be in an error state because it cannot connect to its peer. This is expected since we have not set up the UDM side yet.

### Create a VPN network on the UDM Pro

The UDM Pro does not support configuration by code as far as I know, so here we need to resort to using the management GUI manually.

Go to Settings->Networks->Add New Network, choose a name and select VPN->Advanced->Site-to-site->Manual IPSec.

**Pre-shared Secret Key** is the `office-vpn-shared-secret` from above.

**Public IP Address (WAN)** is the IP address the UDM has on the office space network, ie it is __not__ the public IP our office space provider has. For example `192.168.10.150`.

In the **Remote Gateway/Subnets** section, add the subnet ranges in your VPC that you want to access from the office, eg `10.0.0.0/8` and `172.16.0.0/16`.

The **Remote IP Address** is the public static IP that was created for the VPN endpoint in GCP, eg `123.45.67.99`.

Expand the Advanced section and choose `IKEv2`. Leave PFS and Dynamic routing enabled.

Save the new network.
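To avoid looking up the **Remote IP Address** in the GCP console, the reserved address can be exposed as a Terraform output. This is a small sketch; the output name `vpn_gateway_ip` is hypothetical.

```hcl
# Expose the static IP of the Cloud VPN gateway so it can be copied
# into the UDM's Remote IP Address field.
output "vpn_gateway_ip" {
  description = "External IP address of the Cloud VPN gateway."
  value       = google_compute_address.vpn_external_ip_address.address
}
```

After `terraform apply`, running `terraform output vpn_gateway_ip` prints the address.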

Unfortunately we are not ready yet, because with the current configuration the UDM will identify itself using the WAN IP we have configured, which doesn't match the IP it connects to GCP with.

To fix this last part of the configuration we need to `ssh` into the UDM Pro. Once we are on the machine, we can update the IPSec configuration.

```
$ cd /run/strongswan/ipsec.d/tunnels
$ vi .config
```

Add the following line just below the `left=192.168.10.150` line:

```
leftid=123.45.67.89
```

This makes the UDM identify itself using its actual public IP (the office space external IP) when it connects to the VPN on the GCP end.

Finally, refresh the IPSec configuration:

```
$ ipsec update
```

## That's it!

Verify that the connection on the UDM is up and running by invoking:

```
$ swanctl --list-sas
```

The output should list information about the tunnel, and the tunnel should be in `ESTABLISHED` state.

Now the VPN tunnel state in GCP should move into a green state as well.

And, finally, accessing the Kubernetes cluster from a workstation in the GOALS office network is possible:

```
$ kubectl get nodes
NAME                                      STATUS   ROLES    AGE   VERSION
gke-XXXXX-controller-pool-28b7a87b-9ff2   Ready    <none>   17d   v1.21.6-gke.1500
```

We now have a setup looking like below:

## Troubleshooting

While connected to the UDM, run

```
$ tcpdump -nnvi vti64
```

to see all traffic routed via the VPN tunnel.

Sometimes routes are cached on the workstations, eg on Mac you can run

```
$ sudo route -n flush
```

a couple of times and disable/enable Wifi to make sure that your routing configuration is up to date.
