The world is changing. I feel it in my local host. I feel it in my operating system. I smell it in the virtual machine.

It began with the forging of the Great Docker Images.

Three were given to the DevOps Engineers, immortal, wisest, and most difficult to explain to their friends and relatives.

Seven to the Developers, great coders and application craftsmen.

And nine, nine rings were gifted to the race of Operations, who, above all else, sought control and orchestration.

But they were, all of them, deceived, for a new image update was made.

In the land of DockerHub, in the fires of Mt Volume, the Dark Lord Sauron pushed in secret a new image, to create all new containers. And into its Dockerfile he set his base image, his run instructions, and his ENTRYPOINT commands to be executed when the containers were created. One Docker Image to rule them all!

One by one, the admins of Middle Earth pulled the new image

And there were none of them who said “it works on my machine”!

In this blog I am going to attempt to force as many LOTR references as possible. I can't promise that they will all be good. If you have any better ones then please comment below as I would love to hear them! I hope you all enjoy! PS I am actively looking for new work in the Cloud so please feel free to start a conversation if you are looking for a new addition to your team.

Table of Contents:

- Why should you use Docker?

- Virtual Machines vs Containers

- How does Docker work?

- Dockerfiles

- Images and Containers

- DockerHub and Registries

- How does Docker streamline the development pipeline?

- How do you manage multiple containers?

- DIY Docker Image

- Conclusion

Why should you use Docker?

Middle Earth is a fantastical world full of many peoples, beasts, and fell creatures, and the local environments between them are not always the same. This is where Docker comes in. Docker allows your Fellowship to package an application and its dependencies into a self-contained, isolated unit (called a Docker Container) that can run consistently across different environments, be it the Forests of Fangorn, the rolling plains of Rohan, or the fiery pits of Mordor.

But the power of Docker doesn't stop at portability and consistent environments. Docker is an amazing tool that encompasses features for version control, CI/CD integration, security, isolation, and an extensive ecosystem which I will touch on as we move along.

Virtual Machines vs Containers

Imagine if the One Ring were actually a virtual machine and Mr Frodo was tasked to carry it all the way across Middle Earth to chuck it in another developer's local machine instead of Mount Doom. That thang would be heeeeavy! Weighed down by the immense crushing weight of the operating system, Mr Frodo would probably never make it out of the Shire!

With the help of Docker containers, we can make Mr Frodo's burden so much less arduous! Containers, unlike VMs, are lightweight, isolated units that package an application and its dependencies without bundling an entire guest OS; they share the host's kernel instead. With the powers of Docker Compose, Frodo and Sam could even carry many containers to Mordor's local host with ease, but we'll get to that later.

Containers isolate applications and their dependencies from one another (on Linux, through kernel features such as namespaces and cgroups). This helps you "keep it secret and keep it safe", as per Gandalf's security instructions, from any malicious Nazgûl that might seek to access a neighboring container or exploit its vulnerabilities.

How does Docker work?

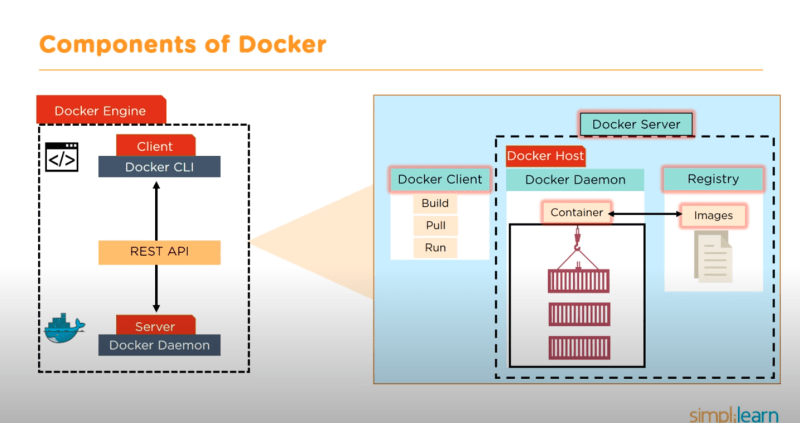

At its core, Docker uses a client-server architecture made up of the Docker Client and the Docker Daemon, which are packaged together as the Docker Engine. The rest of its key features include images, containers, and registries, but I will get more into these later.

The Docker Client is where your interaction as a user primarily takes place, be it through the CLI or Docker Desktop. When a command is executed, the Client translates this command into a REST API call for the Daemon.

The Docker Daemon is not a Balrog. Full stop. Disappointing, I know, but it is comparably powerful. The Daemon, as the server component of this architecture, processes requests sent by the Client. It builds, runs, and manages Docker containers, coordinating their lifecycles based on the commands it receives.

Encapsulating both the Client and the Daemon is the Docker Engine. The Docker Engine is the application installed on the host machine that provides the runtime capabilities to build and manage your Docker containers. It is also what allows the Daemon to interact with the operating system's underlying resources, acting as the intermediary between your commands (via the Docker Client) and the execution of those commands (by the Docker Daemon).
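To make the Client-to-Daemon hop a little more concrete, here is a minimal sketch in Python of how a handful of CLI commands correspond to Docker Engine API requests. The lookup table and the function name are hypothetical and only for illustration. The real client does far more (versioned paths, query parameters, request bodies, streaming), so treat this as a rough map rather than how the Docker CLI is actually written.

```python
# Hypothetical lookup table: a few docker CLI commands and the Docker Engine
# API request (HTTP method, path) each one roughly corresponds to.
CLI_TO_API = {
    "version": ("GET", "/version"),
    "ps": ("GET", "/containers/json"),
    "images": ("GET", "/images/json"),
    "pull": ("POST", "/images/create"),
}


def to_api_request(command: str) -> str:
    """Return the 'METHOD /path' request line for a known CLI command."""
    method, path = CLI_TO_API[command]
    return f"{method} {path}"


if __name__ == "__main__":
    for cmd in CLI_TO_API:
        print(f"docker {cmd:<8} -> {to_api_request(cmd)}")
```

The point is simply that the Client never builds or runs anything itself; it only asks the Daemon to do so over HTTP.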

Dockerfiles

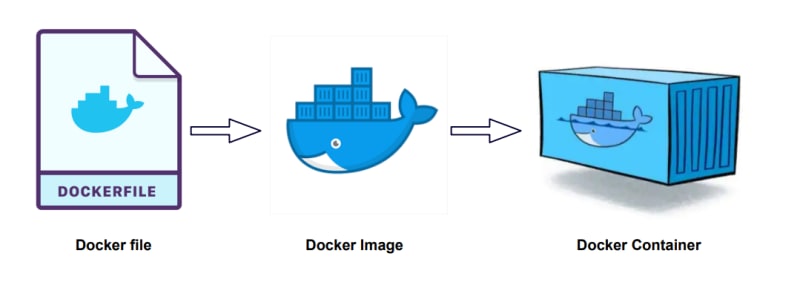

Think of Dockerfiles as the DNA of Docker Images... Or maybe in this context, how the cruelty, hate, and malice form the essence of the One Ring. When we issue the docker build command, Docker reads these instructions, step by step, from the Dockerfile and builds your Docker Image. Each instruction represents a specific action, such as installing packages, copying files, or running commands.

The syntax of Dockerfiles is quite simple and straightforward. Conventionally, instructions are written in ALL CAPS to distinguish them from their arguments. While the specific instructions you need will depend on your application's requirements, here are some essential and commonly used ones, some of which I'll be using in a quick tutorial later on in this blog:

FROM: This sets the base image on which your Docker Image will be built and is typically the first instruction in a Dockerfile.

WORKDIR: Sets the working directory inside the container where subsequent commands will be executed.

RUN: Executes commands inside the container. Often used for dependency install or application config.

COPY: Copies files/folders from the build context on your machine into the image's file system. This is preferred for simple file copying.

ADD: Is similar to COPY except it allows features like URL downloads and extraction of compressed files.

CMD: Sets the default command and/or arguments for a container. It is overridden by any arguments you pass to docker run.

ENTRYPOINT: Similar to CMD, but arguments passed to docker run are appended to it rather than replacing it. It can only be overridden explicitly with the --entrypoint flag.

EXPOSE: Informs Docker which ports the container's application listens on. It should be noted that this does not publish the ports; it only documents them as metadata.
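The interplay between CMD and ENTRYPOINT trips up a lot of hobbits, so here is a small Python model of it. This is only a sketch, assuming both instructions use the exec (JSON array) form; the function name is made up for illustration, and the --entrypoint flag is deliberately left out.

```python
from typing import List, Optional


def effective_command(
    entrypoint: List[str],
    cmd: List[str],
    run_args: Optional[List[str]] = None,
) -> List[str]:
    """Model the argv a container starts with (exec-form instructions only)."""
    # Arguments given to `docker run` replace CMD; otherwise CMD is the default.
    tail = run_args if run_args else cmd
    # ENTRYPOINT, when set, always stays at the front of the command.
    return entrypoint + tail


if __name__ == "__main__":
    # ENTRYPOINT only: run arguments are appended to it.
    print(effective_command(["python", "/app/gollum.py"], [], ["Hello, Middle Earth"]))
    # CMD only: run arguments replace it entirely.
    print(effective_command([], ["python", "app.py"], ["bash"]))
    # Both set, no run arguments: CMD supplies the default arguments.
    print(effective_command(["python", "/app/gollum.py"], ["My precious"]))
```

In short, CMD is the part you expect people to swap out, while ENTRYPOINT is the part you expect to stay put.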

Images and Containers

Docker Images, or container images, are the templates used to run containers. It is important to think of them as immutable and read-only. An image is essentially a snapshot of a container's file system. Once you create a Docker Image, it does not change. If you think you "changed" it, what you have actually done is create an entirely new image.

When you launch a container using an image, a writable layer (sometimes referred to as the "container layer") is added. This writable layer is attached to the container and allows for data writes to occur within the container. Instead of altering the image itself, any changes or data writes happen within this writable layer. This is what makes the container mutable - changes are isolated to the running container and do not affect the underlying image. It also enables containers to have their own local storage and system data.
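If it helps, the relationship between read-only image layers and a container's writable layer can be modeled in a few lines of Python. This is a toy model, not how Docker's storage drivers are implemented: the file paths and contents are invented, and it ignores things like file deletions.

```python
from collections import ChainMap
from types import MappingProxyType

# Read-only image layers, oldest first (file path -> contents). Hypothetical data.
base_layer = MappingProxyType({"/etc/os-release": "Debian", "/app/gollum.py": "v1"})
app_layer = MappingProxyType({"/app/gollum.py": "v2", "/app/smeagol.txt": "art"})
IMAGE_LAYERS = (base_layer, app_layer)


def start_container(image_layers):
    """Return a file system view: a fresh writable layer over the image layers."""
    writable_layer = {}
    # ChainMap searches left to right, so the newest layer must come first.
    return ChainMap(writable_layer, *reversed(image_layers))


frodo = start_container(IMAGE_LAYERS)
sam = start_container(IMAGE_LAYERS)

# Writes land in frodo's writable layer only.
frodo["/tmp/ring.log"] = "heavy"
frodo["/app/gollum.py"] = "patched"

print(frodo["/app/gollum.py"])      # patched
print(sam["/app/gollum.py"])        # v2
print("/tmp/ring.log" in sam)       # False
print(app_layer["/app/gollum.py"])  # v2 (the image itself is untouched)
```

Two containers started from the same image share the image layers but never see each other's writes, which is exactly why the image can stay immutable.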

DockerHub and Registries

DockerHub is a cloud-based registry provided by Docker which serves as a centralized storage, sharing, and management platform for Docker Images. It's kind of like GitHub, but for Docker. There are other container registries, such as Amazon's Elastic Container Registry (ECR), Google's Artifact Registry (the successor to Container Registry, GCR), Azure Container Registry (ACR), and open-source projects like Harbor. Fundamentally, they all do the same thing, give or take some additional features and pricing structures.

As on GitHub, a registry is split into repositories. Docker provides several commands to interact with these repositories. You can use the docker pull command to retrieve images from a repo and the docker push command to upload your own images. The naming convention used to specify a particular image within a repo goes like this: <username>/<repository>:<tag>. For example, lsauron/containerofrings:latest.
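As a quick illustration of that naming convention, here is a minimal Python sketch that splits an image name into its parts. It only handles the simple <username>/<repository>:<tag> form described above (no registry hostnames, ports, or digests), and the class and function names are my own invention. Falling back to latest mirrors what Docker does when you leave the tag off.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    username: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split '<username>/<repository>[:<tag>]' into its three parts."""
    name, _, tag = ref.partition(":")
    username, slash, repository = name.partition("/")
    if not slash or not username or not repository:
        raise ValueError(f"expected <username>/<repository>[:<tag>], got {ref!r}")
    # Like Docker, fall back to the 'latest' tag when none is given.
    return ImageRef(username, repository, tag or "latest")


if __name__ == "__main__":
    print(parse_image_ref("lsauron/containerofrings:latest"))
    print(parse_image_ref("ghope94/repo-of-the-ring"))
```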

How does Docker streamline the development pipeline?

In the realm of Middle-earth, Docker proves to be an invaluable tool in streamlining the development pipeline for Frodo and his companions. Here's how Docker facilitates a smooth and efficient development process throughout their quest:

Continuous Integration and Deployment (CI/CD): Docker integrates smoothly with popular CI/CD tools, enabling your Fellowship team to automate the build, test, and deployment processes. Leveraging Docker's containerization to package their applications and dependencies, your team can ensure consistency across the different stages of the pipeline. Automating these processes can save time, improve efficiency, and achieve fast iteration cycles.

Versioning and Rollbacks: Docker's image tags let your Fellowship keep track of each version of your application. That way, when you all get stuck in an avalanche on the Pass of Caradhras, you can take a step back, realize that you may need to roll back to a previous state, and consider that perhaps taking the shortcut through Moria will be better (probably not).

Scalability and Orchestration: As the darkness of Sauron grows in power and complexity, so too might your applications. Docker allows you to scale your applications horizontally, adding more containers as your load increases. Docker works seamlessly with orchestration tools like Kubernetes and Docker Swarm, making it easier to manage multiple containers and services, which can be a significant advantage for large-scale projects.

How do you manage multiple containers?

So what if Sam and Frodo needed to bring many Containers of Power to the local hosts of Mordor? Well, that's where Docker Compose comes in! Docker Compose is used for creating, managing, and cleaning up multi-container applications, particularly in development. It uses a special YAML file called 'compose.yaml' (also accepted as 'compose.yml', or under the legacy name 'docker-compose.yml') to specify the configuration and dependencies of the multiple services that make up your unified application.

However, for larger production deployments spanning multiple hosts, or for cases where you need high availability, tools like Docker Swarm or Kubernetes are more commonly used. These tools provide additional capabilities like load balancing, service discovery, and scaling services across multiple Docker hosts.

DIY Docker Image

Prerequisites

- Docker installed on your machine

- DockerHub account

- Python3 installed on your machine

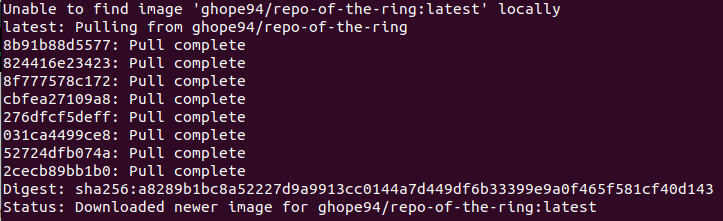

If you would just like to try running a custom-made image I created for this blog, all you have to do is open your terminal and run docker pull ghope94/repo-of-the-ring:latest. Here's what you should see returned:

What just happened is that Docker contacted DockerHub, its default registry, and downloaded each layer of the image that was not already present on the local host.

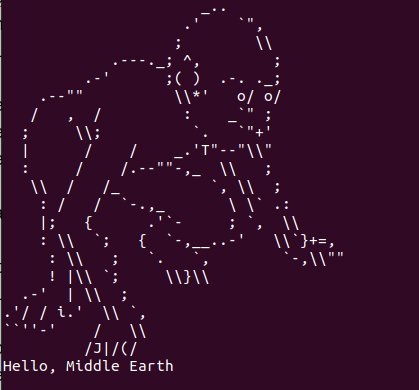

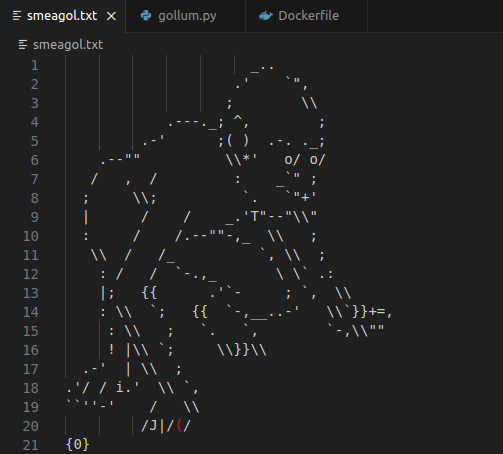

Moving on, now try entering docker run ghope94/repo-of-the-ring:latest "Hello, Middle Earth" and you should see a cool ASCII art depiction of Gollum!

If you would like to know how I was able to create this Docker Image from scratch then keep reading (don't worry, it's easy)...

1. Create a repo in DockerHub

2. Open your favorite code editor and create a new folder.

3. For this tutorial, we are going to create three files: a text file for our custom ASCII art, our Dockerfile, and a Python script which will read our ASCII file, format it with a custom message, and then print it to the console.

4. Source or create your own ASCII image and add it to your text file and save.

5. Copy this Python code and replace everything with your own corresponding information.

Here's what is happening in this script:

The smeagolsay function reads the ASCII art from smeagol.txt and formats it with the input text to display.

In the main execution block, the script first imports the sys module to access command-line arguments.

If arguments were passed to the script, they are combined into a single string and passed to the smeagolsay function. If no arguments were provided, a default string is used instead.

The return value of smeagolsay, which includes the ASCII art and the input text, is printed to the console.
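In case the code itself does not come through for you, here is a minimal sketch of gollum.py reconstructed from the description above. It is not necessarily identical to my original script: the default message, the layout (message on top, art below), and the fallback for a missing art file are all assumptions made for this sketch.

```python
from pathlib import Path

DEFAULT_MESSAGE = "My precious!"  # assumed default; pick your own


def smeagolsay(text, art_path="smeagol.txt"):
    """Return the input text followed by the ASCII art read from art_path."""
    # A relative path is resolved against the current working directory.
    path = Path(art_path)
    # Assumed fallback so the script still runs if the art file is missing.
    art = path.read_text(encoding="utf-8") if path.is_file() else "(ASCII art file not found)"
    return f"{text}\n\n{art}"


if __name__ == "__main__":
    import sys

    # Join all command-line arguments into one message, or use the default.
    message = " ".join(sys.argv[1:]) if len(sys.argv) > 1 else DEFAULT_MESSAGE
    print(smeagolsay(message))
```

You can try it locally before containerizing anything by running python3 gollum.py "Hello, Middle Earth" from the folder that holds smeagol.txt.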

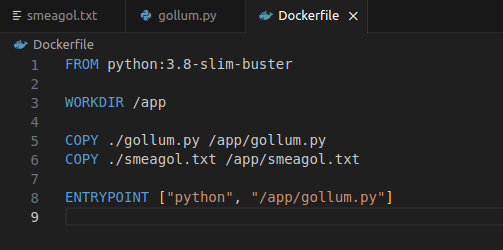

6. Create your first Dockerfile!

Here's what's going on in this Dockerfile:

FROM python:3.8-slim-buster: This line is telling Docker to use a pre-made Python image from Docker Hub. The version of Python is 3.8 and it is based on a "slim-buster" image, which is a minimal Debian-based image. This is the base image for your Docker container.

WORKDIR /app: This line is setting the working directory in your Docker container to /app. This means that all subsequent actions (such as copying files or running commands) will be performed in this directory.

COPY ./gollum.py /app/gollum.py: This line is copying the local file gollum.py into the Docker container, placing it in the /app directory and keeping its name as gollum.py.

COPY ./smeagol.txt /app/smeagol.txt: This line is doing the same thing as the previous line, but for the smeagol.txt file.

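ENTRYPOINT ["python", "/app/gollum.py"]: This line is specifying the command to be executed when the Docker container is run. In this case, it's running your Python script. Any arguments you pass to docker run after the image name, such as "Hello, Middle Earth", are appended to this command and reach the script.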
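Put together, the five instructions described above make up the whole Dockerfile. The comments are added here for readability; the instructions themselves are exactly the ones walked through line by line.

```dockerfile
# Start from a minimal Debian-based Python 3.8 image
FROM python:3.8-slim-buster

# Run all following instructions from /app inside the image
WORKDIR /app

# Copy the script and the ASCII art from the build context into the image
COPY ./gollum.py /app/gollum.py
COPY ./smeagol.txt /app/smeagol.txt

# Run the script when the container starts; docker run arguments are appended
ENTRYPOINT ["python", "/app/gollum.py"]
```

Save it as a file named Dockerfile (no extension) in the same folder as gollum.py and smeagol.txt.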

You've now finished this phase of the setup. We'll move over to the terminal next.

7. Open up your terminal and cd into the directory where you are storing the files we just created.

8. On the command line, run docker build -t <your-dockerhub-user>/<your-repo>:latest .

9. Then run docker run <your-dockerhub-user>/<your-repo>:latest "Hello, Middle Earth"

10. If you would like, you can push your new image to your DockerHub repo using docker push <your-dockerhub-user>/<your-repo>:latest.

And that's it! You have now created your very own personal Gollum! Good luck with that...

Conclusion

Docker has emerged as a powerful tool in the software development landscape, offering portability, consistency, and efficient resource utilization. It streamlines the development pipeline, enabling continuous integration, version control, and scalable deployments. With Docker, developers can easily manage multiple containers, leverage container orchestration platforms, and benefit from a thriving ecosystem. Embracing Docker allows us to navigate the complexities of software development while harnessing the magic of containerization to create robust and resilient applications.

I hope you found this at least as mildly amusing to read as I did to write. There were a lot of even more forced LOTR references, but I decided to spare you all.
