Once upon a time, NoSQL databases like MongoDB took the tech world by storm. They promised scalability and flexibility in handling unstructured data, and developers eagerly jumped on the bandwagon. Fast forward to today, and the hype surrounding NoSQL has certainly leveled off.

MongoDB's growth and plateau in the DB-Engines ranking, but it still ranks 4th overall

But its influence on the database landscape is undeniable. NoSQL has left a lasting legacy by shaping the way modern databases handle semi-structured data.

In this blog post, we will explore the lasting impact of NoSQL on today's database systems, focusing on the rise of semi-structured data storage. We will discuss various types of semi-structured data and compare the approaches taken by popular database platforms such as Postgres, Redshift, BigQuery, Snowflake, Databricks, DuckDB, and SQLite.

Traditional SQL databases share a very consistent and familiar approach to storing data. Columns (with types) and rows form a table, and each “cell” has a particular value of the column’s type for that individual row. The cell values in SQL databases have traditionally been individual values, a boolean, an integer, and text. In Computer lingo, this is called a scalar value, meaning that it only holds one value at a time, and the schema of that table forces the type. The S in SQL means Structured and this is exactly where the structure comes in.

This approach has worked for decades, but if you’ve ever built databases, you’ll know there are times when you actually need more than one value. Maybe a user has multiple email addresses. Maybe you want to store a list of tags. This type of data is easy to store in programming languages because they support arrays and hashmaps to store collections of values. But back in SQL land, it wasn’t that easy.

In recent years though, modern databases and warehouses have relaxed this constraint. It’s now possible, and sometimes even preferable, to store those arrays and maps in a database. Why the change of heart? Well, a few things happened:

- In the early 2010s, there was a huge rise in Document or NoSQL databases. They offered a lot of benefits with some drawbacks. And in particular, the recognition of the value of not always knowing schemas up front, particularly when dealing with data you may not control.

- More recently, we’ve seen rapid adoption of Datalake architectures where data is written to file stores and then queried (and thus typed) at read time. That data can be in a lot of formats, fancy ones like Avro or Parquet, or simple ones like JSON or the humble CSV. All of those (sans CSV) make it easy to include arrays and hashmaps, so data lakes need to be able to accept and query over that data.

With data lakes and document databases, there was still a lot of excitement to just use SQL, so SQL and these sets of values needed to find a way to work together. That brings us to the world we find ourselves in today, one of semi-structured data.

It will also come as no surprise that different services approach semi-structured data in slightly different ways. Today I want to cover all of the different techniques you’ll need.

New items on the menu

There are four new types that can be used to represent semi-structured data. Most services offer a subset of the options, with Postgres and DuckDB the only two offering all the options.

Some types such as STRUCTS and OBJECTS look identical at first glance but they have some subtle differences so it’s worth knowing exactly which one you’re working with. And if you’re only going to look at one, skip to the end and read about Variants.

Arrays

Arrays represent a list of values. The type of the values in those arrays is up for some debate depending on the service you’re using. For example BigQuery and Databricks require an explicit type, Postgres optionally can have a type, and Snowflake assumes arrays contain variants. Redshift takes it one step further and doesn’t have an explicit array type, recommending its version of a variant instead.

One of the most common ways to create an array is by using an Array aggregate function like you would any other aggregate function with a GROUP BY. But in this case, instead of COUNTing or SUMing the values, you’ll end up with an array of values for each of the groups.

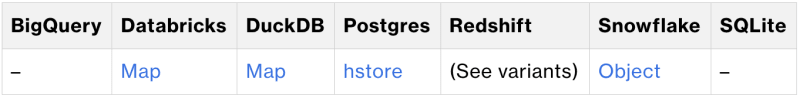

Objects (aka Maps aka …)

Depending on your school of programming, you may call this an object, a map, a hash, or a dictionary. All of these names represent the same thing: a set of key/value pairs. An important feature of Objects though is that there’s no specific restriction on the types. If you want all of your objects to have the exact same set of keys every time, you’re probably looking for the next option.

1️⃣ DuckDB’s Map is part way to a struct in that it requires all keys to be the same type, and all values to be the same type, but not that keys and values be the same type.

2️⃣ I have never seen a Postgres hstore in the wild. Do with that knowledge what you will.

Structs

Structs also act like an object with the additional twist that they have their own pre-defined schemas. Structs come out of the world of C but the pattern is widespread. Postgres calls it a composite type and that’s a good way to think about it: It’s a type made of other types, and the data will always have that shape or combination of types. This is as structured as semi-structured gets.

A very common example of a struct is a GeoCoordinate which would always contain a lat and long value. It’s so common that some services also have a separate GeoCoordinate type just for this purpose.

Variants

Variants are the catch-all. If you’re looking for a type without having to think too hard, this is the one. It’s not surprising that most services actually just call this what it’s most used for: JSON.

If you think about it, a JSON blob can be a lot of different types. Most obviously you might get an object or an array at the root of any blob, and as you navigate into JSON, it may contain strings, numbers, nulls, or booleans (🪦 dates). Variants can represent any of those things and each service usually includes a series of functions to actually figure out what type the variant actually is.

It’s worth pointing out SQLite and DuckDB do something a little funky here. They actually just store their JSON as text and only parse it at query time. It shouldn’t matter, but if you run into weird errors where it feels like you’re for some reason manipulating a string, you probably are!

1️⃣ If you’re in Postgres land, skip over JSON and go straight to JSONB.

2️⃣ It’s a bit sad that Databricks doesn’t have a variant option here. Let me know if I’m just missing it.

When to go semi-structured

Now that you’re familiar with all the new types available, it’s useful to know when to use them. As you’ve seen, each type can be used for a few different use cases, but arguably, you could avoid using them completely with some clever data structure design. So when does it make sense to reach for them?

The most obvious answer is when dealing with JSON. If you’re loading JSON data, particularly data that might have a structure that changes over time or has arbitrary nesting, it’s really nice to just not think about structures and types. Another way to say this is when you’re dealing with data where you don’t control the structure or expect the structure to change.

The other very reasonable use is when a single record or row needs to provide multiple values for a type. A list of tags is one of the most common cases. Sure, you could comma separate and string, but it’s foolproof to let your data service store it for you.

Wrapping it up

Hopefully, that gives you a much better sense of all the semi-structured types now at your disposal. There are lots to choose from, but in most cases, you should just reach for the Variant and you’ll be in good shape (unless you’re in Databricks, sadly).

We didn’t touch on the patterns to query structured data here, but all the doc links should point to examples, and if you’d like to see a post on that, please let us know. And, of course, if you have questions about the right types for your particular use case, structured or semi, swing by the OA Club and ask the experts.

📖 Want to read more like this? Check out our census.dev blog that captures tales from the Census Engineering flock.

P.S. Enjoy working with SQL and data? We’re hiring. Come work with us!

Top comments (0)