Monitoring today is extremely human-driven. The only thing we’ve automated to date is watching metrics and events and sending alerts when something goes wrong. Everything else (deploying collectors, building parsing rules, configuring dashboards and alerts, and troubleshooting and resolving incidents) requires a lot of manual effort from expert operators who intuitively know and understand the system being monitored.

Today’s monitoring tools need to be told what to look for

The challenge, as we leap into the cloud, Kubernetes, and microservices, is that it is becoming impossible for any single person in an organization to understand how it all works and know what to look for. Some organizations combat this by pushing monitoring down to the teams that build and run each microservice in production. This way, the problem is split up and handed to the teams that actually wrote the software running in our environments.

However, even these teams can be overwhelmed by all the different ways their service can fail when it interacts with other services in the wider environment. Monitoring your service’s latency and being alerted when it goes up is a good start, but it generally leads to hours of manual troubleshooting across multiple tools and teams to find out why it went up in the first place.
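To make that limitation concrete, here is a minimal Python sketch of the kind of hand-written latency rule described above. The class name `LatencyAlertRule` and the choice of a fixed 95th-percentile threshold are illustrative assumptions, not any particular tool’s API. Notice what the rule can and cannot tell you: it fires when latency is high, but says nothing about why.

```python
from dataclasses import dataclass
from statistics import quantiles


@dataclass
class LatencyAlertRule:
    """Hypothetical hand-written rule: fire when p95 latency exceeds a fixed threshold."""
    threshold_ms: float  # picked up front by a human operator

    def evaluate(self, latencies_ms: list[float]) -> bool:
        # quantiles(n=20) returns 19 cut points; index 18 is the 95th percentile.
        # Needs at least two samples.
        p95 = quantiles(latencies_ms, n=20)[18]
        return p95 > self.threshold_ms


rule = LatencyAlertRule(threshold_ms=250.0)
print(rule.evaluate([100.0] * 50 + [400.0] * 50))  # True: latency is up, cause unknown
```

The rule only encodes a failure mode someone anticipated; finding the root cause is still left to the on-call engineer.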

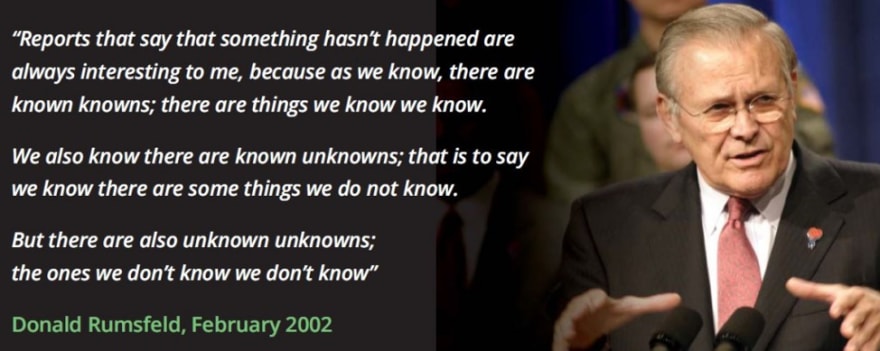

The result is that our alerts have a lot of blind spots. We can only set up alerts for the failure modes we can think of up front, so a new failure mode catches us off guard and too late, often going unnoticed until the service is down and users are affected. I believe the “great” Donald Rumsfeld said it best:

The other result is that our Mean-Time-To-Resolution (MTTR) is going up, because we now need to search through even more metrics and logs, across multiple services and teams, to figure out what the problem is before we can resolve it.

When I started in this space 6 years ago, the primary monitoring tools were Nagios and, increasingly, Graphite. As containers and microservices appeared, we needed new views of our environments: tracing became an important tool, and we had to collect vastly more data, all labeled correctly so we could easily search it and make sense of it all.

This resulted in a new wave of monitoring tools that could handle the increasingly large volume of metrics, logs and traces we had to collect, run analytics queries at scale, and present new views of our environments to help us humans make sense of it all.

This trend continues, and I’m excited to see advancements from the CNCF with their OpenTelemetry project and other initiatives that may finally allow all software vendors to standardize how we collect and label our monitoring data.

Observability’s natural endpoint is to provide a “single pane of glass”, an aspiration I’ve heard from many users over the years in the monitoring space. By having all your metrics, logs and traces in one system, you can more easily correlate and search between them to find the root cause.
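As a rough illustration of why having everything in one place helps, the sketch below pulls every log line within a time window around a metric spike, so an operator can read what happened nearby. The `LogLine` type, the `logs_near` helper and the two-minute default window are hypothetical choices for this example. It also shows the catch: a human still has to notice the spike and then read through the results.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class LogLine:
    """Hypothetical log record, already parsed and labeled by service."""
    timestamp: datetime
    service: str
    message: str


def logs_near(spike_at: datetime, logs: list[LogLine],
              window: timedelta = timedelta(minutes=2)) -> list[LogLine]:
    """Return log lines within +/- `window` of a metric spike, oldest first."""
    nearby = [line for line in logs if abs(line.timestamp - spike_at) <= window]
    return sorted(nearby, key=lambda line: line.timestamp)
```

Correlating by time is the simplest possible join between signals; shared labels such as `service` are what make narrower, more useful correlations possible.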

While this is all needed in the monitoring space and will make it easier for teams to search and troubleshoot what’s going on, it will still rely heavily on human operators to tell the observability tool what to look for and to search for the root cause. It will still miss all those “unknown unknowns” we weren’t looking for, until they surface in a way that starts having a larger impact on the service.

Stop Staring at the Single Pane of Glass

With so much time and investment required to set up and continuously tune our monitoring tools, it does feel a little like we’re becoming slaves to them.

They’re effectively like babies that don’t understand the environment they’re in, so we constantly need to guide them. When something goes wrong, they nag us with alerts and wake us up in the middle of the night. And as “parents”, we then have to spend ages figuring out the root cause to finally get them to stop crying.

To catch the “unknown unknowns”, I’ve seen teams put up dashboard panels around the office so everyone can do basic pattern recognition on the key metrics and, hopefully, detect that something is wrong before their service is impacted.

If you imagine monitoring in 5 years, do you really see us still staring at dashboards and constantly configuring our tools to tell them what to look for? I don’t, and I believe there is a better way.

Enter Autonomous Monitoring

Autonomous monitoring at its core uses machine learning to automatically detect incidents and correlate them to their root cause, completely unsupervised. You simply point your streams of metrics, logs and traces at the monitoring tool, and it figures out when you have an incident and shows you, on one screen, all the information you need to resolve it quickly.
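To give a feel for what “unsupervised” can mean for logs, the sketch below applies one deliberately simple idea: collapse each line into a template by masking variable fields, then flag templates in a recent window that never (or rarely) appeared during a baseline period. No alert rule says what to look for. This generic novelty-detection technique is a stand-in chosen for illustration, not a description of how Zebrium or any other product works; the masking regexes and the `max_count` parameter are hypothetical.

```python
import re
from collections import Counter

# Mask variable fields so lines emitted by the same log statement share a template.
# These two patterns are illustrative; real logs need many more.
_HEX = re.compile(r"\b0x[0-9a-fA-F]+\b")
_NUM = re.compile(r"\b\d+\b")


def to_template(line: str) -> str:
    """Replace hex ids and integers with placeholders."""
    line = _HEX.sub("<HEX>", line)  # hex first, so its digits aren't masked as numbers
    return _NUM.sub("<NUM>", line)


def rare_templates(baseline: list[str], window: list[str], max_count: int = 0) -> list[str]:
    """Return templates in `window` seen at most `max_count` times in `baseline`."""
    seen = Counter(to_template(line) for line in baseline)
    flagged: list[str] = []
    for line in window:
        template = to_template(line)
        if seen[template] <= max_count and template not in flagged:
            flagged.append(template)
    return flagged
```

A toy like this is noisy on real systems (every new deployment introduces “new” templates), which is exactly the gap a production approach has to close.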

No more configuring alerts and dashboards to tell the monitoring tool what to look for, and no more searching across multiple tools and gigabytes of logs to identify the root cause. The monitoring tool does this for you, increasing the rate at which incidents are detected and substantially reducing your MTTR.

Can It Actually Work?

Every field is being disrupted by machine learning right now, and the hype around its promise has never been higher. As a result, many monitoring vendors have jumped on the bandwagon with promises that largely haven’t been delivered. At least in the monitoring space, I believe we are firmly in what Gartner calls the “trough of disillusionment” when it comes to ML, because we’ve now seen so many bad examples of it.

Baron Schwartz from VividCortex gave a great talk a few years ago on why he believes we’re fooling ourselves with promises that machine learning and anomaly detection can take over from humans. For years I believed the same thing, until recently.

There are two major challenges to overcome. First, to detect incidents completely unsupervised, the ML needs to work across every single metric, log and trace as it is ingested in real time. This is a huge scaling problem for most environments today, which can produce billions of samples per environment each day. As a result, existing solutions let you apply anomaly detection algorithms to specific metrics, but this still requires a human to set up alert rules telling the system what to look for.
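For reference, per-metric anomaly detection often boils down to something like a rolling z-score. In the minimal sketch below, the function name, window size and threshold are illustrative assumptions. A human still has to choose which series to feed in and what threshold counts as anomalous, and the loop runs once per series, which is where the cost multiplies as the number of metrics grows.

```python
from statistics import mean, stdev


def zscore_anomalies(values: list[float], window: int = 30, threshold: float = 3.0) -> list[int]:
    """Return indices whose value lies more than `threshold` standard deviations
    from the mean of the preceding `window` samples of the same series."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any change at all as anomalous.
            if values[i] != mu:
                anomalies.append(i)
        elif abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies
```

Usage: `zscore_anomalies(cpu_samples)` on one hypothetical series returns the sample indices that would trigger an alert; repeating that for every metric, log count and trace span is the scaling problem described above.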

Second, because most solutions today do anomaly detection on the specific events or metrics defined in an alert rule, they alert at a very granular level. At the level of a single metric or alert, things get very noisy, so users who tried these approaches were spammed by their monitoring system and concluded that ML anomaly detection doesn’t work.
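One generic way to cut that noise is to stop alerting on each anomaly individually and instead group anomalies that occur close together in time into a single candidate incident. The sketch below does this with a simple time-gap rule; the `Anomaly` type and the five-minute `gap_s` default are hypothetical, and it illustrates the idea only, not any vendor’s method.

```python
from dataclasses import dataclass


@dataclass
class Anomaly:
    """Hypothetical anomaly event from any detector."""
    ts: float     # seconds since epoch
    source: str   # e.g. a metric name or log template


def group_into_incidents(anomalies: list[Anomaly], gap_s: float = 300.0) -> list[list[Anomaly]]:
    """Chain anomalies into one incident while each is within `gap_s` of the previous one."""
    incidents: list[list[Anomaly]] = []
    for event in sorted(anomalies, key=lambda a: a.ts):
        if incidents and event.ts - incidents[-1][-1].ts <= gap_s:
            incidents[-1].append(event)  # continues the current burst
        else:
            incidents.append([event])    # quiet gap: start a new incident
    return incidents
```

Five granular alerts become two notifications in this scheme, each carrying every related signal; time proximity alone is a crude grouping key, though, and unrelated problems that overlap in time will be merged.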

However, after the “trough of disillusionment” comes the “slope of enlightenment”. At Zebrium, we had some insights into how these two challenges could be overcome with new approaches in ML. We started with logs because they are typically the richest source of truth about what happened during an incident, and most users today get little value from their logs beyond searching them reactively during incidents. More importantly, we are already seeing fantastic results from logs.

In the next posts in this series, we will dive into why existing approaches haven’t delivered, and how Zebrium has come up with a unique Autonomous Log Monitoring solution that can currently catch 65% of your incidents and their root causes completely unsupervised, within hours.

And, if you can’t wait for the next blog in this series, please download the whitepaper here.

Posted with permission of the Author: David Gildeh@zebrium.
