Yes, it’s all about reliability: the thing that makes commercial software stand out and stick in people’s minds. I think the statement is self-explanatory, but to give an example: if Google were buggy, it would not be the first search engine that comes to everybody’s mind 😉.

Preface

The first key to having good software is having a good development process. At #matelso we are committed to providing an efficient and reliable digital experience platform as SaaS. So in today's article, I want to write about how I keep my DevOps routine clean, fast, reliable and efficient using build automation tools.

Disclaimer

This article is neither a tutorial nor an explanation of DevOps. It's just my approach to building and deploying software components, which in my opinion is good enough but might not be in yours. There may be no perfect solution to this challenge, so let's share our thoughts and experience to learn and improve our knowledge. Don't hesitate to criticize or support my opinion.

Remark: This article is meant for intermediate to advanced programmers who are interested in cloud-based software engineering.

Background

In the world of microservice architecture, every component of a software system is a standalone project. Each one therefore needs to go through the whole development and operations chain, as shown in the famous DevOps picture below:

Doing it all by hand sounds quite cumbersome and, to be honest, who likes to do that? 😢

Nah, we are programmers so we should be able to do better. Let's automate it 💪.

General tools that I use for automating the process

My daily tech routine is quite simple: I code on my Windows laptop, commit to a git repository, push it to GitLab and from there run my CI/CD pipeline to build, test and deploy my code. The key point in this routine is the CI/CD script that I run. This script can get long and unmaintainable if you use only shell scripting, which is what I did until recently, and that's exactly why I switched to build automation tools. If you wanna see how, continue reading ;)

Build automation tool

...is in fact software that builds your software. I know it might sound complicated at first :) but it's fairly simple.

When we talk about building a project, what we really mean in today's world is "dockerising" it: creating a Docker image out of your compiled code, which can then run on any container platform such as Docker Compose or Kubernetes.

Of course that is not all: a CI/CD script might include other routines such as QC, testing, publishing packages, etc. We refer to all of those as CI/CD stages.

My CI/CD script

My projects are mainly C# web projects, which require the following stages to go to production:

- build

- test

- quality assurance

- pack and publish NuGet packages

- rollout

Each of these stages requires different tooling and a different environment. For example, the build stage involves the docker CLI, while the pack stage needs the dotnet CLI and the rollout stage needs Helm and kubectl. Integrating all these stages in shell scripts is therefore not always easy. A build automation tool gives you a wrapper around the shell commands that can also ensure the correct environment for each particular stage. Let's see how much the script differs with and without these tools.
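To make the "wrapper that ensures the correct environment" idea concrete, here is a minimal sketch in plain shell. It assumes a hypothetical mapping from stage name to required CLIs (taken from the examples above) and is not SKBA's actual implementation, which isn't shown in this article. The function names `required_tools` and `missing_tools` are made up for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: verify that the CLIs a stage depends on are installed
# before the stage runs. The stage-to-tool mapping below is an assumption.

# Print the tools a given stage needs; fail for unknown stages.
required_tools() {
  case "$1" in
    build)   echo "docker" ;;
    pack)    echo "dotnet" ;;
    rollout) echo "helm kubectl" ;;
    *)       return 1 ;;
  esac
}

# Print the missing tools for a stage.
# Exit status: 0 = nothing missing, 1 = something missing, 2 = unknown stage.
missing_tools() {
  local stage="$1" tools tool missing=""
  tools="$(required_tools "$stage")" || { echo "unknown stage: $stage" >&2; return 2; }
  for tool in $tools; do
    command -v "$tool" >/dev/null 2>&1 || missing="$missing $tool"
  done
  if [ -n "$missing" ]; then
    echo "${missing# }"
    return 1
  fi
}
```

A stage script could then start with something like `missing_tools rollout || exit 1` and fail early with a readable message instead of halfway through a deployment.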

Old school: shell scripting

Let's take only the build stage into account. Here is a glimpse of what I used in the past:

    build:
      stage: build
      before_script:
        - docker login -u $CI_REGISTRY_USER -p $CI_REGISTRY_PASSWORD $CI_REGISTRY
        - if [ $? -ne 0 ]; then echo "ERROR MISSING DEPLOYMENT Token (create gitlab-deploy-token)"; exit $BUILD_REPLY; fi
        - echo "--== CI running on ${HOSTNAME} ==--"
        - echo "--== CI-BUILD for ${CI_PROJECT_PATH} ==--"
        - GIT_TAG=$(git describe --tags)
        - DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" && pwd )"
        - ENV_PREFIX=$(echo $CI_ENVIRONMENT_NAME | tr /a-z/ /A-Z/)
      script:
        - TO_DOCKERISE=$(find . -iname "*.sln" -exec basename -s .sln {} \;)
        - echo "dockerising project $TO_DOCKERISE"
        - docker build -f src/SOSICRED.Server/Dockerfile -t $CI_REGISTRY_IMAGE:$CI_BUILD_REF_NAME -t $CI_REGISTRY_IMAGE:$GIT_TAG -t $CI_REGISTRY_IMAGE:latest .
        - BUILD_REPLY=$?
        - echo "image build returned $BUILD_REPLY"
        - if [ $BUILD_REPLY -ne 0 ]; then echo "ERROR DOCKER IMAGE BUILD FAILED!!!"; exit $BUILD_REPLY; fi
        - echo "--== PUSHING IMAGE TO GITLAB REGISTRY ==--"
        - docker push $CI_REGISTRY_IMAGE:$CI_BUILD_REF_NAME
        - docker push $CI_REGISTRY_IMAGE:$GIT_TAG
        - docker push $CI_REGISTRY_IMAGE:latest
      after_script:
        - echo "--== DONE ==--"
      allow_failure: false
      environment:
        name: build
        url: https://10.8.3.64:3005/publicsuffixtree/json
      when: always
      only:
        - development
        - tags
      tags:
        - build # the GitLab runner's tag

As you can see, the script is fairly long for just building an image and, worse than that, it involves logic that gets out of control really fast, like dealing with git tags. If any of my colleagues read this, they'll remember that pain :)

Modern approach: build automation

For that I use the NUKE execution engine, which I forked and modified to suit my own tastes and use cases. Here is the link to their repository if you want to check it out: https://github.com/nuke-build/nuke

I named it SKBA, as in Sky Build Automation :) As of today I have no plans to release it as open source; I would rather find commercial collaboration for this evening project of mine. Here is what I could achieve using SKBA:

    build:
      stage: build
      script:
        - skba build-and-push-docker-image --docker-file .\src\SOSICRED.Server\Dockerfile
      allow_failure: false
      rules:
        - if: ($CI_COMMIT_BRANCH == "development" || $CI_COMMIT_TAG =~ /^\d+\.\d+\.\d+$/)
          when: always
        - if: $CI_COMMIT_BRANCH == "master"
          when: never
        - if: $CI_COMMIT_BRANCH
          when: manual
      tags:
        - skba-runner

As you can see, a single command takes care of building, tagging, versioning and pushing my Docker images to GitLab's container registry. Isn't that interesting? 🥳
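For readers who want a feel for what such a single command has to do internally, here is a rough shell sketch. It is only an illustration under my own assumptions: the function name `build_and_push` and its argument order are hypothetical, and it says nothing about how SKBA itself is implemented. It uses only the standard `docker build`, `docker tag` and `docker push` commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build one image, tag it several times, push every tag.
# Usage: build_and_push <dockerfile> <image> <tag> [<tag>...]
build_and_push() {
  local dockerfile="$1" image="$2" tag
  shift 2
  [ "$#" -gt 0 ] || { echo "at least one tag is required" >&2; return 2; }

  # Build once, under the first tag.
  local first="$1"
  docker build -f "$dockerfile" -t "$image:$first" . || return 1

  for tag in "$@"; do
    # Additional tags just point at the image built above; nothing is rebuilt.
    [ "$tag" = "$first" ] || docker tag "$image:$first" "$image:$tag" || return 1
    docker push "$image:$tag" || return 1
  done
}
```

In a pipeline this could be called as `build_and_push src/SOSICRED.Server/Dockerfile "$CI_REGISTRY_IMAGE" "$GIT_TAG" latest`, which already removes most of the repetition from the old script.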

Additionally, it brings security improvements as well. For instance, the first command in the old-school approach is a docker login command that takes a plain-text password as an argument. This is not secure and even triggers a warning from the docker CLI. In the cleaner approach, however, there is no trace of authentication or of any password in the script. It is all handled by SKBA.
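If you stay with plain shell, the docker CLI warning can be avoided by passing the password on standard input with the `--password-stdin` flag instead of `-p`. A minimal sketch, reusing GitLab's predefined registry variables from the old script; the function name `registry_login` is hypothetical, and this is not a description of what SKBA does internally.

```shell
#!/usr/bin/env bash
# Hypothetical helper: log in to the GitLab container registry without
# putting the password on the command line.
registry_login() {
  # --password-stdin makes docker read the secret from stdin, so it does not
  # show up in the process list or in the shell's command history.
  printf '%s' "$CI_REGISTRY_PASSWORD" |
    docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"
}
```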

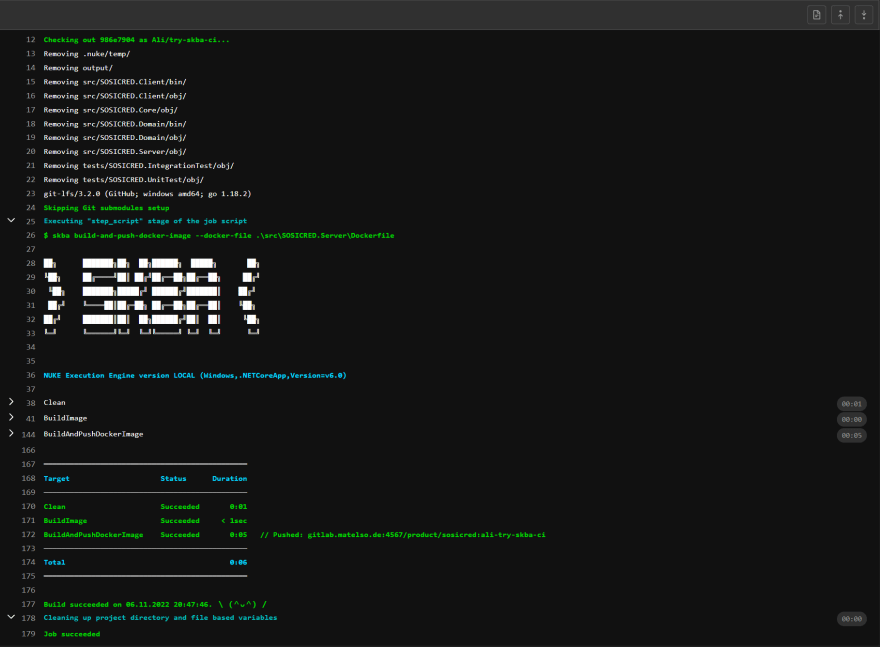

Here is how the logs from this job look on GitLab:

As you can see, it even integrates with GitLab's log theming: you can collapse the log of each stage, and at the end you get a summary of what went well and what went wrong.

Is that all?

Well, as I mentioned, my CI/CD script includes 5 stages, and for each of them I fire only a single SKBA command with a minimal number of arguments. As an example, here is the stage that packs and publishes all NuGet packages of my project:

    pack:
      stage: pack
      script:
        - skba pack-and-push-nuget-packages
          --nuget-package-name SOSICRED.Client
          --name-of-projects-to-pack SOSICRED.Client+SOSICRED.Domain
          --restore-nuget-sources [[hidden]]
          --push-nuget-source [[hidden]]
          --push-nuget-source-api-key [[hidden]]
          --push-nuget-no-prompt
      allow_failure: false
      rules:
        - if: ($CI_COMMIT_BRANCH == "development" || $CI_COMMIT_TAG =~ /^\d+\.\d+\.\d+$/)
          when: on_success
        - if: $CI_COMMIT_BRANCH == "master"
          when: never
        - if: $CI_COMMIT_BRANCH
          when: on_success
      tags:
        - skba-runner

Again, the cool thing is that I don't need to worry about the version of my packages, as it is determined automatically from the last published version, the git version and the branch that runs the pipeline.
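The exact rules SKBA applies are not spelled out here, so the following is only a sketch of one possible scheme, with every rule being my own assumption: a release tag such as `1.4.0` is used as-is, and any other build bumps the patch number of the last published version and appends the branch name as a pre-release suffix. The function name `next_version` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical versioning rule (an assumption, not SKBA's actual logic).
# Usage: next_version <last-published-version> <branch> [<git-tag>]
next_version() {
  local last_published="$1" branch="$2" tag="${3:-}"

  # A tag of the form MAJOR.MINOR.PATCH wins and is used unchanged.
  if printf '%s' "$tag" | grep -Eq '^[0-9]+\.[0-9]+\.[0-9]+$'; then
    echo "$tag"
    return 0
  fi

  # Split the last published version into its three numeric parts.
  local major minor patch
  IFS=. read -r major minor patch <<EOF
$last_published
EOF

  # Replace characters that are not allowed in a pre-release identifier.
  local suffix
  suffix="$(printf '%s' "$branch" | tr -c 'A-Za-z0-9' '-')"
  echo "${major}.${minor}.$((patch + 1))-${suffix}"
}
```

With this scheme, `next_version 1.2.3 development` yields `1.2.4-development`, while `next_version 1.2.3 development 2.0.0` yields `2.0.0`.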

Further work

I am planning to add many more features to this tool, such as sending notifications by email and chat message, as well as publishing change logs and release articles for the project. Integrating it into my Jira workflow is also one of my dreams, as it would replace some of the manual work I need to do in my daily job. So far I have the initial required set of features implemented in SKBA and I'm very happy with it.

One remark worth mentioning is that I will always keep this tool tailored to the tech stack of my choice, which is Git, GitLab, .NET and Kubernetes. I think it would be quite a lot of work to come up with something general that works with every DevOps tech stack. Every team may build one of these tools for themselves.

Collaboration

As I mentioned earlier, I am not planning to release an open-source version of SKBA. However, I am very interested in collaborating on similar tools if you decide to build one of your own. If you use a different tech stack and still want to integrate build automation into your continuous development routine, we can talk and I can help you build one. If you have a team that uses a tech stack similar to mine and you want to try SKBA, feel free to contact me.

Conclusion

In this article I demonstrated how I made my DevOps routine cleaner and more elegant by using build automation tools like NUKE. I mainly wrote about the build and packaging stages of a CI/CD pipeline, although there is more to it. If this sounds interesting to you, stay tuned for my future articles on the deployment stage using kubectl and Helm.

I hope you enjoyed following along with my journey and learned something new. Possible future parts of this article will cover more challenges and their solutions.

Mohammadali Forouzesh - A passionate software engineer

06/11/2022


Comments

It's always good practice to keep any build script outside the CI config. At the very least, it enables us to run it without the CI.

But from what I understood, all the other issues are just moved from the CI config to the SKBA tool. For example, the docker password has to be known to that script. How is it better protected than in the GitLab secret store?

In the end, if this tool does improve build maintenance, it's already a big step forward.

Thanks for your insightful comments.

The cool thing is that SKBA can still use your GitLab secret store for authenticating against your container registry, as well as any other secret store.

Here is how: the execution engine of NUKE allows you to inject CI-runner-specific information while running the build using this line of code:

You can also read secrets from Azure Key Vault or any other secret store. Or even store the values directly on your build runner server and protect that as a whole :)

Also don't forget to check out #matelso on LinkedIn: linkedin.com/company/matelso/