Managing API keys, database passwords, and other secret variables in CI has always been a tiny bit painful and often a massive security loophole in most organisations.

Let’s see how we can improve the situation with AWS Secrets Manager, this simple wrapper, and your favorite CI provider (in our case GitLab CI, but it should work in much the same way with most providers).

Let’s use AWS Secrets Manager to help you secure your CI stages.

The problem

By definition, secrets are meant to be… secret. But CI should not be a black box, accessible by a few chosen ones, only so that you can protect your precious secrets! CI is basically the last time you get to touch your app before it ships to your customers or users. All developers should be able to inspect the logs of previous builds and run builds on their own.

Let’s start by stating the obvious:

- If you can get away with doing what you are doing without any secrets, by simply making everything public, great. But chances are you can’t.

- Secrets are obviously meant to be secret, but at least one person will need to know them at some point, and people are weak.

- Security through obscurity is not security. Hiding secrets, for example inside CI stages, is not security.

In that case, what’s a good (as in “easy and I can do it without having a whole costly devops team”) way to deal with secrets? Let’s consider your probable situation.

- You can set up secrets in the CI provider’s configuration panel. This is great, as it lets you pass any variable to any project very easily. However, when you have hundreds of projects, and quite possibly some variables shared between projects, it can become difficult to manage. You also need to set up the environment properly before the first build, which means letting someone know the value of the variable, which means introducing a weak point.

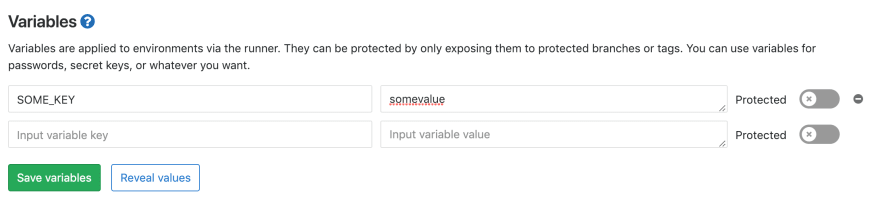

The “Variables” panel inside GitLab’s CI configuration for a given project.

- Secrets usually have a lot of power attached to them (otherwise they wouldn’t be secret). CI stages will often need to retrieve private packages, perform some private task, access a private database, or publish a new release of your app that could ultimately get in the hands of your users. If someone were to get access to a secret used in CI, they usually gain a LOT of power, and it’s not necessarily easy to detect right away.

- Perhaps you want to hide your secrets and not make them available to your developers. You can use the “protected” setting above and some security settings in the access rules, but this does not completely prevent a developer from logging the secret in a hidden part of their code that miraculously passes all the code reviews (you have code reviews, right?). And there you have your secret in plain text, available to anyone with read access to the CI logs, which should be most of your developers, if you ask me.

- How hard would it be to replace all your secrets, right now? Let’s say one of your engineers, tasked with setting up these environments, leaves the company. What damage can they do with the keys they know? What if a database password, used in a few different projects, needs to be replaced for some reason — in how many places is it used across all your repositories? Do you really want to spend half a day updating 50 repositories every time somebody leaves the company, or as a routine task every few weeks because your policy says you should rotate all secrets regularly?

So, what can we do?

The only way to make your secrets more secure (given that you cannot get away with not having secrets at all, AND that no matter what you do to prevent it, people will be able to access them one way or another at some point) is to make them more volatile. They should be revocable at any given time without causing much damage, and revoked as often as possible.

You should not care about who knows about what secret at any given time (because people will find out).

The only way you can practically consider revoking your secrets often (and not spend a considerable amount of time manually replacing them everywhere if you have hundreds of them) is to have them managed centrally.

You should not care about having to replace the secret every once in a while (because it’s a painless operation).

The only way you can guarantee that secrets will be revoked often is by automating their rotation. Humans are lazy, forget things, go on vacation, get busy, sleep…

You should not care about this at all because you have better things to do.

Secrets are meant to be volatile.

I think the problem lies in the word secret. People naturally think that secrets are effectively secret AND rely on them to stay secret. The truth is, secrets do get exposed. It is an unavoidable part of their lifecycle: the longer a secret stays in place, the higher the risk that it gets exposed, and then what?

If your entire business relies on the sole concept of secrets staying secret, I feel sorry for you.

On the other hand, if you actively prepare for your secrets to be exposed at some point, and make it a natural event for your application to casually change secrets every once in a while without you even having to think about it, then you gain something much, much larger: peace of mind. It is very comparable to the concept of continuous deployment: deploying to production often makes it an absolute non-event.

Assume you are terrible at security. Consider any secret compromised if it does not get recycled every few hours/days/weeks. Make the rotation of your passwords a non-event. It’s as natural an operation as it gets.

Secrets are a volatile piece of data, nothing more.

Enter AWS Secrets Manager

AWS Secrets Manager is a relatively new service by AWS which is similar to some sort of API-fied, cloud-enabled, 1Password on steroids.

Basically, your main password is, as usual with AWS, your AWS credentials (instance role, IAM user, etc.), which give you access to fine-grained access settings (who can read or update the secrets stored in the service). I am not going to go into how to set up an AWS account or the basic IAM concepts here; there are countless great resources about that online! Let’s assume you know how to access the AWS console and have heard about IAM before.

The main features we are interested in here are:

- Centralize the management of as many secrets as you want without making them directly available to any particular entity. Instead, they are meant to be consumed on demand, using regular AWS credentials, with access limited via standard IAM rules. This is a great example of separation of responsibilities by design: use IAM for ACLs, Secrets Manager for safe secrets management, and combine both on demand. A secret should not care who has access to it, as it should simply be considered a piece of data, and by defining group- or role-based ACLs, you can grant or revoke access to any secret value at any time.

- Automate secrets rotation as often as you like with a Lambda function. Set it once and forget it. What this buys you is that after you revoke someone’s (or something’s) access to Secrets Manager, they might still be able to use the current secrets for a little while, but as soon as the rotation script runs… who cares what secret they know?

By making access to secrets volatile and identity-based, this service solves a bunch of security problems for devops engineers, who no longer need to reinvent the wheel for secrets management (or put their whole company’s security at risk by not addressing it properly).

Using AWS Secrets Manager in CI/CD

Since the setup of AWS Secrets Manager takes about 5 minutes, the main complexity is making it easy to integrate into your CI project. To help you with that, we released https://hub.docker.com/r/clevy/awssecrets, a tool that helps you retrieve secrets to use in your projects’ CI steps. If you prefer to build from source for something this sensitive (you should), you can find the source here: https://github.com/Clevyio/docker-awssecrets

The following is our current production setup:

- Private GitLab runners on EC2 instances with a GitlabCiInstance IAM role attached. These instances run in a VPC, which means that access to and from the outside is governed by security rules we control.

- An IAM policy limiting the GitlabCiInstance role to read access on Secrets Manager, which looks something like this (you can of course limit which secrets it has access to, but you get the idea):

      {
        "Version": "2012-10-17",
        "Statement": [
          {
            "Sid": "GitlabCiPolicy",
            "Effect": "Allow",
            "Action": [
              "secretsmanager:GetSecretValue"
            ],
            "Resource": "*"
          }
        ]
      }

- Some secrets stored in AWS Secrets Manager with auto-rotation enabled, some projects in our GitLab repositories with CI enabled, etc. :-)
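
The policy above allows reading every secret in the account. As a sketch of the "limit which secrets it has access to" part, the snippet below builds the same policy scoped to a single secret. The account id 123456789012 and the helper name scoped_read_policy are placeholders I made up; the secret name and region are the ones used in the examples in this post. Secrets Manager appends a hyphen and six random characters to the secret name in its ARN, hence the `-??????` wildcard.

```python
import json


def scoped_read_policy(region, account_id, secret_name):
    """Build a read-only policy limited to one secret (hypothetical helper)."""
    # The ARN of a secret ends with "-" plus six random characters,
    # so "?" wildcards are used to match that suffix.
    resource = (
        f"arn:aws:secretsmanager:{region}:{account_id}:secret:{secret_name}-??????"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "GitlabCiPolicy",
                "Effect": "Allow",
                "Action": ["secretsmanager:GetSecretValue"],
                "Resource": resource,
            }
        ],
    }


# Placeholder account id, to be replaced with your own.
policy = scoped_read_policy("eu-west-1", "123456789012", "my-secrets")
print(json.dumps(policy, indent=2))
```

Generating the document from code is only worth it once you have several roles and secrets to keep in sync; for a single runner role, editing the JSON by hand works just as well.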

A typical .gitlab-ci.yml file defining our CI process usually starts with a first step that looks like this:

    secrets:
      stage: secrets
      image: clevy/awssecrets
      script:
        - awssecrets --region eu-west-1 --secret my-secrets > .ci-secrets
      artifacts:
        # you want to set this to a value high enough to be used in all your stages,
        # but low enough that it gets invalidated quickly and needs to be regenerated later
        expire_in: 30 min
        paths:
          # this will be available by default in all the next stages
          - .ci-secrets

A few things to note here. There is no need to pass AWS credentials to awssecrets: remember, the instance has a role attached to it that automatically gives access to the service! All calls are therefore authenticated “automatically”, and there are no long-lived AWS credentials to leak that could give access to your secrets: the job has to run on an authorized EC2 instance with the instance role, which you do not want to grant to just anybody.

This will produce an artifact, which is a fancy word for any file or folder you decide to pass to later stages. In our case, we have simply created a .ci-secrets file. This file looks like this:

    SOME_SECRET=poepoe
    OTHER_SECRET=tutu
    VERY_SECRET=lalala
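
If you are curious how a file like this can be produced, here is a rough Python sketch using boto3. This is not the source of awssecrets, just an illustration under two assumptions: the secret is stored as a JSON object of key/value pairs, and the code runs on an instance whose role allows secretsmanager:GetSecretValue. The helper names are made up for the example.

```python
import json


def fetch_secret_pairs(client, secret_id):
    """Read one secret and decode its JSON key/value payload."""
    response = client.get_secret_value(SecretId=secret_id)
    return json.loads(response["SecretString"])


def to_env_lines(pairs):
    """Render the pairs as KEY=VALUE text, one pair per line."""
    return "\n".join(f"{key}={value}" for key, value in pairs.items())


def main():
    # boto3 is imported here so the helpers above can be used without it.
    import boto3

    # No keys are passed: boto3 picks up the EC2 instance role by itself.
    client = boto3.client("secretsmanager", region_name="eu-west-1")
    print(to_env_lines(fetch_secret_pairs(client, "my-secrets")))
```

Calling main() on the runner and redirecting its output to .ci-secrets would give a file in the format shown above. Passing the client in as an argument keeps the two helpers easy to test with a fake client.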

Because we set an expire\_in value, GitLab will automatically remove the artifact after 30 minutes. If your CI process takes longer to run, you can of course adjust this duration. This feature lets you somewhat limit the risk of leaking secrets in the long run, but it is not technically a security measure!

Then, in the following steps, you can simply use the .ci-secrets file that was just created:

    release:
      stage: deploy
      image: node
      before_script:
        # export the contents of the secrets file in your environment
        - export $(cat .ci-secrets | xargs)
        - echo "//registry.npmjs.org/:_authToken=${NPM_TOKEN}" >> ~/.npmrc
      script:
        - npm install -q
        - npm publish

This step publishes a package to an npm registry, so the secret contains an NPM_TOKEN with write access to a package: quite sensitive!
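
One caveat with the export line: it relies on the shell splitting the file on whitespace, so a value containing a space would break it. If a later stage runs a Python script, it can read the file directly instead. The sketch below is one way to do that; parse_env_lines and load_env_file are hypothetical helpers, and the format is assumed to be plain KEY=VALUE lines as shown earlier.

```python
import os


def parse_env_lines(text):
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    pairs = {}
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first "=" only, so values may contain "=" or spaces.
        key, separator, value = line.partition("=")
        if not separator:
            # Report the line number only, never the content: it may be a secret.
            raise ValueError(f"line {number} is not in KEY=VALUE form")
        pairs[key.strip()] = value
    return pairs


def load_env_file(path=".ci-secrets"):
    """Load the file into os.environ and return the variable names only."""
    with open(path, encoding="utf-8") as handle:
        pairs = parse_env_lines(handle.read())
    os.environ.update(pairs)
    return sorted(pairs)
```

Note that this only changes the environment of the Python process and of the child processes it starts, and that the function returns names rather than values so that logging its result stays harmless.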

Worst case scenario

Let’s consider a few plausible worst case scenarios directly concerning your secrets. (I am not going to consider a scenario like “somebody got access to my gitlab runner instance and can do whatever they want because of the instance role”: this is a completely different security issue, not linked with exposing secrets, and should normally be taken care of at least with VPC and security group access rules.)

- Somebody from your organisation that you absolutely trust gets full access to the CI configuration and somehow manages to output all your precious secret variables in clear text in the CI logs, making them visible to anyone else with read access. Shit happens! Now one of your employees has seen those, gets terminated the following day, returns home and wants to hurt you badly. Since we have set up AWS Secrets Manager to auto-rotate our keys every day, by the time they get home the token will already have been rotated. They try to use the NPM_TOKEN above, but get a 403 Forbidden error, and move on with their lives.

- Somebody outside of your organisation somehow got access to some very secret variable and you urgently need to replace it everywhere: simply run the rotation function once and go back to bed, you deserve it!
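
For the second scenario, "run the rotation function once" can be a single API call. Here is a small sketch with boto3, assuming rotation is already configured on the secret and that whoever runs it is allowed to call secretsmanager:RotateSecret. The helper name rotate_now is made up; the secret name and region are the ones used in the examples above.

```python
def rotate_now(client, secret_id):
    """Ask Secrets Manager to start rotating the secret right away."""
    response = client.rotate_secret(SecretId=secret_id)
    # The response carries the id of the new version being created.
    return response.get("VersionId")


def main():
    # boto3 is imported here so rotate_now can be tested without it.
    import boto3

    client = boto3.client("secretsmanager", region_name="eu-west-1")
    print("rotation started, new version:", rotate_now(client, "my-secrets"))
```

Calling main() from a machine with the right permissions starts the rotation; the rotation Lambda then does the actual work, so the call returns before the new value is in place.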

Conclusion

My take on this is that we should stop worrying about secrets at once and just make them volatile. Of course you shouldn’t put secrets in clear text in public repositories. But maybe you were drunk one night, tried to do something stupid at 3AM, forgot to update the .gitignore and published them in clear text. You are only human!

What do you think?
