This article covers:

- Why serverless

- Why not serverless

- The problem: long-duration file upload

- The solution: background functions

- Tips for working with background functions

Welcome to the year of "more with less"

Do more. With less.

This phrase gets bandied about by enterprise managers whenever the economy is in the dumps.

In the startup world, resources are even scarcer. As Moropo's CTO, I need to deliver as much value to our users as possible with whatever is available.

Enter: serverless functions.

One of the most significant innovations we use in our architecture to achieve this goal is serverless functions. These "functions as a service" let you hand off discrete computations, such as "fetch user" or "post reply", to infrastructure managed by a cloud provider (such as Netlify or AWS).
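Here is a minimal sketch of a "fetch user" function written in the style of a Netlify function handler. The `HandlerEvent` and `HandlerResponse` types are simplified local stand-ins (not the official `@netlify/functions` types), and `findUserById` is a hypothetical in-memory lookup in place of a real database query.

```typescript
// Simplified stand-ins for a Netlify-style handler's event and response
// (assumption: not the official @netlify/functions types).
interface HandlerEvent {
  httpMethod: string;
  queryStringParameters: Record<string, string | undefined> | null;
}

interface HandlerResponse {
  statusCode: number;
  body: string;
}

interface User {
  id: string;
  name: string;
}

// Hypothetical data source; a real function would query a database.
const users: User[] = [{ id: '1', name: 'Ada' }];

const findUserById = async (id: string): Promise<User | undefined> =>
  users.find((user) => user.id === id);

// One discrete computation ("fetch user"): the provider runs it on demand,
// and we never manage the machine it runs on.
export const handler = async (event: HandlerEvent): Promise<HandlerResponse> => {
  if (event.httpMethod !== 'GET') {
    return { statusCode: 405, body: 'Method Not Allowed' };
  }
  const id = event.queryStringParameters?.id;
  if (!id) {
    return { statusCode: 400, body: 'Missing "id" query parameter' };
  }
  const user = await findUserById(id);
  return user
    ? { statusCode: 200, body: JSON.stringify(user) }
    : { statusCode: 404, body: 'User not found' };
};
```

Each request is an independent invocation, which is what lets the provider scale the function up and down (and bill for it) per call.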

Newer entrants to the software industry might feel like serverless has been around forever. But actually, adoption isn't as widespread as you might guess. This is especially true for large enterprises.

When I started my career in 2013, I worked for a large tech corporation. We maintained hundreds of servers across dozens of sites worldwide. Every new provision or major upgrade would mean hours trapped in a loud, cold room constantly blasted by A/C. A year later, AWS released Lambda, and the world began to change...

Working with serverless functions for the first time was a revelation!

- No physical box.

- You pay for only what you use.

- Immediate deployment.

- Infinitely many separate environments.

- Near-instant and limitless scaling.

- Environmentally friendly.

And that's only scratching the surface of these functions' power. When you combine these properties, they can compound into some ingenious use cases which would never have been possible without an expensive box in a basement somewhere.

What's the catch?

From the previous paragraph, it may sound like serverless functions are about to solve world hunger and bring a new era of peace.

Sadly not.

There are significant limitations, including:

- Only specific programming languages can be used.

- You don't have control over the execution environment.

- Functions can take up to a few seconds to start if they are "cold", leading to poor performance for users.

- They can be more expensive than servers on a large scale.

- Execution time is capped, e.g. at 10 seconds.
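The claim that serverless can cost more than a server at scale comes down to simple arithmetic: pay-per-use cost grows linearly with traffic, while a server's bill stays flat. Below is a minimal sketch that assumes a simplified pricing model (a per-request fee plus a per-GB-second compute fee). Every price is a hypothetical input you would take from your provider, not a real figure.

```typescript
// Hypothetical pricing model; plug in your provider's real numbers.
interface ServerlessPricing {
  pricePerMillionRequests: number; // currency units per 1M invocations
  pricePerGbSecond: number; // currency units per GB-second of compute
}

// Monthly cost of a pay-per-use function: a per-invocation fee plus the
// memory x duration actually consumed.
export const monthlyServerlessCost = (
  invocations: number,
  avgDurationSeconds: number,
  memoryGb: number,
  pricing: ServerlessPricing,
): number =>
  (invocations / 1_000_000) * pricing.pricePerMillionRequests +
  invocations * avgDurationSeconds * memoryGb * pricing.pricePerGbSecond;

// Invocations per month at which pay-per-use matches a flat server bill.
// Above this volume, the always-on server is the cheaper option.
export const breakEvenInvocations = (
  serverMonthlyCost: number,
  avgDurationSeconds: number,
  memoryGb: number,
  pricing: ServerlessPricing,
): number => {
  const costPerInvocation =
    pricing.pricePerMillionRequests / 1_000_000 +
    avgDurationSeconds * memoryGb * pricing.pricePerGbSecond;
  return serverMonthlyCost / costPerInvocation;
};
```

Below the break-even volume, paying per use wins. Above it, the flat server bill is cheaper, although this ignores the time it costs to maintain that server.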

Each limitation could be the subject of its own article.

Today, we're focused on just one: execution time.

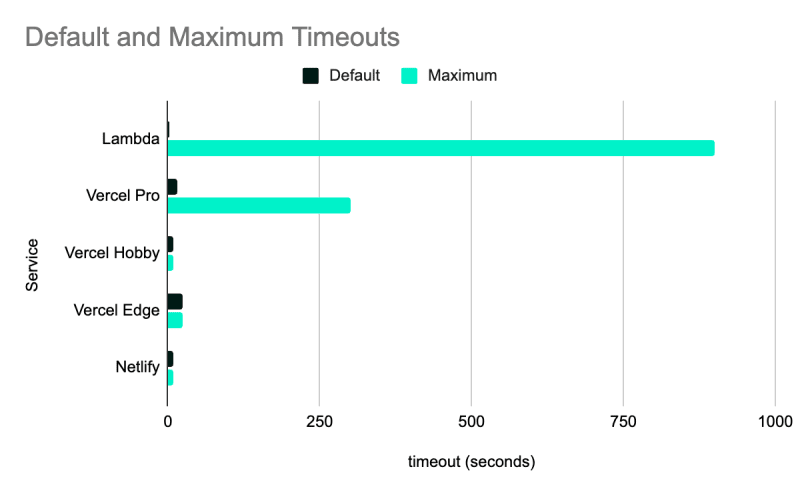

Functions-as-a-service timeout values

10 seconds is a long time - until it isn't...

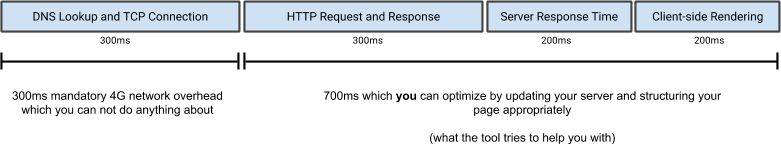

The most common use case for writing serverless functions is a Single Page Application (SPA) website. According to Google's guidelines, running CRUD operations in your SPA should take less than 200ms to keep users engaged. If your execution time is longer, then you're likely to be penalised with poor user experience scores and low SEO ratings - boo!

A typical server request-response round-trip

At Moropo, Netlify runs serverless functions for our SPA dashboard.

Netlify gives us 10 seconds of execution time for each function we invoke.

That's 50x more than we need if we're hitting Google's 200ms target - which we are!

Not all functionality in a SPA is "fast CRUD ops".

Sometimes, we need to do slow things.

Here are some examples of when that 10-second execution limit causes issues.

| Use case | Example duration |
|---|---|
| Processing media | 10 min |
| Uploading files | 2 min |
| Polling tasks | 45 s |
| Waiting for external services | 5 min |
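One rough way to read the table is to match each task's expected duration against the time limit of each option. The sketch below assumes the 10-second limit of a standard Netlify function and the 15-minute limit of a Netlify Background Function. The `chooseExecutionMode` helper and its labels are hypothetical, and real durations will vary with input size.

```typescript
// Limits assumed here: 10 s for a standard Netlify function,
// 15 minutes for a Netlify Background Function.
const SYNC_LIMIT_SECONDS = 10;
const BACKGROUND_LIMIT_SECONDS = 15 * 60;

type ExecutionMode = 'standard function' | 'background function' | 'server';

// Pick the simplest option whose time limit covers the expected duration.
export const chooseExecutionMode = (expectedSeconds: number): ExecutionMode => {
  if (expectedSeconds <= SYNC_LIMIT_SECONDS) return 'standard function';
  if (expectedSeconds <= BACKGROUND_LIMIT_SECONDS) return 'background function';
  return 'server';
};

// The example durations from the table, in seconds.
const useCases: Array<[string, number]> = [
  ['Processing media', 10 * 60],
  ['Uploading files', 2 * 60],
  ['Polling tasks', 45],
  ['Waiting for external services', 5 * 60],
];

for (const [name, seconds] of useCases) {
  console.log(`${name} (${seconds}s): ${chooseExecutionMode(seconds)}`);
}
```

Every row in the table overshoots the 10-second limit, yet all of them fit within 15 minutes.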

Read on to see how we dealt with extended running operations without opting for a server.

Problem: file upload without a server

In Moropo, users can import an iOS or Android binary build directly from Expo Application Services (EAS), a service to help build React Native apps.

We do this by allowing users to add their access token to our Expo integration panel and then fetch the relevant data directly from the Expo API.

Moropo UI showing the Expo integration

To get these files from EAS to our storage buckets (hosted in Supabase), we need a process that moves the data from one to the other.

"Great!" we said. "Let's just create a Netlify function and stream the file".

So we implemented the naive approach... and it didn't work!

The Netlify functions would execute the code successfully, but for larger files they would time out at 10 seconds before the file had fully transferred.
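The reason only larger files failed is arithmetic: with a fixed 10-second limit, the largest file that can finish is roughly throughput × 10 s. This sketch ignores cold starts and connection overhead, and the throughput you pass in is an assumption, since real EAS-to-Supabase transfer speeds will vary.

```typescript
// Standard Netlify function limit described in the article.
const FUNCTION_TIMEOUT_SECONDS = 10;

// Seconds needed to move a file of `sizeMb` at `throughputMbPerSecond`.
export const transferSeconds = (sizeMb: number, throughputMbPerSecond: number): number =>
  sizeMb / throughputMbPerSecond;

// Largest file (in MB) that can finish before the function is killed,
// ignoring start-up and connection overhead.
export const maxFileSizeMb = (
  throughputMbPerSecond: number,
  timeoutSeconds: number = FUNCTION_TIMEOUT_SECONDS,
): number => throughputMbPerSecond * timeoutSeconds;

// Whether a transfer completes within the limit.
export const fitsInTimeout = (
  sizeMb: number,
  throughputMbPerSecond: number,
  timeoutSeconds: number = FUNCTION_TIMEOUT_SECONDS,
): boolean => transferSeconds(sizeMb, throughputMbPerSecond) <= timeoutSeconds;
```

For example, at an assumed 5 MB/s, `maxFileSizeMb(5)` is 50. A 200 MB build would therefore need around 40 seconds, four times the limit.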

Potential solutions - should we run a server?

Let's take a look at some of the options available to get around this problem.

1: Create an EC2 server instance

Pros: runs constantly, no timeout.

Cons: requires maintenance, doesn't scale, needs a web server to be set up securely.

2: Use an auto-scaling Docker container service (e.g. Elastic Beanstalk, Azure Container Apps, etc.)

Pros: much simpler infrastructure management, auto-scaling, lower cost for sparse use.

Cons: difficult to scale to 0 for infrequent workloads, provider-specific learning curve, managing Docker images.

3: Long-running serverless functions (e.g. Lambda, Vercel Pro, Netlify Background)

Pros: all the benefits of regular serverless functions, plus a much longer execution time.

Cons: expensive compared to a server; Netlify Background Functions return no response to the caller.

Converting to a background function

We already use Netlify functions, so option 3 made the most sense for our use case.

The solution was so simple that it's almost hard to believe:

add `-background` to the end of the function name 🙀

This small change tells Netlify to use a Background Function instead of a normal function and extends the timeout period to a generous 15 minutes. Even though this may seem like a silver bullet, there are some things to be aware of.
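Here is a minimal sketch of what such a function could look like. Per Netlify's documentation, the marker is a `-background` suffix on the function's name. The file name `import-build-background.ts`, the request body shape, and the `startedImports` list (a stand-in for the real slow work) are all hypothetical. The event type is a simplified local stand-in rather than the official `@netlify/functions` type.

```typescript
// netlify/functions/import-build-background.ts  (hypothetical file name)
// Only the "-background" suffix distinguishes it from a standard function.

// Simplified stand-in for the incoming event (assumption).
interface HandlerEvent {
  body: string | null;
}

// Hypothetical side effect standing in for the slow work
// (e.g. streaming a build into storage).
export const startedImports: string[] = [];

export const handler = async (event: HandlerEvent): Promise<void> => {
  const { sourceUrl } = JSON.parse(event.body ?? '{}') as { sourceUrl?: string };
  if (!sourceUrl) return; // nothing to do; the caller already got its 202
  startedImports.push(sourceUrl);
  // ...long-running work here, up to the 15-minute limit...
  // No meaningful return value: Netlify has already replied with a 202,
  // so anything returned here never reaches the caller.
};
```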

The most significant is that it does not work as a synchronous call. The invoker can't wait for the process to return. Instead, it receives an immediate 202 response, while the background function continues to execute in the - yep, you've guessed it - background.
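From the caller's side, the only thing to check is that the job was accepted. The sketch below assumes a JSON POST to a hypothetical `/.netlify/functions/import-build-background` endpoint. `FetchLike` is a hypothetical narrow type that lets a fake stand in for `fetch`, so the example runs without a network.

```typescript
// Narrow fetch-like signature; the global `fetch` satisfies it.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ status: number }>;

// Fire off a background function. A `true` result only means "accepted":
// the 202 arrives before the work has finished, possibly before it starts.
export const triggerBackgroundJob = async (
  fetchFn: FetchLike,
  functionUrl: string,
  payload: unknown,
): Promise<boolean> => {
  const response = await fetchFn(functionUrl, {
    method: 'POST',
    headers: { 'content-type': 'application/json' },
    body: JSON.stringify(payload),
  });
  // 202 Accepted is the only signal available; the job's result has to be
  // looked up elsewhere (e.g. a database record).
  return response.status === 202;
};
```

In a browser or Node 18+, you would pass the global `fetch` as `fetchFn`, e.g. `triggerBackgroundJob(fetch, '/.netlify/functions/import-build-background', { jobId })`.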

But what if you wanted to upload a large file from your client-side application? In this case, a background function would not work as the browser would close the connection as soon as it received the 202 response.

A background function works well for server-to-server streaming because it can be triggered and then whirr away in the background entirely asynchronously. We don't need the response, because we track the success or failure of the job in the database.
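Because no one waits on the response, the job has to report its own outcome. Below is a minimal sketch of that pattern, assuming a simple four-state lifecycle. The in-memory `jobs` map is a hypothetical stand-in for a database table the client can query, and `createJob`, `runTrackedJob` and `getJobStatus` are illustrative names, not Moropo's code.

```typescript
type JobStatus = 'pending' | 'running' | 'succeeded' | 'failed';

interface JobRecord {
  status: JobStatus;
  error?: string;
}

// In-memory stand-in for a "jobs" table (hypothetical).
export const jobs = new Map<string, JobRecord>();

// Called by the client before triggering the background function.
export const createJob = (jobId: string): void => {
  jobs.set(jobId, { status: 'pending' });
};

// Wraps the slow work inside the background function so every outcome is
// recorded, since nobody is waiting on its response.
export const runTrackedJob = async (
  jobId: string,
  work: () => Promise<void>,
): Promise<void> => {
  jobs.set(jobId, { status: 'running' });
  try {
    await work();
    jobs.set(jobId, { status: 'succeeded' });
  } catch (err) {
    jobs.set(jobId, { status: 'failed', error: String(err) });
  }
};

// What the client polls instead of awaiting a response.
export const getJobStatus = (jobId: string): JobStatus | undefined =>
  jobs.get(jobId)?.status;
```

In practice, the background function would wrap its upload in `runTrackedJob`, while the dashboard polls `getJobStatus` until the status leaves `pending` or `running`.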

Here is the critical code from the function for streaming between a given URL and a location within Supabase storage:

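```typescript
import { SUPABASE_URL } from 'config/publicEnvironmentVariables';
import got from 'got';
import { Writable } from 'stream';
import { pipeline } from 'stream/promises';
import { captureException } from 'utils/errorLogging';

interface IStreamToBucket {
  sourceUrl: string; // URL of the file to download (e.g. an EAS build)
  bucket: string; // Supabase Storage bucket name
  targetPath: string; // object path inside the bucket
  token: string; // bearer token used to authorise the upload
}

export const streamToBucket = async ({
  sourceUrl,
  bucket,
  targetPath,
  token,
}: IStreamToBucket): Promise<void> => {
  try {
    // pipeline() connects the streams, handles backpressure and rejects if
    // any stage fails (download error, upload error or HTTP error status).
    await pipeline(
      // Download the source file as a stream...
      got.stream(sourceUrl),
      // ...and pipe it straight into the Supabase Storage upload endpoint,
      // so the file is never held in memory in full.
      got.stream.post(`${SUPABASE_URL}/storage/v1/object/${bucket}/${targetPath}`, {
        headers: {
          'content-type': 'application/octet-stream',
          Authorization: `Bearer ${token}`,
        },
      }),
      // Drain the upload's response body. The pipeline only resolves once
      // Supabase has answered, i.e. after the upload has actually finished.
      new Writable({
        write(_chunk, _encoding, callback) {
          callback();
        },
      }),
    );
  } catch (err) {
    captureException(String(err), 'streamToBucket');
    // Rethrow so the caller can record the job as failed.
    throw err;
  }
};
```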

If you want to see this code in action, simply sign up and run a mobile app test at Moropo.

Upcoming articles

This is the first in a series of articles in which I discuss increasingly complex ways of developing serverless applications, to show how you, too, can avoid the complexities of running servers.

The next article will go into more detail on auto-scaling docker containers in the cloud.
