What is a genetic algorithm?

A genetic algorithm is a method to solve a problem inspired in the natural selection process popularised by Charles Darwin:

As many more individuals of each species are born than can possibly survive; and as, consequently, there is a frequently recurring struggle for existence, it follows that any being, if it vary however slightly in any manner profitable to itself, under the complex and sometimes varying conditions of life, will have a better chance of surviving, and thus be naturally selected. From the strong principle of inheritance, any selected variety will tend to propagate its new and modified form

There are many variants of these algorithms (they are actually an algorithm family) but all follow roughly the same steps:

Generate a "population" of individuals (or chromosomes in genetic algorithm parlance)

Evaluate these individuals by their "fitness"

Keep N "fittest" subjects

Make these subjects breed and build the "next generation"

Go back to step 2 and repeat this process

What type of problems can you solve?

You can solve any problem that involves optimizing some function, either maximizing or minimizing it.

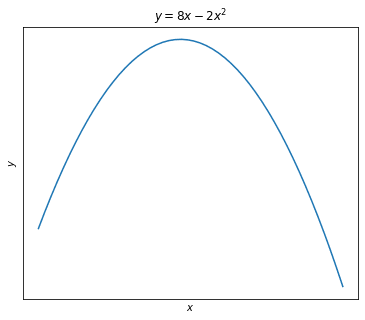

For example, given:

y = 8x - 2x^2

def f(x): return 8 * x - 2 * x**2

We'd like to find the value of x that maximizes y, and we are going to do it with a tiny genetic library called evol

First Generation

For this process to begin we need to build a "first generation" from scratch. We'll do this picking random numbers for the chromosomes or x values.

We assume the value of x that yields the optimal value of y is unknown (although we can see in the plot pretty much where it is) but we have a prior belief that it ranges from -1,000,000 to 1,000,000.

Our first generation will pick values on that range:

np.random.seed(1234) # make results reproducible.

def random_guy(): return np.random.randint(low=-1_000_000, high=1_000_000)

initial_generation_size = 100

first_generation = [random_guy() for _ in range(initial_generation_size)]

Now we can build or Population object. We also provide the function that requires optimizing and we specify this is a maximization problem.

pop = Population(chromosomes=first_generation, eval_function=f, maximize=True)

Survival of the fittest

We'll keep 10% of the population members using the survive function:

survivors = pop.survive(fraction=0.1)

Breeding and mutations

These survivors will create a new generation, by breeding and mutations

For this step we need to define:

A function for picking two parents from the survivors (we'll pick any two)

Another function to specify how these two individuals (parents) generate an offspring (breeding)

A last one to add some random mutations to the offspring (this is usually done so you don't get stuck in local maxima)

def pick_parents(population):

mom, dad = np.random.choice(population, size=2)

return mom, dad

# the child is the mean between two parents.

def make_child(mom, dad):

return (mom + dad) / 2

# add gaussian noise with mean zero.

def random_noise(individual, sigma):

noise = np.random.normal(loc=0, scale=10) * sigma

return individual + noise

new_generation = survivors.breed(parent_picker=pick_parents, combiner=make_child).mutate(random_noise, sigma=1)

It's evolution, baby

We now have a new_generation with (hopefully) fitter individuals.We'll repeat this process a few more times, keeping the fittest individual of each generation.

def fittest(generation):

fit = sorted(generation.evaluate(), key=lambda x: x.fitness)[-1]

return fit.chromosome

generations = 120

fittest_of_each_gen = []

gen = new_generation

for _ in range(generations):

survivors = gen.survive(fraction=0.1)

new_gen = survivors.breed(parent_picker=pick_parents, combiner=make_child).mutate(random_noise, sigma=1)

fittest_of_each_gen.append(fittest(new_gen))

gen = new_gen

Results

Let's plot the original function again, this time with the fittest individuals of each generation on top.

Note individuals go chronologically from blue (cool) to red (warm). It's also useful to include the error of each approximation.

About generation #100 the output of our algorithm starts to converge with the maximum y and error goes down to zero.

Closing remarks

Does this technique make sense? after all we know we can find the function maximum analytically with it's derivative:

f(x) = 8x - 2x^2

f'(x) = 8 - 4x

f'(x) = 8 - 4 * 2 = 0

Though the process is indeed an overkill for cases like this, the algorithm generalizes to all kinds of problems and functions, not only continuous and differentiable.

For more information on genetic algorithms and the evol library make sure to check it's GitHub repository.

Hope you enjoyed this! If you did let me know on Twitter

Top comments (0)