Modern applications change frequently as you deliver features and fixes to customers. As your data access patterns change with them, the structure and content of your data must change too, even when you use a schemaless database like Fauna.

In this series, you learn how to implement migrations in Fauna, the data API for modern applications. This post introduces a high-level strategy for planning and running migrations. In the second post, you learn how to implement the migration patterns using user-defined functions (UDFs) written in the Fauna Query Language (FQL). The third post presents special considerations for migrating your data when you access Fauna via the Fauna GraphQL API. In the final post, you learn strategies and patterns for migrating your data and explore the impact migrations have on your indexes.

Planning for migration

Planning is the most important element of a successful database migration. Preparing to evolve your data before you need to perform a migration reduces the risk of that migration and decreases the implementation time. Consider the following four key areas to prepare your database:

- Encapsulate your data by limiting all access to your data to UDFs.

- Migrate in steps to minimize the risk and allow for continuous uptime.

- Choose a data update strategy based on your application's specific needs.

- Assess the impact on your indexes to minimize performance impact.

Encapsulating your data

Always accessing your data via UDFs is generally a best practice with Fauna. Only allow clients to call specific UDFs, and prevent client-side calls to primitive operations like Create, Match, Update, and Delete. This separates your business logic from the presentation layer, which provides several benefits. UDFs can be unit-tested independently of the client, ensuring correctness. Because UDFs encapsulate your business rules, you can write them once and call them from different clients, platforms, and programming languages.

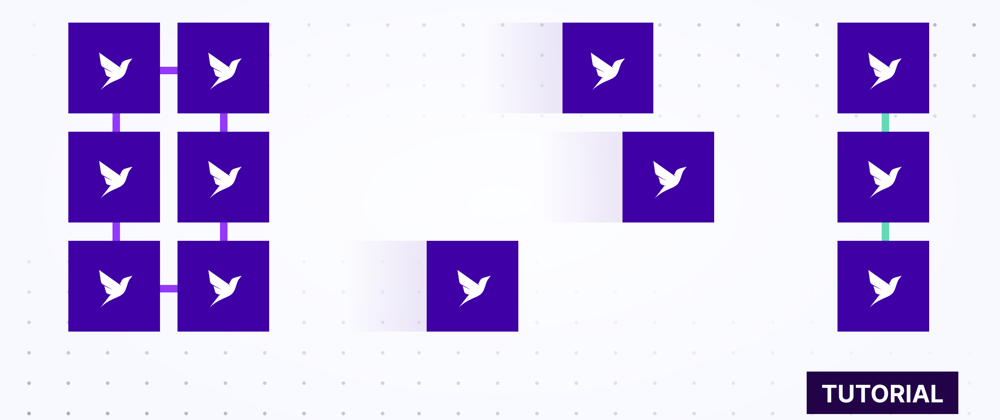

The preceding diagram represents a client-side call to the FQL Create() primitive to create a document in a collection called notifications.

Suppose you receive a new requirement to add a field to each document that indicates whether a notification is urgent. You can modify the client code and publish a new version, but what about users who do not upgrade? What if the field is required to work with a downstream dependency? How do you handle existing documents and outdated clients? This single change can cascade and create additional complexity, which can lead to errors. That complexity multiplies when you combine multiple changes.

Instead, create a UDF, create_notification, and call that UDF directly from your client code with an FQL Call() statement.

With this change, newer versions of the UDF can accept calls from any version of the client. If the UDF expects a field and it is not provided, the UDF can set a reasonable default or calculated value and continue to completion. The second post in this series constructs a more complete example and shows how to test for existence of fields in UDF calls.
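As a rough illustration of this default-filling behavior, the following Python sketch models the idea with a plain dictionary standing in for a Fauna document. It is not FQL, and the field name urgent and its default of False are assumptions made for this example.

```python
def create_notification(data: dict) -> dict:
    """Model of a create_notification UDF that tolerates older clients."""
    # Copy the input so the caller's dictionary is not modified.
    document = dict(data)
    # Older clients do not send "urgent" (a hypothetical field name),
    # so fall back to an assumed default instead of failing.
    document.setdefault("urgent", False)
    return document


# An outdated client omits the new field; an updated client sends it.
old_client_doc = create_notification({"message": "Build finished"})
new_client_doc = create_notification({"message": "Disk full", "urgent": True})
print(old_client_doc)
print(new_client_doc)
```

Both calls succeed, so you can roll out the new field without forcing every client to upgrade at the same time.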

UDF-first development provides a number of other benefits, including support for attribute-based access control (ABAC), feature flags, versioning, and more. See this guide for additional information on UDFs.

Migrating in steps

Migrating in steps minimizes the risk of migrations and can allow for continuous uptime. Where possible, each step should change only one aspect of your database structure or data. A general pattern for updating the data type of a field has three steps: populating the values of the new field from existing data, confirming zero defects, and optionally removing any deprecated fields.

Populating new field values

The first step in a migration is creating a UDF that populates the value of your new field from existing data according to your business logic. The new value can be copied or calculated from existing fields in the document, or set to another reasonable default.

Consider a field ipRange that stores a range of IP addresses in CIDR notation as an FQL string. You can create a new field ipRanges that stores the existing value as an FQL array without modifying the existing ipRange field.

You should call this UDF any time you access documents that have not yet been migrated, regardless of which data update strategy you choose.
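The logic of such a populate step might look like the following Python sketch, which uses a dictionary in place of a Fauna document. It is an illustration of the pattern rather than FQL, and the function name populate_ip_ranges is hypothetical.

```python
def populate_ip_ranges(document: dict) -> dict:
    """Return a copy of the document with ipRanges derived from ipRange."""
    migrated = dict(document)
    # Only act on documents that have the old field but not the new one,
    # so calling this function twice is harmless.
    if "ipRanges" not in migrated and "ipRange" in migrated:
        # Wrap the existing CIDR string in a list; ipRange stays untouched.
        migrated["ipRanges"] = [migrated["ipRange"]]
    return migrated


before = {"name": "office", "ipRange": "10.0.0.0/8"}
after = populate_ip_ranges(before)
print(after)
```

Because the existing ipRange field is preserved, older code paths that still read it keep working while the migration is in progress.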

Confirming zero defects

Next, create a second UDF that compares the value of your new field to the existing values. This UDF ensures that your data is migrated correctly. When you call this UDF varies based on your chosen data update strategy. Regardless of the strategy you choose, this UDF gives you the confidence that your migration succeeded on the document level. It also allows you to remove deprecated fields in the next step without worrying about data loss.
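For the ipRange example, the comparison could be as simple as the following Python sketch. It models the check on a dictionary rather than in FQL, and the function name is hypothetical.

```python
def is_migrated_correctly(document: dict) -> bool:
    """Check that ipRanges holds exactly the value stored in ipRange."""
    # A document missing either field does not pass the check.
    if "ipRange" not in document or "ipRanges" not in document:
        return False
    return document["ipRanges"] == [document["ipRange"]]


good = {"ipRange": "10.0.0.0/8", "ipRanges": ["10.0.0.0/8"]}
bad = {"ipRange": "10.0.0.0/8", "ipRanges": ["10.0.0.0/16"]}
unmigrated = {"ipRange": "10.0.0.0/8"}
print(is_migrated_correctly(good), is_migrated_correctly(bad), is_migrated_correctly(unmigrated))
```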

Removing deprecated fields

Removing deprecated fields is the final step in a migration. This step is strictly optional, but recommended. Removing deprecated fields reduces your data storage costs, particularly if those fields are indexed. It also removes unnecessary complexity from your data model. This is particularly helpful if you access Fauna via GraphQL, as you must explicitly define each field in your schema.
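One way to make this step safe is to refuse to drop the old field unless the new field already matches it. The Python sketch below models that guard on a dictionary; it is not FQL, and the function name is an assumption for this example.

```python
def remove_deprecated_field(document: dict) -> dict:
    """Return a copy of the document without ipRange, if that is safe."""
    # Nothing to do if the deprecated field is already gone.
    if "ipRange" not in document:
        return dict(document)
    # Refuse to drop ipRange unless ipRanges already holds the same value.
    if document.get("ipRanges") != [document["ipRange"]]:
        raise ValueError("document has not been migrated correctly")
    return {key: value for key, value in document.items() if key != "ipRange"}


cleaned = remove_deprecated_field(
    {"name": "office", "ipRange": "10.0.0.0/8", "ipRanges": ["10.0.0.0/8"]}
)
print(cleaned)
```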

Choosing a data update strategy

You choose when to update your data, and how much of your data to update, based on your own use case and access patterns. There are three general data update strategies: just-in-time, immediate, and throttled batch updates.

Just-in-time updates

Just-in-time (JIT) updates wait until the first time a document is retrieved or altered to apply outstanding migrations. JIT updates check whether the specified document has been migrated and, if not, call the UDF you specify before proceeding. The UDF-first pattern described in Encapsulating your data enables JIT updates without requiring the client to know a change has been made.

JIT updates are most appropriate for use cases where documents are accessed one at a time or in very small groups. They are especially good for documents with the optional ttl (time-to-live) field set, as these documents may age out of your database before they need to be migrated.

If you regularly retrieve many documents in a single query, JIT updates can degrade the performance of your query. In this case, you should choose between immediate and throttled batch updates.
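To make the JIT check concrete, the following Python sketch uses a dictionary as a stand-in for a collection and migrates a document the first time it is read. It is a model of the pattern, not FQL; the document IDs and function names are hypothetical.

```python
# In-memory stand-in for a collection, keyed by a hypothetical document ID.
collection = {
    "1": {"ipRange": "10.0.0.0/8"},
    "2": {"ipRange": "192.168.0.0/16", "ipRanges": ["192.168.0.0/16"]},
}


def populate_ip_ranges(document: dict) -> dict:
    """Migration step: derive ipRanges from the existing ipRange field."""
    migrated = dict(document)
    migrated["ipRanges"] = [migrated["ipRange"]]
    return migrated


def get_document(doc_id: str) -> dict:
    """Read a document, applying the outstanding migration on first access."""
    document = collection[doc_id]
    if "ipRanges" not in document:
        document = populate_ip_ranges(document)
        # Persist the result so the migration runs only once per document.
        collection[doc_id] = document
    return document


first_read = get_document("1")   # migrates document "1"
second_read = get_document("1")  # already migrated, returned as stored
print(first_read)
```

The caller only ever sees migrated documents, which is why the client does not need to know that a migration exists.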

Immediate updates

Immediate updates greedily apply your migration UDF to every matching document in your database in a single Fauna transaction. This simplifies future document retrieval, and maintains the performance of queries that return large data sets.

However, immediate updates require you to access and modify every document affected by your migration at once. If you infrequently access large portions of your data, this can create unnecessary costs. If you have indexes over the existing or new fields, these indexes must also be re-written along with your updated documents.

See Assessing the impact on your indexes for additional considerations for indexes and migrations.
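The all-at-once nature of an immediate update can be modeled with the Python sketch below. It builds every migrated document before returning any of them, which loosely mimics the all-or-nothing behavior of a single transaction. This is an in-memory illustration with a hypothetical function name, not FQL.

```python
def migrate_all(documents: list) -> list:
    """Migrate every document, or none of them if any document fails."""
    migrated = []
    for document in documents:
        updated = dict(document)
        # Raises KeyError if ipRange is missing, which aborts the whole run
        # and leaves the input documents untouched.
        updated["ipRanges"] = [updated["ipRange"]]
        migrated.append(updated)
    return migrated


docs = [{"ipRange": "10.0.0.0/8"}, {"ipRange": "172.16.0.0/12"}]
updated_docs = migrate_all(docs)
print(updated_docs)
```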

Throttled batch updates

Throttled batch updates provide the benefits of JIT and immediate updates, but do so at the cost of additional complexity. Throttled batch updates use an external program to apply your migration UDF to groups (or "batches") of documents at a slower rate. You manipulate that rate by modifying either the number of documents in each batch or the period of time between each batch. This enables you to tune the time to completion, allowing you to migrate your entire data set without imposing any performance penalties.

If a request is made to access or alter a document that has not been migrated while your batch is still processing, you apply your migration UDF to that document first, just as you do with a JIT update.
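The external program that drives a throttled batch update could follow the shape of this Python sketch. The two tuning knobs from the text appear as batch_size and pause_seconds. The documents are plain dictionaries and every name here is hypothetical; a real program would call your migration UDF in Fauna instead of mutating local data.

```python
import time
from typing import Callable, Iterator


def batches(items: list, size: int) -> Iterator[list]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def run_throttled(documents: list, migrate: Callable[[dict], None],
                  batch_size: int, pause_seconds: float) -> int:
    """Migrate documents batch by batch, pausing after each batch."""
    migrated_count = 0
    for batch in batches(documents, batch_size):
        for document in batch:
            # Skip documents that a JIT update already migrated.
            if "ipRanges" not in document:
                migrate(document)
                migrated_count += 1
        time.sleep(pause_seconds)
    return migrated_count


def add_ip_ranges(document: dict) -> None:
    """Stand-in for the migration UDF; updates the document in place."""
    document["ipRanges"] = [document["ipRange"]]


documents = [{"ipRange": f"10.0.{i}.0/24"} for i in range(5)]
documents[2]["ipRanges"] = ["10.0.2.0/24"]  # already migrated just in time
count = run_throttled(documents, add_ip_ranges, batch_size=2, pause_seconds=0.01)
print(count)
```

A smaller batch_size or a longer pause_seconds spreads the work over more time, while larger batches or shorter pauses finish sooner at the cost of more load per interval.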

Assessing the impact on your indexes

You cannot modify the terms or values of indexes, including binding objects, once they have been created. If you have an index over a previously existing field and want to use it for the newly migrated field, you must create a new index.

Fauna also limits concurrent index builds to 24 per account, not per database. Attempting to exceed this limit results in an HTTP 429 error that your application must handle. Additionally, index builds for collections with more than 128 documents are handled by a background task. This means that the transaction that creates the index completes quickly, but you cannot query the index until it has finished building.
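One common way to handle an HTTP 429 response is to retry with exponential backoff. The Python sketch below shows that general technique under stated assumptions: TooManyRequests is a hypothetical exception standing in for whatever error your client raises on a 429, and create_index simulates a request that is rejected twice before succeeding. It does not use a real Fauna driver.

```python
import time


class TooManyRequests(Exception):
    """Hypothetical stand-in for the error a client raises on HTTP 429."""


def with_backoff(operation, max_attempts: int = 5, base_delay: float = 0.01):
    """Call operation, retrying with exponential backoff on TooManyRequests."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except TooManyRequests:
            # Give up after the final attempt and surface the error.
            if attempt == max_attempts - 1:
                raise
            # Wait base_delay, then 2x, 4x, ... before trying again.
            time.sleep(base_delay * 2 ** attempt)


# Simulated index creation that is rejected twice, then accepted.
attempts = {"count": 0}


def create_index() -> str:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TooManyRequests()
    return "index created"


result = with_backoff(create_index)
print(result, attempts["count"])
```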

Conclusion

Successful data migrations depend heavily on planning. Use UDFs to encapsulate business logic, including performing any necessary data migrations. Break your migration into small steps that keep the existing data alongside the new data to reduce risk at each stage. Finally, assess the impact on your indexes and develop a strategy for updating your data.

In the next post in this series, you learn how to implement the previous migration pattern using UDFs written in FQL.
