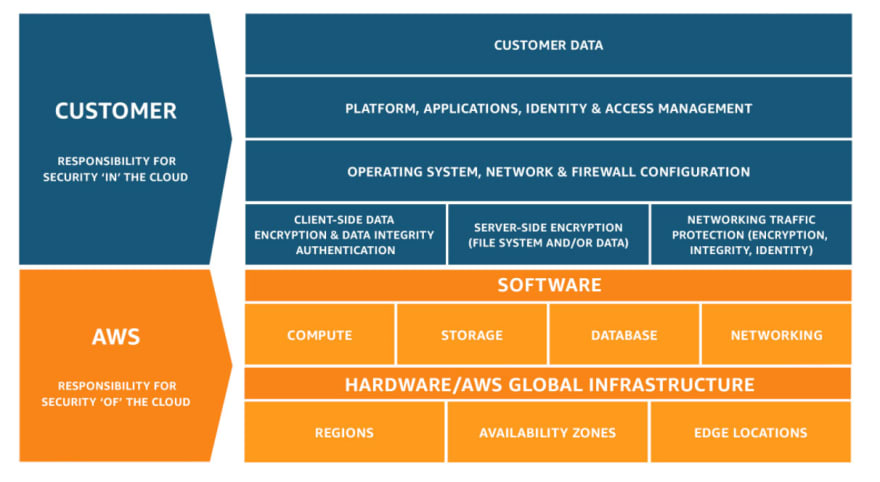

In this article, we take a look at some of the AWS services required to set up Amazon SageMaker. Once you understand the services listed in this blog, you can start using SageMaker for machine learning. This understanding helps ensure you have a safe environment in which to provision AWS services and allocate compute resources. Knowledge of these services is critical because it helps you make the right decisions to comply with the AWS shared responsibility model while taking security into consideration. The model is shown below.

Some of the services we would need include:

- IAM account

- S3 bucket

- VPC

- EC2 instances

Let's go into the details of what each service is and how it can be provisioned.

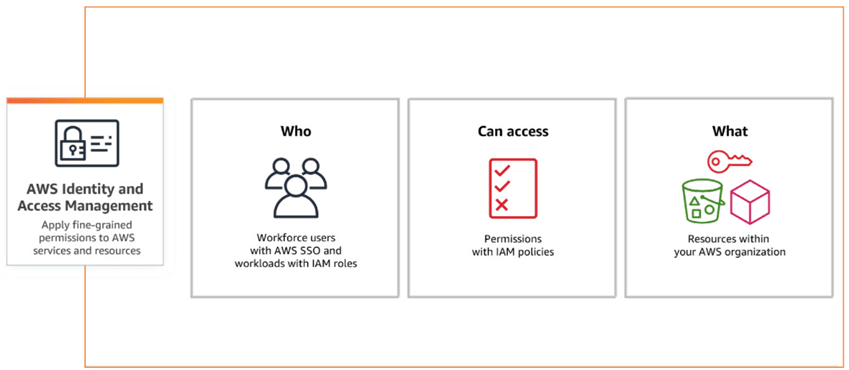

IAM (Identity and Access Management): This falls under the 'Security, Identity, and Compliance' category in the AWS list of services. To access AWS services, you need to provision IAM roles, which let you gain and manage access to AWS resources. IAM policies are attached to identities such as roles and grant granular permissions for specific services. IAM implements the concept of principals, actions, resources, and conditions: a policy defines which principals can perform which actions on which resources, and under which conditions. Two of the most commonly used policy types are identity-based policies and resource-based policies.
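To make the idea of principals, actions, resources, and conditions concrete, here is a minimal sketch of an identity-based policy document built in Python. The bucket name `example-sagemaker-bucket`, the CIDR range, the `Sid`, and the helper `build_s3_read_policy` are hypothetical and chosen only for illustration. An identity-based policy has no `Principal` element, because the principal is whichever user, group, or role the policy is attached to.

```python
import json


def build_s3_read_policy(bucket_name: str, source_cidr: str) -> dict:
    """Return an identity-based policy granting read-only access to one bucket.

    Hypothetical helper for illustration; the names are not from the article.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadTrainingData",
                "Effect": "Allow",
                # Actions: what the principal is allowed to do
                "Action": ["s3:GetObject", "s3:ListBucket"],
                # Resources: the bucket itself and every object inside it
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",
                    f"arn:aws:s3:::{bucket_name}/*",
                ],
                # Condition: only allow requests from this IP range
                "Condition": {"IpAddress": {"aws:SourceIp": source_cidr}},
            }
        ],
    }


# Example values are assumptions, not real resources
policy = build_s3_read_policy("example-sagemaker-bucket", "203.0.113.0/24")
policy_json = json.dumps(policy, indent=2)
print(policy_json)
```

The resulting JSON could then be attached to a role through the console or an SDK. Keeping the action list this narrow is one way to follow the principle of least privilege.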

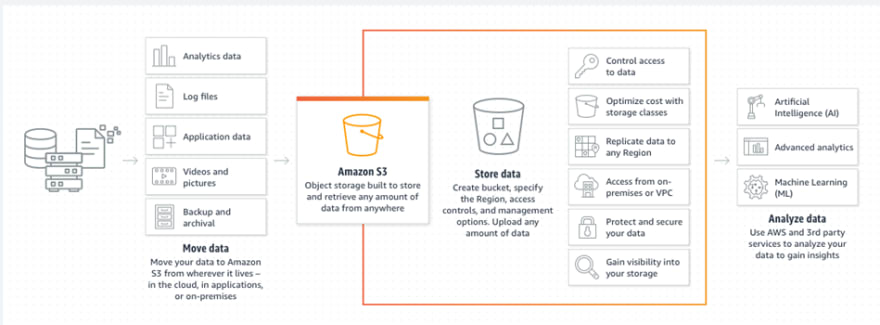

S3 (Simple Storage Service): This falls under the 'Storage' category in the AWS list of services. Amazon S3 is an object storage service used to store and protect any amount of data for a range of use cases, such as data lakes, websites, mobile applications, backup and restore, archive, enterprise applications, IoT devices, and big data analytics. Features provided by S3 include different storage classes, storage and access management, data processing via S3 Object Lambda, and logging and monitoring tools.
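Because S3 is an object store, each object is addressed by a bucket name plus a key rather than by a file-system path. As a small illustration, the sketch below splits an `s3://` URI into those two parts using only the Python standard library. The function name `split_s3_uri` and the example URI are hypothetical, and the sketch assumes keys without `?` or `#` characters, which `urlparse` would otherwise treat as query or fragment delimiters.

```python
from typing import Tuple
from urllib.parse import urlparse


def split_s3_uri(uri: str) -> Tuple[str, str]:
    """Split an s3://bucket/key URI into (bucket, key).

    Hypothetical helper for illustration only.
    """
    parsed = urlparse(uri)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"not a valid S3 URI: {uri!r}")
    # The bucket is the network location; the key is the path without
    # its leading slash
    return parsed.netloc, parsed.path.lstrip("/")


# Example URI is an assumption, not a real bucket
bucket, key = split_s3_uri("s3://example-sagemaker-bucket/train/data.csv")
print(bucket, key)
```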

VPC (Virtual Private Cloud): To maintain security for data and applications, AWS resources are often provisioned inside a virtual network. Not only is this considered more secure, but the network can also be managed according to an organization's requirements, providing more flexibility while keeping the benefits of AWS's scalable infrastructure. For example, AWS resources like EC2 instances can be launched inside a VPC by specifying an IP address range for the VPC and then adding subnets, security groups, and route table configuration. We can create a public subnet for tasks that need internet access, such as cloning GitHub repositories, whereas a private subnet can be used for sensitive data.
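Choosing the IP address range and carving it into subnets is plain CIDR arithmetic, which can be sketched with Python's standard `ipaddress` module before anything is provisioned. The `10.0.0.0/16` range and the `/24` subnet size below are assumptions for illustration, not values from this article.

```python
import ipaddress

# Assumed VPC range, chosen only for illustration
vpc = ipaddress.ip_network("10.0.0.0/16")

# Split the VPC range into /24 subnets and take the first two
subnets = list(vpc.subnets(new_prefix=24))
public_subnet = subnets[0]   # e.g. for resources that need internet access
private_subnet = subnets[1]  # e.g. for resources holding sensitive data

# AWS reserves the first four addresses and the last address in every
# subnet, so five addresses per subnet are not available for use
usable = private_subnet.num_addresses - 5

print(f"VPC:            {vpc}")
print(f"Public subnet:  {public_subnet}")
print(f"Private subnet: {private_subnet} ({usable} usable addresses)")
```

Whether a subnet is actually public or private depends on its route table (for example, a route to an internet gateway), not on the address range itself.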

Image Ref: https://www.geeksforgeeks.org/amazon-vpc-introduction-to-amazon-virtual-cloud/

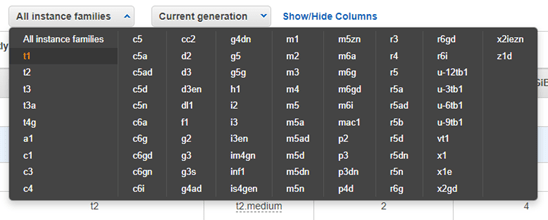

EC2 (Elastic Compute Cloud): To perform any computation in the cloud, you need a virtual computing environment, and the service AWS offers for this is EC2. Apart from being highly scalable, EC2 instances come in a variety of configurations of CPU, memory, storage, and networking capacity. Using a service like this removes the dependency on, and investment in, on-premises hardware. Some compute examples are shown below. EC2 Spot Instances can be used for various stateless, fault-tolerant, or flexible applications such as big data, containerized workloads, CI/CD, web servers, high-performance computing (HPC), and test and development workloads.

Note: Image references from https://docs.aws.amazon.com/index.html
