Web scraping is a technique that enables the extraction of data from websites. It is a powerful tool that can be used for many purposes such as data analysis, research, and automation. In this tutorial, we will learn how to build a simple web scraper using Python and BeautifulSoup. We will discuss the various benefits of web scraping and how it can be used to improve your business. We will also cover how to implement and use a web scraper effectively.

Understanding the Benefits of Web Scraping

Web scraping is a powerful technique that can be used to extract data from websites. It can help you gain insights into consumer behavior, industry trends, and competitor strategies. By leveraging web scraping, you can collect and analyze data from a wide range of sources to gain a competitive edge. You can also automate tasks such as data collection, which can save you time and resources.

Installing Required Libraries

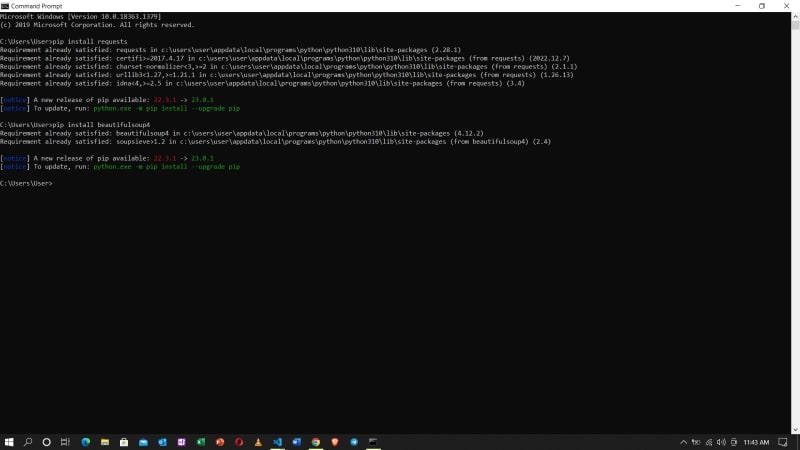

Before we dive into the coding part, we need to install two libraries: requests and BeautifulSoup. Requests is a library that allows us to send HTTP requests using Python, while BeautifulSoup is a library that helps us parse HTML and XML documents. The installation process is simple and can be done using a few lines of code:

pip install requests

pip install beautifulsoup4

I’m a windows user so I ran these lines of code on my command prompt. Mac and Linux users can install these libraries on their terminal.

Implementing and Using a Web Scraper

Now that we have installed the required libraries, we can start implementing and using our web scraper. The first step is to identify the website that we want to scrape. Once we have identified the website, we need to analyze its structure and identify the data that we want to extract. This will help us determine the HTML tags that we need to target with our web scraper.

Once we have identified the data that we want to extract, we can start building our web scraper. We start by sending an HTTP request to the website using the requests library. Once we have received the HTML content, we can parse it using BeautifulSoup. We can then extract the data that we need from the HTML content using various BeautifulSoup methods.

Here is an example code snippet that demonstrates how to implement and use a web scraper:

import requests

from bs4 import BeautifulSoup

url = '<https://www.example.com>'

response = requests.get(url)

soup = BeautifulSoup(response.content, 'html.parser')

# Extracting all the links on the website

links = []

for link in soup.find_all('a'):

links.append(link.get('href'))

print(links)

For my case, I used the link of one of my articles here on Dev.to

The output of this code will be a list of all the links on the website. We can modify this code to extract other data such as images, text, and tables from the website.

This was the results. The long list of links above are what the program scraped from one of my article’s page.

Conclusion

In this tutorial, we learned how to build a simple web scraper using Python and BeautifulSoup. We discussed the various benefits of web scraping and how it can be used to improve your business. We also covered how to implement and use a web scraper effectively. Web scraping is a powerful tool that can be used for various purposes, but it should be used ethically and responsibly. We encourage you to continue exploring and learning about web scraping techniques and tools.

Top comments (0)