If you’re looking to make it as a Senior Software Engineer, you’re probably aware of how important Concurrency concepts can be. With the rapid rise in the prevalence of multi-core machines, engineers who are able to skillfully navigate their complexity are highly desired by most tech companies today.

Concurrency problems are generally the interviewing barometer that separates Senior Engineers from Junior ones — and that can mean a difference in hundreds of thousands of dollars in compensation in some cases. Clearly, candidates who are serious about advancing their software engineering careers should have a solid grasp of this area.

To tap into this need, we worked closely with prominent software engineer C.H. Afzal to create a comprehensive course that guides learners through all they need to know to succeed at concurrency related questions for senior engineering interviews.

Check out Java Multithreading and Concurrency for Senior Engineering Interviews.

What is multithreading and why does it exist?

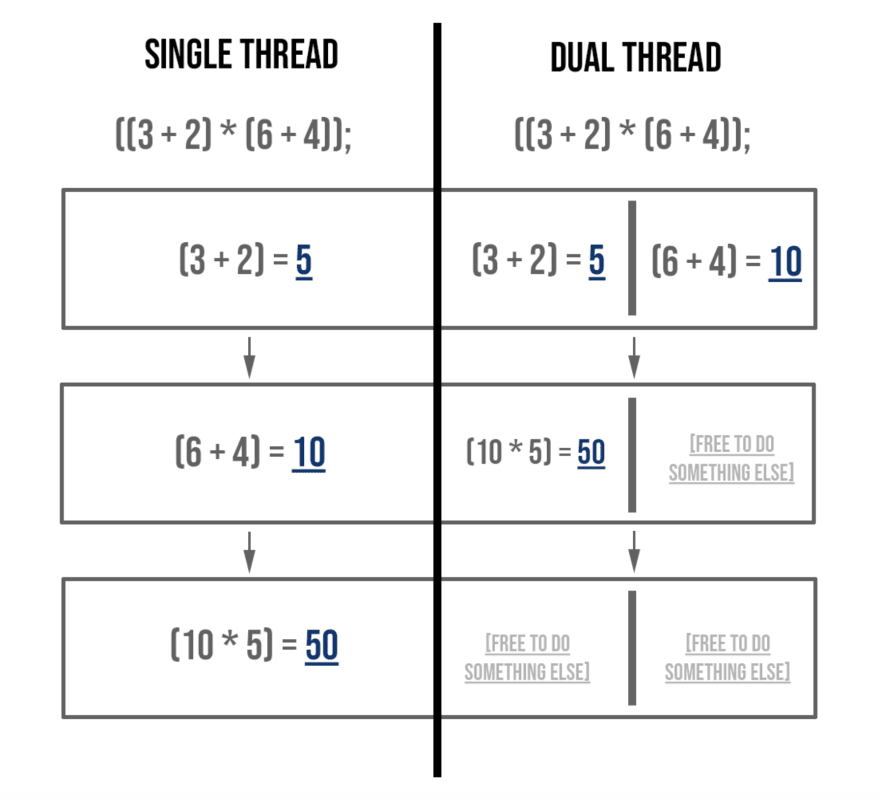

The simplest way to explain this is to think about how a machine with a single processor would compute a simple multi-step mathematical computation problem. Let’s consider the below function:

((3 + 2) * (6 + 4));

What the computer understands when it’s given this instruction is:

First, find (3 + 2)

Then, find (6 + 4)

Lastly, multiply the results with each other

If we assume that each of these steps takes exactly 1 unit of time, the above would be completed in 3 units of time.

But what if two threads could be run simultaneously? In this case, both addition problems could be handled by different processors at the same time and then subsequently multiplied by each other once the result is found. In this extremely simplified scenario, the same computation that previously took 3 units of time would be completed in only 2 units of time — a saving of 33%.

For a more practical example: if a processor wants to execute an instruction that requires pulling data from memory, it will generally take some number of clock cycles — let’s assume it takes 5. In this case, the next instruction can’t immediately start to use the value as it’s not yet ‘pulled’ from memory for 5 more clock cycles. A multithreaded processor could in this case simply start working on an instruction from another process in the meantime. It could, in fact, theoretically use these 5 clock cycles to execute 5 different tasks from 5 different processes.

In this way, multithreading on a given processor can give the illusion of multitasking to the user, even if at any one point in time the CPU is only actually executing one thread. When you combine the ability to have such “pseudo” multitasking on a single processor with the actual availability of multiple processors, countless processes can seamlessly be run simultaneously.

Benefits of multithreading

Higher throughput, or the ability to process more units of information in a given amount of time. (This assumes that the throughput ‘cost’ associated with dealing with multiple threads is lower than the efficiency it creates. This is usually, but not always, the case.)

More responsive applications that provide user seamless experiences and the illusion of multitasking. For example, an app’s UI will still be functional and responsive even while IO activity is happening in the background of an image processing app.

More efficient utilization of resources. Generally speaking, thread creation is less ‘costly’ compared to creating a brand new process. Web servers that use threads instead of creating a new process when fielding web requests consume far fewer resources.

Problems with Multithreading

More difficult to find bugs. The reasons for a process not executing successfully may now be external to the process itself. The execution order and prioritization of threads can’t always be predicted and is up to the operating system itself.

Higher cost of code maintenance, since the code has now had multiple levels of complexity added to it.

More demand on the system. The creation of each thread consumes additional memory, CPU cycles for book-keeping and time spent on switching ‘contexts.’ Additionally, keep in mind if a processor is going to run 5 threads simultaneously it will also need to keep information about each of those processes around and accessible while the other ones execute, requiring more registers.

The limitations of concurrency

No text on multithreading is complete without mentioning Amdahl’s Law. This law stipulates that there will always be a maximum speedup that can be achieved when the execution of a program is split into parallel threads.

Think of this crude example: one woman may be able to give birth to a baby in 9 months, but that doesn’t mean that nine women will be able to give birth to a baby in 1 month.

In the same way, software programs will always include portions which can’t be sped up, even if the number of processors is increased. These parts of the program must execute serially and aren’t sped up by running parallel threads.

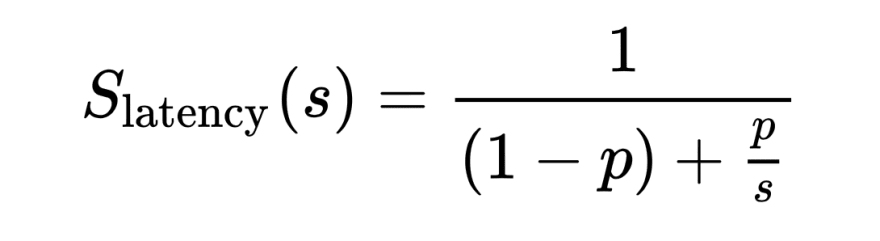

Amdahl’s law describes the theoretical limit at best a program can achieve by using additional computing resources:

- S(n) is the speed-up achieved by using n cores or threads.

- P is the fraction of the program that is parallelizable.

- (1 — P) is the fraction of the program that must be executed serially.

Example:

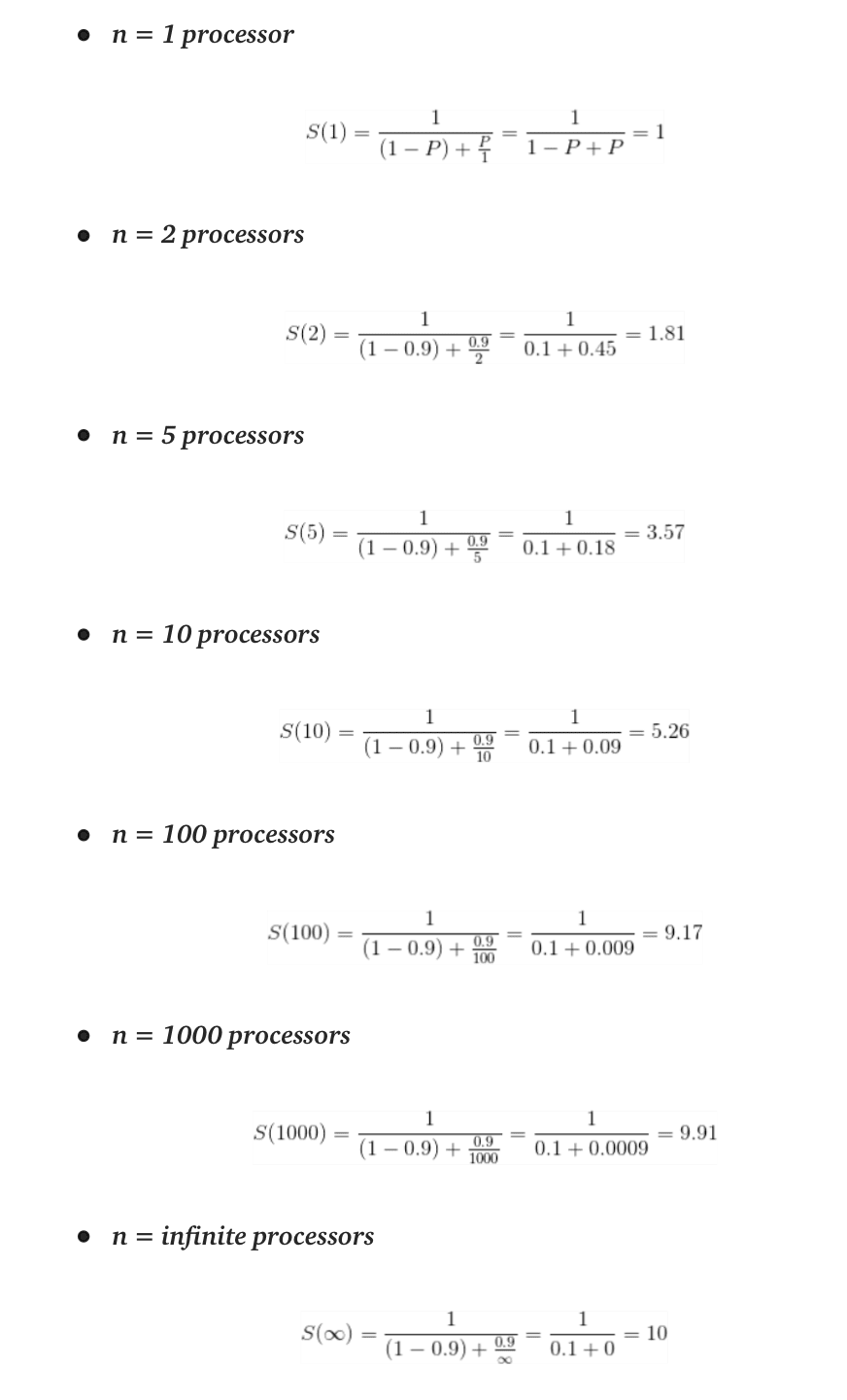

Say our program has a parallelizable portion P = 90% = 0.9. Now let’s see how the speed-up occurs as we increase the number of processes:

The speed-up increases as we increase the number of processors or threads. However, the theoretical maximum speed-up for our program with 10% serial execution will always be 10. We can’t speed up our program execution more than 10 times compared to when we run the same program on a single CPU or thread. To achieve greater speed-ups than 10, we must optimize or parallelize the serially executed portion of the code.

Also note that when we speed up our program execution roughly 5 times by employing 10 processors, we also decrease the utilization of those 10 processors by roughly 50% since now the 10 processors would remain idle for the rest of the time that a single processor would have been busy. Thus, increasing throughput by increasing the number of processors can also lead to less efficient utilization of resources.

Master concurrency by practicing real problems

Some example questions

How would you design a unisex bathroom that cannot have people of different sexes inside it simultaneously, or more than 3 people at a given time?

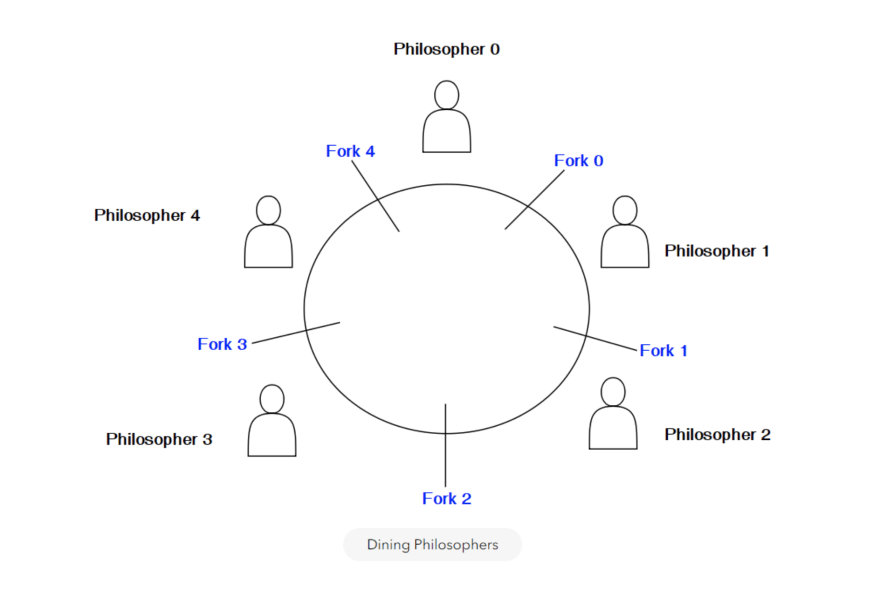

How would you solve a situation wherein 5 philosophers are eating at a roundtable, with each philosopher needing a fork in each hand in order to eat, but there are only 5 forks present?

To get practice with these problems in embedded coding environments, check out Java Multithreading and Concurrency for Senior Engineering Interviews on Educative.

Additional Resources

Article: 5 simple and effective Java techniques for strings and arrays

Course Track: Ace the Java Coding Interview

Top comments (1)

Some comments may only be visible to logged-in visitors. Sign in to view all comments.