What is load balancing?

Load balancing in web development land is a strategy for handling high volumes of user requests by spreading the traffic across multiple servers, either evenly or strategically.

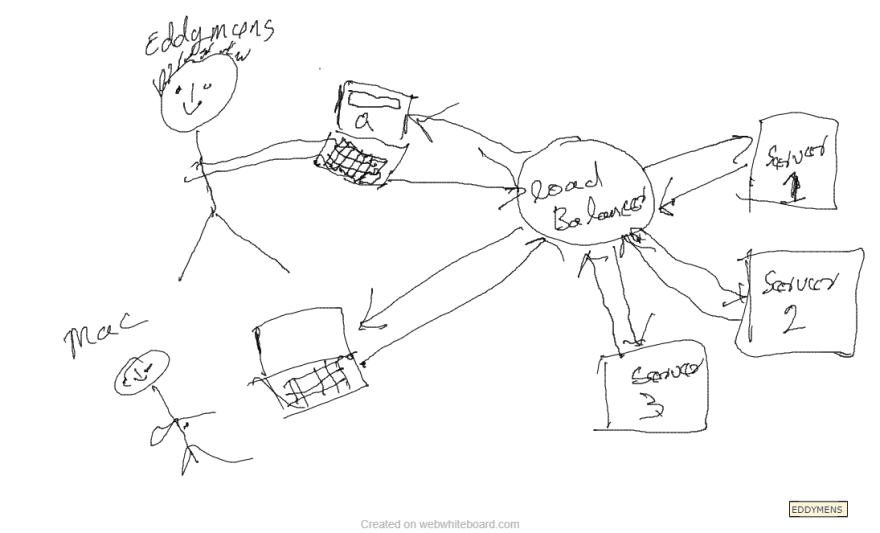

Traditional load balancing

In a typical architecture with load balancing, you usually have one or more servers acting as load balancers solely dedicated to spreading traffic across the main application servers.
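To make "evenly or strategically" concrete, here is a minimal TypeScript sketch of two common policies a load balancer might apply: round robin (spreading requests evenly) and least connections (one simple strategic policy). The `Server` type, the `activeConnections` counter, and the example URLs are illustrative assumptions, not the API of any particular load balancer.

```typescript
// Hypothetical description of an application server behind the load balancer.
type Server = { url: string; activeConnections: number };

// Round robin: hand out servers in a fixed rotation so traffic is spread evenly.
class RoundRobinBalancer {
  private next = 0;

  constructor(private readonly servers: Server[]) {
    if (servers.length === 0) throw new Error("need at least one server");
  }

  pick(): Server {
    const server = this.servers[this.next];
    // Advance the cursor and wrap around after the last server.
    this.next = (this.next + 1) % this.servers.length;
    return server;
  }
}

// Least connections: prefer the server currently handling the fewest requests.
// On a tie, the server listed first wins.
function pickLeastConnections(servers: Server[]): Server {
  if (servers.length === 0) throw new Error("need at least one server");
  return servers.reduce((best, s) => (s.activeConnections < best.activeConnections ? s : best));
}

// Usage with made-up servers:
const servers: Server[] = [
  { url: "https://app-1.example.com", activeConnections: 3 },
  { url: "https://app-2.example.com", activeConnections: 1 },
  { url: "https://app-3.example.com", activeConnections: 2 },
];
const rr = new RoundRobinBalancer(servers);
console.log([rr.pick(), rr.pick(), rr.pick(), rr.pick()].map((s) => s.url));
// → app-1, app-2, app-3, app-1
console.log(pickLeastConnections(servers).url); // "https://app-2.example.com"
```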

With this approach, the load balancers themselves become a single point of failure. Most teams use dedicated third-party load balancing services to improve their odds, and also because they sometimes don’t have the capacity to run their own load balancers.

But there are other options teams can explore.

Another way

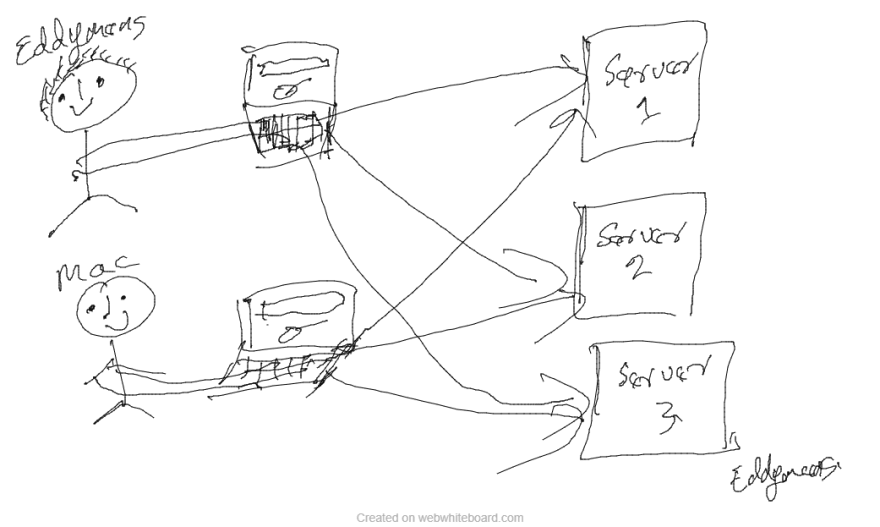

If you happen to have a Single Page App, you might be able to let the Frontend broadcast each user request directly to **all** the application servers; as soon as the first response arrives from any of them, you cancel the connections to the others. You can also apply different strategies to how you broadcast the request. For example, you can run the right number of servers in every region based on the number of users from that region, and route those users to those servers only.
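A minimal sketch of the broadcast-and-cancel idea in TypeScript, assuming every server exposes the same API under a different base URL. `broadcastRequest`, `Fetcher`, `browserFetcher`, `serversByRegion`, and `serversForRegion` are hypothetical names. The fetcher is injected so the race logic can be exercised without a network; `browserFetcher` shows how it could wrap the standard `fetch` with an `AbortSignal`. The region map, and falling back to every server when a region is unknown, are assumptions illustrating the per-region strategy.

```typescript
// A fetcher sends one request and must honour the AbortSignal it is given.
type Fetcher<T> = (url: string, signal: AbortSignal) => Promise<T>;

// Example fetcher for the browser, built on the standard fetch API.
const browserFetcher: Fetcher<unknown> = (url, signal) =>
  fetch(url, { signal }).then((res) => {
    if (!res.ok) throw new Error(`HTTP ${res.status}`);
    return res.json();
  });

// Send the same request to every server and resolve with the first success.
// As soon as one server answers, abort the in-flight requests to the others.
async function broadcastRequest<T>(servers: string[], path: string, fetcher: Fetcher<T>): Promise<T> {
  const controllers = servers.map(() => new AbortController());
  const attempts = servers.map((base, i) =>
    fetcher(base + path, controllers[i].signal).then((result) => {
      // This server won the race: cancel every other request.
      controllers.forEach((c, j) => {
        if (j !== i) c.abort();
      });
      return result;
    }),
  );
  // Promise.any resolves with the first fulfilled attempt and only rejects
  // (with an AggregateError) if every server fails.
  return Promise.any(attempts);
}

// Hypothetical region -> servers map, sized to each region's user base.
const serversByRegion: Record<string, string[]> = {
  "eu-west": ["https://eu-1.example.com", "https://eu-2.example.com"],
  "us-east": ["https://us-1.example.com"],
};

// Route users to their region's servers; unknown regions fall back to all servers.
function serversForRegion(region: string, map: Record<string, string[]>): string[] {
  return map[region] ?? Object.values(map).flat();
}

// Usage in the SPA (hypothetical endpoint):
// const profile = await broadcastRequest(serversForRegion("eu-west", serversByRegion), "/api/profile", browserFetcher);
```

Because `Promise.any` only rejects when every server fails, losing a single server does not break the request. Aborting the losing requests lets the browser drop those connections early, although a server may already have done the work by the time the abort arrives.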

The good thing about this approach is that we no longer have a single point of failure, since there are no central nodes or load balancers routing traffic.

Things to note

If your APIs or web app are not stateless, having users served by different servers can be a challenge, since the user session might be known to only one server.
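One common way to make sessions server-independent is to put the session into a signed token that the client sends with every request, roughly what signed cookies and JWTs do. The following is a minimal sketch using Node's built-in `crypto` HMAC, assuming every application server is configured with the same secret. `issueToken`, `verifyToken`, `Session`, and `SESSION_SECRET` are hypothetical names, and a production setup would more likely rely on a vetted token library.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

// Hypothetical shared secret: every application server must use the same value.
const SESSION_SECRET = "replace-with-a-long-random-secret";

type Session = { userId: string; expiresAt: number };

function sign(payload: string, secret: string): string {
  return createHmac("sha256", secret).update(payload).digest("base64url");
}

// Encode the session into the token itself, so no server has to remember it.
function issueToken(session: Session, secret = SESSION_SECRET): string {
  const payload = Buffer.from(JSON.stringify(session)).toString("base64url");
  return `${payload}.${sign(payload, secret)}`;
}

// Any server holding the same secret can verify the token without shared storage.
function verifyToken(token: string, secret = SESSION_SECRET, now = Date.now()): Session | null {
  const parts = token.split(".");
  if (parts.length !== 2) return null;
  const [payload, signature] = parts;
  const expected = Buffer.from(sign(payload, secret));
  const actual = Buffer.from(signature);
  // Constant-time comparison; lengths must match before timingSafeEqual.
  if (expected.length !== actual.length || !timingSafeEqual(expected, actual)) return null;
  const session = JSON.parse(Buffer.from(payload, "base64url").toString("utf8")) as Session;
  // Reject expired sessions.
  return session.expiresAt > now ? session : null;
}
```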

This is not a silver bullet or a definitive replacement for load balancers. This approach works best, for example, for small teams with a lot on their hands, products that are just gaining traffic, or apps with known spike periods, where a full load balancing setup might be overkill.

Also, this approach is deeply embedded in your application's architecture, whereas a load balancer can be thrown in and taken out easily. This also means it might be a challenge to adapt an existing app to go this route.

Also note that for user requests that modify data, broadcasting to all the servers is usually a bad idea: it can lead to problems like double purchases or duplicate entries. For such requests, you might send the request to one server and fall back to the next only if the first fails.
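A minimal TypeScript sketch of that one-server-then-the-next fallback for data-modifying requests. `sendWithFailover` and the `Sender` callback are hypothetical names. The `idempotencyKey` parameter is an added assumption: a failure seen by the client does not prove the server didn't apply the change, so to fully rule out duplicates the servers would also need to recognize a retried key.

```typescript
// Sends one request to one server; the idempotency key lets a server spot a retry.
type Sender<T> = (url: string, idempotencyKey: string) => Promise<T>;

// Try servers one at a time and stop at the first success, so a purchase
// is never sent to several servers in parallel.
async function sendWithFailover<T>(
  servers: string[],
  path: string,
  send: Sender<T>,
  idempotencyKey: string,
): Promise<T> {
  let lastError: unknown = new Error("no servers configured");
  for (const base of servers) {
    try {
      // Wait for this server to answer (or fail) before moving on.
      return await send(base + path, idempotencyKey);
    } catch (err) {
      // Remember the failure and fall through to the next server.
      lastError = err;
    }
  }
  // Every server failed: surface the last error to the caller.
  throw lastError;
}
```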

Conclusion

This is one example of how to leverage one's coding skills to skip Ops. #infrastructureAsCode
