We recently switched to the Jest framework for writing unit and integration tests in our React and Node applications. We used Mocha many years ago and AVA for a few years.

Changing framework is no big deal once you are used to writing tests (or, even better, used to TDD) extensively.

The fundamentals are the same in every framework (and every programming language), but there are some nuances.

The Jest documentation is very extensive and detailed, and I really suggest reading it and going back to it every time you write a slightly more complex test or assertion. What I'd like to share here is a collection of tips and tricks that can hopefully save you some time and headaches.

Concurrent

This is actually one of the main reasons I loved AVA: tests are run concurrently by default, and for a good reason!

Tests should not rely on external APIs/services, and they should not rely on globals or other objects that are persisted across different tests. So why should they be run - very slowly - one after another, when they could and should be run all at the same time (workers and threads permitting)?

If, for some reason (and normally this happens only in integration tests), we need to preserve a certain order, then we can run them in sequence / serially.

In Jest it is the opposite: tests inside a file run one after another by default, and you need to explicitly mark a test with test.concurrent if it should run concurrently.
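As a minimal sketch of that opt-in, assuming Jest's test.concurrent (which the Jest docs flag as experimental): wait is a hypothetical helper standing in for any slow async operation, and the test titles are made up.

```javascript
// Hypothetical helper standing in for any slow async operation.
const wait = (ms, value) =>
  new Promise((resolve) => setTimeout(() => resolve(value), ms));

// `test` and `expect` are Jest globals; the guard only lets this sketch load outside Jest too.
if (typeof test === "function") {
  // Both tests are started together, so their waiting time can overlap.
  test.concurrent("resolves the first value", async () => {
    expect(await wait(200, "first")).toBe("first");
  });

  test.concurrent("resolves the second value", async () => {
    await expect(wait(200, "second")).resolves.toBe("second");
  });
}
```

Note that the callback passed to test.concurrent has to be asynchronous (it must return a promise).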

Each(table)

In some cases you have tests which are basically the same but with slight variations.

You could create individual tests, or you could use each(table), which basically runs a loop / map over your table/array and runs the test with each specific payload (possibly running the assertion against that row's expected result).

This is a very interesting feature, but I would be careful: it is easy to get carried away by "reusing and optimizing" too much, making tests more complicated than they need to be, or simply ending up with many unnecessary duplicated tests.

Imagine you want to test your sum method:

    const sum = (a, b) => a + b

    test.each([
      [1, 1, 2],
      [1, 2, 3],
      [2, 1, 3],
    ])('.add(%i, %i)', (a, b, expected) => {
      expect(sum(a, b)).toBe(expected);
    });

Imho, as good as this snippet is for explaining how to use each(table), we should not write such a test.

Having multiple inputs for such a thing would not add any value, unless our method has some weird logic - for example, that above a certain sum a predefined maximum is always returned.

    const cappedSum = (a, b) => {
      const cap = 10
      const tot = a + b
      if (tot > cap) {
        return cap
      } else {
        return tot
      }
    }

    test.each([
      [1, 2, 3],
      [2, 4, 6],
      [5, 5, 10],
      [8, 7, 10],
      [45, 95, 10]
    ])('.add(%i, %i)', (a, b, expected) => {
      expect(cappedSum(a, b)).toBe(expected);
    });

Even in this case I would probably write 2 simple tests, which make this specific behaviour stand out more.

    test("Two integers are added if total is below the cap [10]", () => {
      expect(cappedSum(2, 4)).toBe(6);
    })

    test("Cap [10] is always returned if sum of two integers is higher", () => {
      expect(cappedSum(5, 6)).toBe(10);
    })

I'd rather be redundant here to draw attention to the specifics of this method, and also to be clearer in case of a failing test.

Imagine that someone changes the value of the cap and the tests in your table start failing.

In the results you will find:

    operation › .add(45, 95)

    expect(received).toBe(expected) // Object.is equality

    Expected: 10
    Received: 50

which does not make much sense, because 45 + 95 gives 140 and neither the expected 10 nor the received 50 matches that. You would stare at the error message wondering "what the heck...!?"

Instead, reading:

    operation › Cap [10] is always returned if sum of two integers is higher

    expect(received).toBe(expected) // Object.is equality

    Expected: 10
    Received: 50

clearly lets you figure out that something is wrong with the cap, and in this specific case that only the assertion and the title have not been updated to match the changed code.

describe (and test names in general)

When you run Jest, tests are run by file. Within a file you can have groups of related tests, which you put under a describe block.

Although it is seen in every example around, describe is not mandatory, so if you have a tiny file with just a handful of tests, you don't really need it.

In many cases though it is beneficial to group tests which share the same method under test and differ by the input and assertion.

Grouping and naming properly is often underrated. You must remember that test suites are supposed to succeed, and they can contain hundreds or thousands of tests.

When something goes wrong, locally or on your CI pipeline, you want to be able to immediately grasp what went wrong: the more information you have in the test failure message, the better.

The reader should understand your test without reading any other code.

Name your tests so well that others can diagnose failures from the name alone.

    describe("UserCreation", () => {
      it("returns new user when creation is successful", () => { /* ... */ })
      it("throws an InvalidPayload error if name is undefined", () => { /* ... */ })
      // etc
    })

The concatenation of file name + describe + test name, together with the diff between expected and received values (assuming you wrote specific enough assertions), will allow you to immediately spot the issue and surgically intervene in seconds.

Imagine that your original implementation of create user returns a new user in this format:

    {
      name: "john",
      surname: "doe",
      id: 123
    }

And your test asserts those 3 properties:

    it("returns new user when creation is successful", () => {
      const expected = {
        id: expect.any(Number),
        name: expect.any(String),
        surname: expect.any(String)
      }
      const result = create()
      expect(result).toMatchObject(expected)
    })

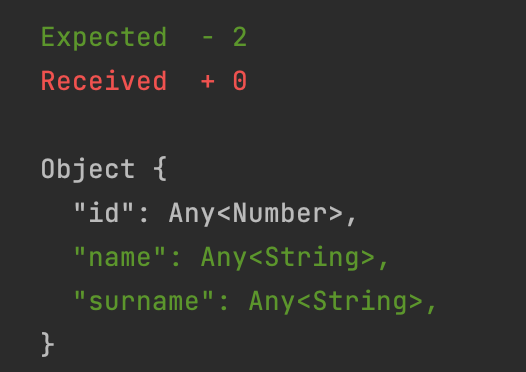

Reading such a failure message:

user-manager › UserCreation.returns new user when creation is successful

expect(received).toMatchObject(expected)

will clearly let anyone understand that your method is now returning an object just containing the ID of the new user, not all its data.

I know naming is hard, but be precise and disciplined in how you name and group your test files, test suites and individual tests. It will pay off whenever you have to debug a failing test.

On this topic I really suggest a very interesting article touching many aspects and pitfalls of writing Tests, and why writing a test is fundamentally different than coding for production:

Why good developers write bad tests

test structure

Whether you are using Jest or not, test structure should be clean and explicit.

Write your tests in AAA style, which means Arrange, Act, Assert:

Arrange
- Set up the mock, db connection, service instance, etc.
- Define the input
- Define the expectation

Act
- Run the code under test, passing the input

Assert
- Run the assertion between the result and the expectation

The most important thing is keeping the reader within the test (a tip from the article above).

Don't worry about redundancy, and be wary of hiding details in helper methods.

Remember that people will go back to a test only when it starts failing, and at that point it is important that the purpose of the test, the setup and the error are understandable and quickly debuggable, without having to click through many other variables or helper methods.
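A minimal sketch of the AAA layout, reusing the cappedSum idea from earlier (re-declared here in a shorter form so the block is self-contained):

```javascript
// Same behaviour as the earlier cappedSum example: sums are capped at 10.
const cappedSum = (a, b) => Math.min(a + b, 10);

// `test` and `expect` are Jest globals; the guard only lets this sketch load outside Jest too.
if (typeof test === "function") {
  test("Cap [10] is always returned if sum of two integers is higher", () => {
    // Arrange: define the input and the expectation
    const a = 5;
    const b = 6;
    const expected = 10;

    // Act: run the code under test, passing the input
    const result = cappedSum(a, b);

    // Assert: compare the result with the expectation
    expect(result).toBe(expected);
  });
}
```

Everything the reader needs (input, expectation, call, assertion) sits inside the test body, at the cost of a few extra lines.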

async

If the methods you want to test are asynchronous, be it callbacks, promises or async/await, that is not a problem with Jest.

The biggest pitfall I'd like to warn you about is forgetting to await, or to add a return, when you run your expectation.

This would cause your test to pass even if the asynchronous method is failing (simply because Jest is not waiting, and by the time the assertion fails it is already too late to report it).

This happens very often when you jump between sync and async tests; consider these examples:

    it('loads some data', async () => {
      const data = await fetchData();
      expect(data).toBe('loaded');
    });

    it('loads some data', () => {
      return expect(fetchData()).resolves.toBe('loaded');
    });

They are similar and do the same thing.

In the first we are telling Jest the test is async and we are awaiting the method to return the loaded data, then we run the assertion.

In the second example we just return the expect: with .resolves it is itself a promise, so Jest waits for it to settle.

If you forget either the async / await or the return, the test will exit immediately, way before the data is loaded, and no assertion is made in time.

This is very dangerous because it could lead to false positives.

false positives, toThrow and expect.assertions

In some cases it is useful to add a special assertion to your test, where you tell Jest to count the expectations and make sure a certain number of them are run.

This is extremely important in the case I mentioned above - if you forget to return expect or await your async method.

But it is also useful if your test has assertions inside try/catch or then/catch.

Having assertions within catch blocks is not an approach I would suggest (it is much better to use resolves/rejects or other ways), but sometimes I found it necessary. Take this test as a starting point:

    it('validates payload', () => {
      const p = {
        // some payload that should fail
      }
      const throwingFunc = () => validate(p)
      expect(throwingFunc).toThrow(ValidationError)
    })

If I need to make more assertions on the error being thrown besides just checking its type/class (for example making sure the error contains some specific inner properties, or matching its detailed message against a regex) and I don't want the method to be executed multiple times, I need to catch the error and run the assertions directly on it:

    it('validates payload', () => {
      const p = {
        // some payload that should fail
      }
      expect.assertions(2)
      try {
        validate(p)
      } catch (error) {
        expect(error).toBeInstanceOf(ValidationError)
        expect(error).toHaveProperty("details", [
          "should have required property 'username'",
          'email should match pattern "^\\S+@\\S+$"'
        ])
      }
    })

If I don't put expect.assertions(2), and then for some reason the logic of the validation changes (so that the payload passes, or a true|false is returned instead of an error), the test would pass silently, just because Jest did not know there were some assertions to run.

No assertions run means a successful test.
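As a complementary sketch: when the exact count does not matter, Jest also offers expect.hasAssertions(), which only checks that at least one assertion ran. ValidationError and validate below are hypothetical stand-ins for the ones used in the example above, written only so the block is self-contained.

```javascript
// Hypothetical error class and validator, standing in for the real ones.
class ValidationError extends Error {
  constructor(details) {
    super("Invalid payload");
    this.name = "ValidationError";
    this.details = details;
  }
}

const validate = (payload) => {
  const details = [];
  if (!payload.username) {
    details.push("should have required property 'username'");
  }
  if (details.length > 0) {
    throw new ValidationError(details);
  }
  return true;
};

// `test` and `expect` are Jest globals; the guard only lets this sketch load outside Jest too.
if (typeof test === "function") {
  test("validates payload", () => {
    // Fails the test if validate() stops throwing and no expect() runs at all.
    expect.hasAssertions();
    try {
      validate({});
    } catch (error) {
      expect(error).toBeInstanceOf(ValidationError);
      expect(error.details).toContain("should have required property 'username'");
    }
  });
}
```

expect.assertions(n) remains the stricter choice when you know exactly how many assertions must run.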

async & toThrow

Just to spice up assertions on errors a bit, remember that when your method is asynchronous the expect syntax is a bit different.

Of course you can still rely on the catch block (but remember the await and expect.assertions(1)); the preferred approach, though, is using rejects:

    it('throws USER_ALREADY_EXISTS when primary key is already in use', async () => {
      const p = {
        // some payload whose Id is already in use
      }
      const throwingFunc = () => createItem(p)
      await expect(throwingFunc).rejects.toThrow(new RegExp(Errors.USER_ALREADY_EXISTS))
    })

More info about testing Promises and async code with resolves/rejects can be found in the Jest documentation.

mocks

Mocking within Tests is a chapter per se, and I have mixed feelings about it.

Too many times I have seen over-engineered abstractions, with loads of classes and methods wired through dependency injection, tested through super complicated tests where everything was mocked and stubbed.

Very high code coverage and everything green in the CI pipeline, just to see production crash because, well, the mocks did not really match reality.

This is also the reason why, especially with serverless, I prefer integration tests when possible - tests which hit the real thing, not some weird dockerized emulator of some AWS service.

This does not mean we never used aws-sdk-mock (we haven't tried the version for SDK v3 yet), but in general I try to write very simple unit tests and very simple integration tests, keeping mocks to a minimum.

If you are a 100% mock advocate, I really suggest reading "Mocking is a code smell" by Eric Elliott, which, a few years ago, really blew my mind.

Going back to Mocks in Jest.

If you just started with Mocks you may look at the documentation and then stare at the code and ask yourself: "Eh?!? Why? What's the point?!?"

How are you supposed to use that? Asserting on the mocked method would make no sense...

So this brings us to a broader topic that leads to dependency injection and inversion of control.

Using mocks can be hard and tricky because often our methods are just too coupled together and you have no access to the internal methods being used.

Imagine a method that validates some data, creates a payload and passes it to an api to create a user, then maps the result or catches errors and returns it.

    const createUser = (data) => {
      // validate data
      // build payload
      // call api
      // then map result to our needs
      // catch and handle errors from api
    }

If you want to create a test for this, you don't want to invoke the real API and create the user for real, for multiple reasons: the test could become flaky and depend on network issues or API availability; you don't want to unnecessarily create users which you will have to tear down / delete afterwards; and you don't want to "spam" the endpoint with invalid payloads to test all possible errors.

This is where mocking comes in handy. BUT...

how do you access that internal method that calls the api?

Some may use Rewire to access internals of a module and overwrite them, or you can expose those specific methods in different modules and then mock their implementation, or you can rely on dependency injection and closures to decouple the behaviour and then easily mock it without too many headaches.

    const createUser = (api) => (data) => {
      // validate data
      // build payload
      api.create(payload) // <-- now this can be the real thing or a mock: we don't know and don't care
      // then map result to our needs
      // catch and handle errors from api
    }

To use that, you first partially apply your method, injecting the api class:

    const api = new ThirdPartyApi()
    const userCreatorFunc = createUser(api)

Then you use the resulting creator function, which expects only the payload (that is your original method under test):

    userCreatorFunc(myData)

So how do you mock your api?

    // the test title is illustrative
    it("returns the created user mapped to our format", async () => {
      const input = { username: "john" }
      const response = {
        ts: Date.now(),
        id: 999,
        name: "john",
      }
      const apiMock = {
        create: jest.fn().mockReturnValue(Promise.resolve(response)),
      }

      const createdUser = await createUser(apiMock)(input)

      const objToMatch = {
        id: expect.any(Number),
        userName: expect.any(String),
        registrationDate: expect.any(Date),
        // some other formatting and properties or data manipulation done in our method when we get the response
      }
      expect(createdUser).toMatchObject(objToMatch)
    })

From here you can easily mock the faulty responses, and make sure you handle everything properly, without relying on network nor bothering the real API at all.

Mocking can go deeper and further: you can assert that the mocked method is called, and with which parameters (imagine you have some conditionals in your method which might or might not call the api based on certain input), and so on.
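To make the idea concrete, here is a minimal sketch. The body of createUser is a hypothetical implementation (the text above only outlines it in comments), and the INVALID_PAYLOAD error and the field mapping are assumptions made for illustration. It relies on Jest's mock matchers toHaveBeenCalledTimes, toHaveBeenCalledWith and not.toHaveBeenCalled.

```javascript
// Hypothetical implementation of the curried createUser outlined above.
const createUser = (api) => async (data) => {
  if (!data || !data.username) {
    // Invalid input: the api must not be called at all.
    throw new Error("INVALID_PAYLOAD");
  }
  const payload = { name: data.username };
  const response = await api.create(payload);
  // Map the api response to the shape our callers expect.
  return {
    id: response.id,
    userName: response.name,
    registrationDate: new Date(response.ts),
  };
};

// `test`, `expect` and `jest` are Jest globals (default CommonJS setup);
// the guard only lets this sketch load outside Jest too.
if (typeof test === "function") {
  test("calls the api once with the built payload", async () => {
    const apiMock = {
      create: jest.fn().mockResolvedValue({ ts: 0, id: 999, name: "john" }),
    };

    await createUser(apiMock)({ username: "john" });

    expect(apiMock.create).toHaveBeenCalledTimes(1);
    expect(apiMock.create).toHaveBeenCalledWith({ name: "john" });
  });

  test("does not call the api when the input is invalid", async () => {
    const apiMock = { create: jest.fn() };

    await expect(createUser(apiMock)({})).rejects.toThrow("INVALID_PAYLOAD");
    expect(apiMock.create).not.toHaveBeenCalled();
  });
}
```

Because the api is injected, the same function can be exercised with a jest.fn() mock, a hand-written fake, or the real client.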

Honestly for the reasons above I am not a super fan of such mocking.

Strike a balance and keep it simple.

When you see it is getting too complicated, you are probably doing it wrong, and it is likely that you should refactor your method in the first place.

Keeping things simple is also one of the reasons why TDD is so good: you start small, with the tests, and the code naturally evolves in a way that is simple, logical, decoupled and easy to test.

Here is a mock example for Axios calls, which might be useful if you don't want to or can't refactor your code to inject the external dependencies doing network calls.

    import axios, { AxiosResponse } from "axios";

    // replace the axios module with Jest's automatic mock, so no real request is made
    jest.mock("axios");

    test('should throw an error if received a status code other than 200', async () => {
      // @ts-ignore
      axios.post.mockImplementationOnce(() => Promise.resolve({
        status: 400,
        statusText: 'Bad Request',
        data: {},
        config: {},
        headers: {},
      } as AxiosResponse))

      // createUser and input are the method under test and its payload
      await expect(createUser(input)).rejects.toThrow()
    })

In this example, if your createUser method uses axios to invoke an api, you are mocking axios entirely, so that the requests will not be made and your mocked response will be returned instead.

toMatchObject & property matchers

Often we want to assert that our method returns a specific object, but we don't want to hardcode lots of values in our setup/assertion.

Or we don't care to assert every single property in the object which is returned.

Imagine some dynamic values like ids, or dates/timestamps and so on.

In this case asserting for equality would cause the test to fail.

toMatchObject is very handy here.

    const result = createRandomUser('davide')

    const expected = {
      "name": "davide",
      "email": expect.stringContaining("@"),
      "isVerified": expect.any(Boolean),
      "id": expect.any(Number),
      "lastUpdated": expect.any(Date),
      "countryCode": expect.stringMatching(/[A-Z]{2}/)
      // result might contain some other props we are not interested in asserting
    }

    expect(result).toMatchObject(expected)

Using toMatchObject in combination with asymmetric matchers like expect.any is a very powerful way to have tests that are generic enough but still validate the "type" of object being returned.

todo

Marking a test as TODO is very handy when you are jotting down ideas of possible test scenarios, or if you are preparing a list of tests for a junior dev or trainee you are mentoring, or simply to leave a trace of possible improvements/technical debt.
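A minimal sketch, assuming Jest's test.todo (which takes only a title and no callback); the scenario titles are made up for illustration:

```javascript
// `test` is a Jest global; the guard only lets this sketch load outside Jest too.
if (typeof test === "function") {
  // Titles only, no callback: Jest lists these as "todo" in the run summary.
  test.todo("throws an InvalidPayload error if surname is undefined");
  test.todo("returns the existing user when the email is already registered");
}
```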

only

only can be used when debugging a test.

Be very careful when committing after you are done. You might screw up the entire build pipeline and even risk putting something broken in production, because the only tests you are actually running are, well, those that you marked as .only!

To avoid such problems you can use a git hook (check Husky and DotOnlyHunter) which scans your tests, making sure you are not pushing any test where you forgot to remove .only.
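A minimal sketch of what a forgotten .only looks like, assuming Jest's test.only; sum is the same trivial function used earlier, re-declared so the block is self-contained:

```javascript
const sum = (a, b) => a + b;

// `test` and `expect` are Jest globals; the guard only lets this sketch load outside Jest too.
if (typeof test === "function") {
  // While debugging: within this file, only this test is executed...
  test.only("adds two positive integers", () => {
    expect(sum(1, 2)).toBe(3);
  });

  // ...and this one is reported as skipped until the .only above is removed.
  test("adds two negative integers", () => {
    expect(sum(-1, -2)).toBe(-3);
  });
}
```

The run still ends green, which is exactly why a leftover .only is easy to miss in review.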

failing

This is actually a feature I am missing in Jest (it was available in AVA).

Sometimes a test is failing but, for some reason, you want to keep it without just skipping it. When/if the implementation is fixed, you are notified that the failing test is now succeeding.

I can't tell you when and why I used that, but I found it very useful, and apparently I am not the only one, since there is a GitHub issue about it. At the time of writing, until the issue is closed, we have to use a simple skip. (Newer Jest releases document a test.failing modifier for the default jest-circus runner, so check the docs for your version.)

use the debugger

This is valid for basically every step of your development process. Drop those console.logs and start using the debugger and breakpoints, no matter what your IDE is (Visual Studio Code, for example). This allows you to interrupt the running code, inspect props and methods, and move step by step through the execution. A very useful, fast and practical way of understanding what is really going on.

remember to make your test fail!

It does not matter if you are doing TDD (Test Driven Development - meaning you write the tests before / while writing the implementation), writing tests for code you just wrote, or refactoring.

Make sure your test fails!

If you wrote a test and it passes, don't just move on, taking for granted / hoping everything is ok. Maybe your assertion is broken, maybe Jest is not awaiting your result, maybe the edge case you think you are testing is not really ending up in the code you implemented.

Prove that your assertion is working - and your assumption is correct - by making the test fail first (e.g. by passing the wrong payload), and then adjust the Arrange part of the test to make it pass again.

Kent C. Dodds has a very clear video explanation on how to do that.

Hope it helps. If you have other tips, feel free to comment below!
