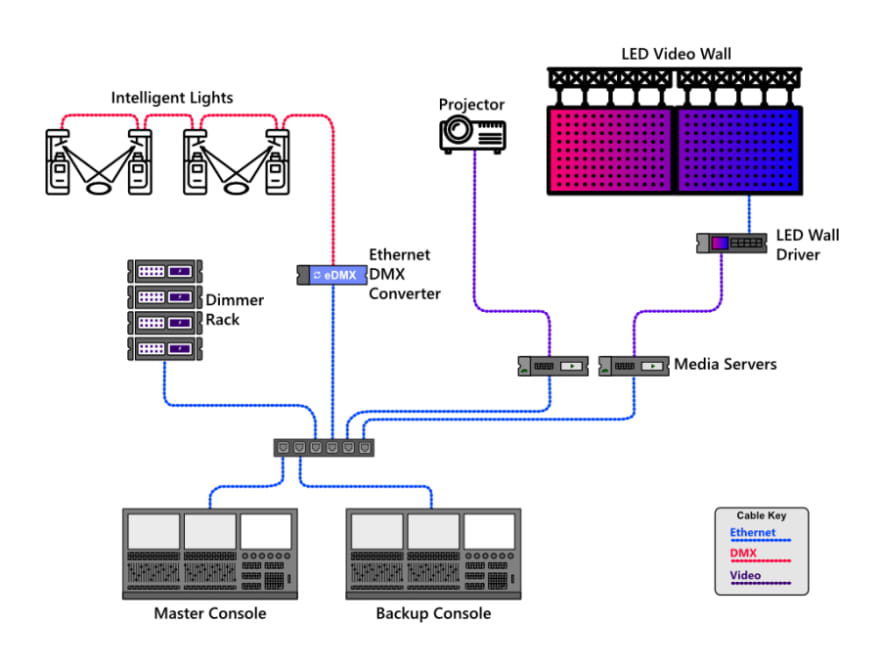

A little-known fact is that the original intelligent lights and their programming hardware formed a distributed system. Each light had its own on-board memory, which stored the different states (looks) used throughout the show, and the lighting console would send a command telling each light to load a particular look. I’ve heard of a show that was too large to fit in the lights’ on-board memory, so the operator had to split the programming in two and use the interval to upload the second half. I can’t imagine how nervous they were during this process!

This approach was a good first step into the world of intelligent lighting, but it had some significant drawbacks, the biggest being that a show couldn’t be programmed before arriving at the venue. This ruled out using 3D tools like Capture to program shows against a virtual representation of the venue and lighting rig.

Fast forward a little, and advancements in consumer CPUs allowed a single device to calculate all the required control data fast enough that distributed systems were no longer needed. Manufacturers adopted an architecture in which a single lighting console calculates everything, with some providing the ability to track its state on a backup (redundant) console, but none offering a distributed system.

Coming Full Circle

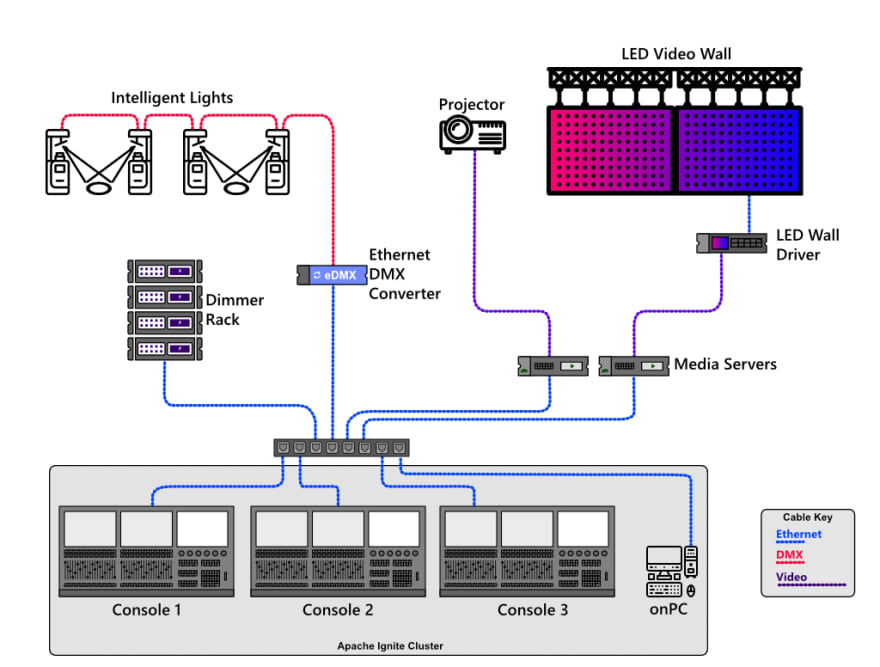

Only relatively recently have distributed systems come back into vogue, as shows have become more and more complex. With shows as complex as Eurovision, a single lighting console cannot calculate the control data fast enough. To get around this, multiple consoles are used together to control these massive shows. These consoles don’t just take ownership of a subsection of the lights; instead they form a Compute Grid, a high-performance computing technique for creating a virtual supercomputer.

Grids are a form of distributed computing whereby a “super virtual computer” is composed of many networked loosely coupled computers acting together to perform very large tasks

In this article, I’m going to discuss how I’m using Apache Ignite to develop a distributed data and compute grid in order to provide high availability and scalability.

Apache Ignite

What is it?

Apache Ignite is an in-memory computing platform that is durable, strongly consistent, highly available and features powerful SQL, key-value, messaging and event APIs.

Traditionally it’s used in industries such as e-commerce, banking, IoT and telecommunications, and it counts companies such as Microsoft, Apple, IBM, Barclays, American Express, Huawei and Siemens among its users.

Most users of Apache Ignite deploy it to servers, either in a public cloud like Microsoft’s Azure or in their on-premises data centers. Though servers are the usual domain for Apache Ignite, its flexible deployment model means I can embed it as part of the Light Console .NET Core library.

This lets me develop a distributed system that scales horizontally by adding nodes, while drawing on the experience of Ignite’s contributors in distributed systems. The Apache Ignite codebase consists of more than a million lines of code and has 223 contributors, so it’d be a huge effort to recreate this functionality in-house!

Clustering

Apache Ignite is a fundamental pillar of my application architecture, providing data storage, services and event messaging. Because it’s an integral part of my application, any consumer of the LightConsole.Core DLL will either automatically connect to an existing session or create one on launch.

What this means is that anyone running the Light Console app will automatically discover existing nodes and join the cluster, thus increasing the compute and data storage resources of the overall grid.

Using this approach means that no single device is responsible for the entire system. Instead, each node (console or onPC software) takes responsibility for a subsection of data and compute.

Distributed Data Storage

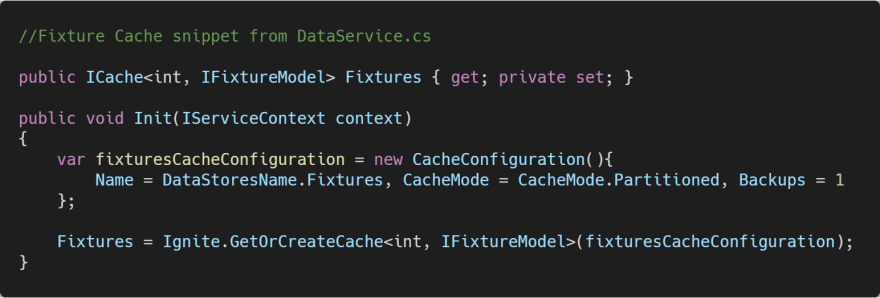

Data storage within Light Console uses Apache Ignite’s distributed key-value store, which you can think of as a distributed, partitioned hash map, with each console owning a portion of the overall data.

The above example demonstrates how Apache Ignite might distribute _Fixture_ objects stored within the FixtureCache. In actuality, I configure the cache with one backup, which ensures that a fixture never exists on only one instance of Light Console. This is how I mitigate data loss when a console (node) crashes or goes offline.

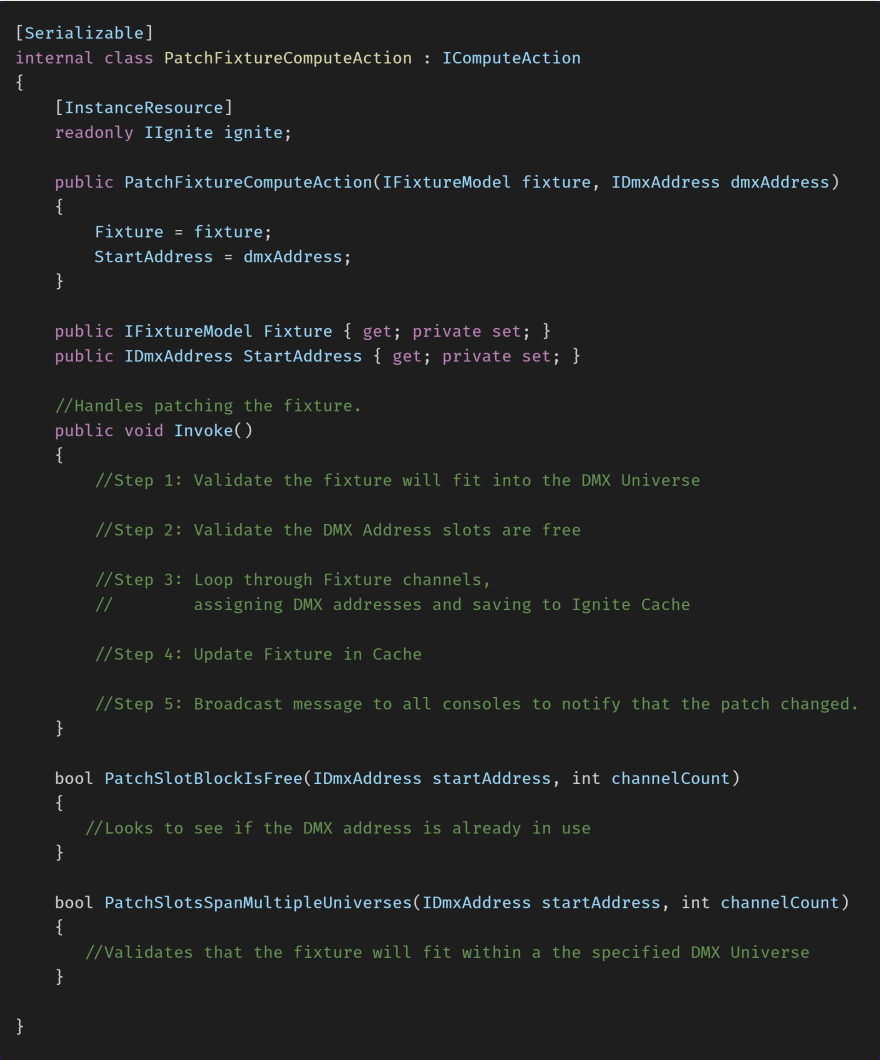
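To make the "partitioned hash map with one backup" idea concrete, here's a minimal, language-neutral sketch in plain Python. It is not Ignite's API or its real placement algorithm: the partition count, the round-robin placement and every name (`FixtureCache`, `owners`, `fail_node`) are assumptions made up for illustration, and rebalancing after a failure isn't modelled.

```python
import hashlib

PARTITIONS = 16  # assumed partition count, chosen only for this sketch


def partition_for(key):
    """Map a key to a partition with a stable hash (Python's hash() is salted per process)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % PARTITIONS


def owners(partition, nodes, backups=1):
    """Return [primary, backup, ...] for a partition using simple round-robin placement."""
    copies = min(backups + 1, len(nodes))
    return [nodes[(partition + i) % len(nodes)] for i in range(copies)]


class FixtureCache:
    """Toy key-value store where each node holds only its share of the keys."""

    def __init__(self, nodes, backups=1):
        self.nodes = list(nodes)
        self.backups = backups
        self.storage = {node: {} for node in self.nodes}  # node -> local copies

    def put(self, key, value):
        # Write the value to the primary and to every backup owner.
        for node in owners(partition_for(key), self.nodes, self.backups):
            self.storage[node][key] = value

    def get(self, key):
        # Read from the first owner that is still alive and holds the key.
        for node in owners(partition_for(key), self.nodes, self.backups):
            if key in self.storage.get(node, {}):
                return self.storage[node][key]
        raise KeyError(key)

    def fail_node(self, node):
        """Simulate a console crashing: its local copies disappear."""
        self.storage.pop(node, None)
```

With three consoles and one backup, every fixture lives on exactly two of them, so losing any single console still leaves a readable copy.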

Affinity Colocation

As the show data is distributed across instances of Light Console, it’s important to ensure that any computation that makes use of the data runs on an instance of Light Console that already has a copy of it. This is called colocation, and it significantly improves the performance of the application by reducing the need to move data across the network for computation. The simplest example of a colocated computation currently within the project is the Fixture Patching mechanism. This is the process of assigning DMX addresses to a fixture’s control channels (such as pan, tilt, colour wheel, etc.).

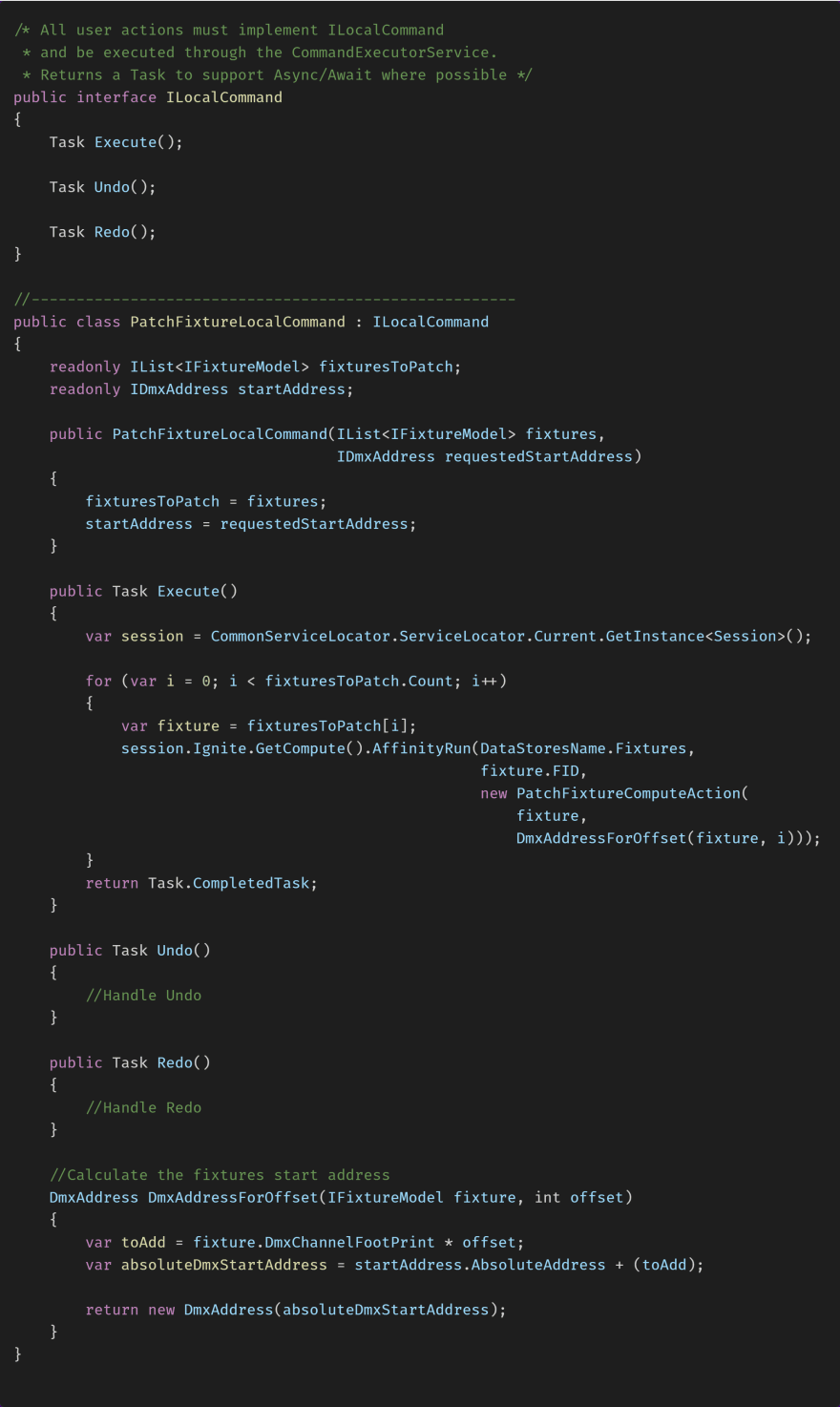
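As a rough illustration of colocation, the sketch below routes a patching job to whichever node owns the fixture's key, so the fixture itself never crosses the network. It's plain Python rather than Ignite's API: `Grid`, `Node`, `affinity_run` and `patch` are hypothetical names, and the fixture is modelled as a simple dictionary.

```python
import zlib


class Node:
    def __init__(self, name):
        self.name = name
        self.fixtures = {}  # this node's share of the fixture data
        self.executed = []  # keys of the jobs that ran on this node


class Grid:
    def __init__(self, names):
        self.nodes = [Node(name) for name in names]

    def primary(self, key):
        # crc32 is stable across processes, unlike Python's built-in hash().
        return self.nodes[zlib.crc32(key.encode("utf-8")) % len(self.nodes)]

    def put(self, key, fixture):
        self.primary(key).fixtures[key] = fixture

    def affinity_run(self, key, job):
        """Run the job on the node that already holds the data for `key`."""
        node = self.primary(key)
        node.executed.append(key)
        return job(node.fixtures[key])


def patch(fixture, universe, start_address):
    """Assign consecutive DMX addresses to the fixture's control channels."""
    last = start_address + len(fixture["channels"]) - 1
    if not 1 <= start_address <= last <= 512:
        raise ValueError("fixture does not fit in a 512-channel DMX universe")
    fixture["patch"] = {
        channel: (universe, start_address + offset)
        for offset, channel in enumerate(fixture["channels"])
    }
    return fixture["patch"]


grid = Grid(["console-a", "console-b"])
grid.put("fixture-1", {"channels": ["pan", "tilt", "colour wheel"]})
result = grid.affinity_run(
    "fixture-1", lambda fixture: patch(fixture, universe=1, start_address=101)
)
```

Only the key and the small job travel to the owning node; the result comes back as a mapping from channel name to (universe, address).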

The Compute Action is invoked using the _PatchFixtureLocalCommand_, which is defined below. The _PatchFixtureLocalCommand_ hides the implementation details of the command and implements the _ILocalCommand_ interface to support Undo/Redo functionality.

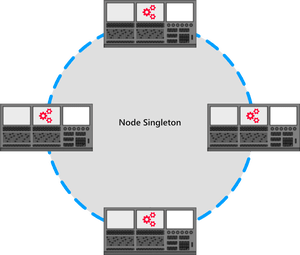

Distributed Services

Another feature of Apache Ignite that I’m using extensively is the Service Grid. Service Grid allows me to deploy services to the cluster that can be used by any of the consoles. The advantage of deploying services to the grid is that it provides continuous availability, load balancing and fault tolerance out of the box. I also have the ability to specify if a service should be a cluster-singleton, node-singleton, or key-affinity-singleton. Below you can see an example of a Node Singleton deployment, which would be deployed to each Light Console within the cluster.

Two of the most critical services currently found within Light Console are the _PlaybackEngine_ and the _SyncTick_ service. Both of these services are deployed as Cluster Singletons, which means that only one instance will be running on the cluster at any given time. If the instance of Light Console that is running the service goes offline, Apache Ignite will automatically redeploy the service to another console.
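The failover behaviour of a cluster singleton can be pictured with a small model. This is only a sketch in plain Python, not how Ignite implements it: the `Cluster` class is hypothetical, and picking the longest-running node as the new host is an assumption made for the example.

```python
class Cluster:
    """Toy model: each singleton service runs on exactly one live node."""

    def __init__(self):
        self.nodes = []       # live nodes, in join order
        self.singletons = {}  # service name -> node currently hosting it

    def join(self, node):
        self.nodes.append(node)
        self._reassign()

    def leave(self, node):
        self.nodes.remove(node)
        self._reassign()

    def deploy_cluster_singleton(self, name):
        self.singletons[name] = None
        self._reassign()

    def _reassign(self):
        # Move any service whose host is gone to the oldest remaining node
        # (an assumed policy for this sketch).
        for name, host in self.singletons.items():
            if host not in self.nodes:
                self.singletons[name] = self.nodes[0] if self.nodes else None
```

If `console-a` hosts the service and then leaves, the service moves to the next console without any other node needing to act.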

SyncTick Service

The SyncTick service is responsible for keeping all the currently running transitions (fades) and effects in sync with each other. This is achieved by broadcasting a tick event to all the nodes with a DateTime representing when the Tick occurred. If a transition or effect is running, upon receiving the Ticked message, it’ll calculate the next value for output and notify the PlaybackEngine. With this architecture, I’m able to speed up and slow down output data / calculations across the entire grid from a single location. This makes it possible for future versions of Light Console to support Timecode.

Messaging

In the SyncTick service, you’ll notice that the Tick event is sent using the _SendOrdered_ method of _IMessaging_. This method ensures that subscribers receive the Tick messages in the order that they’re sent.

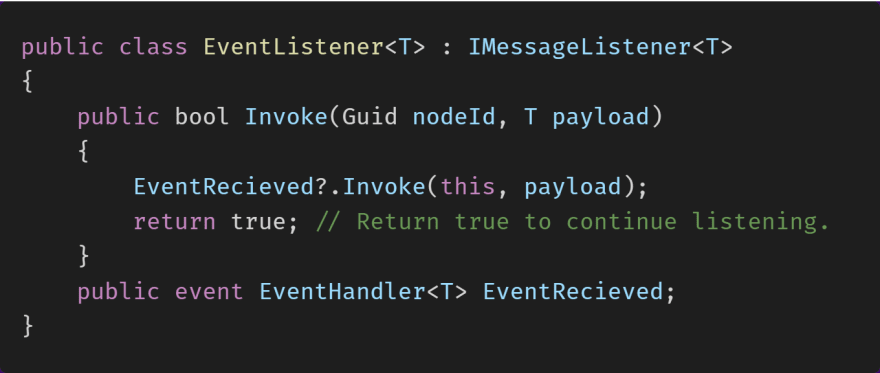

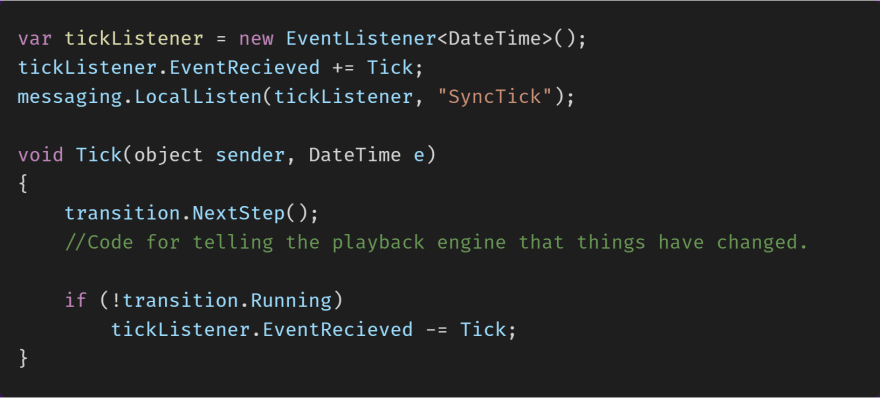
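To show why a timestamped tick is enough to keep fades in step, here's a minimal sketch of a transition that derives its output purely from the time carried by each tick. It's plain Python with hypothetical names (`Tick`, `Fade`, `on_tick`) and assumes a simple linear fade; the real transitions in Light Console may well work differently.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Tick:
    timestamp: datetime  # when the tick occurred, as stamped by the tick source


class Fade:
    """Linear fade whose output depends only on the tick's timestamp."""

    def __init__(self, start_value, end_value, started_at, duration):
        self.start_value = start_value
        self.end_value = end_value
        self.started_at = started_at
        self.duration = duration
        self.last_timestamp = None

    def on_tick(self, tick):
        # Ignore ticks that arrive out of order so the output never jumps backwards.
        if self.last_timestamp is not None and tick.timestamp <= self.last_timestamp:
            return None
        self.last_timestamp = tick.timestamp
        # Dividing two timedeltas yields a float: the fraction of the fade completed.
        progress = (tick.timestamp - self.started_at) / self.duration
        progress = min(max(progress, 0.0), 1.0)
        return round(self.start_value + (self.end_value - self.start_value) * progress)
```

Because every node computes from the same timestamps, two consoles running the same fade produce the same value for the same tick, and whoever produces the ticks controls how fast time appears to pass.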

To subscribe to the messages, I then use the _LocalListen_ method of _IMessaging_ to register a message listener object, which decides what to do when a message is received. To make my life easier, I ensure that I only ever call _LocalListen_, and I use the _EventListener_ class as defined below. The _EventListener_ class allows me to use generics for the payload and easily attach to the EventReceived event within the subscribing class.

Below you can see an example of a transition, which when started will create an EventListener and subscribe to the EventReceived event.

Wrapping Up

The above is just a small glimpse into how I’m using some of the features available within Apache Ignite to power a distributed lighting control system. Whilst not exhaustive, I hope it gives you an idea of what’s possible and how you might also use Apache Ignite in your own projects.

It’s incredibly easy to get started with, given it’s available as a NuGet package and has a rich set of documentation to help you understand its features and how to add them to your apps.
