This is a writeup I've moved here from my personal website. It was originally published on 4/13/19.

For the past couple of months, I’ve been heavily immersed in a graduate-level machine learning course as part of my degree program. I haven’t been able to post about the cool work I’ve been doing because that would enable others to cheat (tsk tsk), but now I’m working on my final project and I would like to informally share my experience training a machine learning classifier to identify vulnerabilities in binaries.

Before we get too deep into this, please be aware that this is an ongoing project and I do not currently know if this technique will work. This series is meant to provide some insight into the practical application of machine learning, regardless of whether or not the results are positive (that’s science!).

The machine learning techniques we covered in the course are considered classical machine learning. We covered supervised and unsupervised learning and implemented several well-known algorithms from scratch. I’m not going to teach you the basics of machine learning in this post; if you’d like to learn how to choose a classifier or how machine learning algorithms work, I recommend perusing this curated list of tutorials. Because the course focused on classical techniques, I chose to focus my attention there too and ignore the shinier neural network and deep learning options. My project sets out to show that a straightforward inference technique, Naive Bayes, can provide valuable information for a real problem: identifying vulnerabilities in binary code.

Binary code is the 0’s and 1’s that a program is made up of. If you’re a software developer, it’s the thing that your source code gets compiled into. If you’re a regular user, it’s the thing that you double-click to launch an application. Binary code is made up of machine instructions that your CPU executes. These instructions essentially have two parts: the opcode, which names the operation to perform, and any arguments that operation acts on. In the x86 instruction `mov eax, 1`, for example, `mov` is the opcode and `eax` and `1` are its arguments.

All of these opcodes and arguments are packed into your binary file one right after the next. Some instructions take up more space than others, and their arguments can also be of varying length. If you open up a binary in a text editor, it looks something like this:

Because the size of instructions and their arguments can vary, we need a special tool called a disassembler to decode that binary file for us so that we can view the opcodes. Disassemblers do something else that’s cool, too, which is show you how logic flows through your program by decomposing it into blocks called “basic blocks” and drawing arrows between those blocks where there are jumps or conditionals that link them.

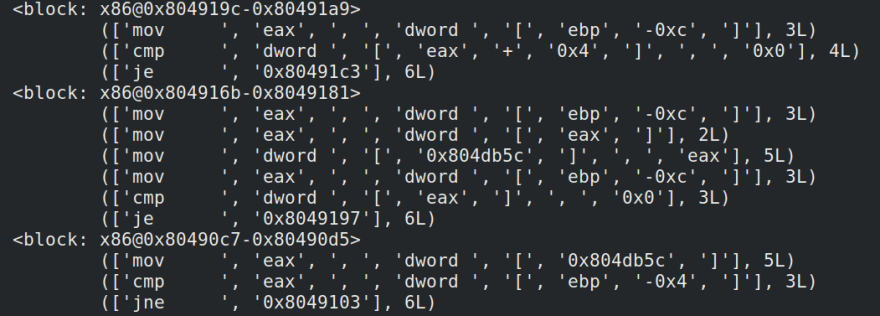

Some basic blocks from the “yolodex” CGC binary, as disassembled by Binary Ninja

My approach to discovering vulnerabilities relies on this block-level decomposition. I break each binary into its basic blocks, enumerate the opcodes present in each block, and treat each block’s opcode data as one observation. I believe this may work because it is the series of instructions a program executes that makes it vulnerable. Specific sequences, and their associated arguments, can leave a program open to buffer overflows, use-after-free, and many other vulnerabilities. At the block level, we have a fairly decent picture of which instructions are associated with one another in a sequence, and thus may be able to draw some conclusions about whether the program is vulnerable there.
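To make the featurization concrete, here is a minimal sketch of turning each block’s opcodes into one observation. It assumes a bag-of-opcodes encoding (a count per opcode); the post does not say whether counts or simple presence flags were used, and the opcode lists below are made up for illustration rather than taken from a real binary.

```python
from collections import Counter


def featurize_block(opcodes, vocabulary):
    """Turn one basic block's opcode list into a fixed-length count vector."""
    counts = Counter(opcodes)
    # Counter returns 0 for opcodes that never appear in this block.
    return [counts[op] for op in vocabulary]


# Hypothetical opcodes for two basic blocks (illustrative only).
blocks = [
    ["push", "mov", "sub", "call"],
    ["mov", "mov", "cmp", "jne"],
]

# The vocabulary is every opcode seen in any block, in a stable sorted order,
# so that column i means the same opcode in every row.
vocabulary = sorted({op for block in blocks for op in block})
features = [featurize_block(block, vocabulary) for block in blocks]

for row in features:
    print(dict(zip(vocabulary, row)))
```

A count vector like this throws away the order of instructions within a block, which is the kind of simplification a Naive Bayes model makes anyway.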

The different kinds of vulnerabilities that can crop up in programs are well enumerated by the Common Weakness Enumeration (CWE). My approach attempts to classify which of these CWEs a given binary may manifest. Of course, to train a machine learning classifier to recognize vulnerabilities, I have to have some kind of training data set. I am using DARPA’s Cyber Grand Challenge set of Challenge Binaries as the basis for my training data. These binaries were written to contain vulnerabilities, and have readme files that describe the vulnerabilities in detail, including which CWEs they fall under. The first step on the road to creating my classifier is featurizing the binaries as I described above.

As I said, a regular text editor won’t do for reading a binary file, so I needed to choose a disassembler to break the challenge binaries out into their basic blocks. I chose to use Binary Ninja because it has a very easy-to-use Python API, and it’s hobbyist-level cheap (for comparison, the industry-standard disassembler is IDA Pro, which they will sell to you for roughly an arm, and continue to pick off your fingers and toes with renewal fees). I began by writing a quick script to go through a single binary and print out the opcodes it encountered in each block, just to validate that I was able to acquire the data I wanted.

The output of a Python script using Binary Ninja’s API to print basic blocks
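A script along those lines could look roughly like the sketch below. This is not the original script. It assumes a recent Binary Ninja release where `binaryninja.load` opens a file, that iterating a basic block yields a `(tokens, length)` pair per instruction, and that the first non-blank token is the mnemonic. The function names `mnemonic` and `print_block_opcodes` are hypothetical, and running it outside the GUI may require a license tier that allows headless use.

```python
def mnemonic(tokens):
    """Return the text of the first non-blank token (assumed to be the mnemonic)."""
    for token in tokens:
        text = token.text.strip()
        if text:
            return text
    return None


def print_block_opcodes(path):
    """Print the opcodes found in every basic block of the binary at `path`."""
    # Imported here so mnemonic() stays usable without Binary Ninja installed.
    import binaryninja

    # Assumption: binaryninja.load is available (recent releases); older
    # releases opened files through a different call.
    with binaryninja.load(path) as bv:
        for func in bv.functions:
            for block in func.basic_blocks:
                # Assumption: each item yielded by a block is (tokens, length).
                opcodes = [mnemonic(tokens) for tokens, _length in block]
                print(f"{func.name} @ {block.start:#x}: {opcodes}")
```

Calling `print_block_opcodes` with the path to one challenge binary would then print one line per basic block.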

Awesome. Step 1 complete. But none of this data is labeled. I want to label my basic blocks with the CWE that the binary contains. The CWEs are all described in a bunch of README.md files with no standard format. I briefly considered writing a script to pull the CWE labels out of the READMEs, but decided that the amount of time I would spend debugging edge cases and validating that the script actually grabbed the correct CWE numbers would be at least as much as just doing it by hand. So away I went, plugging CWE numbers into a spreadsheet to create a CSV mapping binaries to their CWEs.

Spreadsheet of CGC binaries labeled with their CWEs

This took me some hours, most of which I spent watching YouTube (bigclive’s teardowns are always a good way to spend some time). Rote tasks are good opportunities to learn a thing or two. Or to watch a show; pick your poison.

The first thing I noticed going through my labels is that some binaries are labeled with more than one CWE. This makes sense, but wasn’t something I had considered in my original approach. To simplify the problem, I made the (somewhat bad) decision to discard samples that are labeled with more than one CWE. There are better ways to handle this problem, but I only have three weekends to train, evaluate, and present my classifier, so I unfortunately do need to cut some corners. I calculated some basic statistics on my dataset to gain some insight into its composition.

Number of binaries: 108

Number of unique CWEs: 27

Distribution of binaries with respect to CWEs
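The filtering and counting steps can be sketched as follows. The CSV layout (a `binary` column and a `cwes` column with semicolon-separated numbers) is an assumption about how the spreadsheet might be exported, and the four rows are made-up placeholders, not entries from the real dataset.

```python
import csv
import io
from collections import Counter

# Hypothetical stand-in for the hand-built spreadsheet. Names and labels
# are invented for illustration.
LABELS_CSV = """binary,cwes
example_a,121
example_b,122
example_c,121;476
example_d,121
"""


def load_single_label(csv_text):
    """Map binary name -> CWE, skipping binaries labeled with more than one CWE."""
    labels = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        cwes = [c.strip() for c in row["cwes"].split(";") if c.strip()]
        if len(cwes) == 1:
            labels[row["binary"]] = int(cwes[0])
    return labels


labels = load_single_label(LABELS_CSV)
distribution = Counter(labels.values())

print("Number of binaries:", len(labels))
print("Number of unique CWEs:", len(distribution))
for cwe, count in distribution.most_common():
    # The share of each class is what a skewed dataset distorts.
    print(f"CWE-{cwe}: {count} ({count / len(labels):.0%})")
```

Printing each class’s share alongside its count makes any skew toward a few CWEs visible before training starts.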

Most of my samples are for CWEs 121 and 122. It’s important to note that this does not necessarily mean that my dataset is unrealistically biased. In fact, CWEs 121 and 122 are “Stack-based Buffer Overflow” and “Heap-based Buffer Overflow,” which are two very common vulnerabilities. That said, it’s worth being mindful of this skewing, as it will impact how our classifier trains.

Putting it all together, we now have a dataset where each row describes the instructions in one basic block, labeled with the CWE represented by the binary that the basic block was taken from. We’re ready to try to train a classifier.

Sample rows from the featurized data CSV
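Assembling such a file can be sketched like this, assuming per-block opcode lists and a binary-to-CWE mapping are already in hand. The column layout (one count column per opcode plus a trailing `cwe` column), the function name `build_rows`, and all of the data are hypothetical, not the actual CSV schema.

```python
import csv
import io


def build_rows(blocks_by_binary, labels, vocabulary):
    """Yield one row per basic block: opcode counts followed by the binary's CWE."""
    for binary, blocks in blocks_by_binary.items():
        if binary not in labels:  # e.g. dropped for having more than one CWE
            continue
        for opcodes in blocks:
            yield [opcodes.count(op) for op in vocabulary] + [labels[binary]]


# Hypothetical inputs (illustrative only).
blocks_by_binary = {
    "example_a": [["push", "mov", "call"], ["mov", "ret"]],
    "example_c": [["mov", "jmp"]],
}
labels = {"example_a": 121}
vocabulary = ["call", "jmp", "mov", "push", "ret"]

out = io.StringIO()  # stands in for an open CSV file
writer = csv.writer(out)
writer.writerow(vocabulary + ["cwe"])
rows = list(build_rows(blocks_by_binary, labels, vocabulary))
writer.writerows(rows)
print(out.getvalue())
```

Note that every block inherits its binary’s label, including blocks that have nothing to do with the vulnerability, so the labels are noisy at the block level.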

Next up: part 2
