Search Engine Optimisation is usually a skill reserved for those in digital marketing, but I think that as a web developer, competency in SEO is a valuable asset that helps you differentiate yourself and show a bit more versatility.

What is SEO?

SEO (Search Engine Optimization) is the practice of optimizing a website or webpage to increase the quantity and quality of its traffic from a search engine’s organic results.[1]

There is a technical side to SEO that web developers should understand at a basic level: optimising the website and server so that search engines can easily crawl, index and then rank the site.

If you create an amazing site with great content but search engines struggle to index it, all that effort will be in vain.

Key habits that should be followed

- Make sure that all images have alt attributes (often called alt tags), as they give search engines better context about what the image shows, allowing them to index it properly.

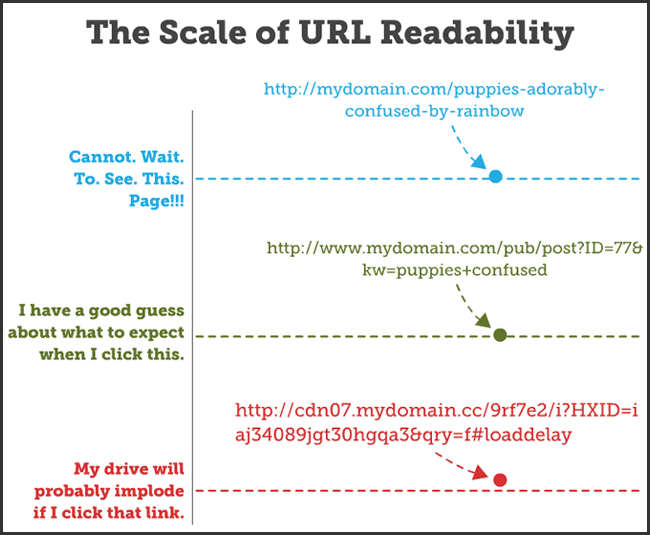
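If you want to check a page for images without alt text, a quick script can flag them. The sketch below is TypeScript for Node.js and uses a simple regular expression rather than a real HTML parser, so treat it as an illustration that assumes simple, well-formed markup; the function name findImagesMissingAlt is hypothetical. An empty alt="" counts as present, which is the right choice for purely decorative images.

```typescript
// Naive, regex-based check: fine for simple markup, but a real audit
// should use an HTML parser (attributes like data-alt will fool it).
function findImagesMissingAlt(html: string): string[] {
  // Grab every <img ...> tag, case-insensitively.
  const imgTags = html.match(/<img\b[^>]*>/gi) ?? [];
  // Keep only the tags that have no alt attribute at all.
  return imgTags.filter((tag) => !/\balt\s*=/i.test(tag));
}

const page = `
  <img src="team-photo.webp" alt="Our team at the office">
  <img src="logo.svg">
`;
console.log(findImagesMissingAlt(page)); // [ '<img src="logo.svg">' ]
```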

- When naming URLs, separate words with hyphens ('-') rather than underscores ('_'), as search engines read hyphens as spaces.

- Try to keep domain names as short as possible while keeping them coherent; avoid domains longer than 15 characters so that they stay readable for humans.[2]

- Images should have unique file names that describe their content, and you should try to avoid duplicates of the same image, as this will split the score for that image between each copy.

- 'Lazy-loading' images is a technique that defers loading an image until it enters the viewport, instead of loading every image upfront. Modern browsers support this natively through the loading="lazy" attribute on images.

- Try to prioritise SVGs and WebPs over PNGs and JPEGs to improve the performance of the page.

WebP lossless images are 26% smaller in size compared to PNGs. WebP lossy images are 25-34% smaller than comparable JPEG images at equivalent SSIM quality index.[3]
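Hyphenated URLs are easiest to guarantee if slugs are generated rather than typed by hand. A minimal TypeScript sketch, assuming ASCII-only slugs are acceptable for your site (the slugify name is illustrative, not from any particular library):

```typescript
// Turn a page title into a URL slug that uses hyphens as word separators.
function slugify(title: string): string {
  return title
    .normalize("NFKD")               // split accented letters into letter + accent
    .replace(/[\u0300-\u036f]/g, "") // drop the combining accents
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-")     // spaces, underscores, punctuation -> one hyphen
    .replace(/^-+|-+$/g, "");        // trim leading/trailing hyphens
}

console.log(slugify("10 Tips for Café_Owners!")); // 10-tips-for-cafe-owners
```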

What is Lighthouse?

Lighthouse is an open-source, automated tool for improving the quality of web pages. You can run it against any web page, public or requiring authentication. It has audits for performance, accessibility, progressive web apps, SEO and more.[4]

Google uses page speed, measured through real-user Core Web Vitals, as a ranking signal, and Lighthouse measures several of the same metrics in a lab setting, so a higher Lighthouse score generally means your page is better placed to rank. With the sheer number of web pages available, Google's crawlers can only spend a limited amount of time on your site before moving on to the next one. Therefore a fast page with a high score is a good way to stand out among the rest.

You can use Measure to test your page in a lab environment and PageSpeed Insights for field performance.
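PageSpeed Insights also has an HTTP API (v5), which is handy for checking scores from a script or CI job. The sketch below builds a request URL and reads the Lighthouse performance score from the JSON response; it assumes Node.js 18 or later for the global fetch, and the helper names are hypothetical. Only the few response fields used here are typed, and for regular use Google expects an API key passed through the key parameter.

```typescript
// Endpoint of the PageSpeed Insights API (v5).
const PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed";

function buildPsiUrl(pageUrl: string, strategy: "MOBILE" | "DESKTOP" = "MOBILE"): string {
  // URLSearchParams takes care of encoding the page URL.
  const params = new URLSearchParams({ url: pageUrl, strategy, category: "PERFORMANCE" });
  return `${PSI_ENDPOINT}?${params.toString()}`;
}

// Minimal shape of the part of the response we read; the real response is much larger.
interface PsiResponse {
  lighthouseResult?: { categories?: { performance?: { score?: number | null } } };
}

// Lighthouse reports category scores from 0 to 1; convert to the familiar 0-100 scale.
function performanceScore(response: PsiResponse): number | null {
  const score = response.lighthouseResult?.categories?.performance?.score;
  return typeof score === "number" ? Math.round(score * 100) : null;
}

// Needs network access; not called here.
async function checkPage(pageUrl: string): Promise<number | null> {
  const res = await fetch(buildPsiUrl(pageUrl));
  if (!res.ok) throw new Error(`PageSpeed Insights request failed: ${res.status}`);
  return performanceScore((await res.json()) as PsiResponse);
}
```

For example, checkPage("https://your-website.com") resolves to a number from 0 to 100, or null if the performance category is missing from the response.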

Head component

The head of a page controls how your page appears in search engine results and social media posts.

The <title> element for your homepage can list the name of your website or business, and could include other important information like the physical location of the business or a few of its main focuses or offerings. Keep the title brief and unique to each page.

A page's meta description tag gives Google and other search engines a summary of what the page is about. Meta description tags are important because Google might use them as snippets for your pages in search engine results.

Adding meta description tags to each of your pages is always a good practice in case Google cannot find a good selection of text to use in the snippet.[5]

Meta descriptions should accurately summarise the content of the page while also being unique.

Open Graph meta properties control the title, description and image that appear when a link to the page is shared on social media (for example, on Facebook):

<meta property="og:title" content="Web page title">

<meta property="og:url" content="https://your-website.com/the-current-page">

<meta property="og:description" content="Web page description">

<meta property="og:type" content="website">

<meta property="og:image" content="https://your-website.com/your-image.png">
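Since every page needs its own title, description and Open Graph tags, it can help to generate them from one place. A minimal TypeScript sketch, assuming your pages are rendered to HTML strings at build or request time; the PageMeta shape and function names are hypothetical and the example values are placeholders:

```typescript
// Hypothetical page metadata shape; adapt the fields to your own site.
interface PageMeta {
  title: string;
  description: string;
  url: string;   // absolute URL of the page
  image: string; // absolute URL of the share image
}

// Escape characters that would break out of an HTML attribute or text node.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;") // must run first so later entities are not double-escaped
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

function renderHeadTags(meta: PageMeta): string {
  const title = escapeHtml(meta.title);
  const description = escapeHtml(meta.description);
  return [
    `<title>${title}</title>`,
    `<meta name="description" content="${description}">`,
    `<meta property="og:title" content="${title}">`,
    `<meta property="og:description" content="${description}">`,
    `<meta property="og:type" content="website">`,
    `<meta property="og:url" content="${escapeHtml(meta.url)}">`,
    `<meta property="og:image" content="${escapeHtml(meta.image)}">`,
  ].join("\n");
}

console.log(
  renderHeadTags({
    title: "Handmade Pottery in Bristol | Clay & Co",
    description: "Hand-thrown mugs, bowls and vases made in our studio.",
    url: "https://your-website.com/",
    image: "https://your-website.com/your-image.png",
  }),
);
```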

Hosting

- Your choice of web hosting provider can have a huge impact on your site, as load time is an important ranking factor. Earlier versions of PageSpeed Insights based their Speed Score on two speed metrics, First Contentful Paint (FCP) and DOMContentLoaded (DCL); current versions report the Lighthouse performance score, which combines several metrics including FCP.

- Location is another factor: choose a web hosting service with servers geographically close to your operational area so that your site gets a naturally fast server response time.

- A reliable host is important to keep downtime to a minimum. Downtime has an immediate effect, as potential visitors can no longer access the website. In the long term, downtime can increase your bounce rate, which can take a long time to repair.

As web pages become more complex, referencing resources from numerous domains, DNS lookups can become a significant bottleneck in the browsing experience. Whenever a client needs to query a DNS resolver over the network, the latency introduced can be significant, depending on the proximity and number of name servers the resolver has to query.[6]
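To get a feel for how much DNS resolution adds, you can time lookups for the third-party domains your page references. This Node.js sketch uses dns.promises.lookup, which goes through the operating system's resolver, so a repeat lookup may be answered from a local cache and look faster than a visitor's first one; the domain list is only an example.

```typescript
import { promises as dns } from "node:dns";
import { performance } from "node:perf_hooks";

// Time how long the OS resolver takes to turn a hostname into an IP address.
async function timeDnsLookup(hostname: string): Promise<{ address: string; ms: number }> {
  const start = performance.now();
  const { address } = await dns.lookup(hostname);
  return { address, ms: performance.now() - start };
}

// Example third-party domains a page might reference.
const domains = ["fonts.googleapis.com", "cdn.jsdelivr.net", "www.google-analytics.com"];

// Looks the domains up one after another, like a page that discovers them in sequence.
// Requires network access; run it with main().catch(console.error).
async function main(): Promise<void> {
  for (const host of domains) {
    const { address, ms } = await timeDnsLookup(host);
    console.log(`${host} -> ${address} in ${ms.toFixed(1)} ms`);
  }
}
```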

For example, I used Namecheap to purchase a domain and hosting. Their hosting performed poorly: I optimised my site's code as much as I could but never got past 60 on Lighthouse's performance score. However, after I switched my DNS name servers to Cloudflare, my site hit 92.

What is a CDN?

A content delivery network (CDN) is a group of geographically distributed servers that speed up the delivery of web content by bringing it closer to where users are. Data centres across the globe use caching, a process that temporarily stores copies of files, so that you can access internet content from a web-enabled device or browser more quickly through a server near you. CDNs cache content like web pages, images, and video in proxy servers near to your physical location.[7]

Essentially, copies of your site are stored at multiple, geographically diverse data centres so that users have faster and more reliable access to your site.

A CDN can be used to improve site speed and content availability, reduce bandwidth costs and improve site security by mitigating DDoS attacks.
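CDNs usually decide what to cache, and for how long, from the Cache-Control header your origin server sends (most providers also let you override this in their dashboard). The TypeScript sketch below picks a header per file type; it assumes your build tool adds a content hash to static asset file names, and the lifetimes are illustrative rather than recommended values. s-maxage overrides max-age for shared caches such as CDN edges, so HTML can stay at the edge for a few minutes while browsers always check back.

```typescript
// Pick a Cache-Control header for a response based on the kind of file.
// Assumes hashed asset names (e.g. app.3f9a1c.js): a changed file gets a new URL,
// so the old one can safely be cached for a long time.
function cacheControlFor(path: string): string {
  if (/\.[0-9a-f]{6,}\.(js|css|woff2|webp|png|jpg|svg)$/i.test(path)) {
    // One year in browsers and CDN edges; "immutable" tells browsers not to revalidate.
    return "public, max-age=31536000, immutable";
  }
  if (path.endsWith(".html") || path.endsWith("/")) {
    // Browsers treat the page as stale immediately and check back with the server;
    // shared caches (CDN edges) may keep a copy for 5 minutes.
    return "public, max-age=0, s-maxage=300";
  }
  // Everything else: a one-hour lifetime for browsers and CDNs alike.
  return "public, max-age=3600";
}

console.log(cacheControlFor("/assets/app.3f9a1c.js"));
console.log(cacheControlFor("/blog/"));
```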

Minimising JS and CSS

Minifying JavaScript and CSS files can help your website load faster. Minification is the process of removing unnecessary characters from code without changing the way that code works. These unnecessary characters are usually things like white-space characters, new line breaks, comments and block delimiters.

Minifying code can reduce the file size by 30-40%, sometimes even as much as 50%. Concatenating files also helps reduce the load on your server and network: combining multiple files into one lets a server send the same data over fewer requests.
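To see what minification actually does, here is a deliberately naive CSS minifier in TypeScript that performs the steps described above: stripping comments, collapsing white space and removing optional characters. It does not understand strings, url() values or other edge cases, so it is only an illustration; use an established minifier for real projects.

```typescript
// Deliberately naive: breaks on comments or special characters inside strings.
function minifyCss(css: string): string {
  return css
    .replace(/\/\*[\s\S]*?\*\//g, "") // remove /* comments */
    .replace(/\s+/g, " ")             // collapse runs of white space and new lines
    .replace(/\s*([{};,])\s*/g, "$1") // drop spaces around braces, semicolons and commas
    .replace(/:\s+/g, ":")            // drop spaces after colons
    .replace(/;}/g, "}")              // the last semicolon in a block is optional
    .trim();
}

const input = `/* Buttons */
.btn {
  color: #fff;
  padding: 0.5rem 1rem;
}`;
console.log(minifyCss(input)); // .btn{color:#fff;padding:0.5rem 1rem}
```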

To minimise your CSS and JS, you could use a tool like Minifier.

For more advanced minimisation, you can use PurgeCSS, a tool that removes unused CSS. If you use a CSS framework like Tailwind or Bootstrap, chances are that you only use a small subset of the framework, and a lot of unnecessary CSS will otherwise be included.
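The core idea behind PurgeCSS is to compare the selectors in your stylesheets with the class names that actually appear in your content. A toy TypeScript version of that idea, assuming class names only appear in double-quoted class attributes (PurgeCSS itself supports custom extractors, safelists and many more cases); findUnusedClasses is a hypothetical name:

```typescript
// Report CSS class selectors that never appear in the given HTML.
function findUnusedClasses(css: string, html: string): string[] {
  // Collect every class name used in class="..." attributes.
  const used = new Set<string>();
  for (const match of html.matchAll(/class\s*=\s*"([^"]*)"/gi)) {
    for (const name of match[1].split(/\s+/)) {
      if (name) used.add(name);
    }
  }

  // Blank out innermost declaration blocks so values like 0.5em or bg.png
  // are not mistaken for class selectors (selectors inside @media survive).
  const selectorsOnly = css.replace(/\{[^{}]*\}/g, "{}");

  // Collect every .class-name that appears in a selector.
  const defined = new Set<string>();
  for (const match of selectorsOnly.matchAll(/\.(-?[_a-zA-Z][\w-]*)/g)) {
    defined.add(match[1]);
  }

  return Array.from(defined).filter((name) => !used.has(name));
}

const stylesheet = ".btn { color: red; } .card { padding: 0.5em; } .modal { display: none; }";
const markup = '<div class="card"><a class="btn primary">Go</a></div>';
console.log(findUnusedClasses(stylesheet, markup)); // [ 'modal' ]
```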

If you are a React developer and care about performance, Preact is a lightweight alternative to React that focuses on performance while keeping the same modern API. And if that's not enough, there's an extensive compatibility layer called preact/compat that lets your existing React code be used in Preact. Preact's bundle size is around 3kB (gzipped), compared to around 128kB for the minified react + react-dom bundle.
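If you build with Vite, switching an existing React codebase to Preact is mostly a matter of aliasing React imports to preact/compat. The object below follows the alias setup described in Preact's documentation; treat it as a sketch, since in a real vite.config.ts you would normally wrap it in defineConfig from "vite", and the @preact/preset-vite plugin can configure these aliases for you.

```typescript
// vite.config.ts (sketch): route React imports to Preact's compatibility layer.
const config = {
  resolve: {
    alias: {
      react: "preact/compat",
      "react-dom/test-utils": "preact/test-utils",
      "react-dom": "preact/compat", // must come after react-dom/test-utils
      "react/jsx-runtime": "preact/jsx-runtime",
    },
  },
};

export default config;
```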

You can also use Gzip, a file compression application, to reduce the size of CSS, HTML and JavaScript files that are larger than 150 bytes.
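In practice your web server or CDN usually applies Gzip on the fly, but Node's built-in zlib module makes it easy to see the effect. This sketch compresses a string only when it is larger than the 150-byte threshold mentioned above, because tiny files can grow once gzip's header and trailer are added; the maybeGzip name is hypothetical.

```typescript
import { gzipSync } from "node:zlib";

// Threshold from the article: below this, compression is unlikely to pay off.
const MIN_BYTES = 150;

function maybeGzip(content: string): { body: Buffer; encoding: "gzip" | "identity" } {
  const raw = Buffer.from(content, "utf8");
  if (raw.length <= MIN_BYTES) {
    // Send small files as-is ("identity" means no content encoding).
    return { body: raw, encoding: "identity" };
  }
  return { body: gzipSync(raw), encoding: "gzip" };
}

// Repetitive text such as CSS compresses very well.
const css = ".card { padding: 1rem; margin: 0 auto; }\n".repeat(50);
const { body, encoding } = maybeGzip(css);
console.log(`${Buffer.byteLength(css)} bytes -> ${body.length} bytes (${encoding})`);
```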

Performance Analysis

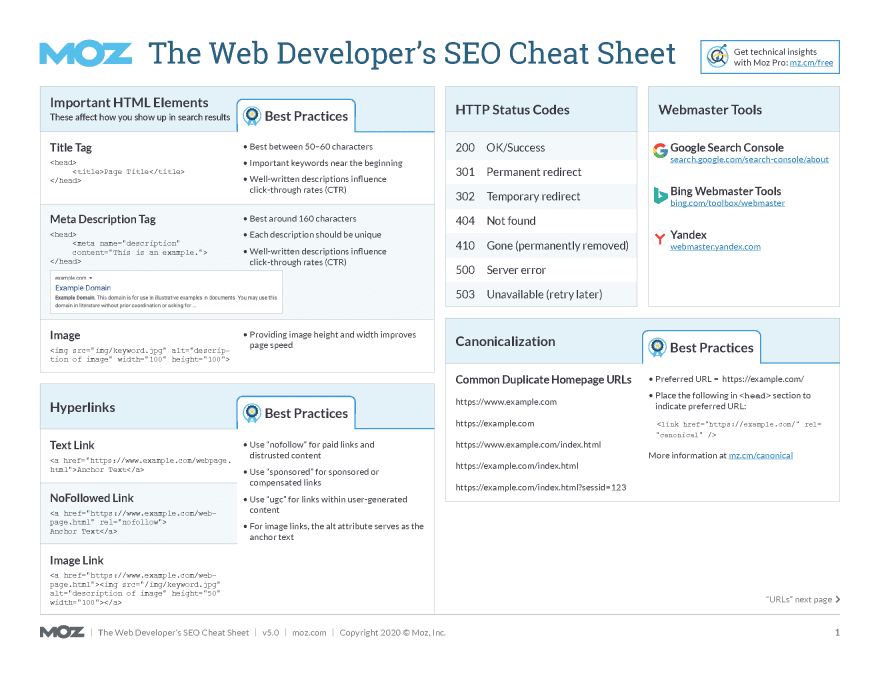

To analyse a page's performance on search engines, tools such as Google's Search Console and Bing Webmaster Tools can be utilised.

With these tools website owners can:

- See which parts of a site had crawling problems

- Test and submit sitemaps

- Analyze or generate robots.txt files

- Remove URLs already crawled

- Specify your preferred domain

- Identify issues with title and description meta tags

- Understand the top searches used to reach a site

- Get a glimpse at how search engines see pages

- Receive notifications of quality guidelines violations and request a site reconsideration
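Search Console and Bing Webmaster Tools both accept XML sitemaps that follow the sitemaps.org protocol. If your site has no sitemap generator, a minimal TypeScript sketch like the one below can produce one; the SitemapEntry shape and function names are hypothetical, and URLs must be absolute and XML-escaped.

```typescript
// Hypothetical page record; lastmod is optional in the sitemap protocol.
interface SitemapEntry {
  loc: string;      // absolute URL
  lastmod?: string; // W3C datetime, e.g. "2024-01-31"
}

// Sitemaps must escape these characters as XML entities.
function escapeXml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

function buildSitemap(entries: SitemapEntry[]): string {
  const urls = entries.map((e) => {
    const lastmod = e.lastmod ? `<lastmod>${escapeXml(e.lastmod)}</lastmod>` : "";
    return `  <url><loc>${escapeXml(e.loc)}</loc>${lastmod}</url>`;
  });
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...urls,
    "</urlset>",
  ].join("\n");
}

console.log(
  buildSitemap([
    { loc: "https://your-website.com/", lastmod: "2024-01-31" },
    { loc: "https://your-website.com/blog?page=2&sort=new" },
  ]),
);
```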

More SEO Resources

Google SEO Starter Guide

The Indispensable Guide to SEO for Web Developers

The Web Developer's SEO Cheat Sheet
