Originally published at:

https://blog.deversity.com/2019/10/scaling-postgres-db-for-better.html

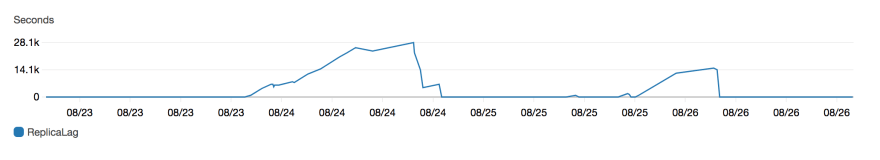

Not long ago we came across an issue with database scalability when working on a project for a client with expertise in data-driven management solutions. It threw both business growth and the project’s future into question. With a total size of around 3TB and number of rows that strive to 3 billions, we reached the limit of what a single-node Postgres database instance could do (in an acceptable manner). Read replicas were not effective because the application is write-intensive, and a significant lag was in evidence between the main node and replicas.

We tried various optimization techniques including ones from Postgres and in-memory processing and calculations, but we were still pushing the boundaries of scalability. As shown in the graph below, CPU was hit hard several times during the month.

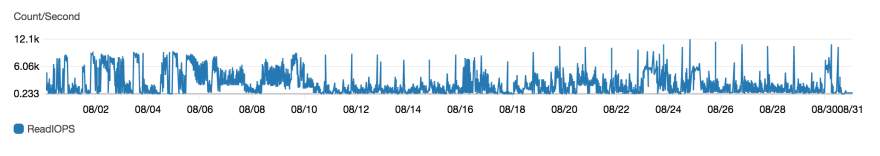

The average number of disk read operations per second shows that out working set was almost completely in memory on a daily basis. Even we tried with scaling up the DB instance class to a class with more RAM, it didn’t result in a dramatic drop in ReadIOPS.

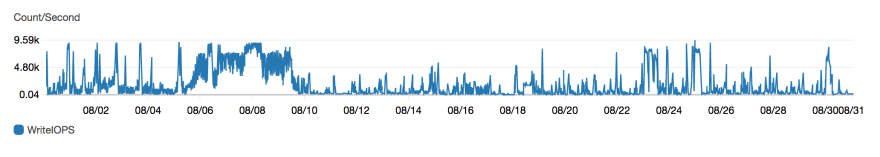

The average number of disk write operations per second also showed that our write-intensive processes put a really hard load on the DB, especially in the first couple of days of each month, which are always heavy on traffic.

In order to avoid stalling business growth for our client we needed to find a solution that would scale-out our database and improve querying performances. We were short on time and couldn’t allow developers to break from implementing new features and maintaining the project in general.

Attempt #1: DBA experts

Our first instinct was to consult a DBA expert on the matter and see whether particular configuration might alleviate our troubles. After consulting two seasoned PostgreSQL experts, all they could recommend was that we build our own custom Postgres cluster, to partition data on particular dimension and maintain it ourselves. Since our experience of introducing more parts to the system which requires maintenance from our team tells us that such action only leads to a technical debt, a fully-managed solution was more desirable in terms of reducing workload, and stress, and increasing maintainability.

Attempt #2: Amazon Aurora

Since the system was using AWS Relational Database Service (RDS), the next logical step was to try Aurora, a service from AWS. Aurora is a Postgres compatible database, that’s intended for high scalability. Unlike native Postgres, Aurora solves the problem of creating read replicas by replicating a storage layer through the AWS EBS infrastructure. It sounded like it could solve our problem with the replication lag, so we set out to try to migrate our existing native Postgres to AWS Aurora. We did it by creating an Aurora Read Replica out of our RDS Postgres instance. When the replica lag between the Postgres instance and the Aurora Read Replica went to zero, we stopped the replication. At that point, we promoted the Aurora Read Replica to the standalone Aurora Postgres cluster for reading and writing. Sadly, this migration gave us only a marginal performance increase and failed to provide a solution that was satisfactory in terms of performance.

Aurora uses distributed storage layer that is virtualized and sits on a proprietary virtualized storage system backed up by SSD, which is how they allow for greater speeds and greater number of IOPS. With Aurora in place we were able to execute a greater number of read and write operations per second, but heavy concurrent queries and transactions, even though faster, failed to provide results in an acceptable time frame. The reason behind this is most probably the fact that Aurora promises 3x performance improvement when it comes to Postgres, but what we needed was apparently at the greater order of magnitude.

Third time's a charm - Citus Cloud

Our quest for a managed solution continued, and soon afterwards, we stumbled upon Citus Cloud - a fully-managed distributed database as a service. As they state on their official website, Citus is an extension to Postgres that distributes your data and queries across nodes, so your database can scale and your queries are fast. It was important to us that the application would still be running on Postgres in order to reduce infrastructural changes and the need to re-architect everything from scratch. As stated above, Citus transforms Postgres into a distributed database by intelligently distributing your data and queries across multiple nodes. It was everything that DBA experts advised, but with the added benefit of being a managed solution.

Most SaaS applications already have a natural dimension on which to distribute data across nodes - dimensions such as tenant, customer, account etc. Our data model was potentially a good fit for a distributed database like Citus. Even though we had no previous experience of sharding or distributed databases, Citus seemed to be the best and fastest way to protect our development team throughput, and to scale out our database.

I’ll be honest with you - migration wasn’t entirely painless. Migrating an underlying infrastructure isn’t the most exciting job, especially when you know that premature changes could have a significant impact on the client’s business. There were a lot of things to do. We had to adjust the schema and queries all across the application. Even though Citus is a Postgres extension, it’s not a drop-in, magical replacement. Ok, it might be a bit magical.

Migrating our Postgres instance to Citus

Excellent documentation exists on the official Citus website, where they describe all the steps needed for migrating an existing application. Bellow are all the major steps we’ve taken during transition period.

Identifying a distribution strategy

The first step was to choose the distribution key and identify table types - distributed, referenced or local. In Citus, a distributed table is one that is spread across multiple server nodes. They divide each distributed table into multiple logical shards based on the distribution key (column). The best practice is to partition distributed tables by a common tenant_id column. This results in having all tenant’s data in one shard. Referenced tables are ones that are placed on all nodes in a Citus cluster and which you can then join against. All the tables that don’t need to be sharded because they’re small and not joined against, were left as standard Postgres tables.

Preparing source tables for migration

The second step was to modify the data structure for the application’s existing database by adding distribution keys and backfilling newly created columns. It was necessary to do this in small batches with time allotted for each, because updating missing values in those newly created columns led to an unacceptable load on the database.

Updating our Ruby on Rails app

The last step was to update the application code and queries to deal with the schema changes. Our client’s application is based on Ruby on Rails and what made our migrating process so smooth was the activerecord-multi-tenant gem which enabled us to scale-out by following the multi-tenant data model. This Ruby library is supported by the Citus core team and it evolved from their experience with other customers. You can find more details about this library here.

The following example describes Customer as the tenant model, and Payment model states that the payments table needs to have a customer_id column that references the customer to whom the payment belongs.

class Payment < ActiveRecord::Base

multi_tenant :customer

# ...

end

By adding multi_tenant :customer annotations to models, the library automatically takes care of including distribution key (customer_id) with all queries. In order for that to work, tenant always needs to be specified. One way is wrapping code in a block like this:

customer = Customer.find(session[:customer_id])

# ...

MultiTenant.with(customer) do

payment = Payment.find(params[:payment_id])

payment.update! updated_at: Time.current

end

We changed more than 100 files and modified 2000 lines of code before we were ready to use a distributed multi-tenant database like Citus. The most concerning issues were to find all places where Active Record generates queries and make sure that tenant_id is included wherever we query distributed tables. All model classes that reflect distributed tables had to be refactored in order to support multi-tenancy. Migrations meant for backfilling distributed tables took a significant amount of our time since we didn’t have tenant_id in all the tables that ended up as distributed ones.

Citus requires that primary keys contain the distribution column, so we had to modify primary keys of distributed tables and make them compound to include a tenant id. Making primary keys compound required modifying all the fabricators as well. Another thing to mention is that Citus errors out when you try to update the value of the distribution column. As a consequence, we had to prevent editing distribution table everywhere in the app where it was allowed before. Also, let's not forget how painful it was refactoring all the specs and features since our codebase exists for more than 6 years.

During migration we received tremendous assistance from Citus support and core teams, who were incredibly helpful. We really enjoyed learning from them.

Results

After migration, we succeeded in the most important aspect - our client doesn’t have to worry about how to manage its growth. Citus Cloud provides self-service scaling to deal with an increased load, by means of a web interface that makes it easy to either add new nodes or increase memory and CPU capacity for existing nodes. Naturally, managed database comes with a price. We were aware that the database bill is going to get larger once we migrated to Citus Cloud. Since we have multiple testing environments, we couldn’t afford having DB formations of the same size as production one. As an alternative, we setup multiple development formations that allow only 2 nodes with the maximum capacity of 8GB RAM, 4 vCPUs and 100GB of storage. When that’s not good enough to ensure that our application is rigorously tested before it is deployed, we fork production formation for that purpose only. There is a catch there. You can’t decrease the number of nodes. That leads to having really expensive staging formation that we tend to get rid off as soon as we’re done with testing.

Even though our bill increased by 20% for production DB instance only, our development team is now focused on the application business logic and not maintaining the database, which was our goal from the start. Previously, we worried about performance and running out of memory on our single-node Postgres instance. Even though we’re still really careful, we don’t need to tell our data team and other developers not to use certain “expensive” and “heavy” SQL features. CPU utilization is equally distributed across nodes. Database operations are much faster. We’re now able to execute some heavy aggregate functions on the whole data set which were in the domain of science fiction before we introduced Citus as our DB. This significant improvement in speed and performance is best reflected by the comments we’ve received from the client’s business intelligence and data teams:

- FREAKING AWESOME!

- So good I almost sharded myself. (pun intended)

- For all I grouped about the Citus changes, the database runs stuff REALLY fast now. It’s super nice.

- Awesome job on all the Citus stuff, it goes sooo much faster!

- This is a huge accomplishment!

- This is huge news! Thank you to the whole team for all the hard work on this.

Until the next success story...

P. S. We have no affiliation with Citus Cloud whatsoever, after a long (and moderately painful) migration, now we’re just a happy customer.

Top comments (1)

Great article. I'd love to see follow up on how you addressed big tenants. Because if tenant fits on single node everything is great. But if single node performance is not enough then Citus gets in a way. Distribution pattern is either "put tenant on single shard" or "spread data of every tenant on every shard" (distribution costs overweight parallelization processing when there are too many nodes).